
Web Relation Extraction with Distant Supervision

Isabelle Augenstein

The University of Sheffield

Submitted in partial fulfillment of the requirements for the degree of Doctor of Philosophy

July 2016

Acknowledgements

First and foremost, I would like to thank my PhD supervisors Fabio Ciravegna and Diana Maynard for their support and guidance throughout my PhD and their valuable and timely feedback on this thesis. I would also like to thank the Department of Computer Science, The University of Sheffield, for funding this research in the form of a doctoral studentship. My thanks further go to my thesis examiners Rob Gaizauskas and Ted Briscoe for their constructive criticism.

During my thesis I had a number of additional mentors and collaborators to whom I am indebted. I am grateful to Andreas Vlachos for introducing me to the concept of imitation learning and for his continued enthusiasm and feedback on the topic. My gratitude goes to Kalina Bontcheva for offering me a Research Associate position while I was still working on my PhD and for supporting my research plans. I am thankful to Leon Derczynski for sharing his expertise on named entity recognition and experiment design. I further owe my thanks to Anna Lisa Gentile, Ziqi Zhang and Eva Blomqvist for collaborations during the earlier stages of my PhD. My thanks go to the advisors on my thesis panel, Guy Brown, Lucia Specia and Stuart Wrigley, who challenged my research ideas and helped me form a better research plan. I also want to thank those who encouraged me to pursue a research degree before I moved to Sheffield, specifically Sebastian Rudolph and Sebastian Padó.

My PhD years would not have been the same without colleagues, fellow PhD students and friends in the department. Special thanks go to Rosanna Milner and Suvodeep Mazumdar for cheering me up when things seemed daunting, and to Johann Petrak and Roland Roller for co-representing the German-speaking minority in the department and not letting me forget home.

Last but not least, my deep gratitude goes to my friends and family and my partner Barry for their love, support and encouragement throughout this not always easy process.

Abstract

Being able to find relevant information about prominent entities quickly is the main reason to use a search engine. However, with large quantities of information on the World Wide Web, real-time search over billions of Web pages can waste resources and the end user’s time. One solution is to store the answers to frequently asked general knowledge queries, such as the albums released by a musical artist, in a more accessible format, a knowledge base. Knowledge bases can be created and maintained automatically by using information extraction methods, particularly methods to extract relations between proper names (named entities). A group of approaches for this that has become popular in recent years is distant supervision: these approaches allow relation extractors to be trained without text-bound annotation, instead using known relations from a knowledge base and heuristically aligning them with a large textual corpus from an appropriate domain. This thesis focuses on researching distant supervision for the Web domain. A new setting for creating training and testing data for distant supervision from the Web with entity-specific search queries is introduced and the resulting corpus is published. Methods to recognise noisy training examples, as well as methods to combine extractions based on statistics derived from the background knowledge base, are researched. Using co-reference resolution methods to extract relations from sentences which do not contain a direct mention of the subject of the relation is also investigated. One bottleneck for distant supervision for Web data is identified to be named entity recognition and classification (NERC), since relation extraction methods rely on it for identifying relation arguments. Typically, existing pre-trained tools are used, which fail in diverse genres with non-standard language, such as the Web genre. The thesis explores what can cause NERC methods to fail in diverse genres and quantifies different reasons for NERC failure. Finally, a novel method for NERC for relation extraction is proposed, based on the idea of jointly training the named entity classifier and the relation extractor with imitation learning to reduce the reliance on external NERC tools. This thesis improves the state of the art in distant supervision for knowledge base population, and sheds light on, and proposes solutions for, issues arising in information extraction for domains that are not traditionally studied.
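The heuristic alignment mentioned above can be illustrated with a minimal sketch. It is not the system developed in this thesis: the toy knowledge base, the example sentences, and the names `Triple` and `label_sentences` are assumptions made purely for illustration, and entity matching is reduced to case-insensitive substring search rather than proper named entity recognition.

```python
from typing import List, NamedTuple, Tuple


class Triple(NamedTuple):
    """One known fact from the background knowledge base (illustrative)."""
    subject: str
    relation: str
    obj: str


# Toy knowledge base; a real one (e.g. Freebase) holds many more facts.
KB = [
    Triple("The Beatles", "album", "Let It Be"),
    Triple("The Beatles", "origin", "Liverpool"),
]

# Made-up sentences standing in for a Web corpus.
SENTENCES = [
    "Let It Be is the final studio album by The Beatles.",
    "The Beatles were formed in Liverpool in 1960.",
    "The Beatles played a concert in Liverpool.",
    "The Beatles performed in Hamburg.",
]


def label_sentences(
    sentences: List[str], kb: List[Triple]
) -> List[Tuple[str, str, str, str]]:
    """Label a sentence with a relation whenever it mentions both the
    subject and the object of a known triple (simple substring match)."""
    labelled = []
    for sentence in sentences:
        lowered = sentence.lower()
        for triple in kb:
            if triple.subject.lower() in lowered and triple.obj.lower() in lowered:
                labelled.append(
                    (sentence, triple.subject, triple.relation, triple.obj)
                )
    return labelled


if __name__ == "__main__":
    for sentence, subject, relation, obj in label_sentences(SENTENCES, KB):
        print(f"{relation}({subject}, {obj}) <- {sentence}")
```

In this toy run the third sentence is labelled as an `origin` example even though it does not express that relation, which is the kind of noisy training example the thesis sets out to recognise.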

Contents

List of Tables
List of Figures

1 Introduction
  1.1 Problem Statement
  1.2 Contribution
  1.3 Thesis Structure
  1.4 Previously Published Material

2 Background on Relation Extraction
  2.1 Introduction
  2.2 The Task of Relation Extraction
    2.2.1 Formal Definition
    2.2.2 Relation Extraction Pipeline
    2.2.3 The Role of Knowledge Bases in Relation Extraction
  2.3 Relation Extraction with Minimal Supervision
    2.3.1 Semi-supervised Approaches
    2.3.2 Unsupervised Approaches
    2.3.3 Distant Supervision Approaches
    2.3.4 Summary
  2.4 Distant Supervision for Relation Extraction
    2.4.1 Background Knowledge and Corpora
    2.4.2 Extraction and Evaluation of Distant Supervision Methods
    2.4.3 Distant Supervision Assumption and Heuristic Labelling
    2.4.4 Named Entity Recognition for Distant Supervision
    2.4.5 Applications of Distant Supervision
  2.5 Limitations of Current Approaches
  2.6 Summary

3 Research Aims
  3.1 Methodology Overview and Experiment Design
  3.2 Setting and Evaluation
    3.2.1 Setting
    3.2.2 Evaluation
  3.3 Selecting Training Instances
  3.4 Named Entity Recognition
    3.4.1 Named Entity Recognition of Diverse NEs
    3.4.2 NERC for Distant Supervision
  3.5 Training and Feature Extraction
    3.5.1 Training
    3.5.2 Feature Extraction
  3.6 Selecting Testing Instances and Combining Predictions
    3.6.1 Selecting Testing Instances
    3.6.2 Combining Predictions
  3.7 Summary

4 Distant Supervision for Web Relation Extraction
  4.1 Distantly Supervised Relation Extraction
  4.2 Training Data Selection
    4.2.1 Ambiguity Of Objects
    4.2.2 Ambiguity Across Classes
    4.2.3 Relaxed Setting
    4.2.4 Information Integration
  4.3 System
    4.3.1 Corpus
    4.3.2 NLP Pipeline
    4.3.3 Annotating Sentences
    4.3.4 Training Data Selection
    4.3.5 Models
    4.3.6 Predicting Relations
  4.4 Evaluation
    4.4.1 Manual Evaluation
    4.4.2 Automatic Evaluation
  4.5 Results
  4.6 Discussion
  4.7 Summary

5 Recognising Diverse Named Entities
  5.1 Introduction
  5.2 Related Work
  5.3 Experiments
  5.4 Datasets and Methods
    5.4.1 Datasets
    5.4.2 NER Models and Features
  5.5 Experiments
    5.5.1 RQ1: NER performance in Different Domains
    5.5.2 RQ2: Impact of NE Diversity
    5.5.3 RQ3: Out-Of-Genre NER Performance and Memorisation
    5.5.4 RQ4: Memorisation, Context Diversity and NER performance
  5.6 Conclusion

6 Extracting Relations between Diverse Named Entities
  6.1 Introduction
  6.2 Background on Imitation Learning
  6.3 Approach Overview
  6.4 Named Entity Recognition and Relation Extraction
    6.4.1 Imitation Learning for Relation Extraction
    6.4.2 Relation Candidate Identification
    6.4.3 RE Features
    6.4.4 Supervised NEC Features for RE
  6.5 Evaluation
    6.5.1 Corpus
    6.5.2 Models and Metrics
  6.6 Results and Discussion
    6.6.1 Comparison of Models
    6.6.2 Imitation Learning vs One-Stage
    6.6.3 Comparison of Features
    6.6.4 Overall Comparison
  6.7 Conclusion and Future Work

7 Conclusions
  7.1 Conclusions
    7.1.1 Setting and Evaluation
    7.1.2 Selecting Training Instances
    7.1.3 Named Entity Recognition of Diverse NEs
    7.1.4 NERC for Distant Supervision
    7.1.5 Feature Extraction
    7.1.6 Selecting Testing Instances and Combining Predictions
  7.2 Future Work and Outlook
    7.2.1 Imitation Learning with Deep Learning
    7.2.2 Distantly Supervised Relation Extraction for New Genres
    7.2.3 Joint Learning of Additional Stages
    7.2.4 Joint Extraction from Different Web Content
    7.2.5 Differences in Extraction Performance between Relations
  7.3 Final Words

Bibliography

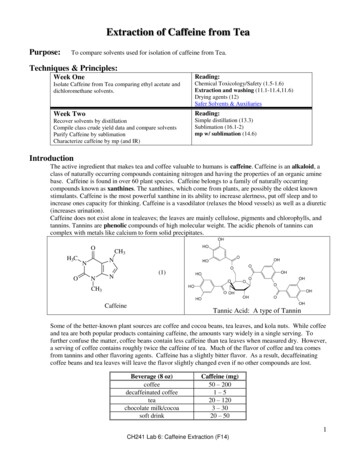

List of Tables

1.1 Information about The Beatles in the knowledge base Freebase
2.1 Comparison of different minimally supervised relation extraction methods
4.1 Freebase classes and properties/relations used
4.2 Distribution of websites per class in the Web corpus sorted by frequency
4.3 Manual evaluation results: Number of true positives (N) and precision (P) for all Freebase classes
4.4 Training data selection results: micro average of precision (P), recall (R) and F1 measure (F1) over all relations, using the Multilab Limit75 integration strategy and different training data selection models. The estimated upper bound for recall is 0.0917.
4.5 Information integration results: micro average of precision (P), recall (R) and F1 measure (F1) over all relations, using the CorefN Stop Unam Stat75 model and different information integration methods.
4.6 Co-reference resolution results: micro average of precision (P), recall (R) and F1 measure (F1) over all relations, using the CorefN Stop Unam Stat75 model and different co-reference resolution methods.
4.7 Best overall results: micro average of precision (P), recall (R), F1 measure (F1) and estimated upper bound for recall over all relations. The best normal method is the Stop Unam Stat75 training data selection strategy and the MultiLab Limit75 integration strategy; the best “relaxed” method uses the same strategies for training data selection and information integration and CorefN for co-reference resolution.
5.1 Corpora genres and number of NEs of different types
5.2 Token/type ratios and normalised token/type ratios of different corpora
5.3 NE/Unique NE ratios and normalised NE/Unique NE ratios of different corpora
5.4 Tag density and normalised tag density, the proportion of tokens with NE tags to all tokens
5.5 P, R and F1 of NERC with different models evaluated on different testing corpora, trained on corpora normalised by size
5.6 P, R and F1 of NERC with different models trained on original corpora
5.7 F1 per NE type with different models trained on original corpora
5.8 Proportion of unseen entities in different test corpora
5.9 P, R and F1 of NERC with different models of unseen and seen NEs
5.10 Out of genre performance: F1 of NERC with different models
5.11 Out of genre performance for unseen vs seen NEs: F1 of NERC with different models
6.1 Results for POS-based candidate identification strategies compared to Stanford NER
6.2 Freebase classes and properties/relations used
6.3 Relation types and corresponding coarse NE types
6.4 Results for best model for each relation, macro average over all relations. Metrics reported are first best precision (P-top), first best recall (R-top), first best F1 (F1-top), all precision (P-all), all recall (R-all), and all average precision (P-avg) (Manning et al., 2008). The number of all results for computing recall is the number of all relation tuples in the KB.
6.5 Results for best model for each relation. Metrics reported are first best precision (P-top), first best recall (R-top), first best F1 (F1-top), all precision (P-all), all recall (R-all), and all average precision (P-avg) (Manning et al., 2008). The number of all results for computing recall is the number of all relation tuples in the KB. The highest P-avg in bold.
6.6 Best feature combination for IL
6.7 Imitation learning results for different NE and relation features, macro average over all relations. Metrics reported are first best precision (P-top), first best recall (R-top), first best F1 (F1-top), all precision (P-all), all recall (R-all), and all average precision (P-avg) (Manning et al., 2008).

List of Figures

2.1 Typical Relation Extraction Pipeline
2.2 LOD Cloud diagram, as of April 2014
2.3 Mintz et al. (2009) Distant Supervision Method Overview
3.1 Overview of Distant Supervision Approach of this Thesis
4.1 Arctic Monkeys biography, illustrating discourse entities
5.1 F1 of different NER methods with respect to corpus size, measured in log of number of NEs
5.2 Percentage of unseen features and F1 with Stanford NER for seen and unseen NEs in different corpora
6.1 Overview of approach


Chapter 1

Introduction

1.1 Problem Statement

In the information age, we are facing an abundance of information on the World Wide Web through different channels – news websites, blogs and social media, to name just a few. One way of making sense of this information is to use search engines, which locate information for a specific user query and sort Web pages by relevance. This still leaves the user to dig through several Web pages and make sense of overlapping, and sometimes even contradictory, pieces of information.

These problems have partly been addressed by information extraction (IE), an area which aims at capturing central conc
