Transcription

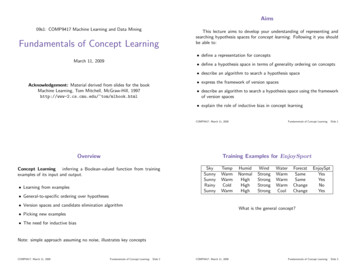

Aims09s1: COMP9417 Machine Learning and Data MiningFundamentals of Concept LearningThis lecture aims to develop your understanding of representing andsearching hypothesis spaces for concept learning. Following it you shouldbe able to: define a representation for conceptsMarch 11, 2009 define a hypothesis space in terms of generality ordering on concepts describe an algorithm to search a hypothesis spaceAcknowledgement: Material derived from slides for the bookMachine Learning, Tom Mitchell, McGraw-Hill, 1997http://www-2.cs.cmu.edu/ tom/mlbook.html express the framework of version spaces describe an algorithm to search a hypothesis space using the frameworkof version spaces explain the role of inductive bias in concept learningCOMP9417: March 11, 2009OverviewFundamentals of Concept Learning: Slide 1Training Examples for EnjoySportConcept Learning inferring a Boolean-valued function from trainingexamples of its input and output. Learning from EnjoySptYesYesNoYes General-to-specific ordering over hypotheses Version spaces and candidate elimination algorithmWhat is the general concept? Picking new examples The need for inductive biasNote: simple approach assuming no noise, illustrates key conceptsCOMP9417: March 11, 2009Fundamentals of Concept Learning: Slide 2COMP9417: March 11, 2009Fundamentals of Concept Learning: Slide 3

Representing HypothesesThe Prototypical Concept Learning TaskMany possible representations . . . Given:Here, h is a conjunction of constraints on attributes.– Instances X: Possible days, each described by the attributesEach constraint can be:AttributeSkyAirTempHumidWindWaterForecast a specific value (e.g., W ater W arm) don’t care (e.g., “W ater ?”) no value allowed (e.g.,“Water ”)ValuesSunny, Cloudy, RainyWarm, ColdNormal, HighStrong, WeakWarm, CoolSame, ChangeFor example,SkyhSunnyAirTemp?COMP9417: March 11, 2009Humid?WindStrongWater?ForecstSameiFundamentals of Concept Learning: Slide 4The Prototypical Concept Learning TaskCOMP9417: March 11, 2009Fundamentals of Concept Learning: Slide 5The inductive learning hypothesis– Target function c: EnjoySport : X {0, 1}– Hypotheses H: Conjunctions of literals. E.g.h?, Cold, High, ?, ?, ?i.– Training examples D: Positive and negative examples of the targetfunctionhx1, c(x1)i, . . . hxm, c(xm)iAny hypothesis found to approximate the target function well overa sufficiently large set of training examples will also approximate thetarget function well over other unobserved examples. Determine: A hypothesis h in H such that h(x) c(x) for all x in D(usually called the target hypothesis).COMP9417: March 11, 2009Fundamentals of Concept Learning: Slide 6COMP9417: March 11, 2009Fundamentals of Concept Learning: Slide 7

Concept Learning as SearchConcept Learning as SearchHypothesis spaceQuestion: What can be learned ?Answer: (only) what is in the hypothesis spaceSky AirTemp . . . Forecast 5 4 4 4 4 4How big is the hypothesis space for EnjoySport ? 5120Instance space(semantically distinct only) 1 (4 3 3 3 3 3) 973Sky AirTemp . . . Forecast 3 2 2 2 2 2 96 any hypothesis with an constraint covers no instances, hence all aresemantically equivalent.The learning problem searching a hypothesis space. How ?COMP9417: March 11, 2009Fundamentals of Concept Learning: Slide 8Instances, Hypotheses, and More-General-ThanInstances XCOMP9417: March 11, 2009Fundamentals of Concept Learning: Slide 9A generality order on hypothesesHypotheses HSpecifich1x1xhh2Definition: Let hj and hk be Boolean-valued functions defined overinstances X. Then hj is more general than or equal to hk (writtenhj g hk ) if and only if3( x X)[(hk (x) 1) (hj (x) 1)]2GeneralIntuitively, hj is more general than or equal to hk if any instancesatisfying hk also satisfies hj .x1 Sunny, Warm, High, Strong, Cool, Same h 1 Sunny, ?, ?, Strong, ?, ? x Sunny, Warm, High, Light, Warm, Same 2h Sunny, ?, ?, ?, ?, ? 2h Sunny, ?, ?, ?, Cool, ? 3hj is (strictly) more general than hk (written hj g hk ) if and only if(hj g hk ) (hk 6 g hj ).hj is more specific than hk when hk is more general than hj .COMP9417: March 11, 2009Fundamentals of Concept Learning: Slide 10COMP9417: March 11, 2009Fundamentals of Concept Learning: Slide 11

The Find-S AlgorithmHypothesis Space Search by Find-SInstances XHypotheses H1. Initialize h to the most specific hypothesis in Hh0-x32. For each positive training instance x For each attribute constraint ai in hIf the constraint ai in h is satisfied by xThen do nothingElse replace ai in h by the next more general constraint that issatisfied by xx 1 2x4 x 2 Sunny Warm High Strong Warm Same , x 3 Rainy Cold High Strong Warm Change , -x Sunny Warm High Strong Cool Change , 4Fundamentals of Concept Learning: Slide 12h 2,3x x 1 Sunny Warm Normal Strong Warm Same , COMP9417: March 11, 2009Specifich1hGeneralh , , , , , 0h1 Sunny Warm Normal Strong Warm Same h2 Sunny Warm ? Strong Warm Same h Sunny Warm ? Strong Warm Same 3h Sunny Warm ? Strong ? ? 4COMP9417: March 11, 2009Find-S - does it work ?4Fundamentals of Concept Learning: Slide 13Complaints about Find-S Can’t tell whether it has learned conceptAssume: a hypothesis hc H describes target function c, and trainingdata is error-free.By definition, hc is consistent with all positive training examples and cannever cover a negative example.For each h generated by Find-S, hc is more general than or equal toh.learned hypothesis may not be the only consistent hypothesis Can’t tell when training data inconsistentcannot handle noisy data Picks a maximally specific h (why?)might require maximally general hSo h can never cover a negative example.COMP9417: March 11, 2009Fundamentals of Concept Learning: Slide 14COMP9417: March 11, 2009Fundamentals of Concept Learning: Slide 15

Version SpacesThe List-Then-Eliminate AlgorithmA hypothesis h is consistent with a set of training examples D oftarget concept c if and only if h(x) c(x) for each training examplehx, c(x)i in D.1. V ersionSpace a list containing every hypothesis in H2. For each training example, hx, c(x)iremove from V ersionSpace any hypothesis h for which h(x) 6 c(x)Consistent(h, D) ( hx, c(x)i D) h(x) c(x)3. Output the list of hypotheses in V ersionSpaceThe version space, V SH,D , with respect to hypothesis space H andtraining examples D, is the subset of hypotheses from H consistentwith all training examples in D.V SH,D {h H Consistent(h, D)}COMP9417: March 11, 2009Fundamentals of Concept Learning: Slide 16COMP9417: March 11, 2009Example Version SpaceFundamentals of Concept Learning: Slide 17Representing Version SpacesThe General boundary, G, of version space V SH,D is the set of itsmaximally general membersS: { Sunny, Warm, ?, Strong, ?, ? }The Specific boundary, S, of version space V SH,D is the set of itsmaximally specific members Sunny, ?, ?, Strong, ?, ? Sunny, Warm, ?, ?, ?, ? ?, Warm, ?, Strong, ?, ? Every member of the version space lies between these boundariesV SH,D {h H ( s S)( g G)(g h s)}G:{ Sunny, ?, ?, ?, ?, ? , ?, Warm, ?, ?, ?, ? }COMP9417: March 11, 2009where x y means x is more general or equal to yFundamentals of Concept Learning: Slide 18COMP9417: March 11, 2009Fundamentals of Concept Learning: Slide 19

The Candidate Elimination AlgorithmThe Candidate Elimination AlgorithmG maximally general hypotheses in H If d is a negative exampleS maximally specific hypotheses in HFor each training example d, do If d is a positive example– Remove from G any hypothesis inconsistent with d– For each hypothesis s in S that is not consistent with d Remove s from S Add to S all minimal generalizations h of s such that1. h is consistent with d, and2. some member of G is more general than h Remove from S any hypothesis that is more general than anotherhypothesis in SCOMP9417: March 11, 2009Fundamentals of Concept Learning: Slide 20– Remove from S any hypothesis inconsistent with d– For each hypothesis g in G that is not consistent with d Remove g from G Add to G all minimal specializations h of g such that1. h is consistent with d, and2. some member of S is more specific than h Remove from G any hypothesis that is less general than anotherhypothesis in GCOMP9417: March 11, 2009Fundamentals of Concept Learning: Slide 21Example TraceExample TraceS :0S : { , , , , , }0{ Ø, Ø, Ø, Ø, Ø, Ø }S 1 : { Sunny, Warm, Normal, Strong, Warm, Same }S 2 : { Sunny, Warm, ?, Strong, Warm, Same }G ,G ,G :0 1 2{ ?, ?, ?, ?, ?, ? }Training examples:G 0:COMP9417: March 11, 20091 . Sunny, Warm, Normal, Strong, Warm, Same , Enjoy Sport Yes{ ?, ?, ?, ?, ?, ? }2 . Sunny, Warm, High, Strong, Warm, Same , Enjoy Sport YesFundamentals of Concept Learning: Slide 22COMP9417: March 11, 2009Fundamentals of Concept Learning: Slide 23

Example TraceExample TraceS 2 , S 3 : { Sunny, Warm, ?, Strong, Warm, Same }S 3 : { Sunny, Warm, ?, Strong, Warm, Same }S 4:G 3:{ Sunny, Warm, ?, Strong, ?, ? }{ Sunny, ?, ?, ?, ?, ? ?, Warm, ?, ?, ?, ? ?, ?, ?, ?, ?, Same }G 4: { Sunny, ?, ?, ?, ?, ? ?, Warm, ?, ?, ?, ? }G 2:{ ?, ?, ?, ?, ?, ? }G 3: { Sunny, ?, ?, ?, ?, ? ?, Warm, ?, ?, ?, ? ?, ?, ?, ?, ?, Same }Training Example:Training Example:3. Rainy, Cold, High, Strong, Warm, Change , EnjoySport No4. Sunny, Warm, High, Strong, Cool, Change , EnjoySport YesCOMP9417: March 11, 2009Fundamentals of Concept Learning: Slide 24Example TraceCOMP9417: March 11, 2009Fundamentals of Concept Learning: Slide 25Which Training Example Is Best To Choose Next ?S 4 : { Sunny, Warm, ?, Strong, ?, ? }S: { Sunny, Warm, ?, Strong, ?, ? } Sunny, ?, ?, Strong, ?, ? Sunny, Warm, ?, ?, ?, ? ?, Warm, ?, Strong, ?, ? Sunny, ?, ?, Strong, ?, ? Sunny, Warm, ?, ?, ?, ? ?, Warm, ?, Strong, ?, ? G : { Sunny, ?, ?, ?, ?, ? , ?, Warm, ?, ?, ?, ? }4G:COMP9417: March 11, 2009Fundamentals of Concept Learning: Slide 26{ Sunny, ?, ?, ?, ?, ? , ?, Warm, ?, ?, ?, ? }COMP9417: March 11, 2009Fundamentals of Concept Learning: Slide 27

Which Training Example To Choose Next ?How Should New Instances Be Classified ?S: { Sunny, Warm, ?, Strong, ?, ? }S: { Sunny, Warm, ?, Strong, ?, ? } Sunny, ?, ?, Strong, ?, ? Sunny, ?, ?, Strong, ?, ? G: Sunny, Warm, ?, ?, ?, ? { Sunny, ?, ?, ?, ?, ? , ?, Warm, ?, ?, ?, ? }hSunny W arm N ormal Light W arm SameiCOMP9417: March 11, 2009 Sunny, Warm, ?, ?, ?, ? ?, Warm, ?, Strong, ?, ? ?, Warm, ?, Strong, ?, ? Fundamentals of Concept Learning: Slide 28How Should New Instances Be Classified ?G:{ Sunny, ?, ?, ?, ?, ? , ?, Warm, ?, ?, ?, ? }h Sunny Warm Normal Strong Cool Change ih Rainy Cold Normal Light Warm Same ih Sunny Warm Normal Light Warm Same iCOMP9417: March 11, 2009Fundamentals of Concept Learning: Slide 29What Justifies this Inductive Leap ?S: { Sunny, Warm, ?, Strong, ?, ? } hSunny W arm N ormal Strong Cool Changei hSunny W arm N ormal Light W arm Samei Sunny, ?, ?, Strong, ?, ? Sunny, Warm, ?, ?, ?, ? ?, Warm, ?, Strong, ?, ? S : hSunny W arm N ormal ? ? ?iG:{ Sunny, ?, ?, ?, ?, ? , ?, Warm, ?, ?, ?, ? }h Sunny Warm Normal Strong Cool Change i (6 /0 )h Rainy Cold Normal Light Warm Same i (0 /6 )h Sunny Warm Normal Light Warm Same i (3 /3 )COMP9417: March 11, 2009Fundamentals of Concept Learning: Slide 30Why believe we can classify this unseen instance ?hSunny W arm N ormal Strong W arm SameiCOMP9417: March 11, 2009Fundamentals of Concept Learning: Slide 31

An UNBiased LearnerInductive BiasConsiderIdea: Choose H that expresses every teachable concept (i.e. H is thepower set of X)0Consider H disjunctions, conjunctions, negations over previous H.E.g. concept learning algorithm L instances X, target concept c training examples Dc {hx, c(x)i}hSunny W arm N ormal ? ? ?i h? ? ? ? ? ChangeiWhat are S, G in this case? let L(xi, Dc) denote the classification assigned to the instance xi by Lafter training on data Dc.Definition: The inductive bias of L is any minimal set of assertions Bsuch that for any target concept c and corresponding training examplesDc( xi X)[(B Dc xi) L(xi, Dc)]where A B means A logically entails BS G COMP9417: March 11, 2009Fundamentals of Concept Learning: Slide 32COMP9417: March 11, 2009Inductive Systems and Equivalent Deductive SystemsFundamentals of Concept Learning: Slide 33Three Learners with Different BiasesInductive systemTraining examplesCandidateEliminationAlgorithmNew instanceUsing HypothesisSpace HClassification ofnew instance, or"don’t know"1. Rote learner: Store examples, Classify x iff it matches previouslyobserved example.2. Version space candidate elimination algorithm3. Find-SEquivalent deductive systemClassification ofnew instance, or"don’t know"Training examplesNew instanceTheorem ProverAssertion " H containsthe target concept"Inductive biasmade explicitCOMP9417: March 11, 2009Fundamentals of Concept Learning: Slide 34COMP9417: March 11, 2009Fundamentals of Concept Learning: Slide 35

Summary Points1. Concept learning as search through H2. General-to-specific ordering over H3. Version space candidate elimination algorithm4. S and G boundaries characterize learner’s uncertainty5. Learner can generate useful queries6. Inductive leaps possible only if learner is biased7. Inductive learners can be modelled by equivalent deductive systems[Suggested reading: Mitchell, Chapter 2]COMP9417: March 11, 2009Fundamentals of Concept Learning: Slide 36

09s1: COMP9417 Machine Learning and Data Mining Fundamentals of Concept Learning March 11, 2009 Acknowledgement: Material derived from slides for the book . COMP9417: March 11, 2009 Fundamentals of Concept Learning: Slide 31. An UNBiased Learner Idea: Choose H that expresses every teachable concept (i.e. H is the power set of X )