
Basic Configuration and Usage
Rod Schultz
email: rod.schultz@bull.com

Outline
- Introduction
- Commands & Running Jobs
- Configuration
- Scheduling
- Accounting
- Advanced Topics
Bull, 2011. SLURM User Group 2011

Simple Linux Utility for Resource Management
Documentation:
- SchedMD.com
- Local install: <install loc>/share/doc/<release>/overview.html
- computing.llnl.gov/linux/slurm/
- Man pages: man_index.html

SLURM Principles
Architecture design:
- One central controller daemon (slurmctld) on a management node.
- A daemon on each compute node (slurmd).
- One central daemon for the accounting database (slurmdbd).
- SLURM can be aware of the network topology and use it in node selection.
- I/O nodes are not managed by SLURM.

SLURM Principles (continued)
Principal concepts:
- A general-purpose plug-in mechanism (provides different behavior for features such as scheduling policies, process tracking, etc.).
- Partitions represent groups of nodes with specific characteristics (similar resources, priority, job limits, access controls, etc.).
- One queue of pending work.
- Job steps, which are sets of tasks within a job.

SLURM Architecture
[Figure: SLURM architecture diagram]

Basic CPU Management Steps
SLURM uses four basic steps to manage CPU resources for a job/step:
1) Selection of nodes
2) Allocation of CPUs from the selected nodes
3) Distribution of tasks to the selected nodes
4) Optional distribution and binding of tasks to allocated CPUs within a node (task affinity)
SLURM provides a rich set of configuration and command-line options to control each step.
- Many options influence more than one step.
- Interactions between options can be complex.
- Users are constrained by the administrator's configuration choices.

Outline: Commands & Running Jobs

User & Admin Commands
- sinfo: display characteristics of partitions.
- squeue: display jobs and their state.
- scancel: cancel a job or set of jobs.
- scontrol: display and change characteristics of jobs, nodes, partitions.
- sstat: show status of running jobs.
- sview: graphical view of the cluster; display and change characteristics of jobs, nodes, partitions.

Examples of info commands
    $ sinfo
    PARTITION ... trek[0-3]
    $ scontrol show node trek0
    NodeName=trek0 Arch=x86_64 CoresPerSocket=4
       CPUAlloc=0 CPUErr=0 CPUTot=16 Features=HyperThread
       Gres=(null)
       NodeAddr=trek0 NodeHostName=trek0
       OS=Linux RealMemory=1 Sockets=2
       State=IDLE ThreadsPerCore=2 TmpDisk=0 Weight=1
       BootTime=2011-06-30T11:04:22 SlurmdStartTime=2011-07-12T06:23:43
       Reason=(null)
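scontrol prints a node record as whitespace-separated Key=Value fields, so its output is easy to post-process in a site script. The sketch below parses such a record (a sample typed in from the values on this slide) and cross-checks CPUTot against Sockets x CoresPerSocket x ThreadsPerCore. It assumes values contain no spaces, which holds for these fields but not for every field (a Reason string, for example, may contain spaces).

```python
# Sample record built from the values shown on the slide.
SAMPLE = """NodeName=trek0 Arch=x86_64 CoresPerSocket=4
   CPUAlloc=0 CPUErr=0 CPUTot=16 Features=HyperThread
   Gres=(null) NodeAddr=trek0 NodeHostName=trek0
   OS=Linux RealMemory=1 Sockets=2
   State=IDLE ThreadsPerCore=2 TmpDisk=0 Weight=1"""


def parse_kv(text):
    """Turn 'Key=Value' tokens into a dict; tokens without '=' are skipped."""
    fields = {}
    for token in text.split():
        key, sep, value = token.partition("=")
        if sep:
            fields[key] = value
    return fields


def expected_cpus(node):
    """Logical CPUs implied by the socket/core/thread layout."""
    return (int(node["Sockets"]) * int(node["CoresPerSocket"])
            * int(node["ThreadsPerCore"]))


if __name__ == "__main__":
    node = parse_kv(SAMPLE)
    print(node["NodeName"], expected_cpus(node), node["CPUTot"])
```

For trek0 both numbers are 16 (2 sockets x 4 cores x 2 threads), consistent with the HyperThread feature.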

User Commands
- srun: allocate resources (number of nodes, tasks, partition, constraints, etc.) and launch a job that executes on each allocated CPU.
- salloc: allocate resources (nodes, tasks, partition, etc.) and either run a command or start a shell. Run srun from that shell to launch job steps (interactive commands within one allocation).
- sbatch: allocate resources (nodes, tasks, partition, etc.) and launch a script containing sruns for a series of steps.
All three share a similar set of command-line options: request the number of nodes, tasks, CPUs, constraints, user info, dependencies, and much more.

Sample srun
    srun -l -p P2 -N2 --ntasks-per-node=2 --exclusive hostname
- -l: prepend the task number to each output line (useful for debugging)
- -p P2: use partition P2
- -N2: use 2 nodes
- --ntasks-per-node=2: launch 2 tasks on each node
- --exclusive: do not share the nodes
- hostname: the command to run
Output:
    0: trek0
    1: trek0
    2: trek1
    3: trek1
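The task-to-node mapping in this output is what a block distribution gives (step 3 of the CPU management steps): consecutive task ids fill one node before moving on. srun's --distribution (-m) option also offers cyclic, which deals tasks round-robin. The toy sketch below models only these two layouts, assuming the same number of tasks on every node; it is not SLURM's implementation.

```python
def distribute(nodes, tasks_per_node, method="block"):
    """Return the node assigned to each task id, like the prefix printed by srun -l."""
    ntasks = len(nodes) * tasks_per_node
    if method == "block":
        # Consecutive task ids fill one node before moving to the next.
        return [nodes[t // tasks_per_node] for t in range(ntasks)]
    if method == "cyclic":
        # Task ids are dealt round-robin across the nodes.
        return [nodes[t % len(nodes)] for t in range(ntasks)]
    raise ValueError(f"unknown distribution: {method}")


if __name__ == "__main__":
    for task, node in enumerate(distribute(["trek0", "trek1"], 2)):
        print(f"{task}: {node}")
```

Run as a script, it prints the same four lines as the srun example; with method="cyclic" tasks 0 and 2 land on trek0 and tasks 1 and 3 on trek1.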

Admin Commands
- sacctmgr: set up accounts; specify limits on users and groups (more on this later).
- sreport: display information from the accounting database on jobs, users, clusters.
- sview: graphical view of the cluster; display and change characteristics of jobs, nodes, partitions (an admin has more privileges).

Sample srun & info commands
    $ srun -p P2 -N2 -n4 sleep 120 &
    $ srun -p P3 sleep 120 &
    $ srun -w trek0 sleep 120 &
    $ sleep 1
    srun: job 108 queued and waiting for resources
    $ sinfo
    PARTITION ... trek3 ... trek[0-2] ...
    $ squeue
    JOBID PARTITION  NAME   USER  ST  TIME  NODES  NODELIST(REASON)
      106        P2  sleep  slurm  R  0:01      2  trek[1-2]
      107        P3  sleep  slurm  R  0:01      1  trek1
      108       all  sleep  slurm PD  0:00      1  (Resources)
      105       all  sleep  slurm  R  0:02      1  trek0

More info commands
    $ scontrol show job 108
    JobId=108 Name=sleep
       UserId=slurm(200) GroupId=slurm(200)
       Priority=4294901733 Account=slurm QOS=normal
       JobState=PENDING Reason=Resources Dependency=(null)
       Requeue=1 Restarts=0 BatchFlag=0 ExitCode=0:0
       RunTime=00:00:00 TimeLimit=UNLIMITED TimeMin=N/A
       SubmitTime=2011-07-12T09:15:39 EligibleTime=2011-07-12T09:15:39
       StartTime=2012-07-11T09:15:38 EndTime=Unknown
       PreemptTime=NO_VAL SuspendTime=None SecsPreSuspend=0
       Partition=all AllocNode:Sid=sulu:8023
       ReqNodeList=trek0 ExcNodeList=(null)
       NodeList=(null)
       NumNodes=1 NumCPUs=1 CPUs/Task=1 ReqS:C:T=*:*:*
       MinCPUsNode=1 MinMemoryNode=0 MinTmpDiskNode=0
       Features=(null) Gres=(null) Reservation=(null)
       Shared=OK Contiguous=0 Licenses=(null) Network=(null)
       Command=/bin/sleep
       WorkDir=/app/slurm/rbs/Scripts

Outline: Configuration

Configuration
- slurm.conf: management policies, scheduling policies, allocation policies, node definitions, partition definitions. Present on the controller and all compute nodes.
- slurmdbd.conf: type of persistent storage (DB), location of storage, admin choices.
- topology.conf: switch hierarchy.
- Others: plugstack.conf, gres.conf, cgroup.conf, ...

Configuration (slurm.conf)
Management policies:
- Location of controllers, backups, logs, state info
- Authentication
- Cryptographic tool
- Checkpoint
- Accounting
- Logging
- Prolog/epilog scripts
- Process tracking

Configuration (slurm.conf)
    # Sample config for SLURM Users Group
    # Management Policies
    ClusterName=rod
    ControlMachine=sulu
    SlurmUser=slurm
    SlurmctldPort=7012
    SlurmdPort=7013
    AuthType=auth/munge
    CryptoType=crypto/munge
    # Location of logs and state info
    StateSaveLocation=/app/slurm/rbs/tmp_slurm/rbs-slurm/tmp
    SlurmdSpoolDir=/app/slurm/rbs/tmp...
    SlurmctldPidFile=/app/slurm/rbs/tmp_slurm/rbs-slurm/var/run/slurmctld.pid
    SlurmdPidFile=/app/slurm/rbs/tmp...
    SlurmctldLogFile=/app/slurm/rbs/tmp_slurm/rbs-slurm/slurmctld.log
    SlurmdLogFile=/app/slurm/rbs/tmp_slurm/rbs-slurm/slurmd.%n.log.%h
    # Accounting
    AccountingStorageType=accounting_storage/slurmdbd
    AccountingStorageEnforce=limits
    AccountingStorageLoc=slurm3_db
    AccountingStoragePort=8513
    AccountingStorageHost=sulu

Configuration (slurm.conf)
Scheduling policies:
- Priority
- Preemption
- Backfill
    # Scheduling Policies
    SchedulerType=sched/builtin
    FastSchedule=1
    PreemptType=preempt/partition_prio
    PreemptMode=GANG,SUSPEND

Configuration (slurm.conf)
Allocation policies:
- Entire nodes or "consumable resources"
- Task affinity (lock a task on a CPU)
- Topology (minimum number of switches)
    # Allocation Policies
    SelectType=select/cons_res
    SelectTypeParameters=CR_Core
    TaskPlugin=task/cgroup

Configuration (slurm.conf)
Node definition:
- Characteristics (sockets, cores, threads, memory, features)
- Network addresses
    # Node Definitions
    NodeName=DEFAULT Sockets=2 CoresPerSocket=4 ThreadsPerCore=1
    NodeName=trek[0-31]
    NodeName=trek[32-63] Sockets=2 CoresPerSocket=4 ThreadsPerCore=2 Feature=HyperThread
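Node names such as trek[0-31] are hostlist expressions, and a NodeName=DEFAULT line supplies values for later lines that do not override them. The sketch below expands simple hostlists and totals the logical CPUs these three lines describe, assuming CPUs = Sockets x CoresPerSocket x ThreadsPerCore when CPUs is not given explicitly. The parser handles only one bracket group with comma-separated numbers or ranges; SLURM's own hostlist syntax (as expanded by "scontrol show hostnames") supports more.

```python
import re


def expand(hostlist):
    """Expand 'prefix[a-b,c]' into individual host names (one bracket group only)."""
    m = re.fullmatch(r"([^\[\]]+)\[([^\]]+)\]", hostlist)
    if not m:
        return [hostlist]  # a plain host name
    prefix, body = m.groups()
    hosts = []
    for part in body.split(","):
        lo, _, hi = part.partition("-")
        width = len(lo)  # keep zero padding, e.g. node[01-10]
        for i in range(int(lo), int(hi or lo) + 1):
            hosts.append(f"{prefix}{i:0{width}d}")
    return hosts


# Mirrors the node definitions above.
DEFAULT = {"Sockets": 2, "CoresPerSocket": 4, "ThreadsPerCore": 1}
NODE_LINES = [
    ("trek[0-31]", {}),
    ("trek[32-63]", {"Sockets": 2, "CoresPerSocket": 4, "ThreadsPerCore": 2}),
]


def cpus_per_node():
    result = {}
    for hosts, overrides in NODE_LINES:
        spec = {**DEFAULT, **overrides}  # DEFAULT values apply unless overridden
        cpus = spec["Sockets"] * spec["CoresPerSocket"] * spec["ThreadsPerCore"]
        for host in expand(hosts):
            result[host] = cpus
    return result
```

With these definitions trek0 to trek31 have 8 CPUs each and the hyperthreaded trek32 to trek63 have 16, for 768 CPUs in total.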

Configuration (slurm.conf)
Partition definition:
- Set of nodes
- Sharing
- Priority/preemption
    # Partition Definitions
    PartitionName=all Nodes=trek[0-63] Shared=NO Default=YES
    PartitionName=P2 Nodes=trek[0-63] Shared=NO Priority=2 PreemptMode=CANCEL
    PartitionName=P3 Nodes=trek[0-63] Shared=NO Priority=3 PreemptMode=REQUEUE
    PartitionName=P4 Nodes=trek[0-63] Priority=1000 AllowGroups=vip
    PartitionName=MxThrd Nodes=trek[32-63] Shared=NO

Why use multiple partitions?
- Provide different capabilities for different groups of users.
- Provide multiple queues for priority (with different preemption behavior).
- Provide subsets of the cluster.
- Group machines with the same features (hyperthreading).
- Provide sharing.

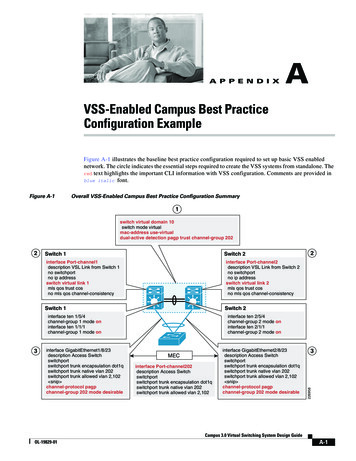

Network Topology Aware Placement
topology/tree: the SLURM topology-aware plugin, providing best-fit selection of resources.
In a fat-tree hierarchical topology, bisection bandwidth constraints need to be taken into account.
    # slurm.conf file
    TopologyPlugin=topology/tree

Configuration (topology.conf)
The topology.conf file, which describes the network topology, needs to exist on all compute nodes.
    # topology.conf file
    SwitchName=Top Switches=IS1,IS2
    SwitchName=IS1 Switches=TS1,TS2
    SwitchName=IS2 Switches=TS3,TS4
    SwitchName=TS1 Nodes=knmi[1-18]
    SwitchName=TS2 Nodes=knmi[19-37]
    SwitchName=TS3 Nodes=knmi[38-56]
    SwitchName=TS4 Nodes=knmi[57-75]
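The point of describing the switch tree is that an allocation whose nodes all sit under one low-level switch avoids crossing the upper levels of the fat tree. The sketch below encodes the topology.conf sample and finds the lowest switch whose subtree contains a given set of knmi nodes. It illustrates only this containment test; it is not the selection algorithm used by topology/tree.

```python
# Switch tree from the topology.conf sample.
SWITCHES = {
    "Top": ["IS1", "IS2"],
    "IS1": ["TS1", "TS2"],
    "IS2": ["TS3", "TS4"],
}
LEAF_NODES = {
    "TS1": range(1, 19),   # knmi[1-18]
    "TS2": range(19, 38),  # knmi[19-37]
    "TS3": range(38, 57),  # knmi[38-56]
    "TS4": range(57, 76),  # knmi[57-75]
}


def nodes_under(switch):
    """All node names reachable below a switch."""
    if switch in LEAF_NODES:
        return {f"knmi{i}" for i in LEAF_NODES[switch]}
    result = set()
    for child in SWITCHES[switch]:
        result |= nodes_under(child)
    return result


def lowest_common_switch(nodes, root="Top"):
    """Descend from the root while a single child switch still covers all nodes."""
    wanted = set(nodes)
    if not wanted <= nodes_under(root):
        raise ValueError("nodes outside the described topology")
    current = root
    while current in SWITCHES:
        covering = [c for c in SWITCHES[current] if wanted <= nodes_under(c)]
        if not covering:
            break
        current = covering[0]
    return current
```

For example, knmi1 and knmi5 share leaf switch TS1, knmi1 and knmi20 meet at IS1, and knmi1 and knmi40 only meet at Top.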

Outline: Scheduling

Scheduling Policies
Scheduler type:
- sched/builtin: the default, FIFO.
- sched/backfill: schedules jobs as long as they do not delay a waiting job that is higher in the queue.
  - Increases utilization of the cluster.
  - Requires a declared maximum execution time for jobs: --time on srun, DefaultTime or MaxTime on the partition, or MaxWall from the accounting association.

Backfill Theory
Holes can be filled as long as the order of the previous jobs is not changed.
[Figure: FIFO scheduler vs. backfill scheduler]

Backfill
Jobs (4 nodes, Node0 to Node3):
    C1  -N4               sleep 10
    C2  -N1 --time=4      sleep 60
    C3  -N4 --time=1      sleep 10
    C4  -N2 --time=2      sleep 30
    C5  -N3 --time=1      sleep 10
    C6  -N1 --time=1      sleep 15
With backfill:
- C1 terminates; C2 starts.
- C3 is pending: not enough nodes.
- C4 backfills: its limit is less than C2's.
- C5 is pending: it cannot backfill, as there are not enough nodes.
- C6 backfills: its limit is less than C2's.
- C4 terminates, then C6 terminates; C5 now backfills.
- C2 terminates; C3 waits for C5 to terminate.
- C5's termination is still before C2's expected termination.
[Figure: per-node timelines (Node0 to Node3) for the FIFO scheduler (time axis 0:10 to 2:00) and for the backfill scheduler (time axis 0:10 to 1:20)]
Note: it is important to have accurate estimated times.
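The decision made right after C2 starts can be reproduced with a small model. The sketch below assumes interchangeable whole nodes and that the scheduler knows only time limits, not real run times. It first starts jobs in strict FIFO order; once the head job is blocked, it computes the "shadow time" at which enough nodes free up for that job, and lets a later job start only if it fits in the free nodes now and its limit ends by the shadow time. This is a simplified rule chosen to match the slide, not SLURM's sched/backfill implementation.

```python
from dataclasses import dataclass


@dataclass
class Job:
    name: str
    nodes: int
    limit: int  # time limit, in the same units as `now`


def backfill(now, total_nodes, running, queue):
    """running: list of (nodes, expected_end). Returns names of jobs started now."""
    free = total_nodes - sum(n for n, _ in running)
    started = []
    queue = list(queue)
    # Start jobs in strict FIFO order while they fit.
    while queue and queue[0].nodes <= free:
        job = queue.pop(0)
        free -= job.nodes
        running = running + [(job.nodes, now + job.limit)]
        started.append(job.name)
    if not queue:
        return started
    head = queue[0]
    # Shadow time: earliest expected end after which the head job has enough nodes.
    avail, shadow = free, None
    for nodes, end in sorted(running, key=lambda r: r[1]):
        avail += nodes
        if avail >= head.nodes:
            shadow = end
            break
    if shadow is None:
        return started  # the head job needs more nodes than the cluster has
    for job in queue[1:]:
        if job.nodes <= free and now + job.limit <= shadow:
            free -= job.nodes
            started.append(job.name)
    return started


if __name__ == "__main__":
    # C2 (1 node, --time=4) has just started; C3 to C6 are queued.
    queue = [Job("C3", 4, 1), Job("C4", 2, 2), Job("C5", 3, 1), Job("C6", 1, 1)]
    print(backfill(0, 4, [(1, 4)], queue))
```

Run on the slide's values it starts C4 and C6 while C3 and C5 wait, as in the walkthrough. A job whose limit runs past the shadow time is held back, which is why accurate estimates matter.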

Preemption Policies
Preempt types:
- preempt/none
- preempt/partition_prio: priority defined in the partition definition.
- preempt/qos: quality of service defined in the accounting database.
Example of partition_prio:
    PartitionName=all Nodes=trek[0-63] Shared=NO Default=YES
    PartitionName=P2 Nodes=trek[0-63] Shared=NO Priority=2 PreemptMode=CANCEL
    PartitionName=P3 Nodes=trek[0-63] Shared=NO Priority=3 PreemptMode=REQUEUE
    PartitionName=P4 Nodes=trek[0-63] Priority=1000 AllowGroups=vip
Define QOS:
    sacctmgr add qos meremortal
    sacctmgr add qos vip Preempt=meremortal PreemptMode=cancel
Include QOS in the association definition:
    sacctmgr add user Rod DefaultAccount=math qos=vip,normal DefaultQOS=normal

Preemption Policies
Preempt modes:
- Off
- Cancel: the preempted job is cancelled.
- Checkpoint: the preempted job is checkpointed if possible, or cancelled.
- Gang: enables time slicing of jobs on the same resource.
- Requeue: the job is requeued and restarted at the beginning (only for sbatch).
- Suspend: the job is suspended until the higher priority job ends (requires Gang).
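With partition_prio, what happens to a running job is decided by the partition it runs in. The sketch below assumes that a pending job may preempt a running job only when its partition's Priority is strictly higher, that the action is the PreemptMode of the preempted job's partition, and that partitions without their own PreemptMode fall back to the cluster-wide setting (SUSPEND, from PreemptMode=GANG,SUSPEND in the sample slurm.conf). Partition values mirror the all/P2/P3/P4 example; gang time slicing and QOS-based preemption are ignored.

```python
GLOBAL_MODE = "SUSPEND"  # from PreemptMode=GANG,SUSPEND in the sample slurm.conf

# Mirrors the partition_prio example; Priority defaults to 1 when not set.
PARTITIONS = {
    "all": {"priority": 1},
    "P2": {"priority": 2, "mode": "CANCEL"},
    "P3": {"priority": 3, "mode": "REQUEUE"},
    "P4": {"priority": 1000},
}


def preempt_action(pending_part, running_part):
    """Action applied to a job running in running_part, or None if it is not preempted."""
    if PARTITIONS[pending_part]["priority"] <= PARTITIONS[running_part]["priority"]:
        return None
    # The preempted job's partition decides what happens to it.
    return PARTITIONS[running_part].get("mode", GLOBAL_MODE)
```

Under these assumptions a P3 job cancels a P2 job, a P4 job requeues a P3 job, and a P2 job cannot touch a P3 job.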

Preemption Example
Naming conventions:
- Partition name: 1st character is the preempt mode (Requeue, Cancel, Suspend, None); 2nd character is the priority.
- Job name: 1st character is 'B', 2nd is the submit order, 3rd is the priority, 4th is the preempt mode of the partition.
Partitions:
    PartitionName=R1 Nodes=trek[0-2] Priority=1 PreemptMode=REQUEUE
    PartitionName=C1 Nodes=trek[0-2] Priority=1 PreemptMode=CANCEL
    PartitionName=S1 Nodes=trek[0-2] Priority=1 PreemptMode=SUSPEND
    PartitionName=S2 Nodes=trek[0-2] Priority=2 PreemptMode=SUSPEND
    PartitionName=R3 Nodes=trek[0-2] Priority=3 PreemptMode=REQUEUE
    PartitionName=N4 Nodes=trek[0-2] Priority=4
Jobs:
    B11R  --time=02:00 -p R1  echodate.bash 30
    B21C  --time=02:00 -p C1  sleep 85
    B31S  --time=01:00 -p S1  sleep 10
    B41S  --time=01:00 -p S1  sleep 30
    B52S  --time=01:00 -p S2  sleep 20
    B63R  --time=02:00 -p R3  echodate.bash 60
    B74N  -p N4               sleep 5
- B31S is queued for resources.
- B41S backfills.
[Figure: timeline of Node0 to Node2, with rows for suspended jobs and queued jobs]

Allocation Policies
Select types:
- linear: entire nodes are allocated, regardless of the number of tasks (CPUs) required.
- cons_res: CPUs and memory as a consumable resource. Individual resources on a node may be allocated (not shared) to different jobs. Options treat CPUs, cores, sockets, and memory as individual resources that can be independently allocated. Useful for nodes with several sockets and several cores per socket.
- bluegene: for three-dimensional BlueGene systems.
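The practical difference between the two general-purpose select types shows up in how many CPUs a job ties up. The sketch below assumes one CPU per task, identical nodes, and ignores memory and hyper-threads; cons_res is modeled with core-level allocation (CR_Core).

```python
import math


def cpus_allocated(ntasks, cores_per_node, select_type):
    """CPUs tied up by a job of ntasks single-CPU tasks."""
    if select_type == "linear":
        # Whole nodes: round the node count up and charge every core on them.
        nodes = math.ceil(ntasks / cores_per_node)
        return nodes * cores_per_node
    if select_type == "cons_res":
        # Individual cores are consumable: charge exactly what was requested.
        return ntasks
    raise ValueError(f"unknown select type: {select_type}")
```

For a hypothetical 10-task job on 8-core nodes, linear ties up 16 CPUs on 2 nodes, leaving 6 idle cores that no other job can use; cons_res allocates 10 cores, and the remaining 6 stay available.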

Allocation (Task Assignment) Policies
The task plugin controls the assignment (binding) of tasks to CPUs:
- None: all tasks on a node can use all CPUs on the node.
- Cgroup: the cgroup subsystem is used to contain the job to its allocated CPUs. The Portable Hardware Locality (hwloc) library is used to bind tasks to CPUs.
- Affinity: bind tasks with one of the following:
  - Cpusets: use the cpuset subsystem to contain the CPUs assigned to tasks.
  - Sched: use sched_setaffinity to bind tasks to CPUs.
  In addition, a binding unit may be specified: Sockets, Cores, Threads, or None.
  Both are specified on the TaskPluginParam statement.
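Binding a task comes down to giving it a CPU mask: one bit per CPU it may run on. The sketch below builds such masks for a hypothetical layout in which each task gets a contiguous block of cpus_per_task CPU ids starting at 0. Real layouts depend on the node's socket and core numbering and on the binding unit chosen, so treat the output as illustrative only.

```python
def cpu_masks(ntasks, cpus_per_task):
    """Hex CPU mask per task, assuming contiguous CPU ids starting at 0."""
    masks = []
    for task in range(ntasks):
        first = task * cpus_per_task
        mask = 0
        for cpu in range(first, first + cpus_per_task):
            mask |= 1 << cpu  # set the bit for each CPU the task may use
        masks.append(hex(mask))
    return masks
```

Two tasks of four CPUs each get masks 0xf (CPUs 0 to 3) and 0xf0 (CPUs 4 to 7); four single-CPU tasks get 0x1, 0x2, 0x4 and 0x8.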

More on Partitions
The Shared option controls the ability of the partition to execute more than one job on a resource (node, socket, core):
- EXCLUSIVE: allocates entire nodes (overrides the cons_res ability to allocate cores and sockets to multiple jobs).
- NO: no sharing of any resource.
- YES: all resources can be shared, unless the user specifies --exclusive on srun, salloc, or sbatch.
- FORCE: all resources can be shared and the user cannot override this. (Generally only recommended for BlueGene, although FORCE:1 means that users cannot use --exclusive, but resources allocated to a job will not be shared.)
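The four Shared settings reduce to one question: may this job's allocated resources be shared with other jobs? The sketch below encodes the rules as stated on this slide, with user_exclusive standing for --exclusive on srun, salloc or sbatch. Treating any FORCE count other than 1 as "sharing enabled" is an assumption of the sketch.

```python
def may_share(shared, user_exclusive=False):
    """Apply the partition Shared setting as described on the slide."""
    setting, _, count = shared.upper().partition(":")
    if setting in ("EXCLUSIVE", "NO"):
        return False
    if setting == "YES":
        return not user_exclusive  # the user may opt out with --exclusive
    if setting == "FORCE":
        # The user cannot override FORCE; FORCE:1 allocates without sharing.
        return count != "1"
    raise ValueError(f"unknown Shared value: {shared}")
```

For example, may_share("YES", user_exclusive=True) is False, while may_share("FORCE", user_exclusive=True) stays True because FORCE ignores the user's request.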

Outline: Accounting

Accounting
SLURM accounting records resource usage by users and enables controlling their access to resources (limit enforcement).
Limit enforcement mechanisms:
- Fairshare
- Quality of Service (QOS)
- Time and count limits for users and groups
More on this later.
For full functionality, the accounting daemon (slurmdbd) must be running and using the MySQL database.
See the accounting.html page for more detail.

Accounting (continued)
Configuration options associated with resource accounting:
- AccountingStorageType: controls how information is recorded (MySQL with SlurmDBD is best).
- AccountingStorageEnforce: enables limit enforcement.
- JobAcctGatherType: controls the mechanism used to gather data (OS dependent).
- JobCompType: controls how job completion information is recorded.
Commands:
- sacctmgr: creates accounts and modifies account settings.
- sacct: reports resource usage for running or terminated jobs.
- sstat: reports on running jobs, including imbalance between tasks.
- sreport: generates reports based on jobs executed in a time interval.

sacctmgr
Used to define clusters, accounts, users, etc. in the database.
Account options:
- Cluster(s) to which the account has access.
- Name, Description, and Organization.
- Parent: the name of the account of which this account is a child.
User options:
- Account(s) to which the user belongs.
- AdminLevel: accounting privileges (for sacctmgr): None, Operator, Admin.
- Cluster: limits the clusters on which the user can be added to accounts.
- DefaultAccount: the account for the user if an account is not specified on srun.
- QOS: the qualities of service the user can use.
- Other limits and much more.

Accounting Associations
An association is a combination of a cluster, a user, and an account.
- An accounting database may be used by multiple clusters.
- An account is a SLURM entity such as 'science' or 'math'.
- A user is a Linux user such as 'Rod' or 'Nancy'.
- Use the --account option of srun/salloc/sbatch to specify the account.
With associations, a user may have different privileges on different clusters.
A user may also be able to use different accounts, with different privileges.
Limit enforcement controls apply to associations.

Accounting Association Example
Add a cluster to the database (matches ClusterName in slurm.conf):
    sacctmgr add cluster snowflake
Add an account:
    sacctmgr add account math Cluster=snowflake Description="math students" Organization="Bull"
Let a user use the account, and place limits on him:
    sacctmgr add user Rod DefaultAccount=math qos=vip,normal DefaultQOS=normal

Accounting: Limits Enforcement
If the user has a limit set, SLURM uses it; if not, SLURM refers to the account associated with the job. If the account does not have the limit set, SLURM refers to the cluster's limits. If the cluster does not have the limit set, no limit is enforced.
Some (but not all) limits are:
- Fairshare: integer value used for determining priority. Essentially this is the amount of claim this association and its children have to the above system.
- GrpCPUMins: a hard limit of CPU minutes to be used by jobs running from this association and its children. If this limit is reached, all jobs running in this group will be killed, and no new jobs will be allowed to run. (Related: GrpCPUs, GrpJobs, GrpNodes, GrpSubmitJobs, GrpWall.)
- MaxCPUMinsPerJob: a limit of CPU minutes to be used by jobs running from this association. If this limit is reached, the job will be killed. (Related: MaxCPUsPerJob, MaxJobs, MaxNodesPerJob, MaxSubmitJobs, MaxWallDurationPerJob.)
- QOS (quality of service): comma-separated list of QOSs this association is able to run.
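The lookup order (user, then account, then cluster, otherwise unlimited) can be written as a short fallback chain. The sketch below uses hypothetical dictionaries in place of the accounting database and collapses any chain of parent accounts into a single account level.

```python
def effective_limit(name, user, account, cluster):
    """First level that sets the limit wins; None means no limit is enforced."""
    for level in (user, account, cluster):
        if level.get(name) is not None:
            return level[name]
    return None


if __name__ == "__main__":
    # Hypothetical association data.
    user = {"MaxJobs": 5}
    account = {"MaxJobs": 20, "GrpCPUs": 40}
    cluster = {"MaxWall": 120}
    for limit in ("MaxJobs", "GrpCPUs", "MaxWall", "GrpNodes"):
        print(limit, effective_limit(limit, user, account, cluster))
```

Here MaxJobs comes from the user (5), GrpCPUs from the account (40), MaxWall from the cluster (120), and GrpNodes is set nowhere, so it is not enforced.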

Multifactor Priority Plugin
By default, SLURM assigns job priority on a First In, First Out (FIFO) basis (PriorityType=priority/basic in the slurm.conf file).
SLURM now has a multi-factor job priority plugin (PriorityType=priority/multifactor).
This plugin provides a very versatile facility for ordering the queue of jobs waiting to be scheduled.
It requires the accounting database as previously described.

Multifactor Factors
- Age: the length of time a job has been waiting in the queue, eligible to be scheduled.
- Fair-share: the difference between the portion of the computing resource that has been promised and the amount of resources that has been consumed.
- Job size: the number of nodes a job is allocated.
- Partition: a factor associated with each node partition.
- QOS: a factor associated with each Quality Of Service.
Additionally, a weight can be assigned to each of the above factors. This provides the ability to enact a policy that blends any combination of the above factors in any proportion desired. For example, a site could configure fair-share to be the dominant factor (say 70%), set the job size and age factors to each contribute 15%, and set the partition and QOS influences to zero.
See priority_multifactor.html and qos.html for more detail.
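The blend described above is a weighted sum: each factor is a number between 0.0 and 1.0 and is multiplied by an integer weight (the PriorityWeightAge, PriorityWeightFairshare, PriorityWeightJobSize, PriorityWeightPartition and PriorityWeightQOS settings). The sketch below uses weights matching the 70/15/15 example; the factor values in the usage line are made up for illustration.

```python
# Weights matching the 70% / 15% / 15% example; partition and QOS disabled.
WEIGHTS = {
    "fairshare": 7000,
    "jobsize": 1500,
    "age": 1500,
    "partition": 0,
    "qos": 0,
}


def job_priority(factors, weights=WEIGHTS):
    """Weighted sum of normalised factors; missing factors count as 0.0."""
    for name, value in factors.items():
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} factor must be in [0, 1], got {value}")
    return int(sum(weights[name] * factors.get(name, 0.0) for name in weights))
```

A job with a fair-share factor of 0.5, a job-size factor of 0.25 and an age factor of 1.0 scores 3500 + 375 + 1500 = 5375.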

Partitions and Multifactor (with QOS)
Partitions and multifactor priority are used in SLURM to group node and job characteristics.
The use of partitions and multifactor priority entities in SLURM is orthogonal:
- Partitions group resource characteristics.
- The QOS factor groups limits and priorities.
Example:
- Partition 1: 32 cores and high memory
- Partition 2: 32 cores and low memory
- Partition 3: 32 cores with multiple threads
- QOS 1: high priority, higher limits
- QOS 2: low priority, lower limits

Partitions and QOS Configuration
Partition configuration, in the slurm.conf file:
    # Partition Definitions
    PartitionName=all Nodes=trek[0-95] Shared=NO Default=YES
    PartitionName=HiMem Nodes=trek[0-31] Shared=NO
    PartitionName=LoMem Nodes=trek[32-63] Shared=NO
    PartitionName=MxThrd Nodes=trek[64-95] Shared=NO
QOS configuration, in the database:
    sacctmgr add qos name=lowprio priority=10 PreemptMode=Cancel GrpCPUs=10 MaxWall=60 MaxJobs=20
    sacctmgr add qos name=hiprio priority=100 Preempt=lowprio GrpCPUs=40 MaxWall=120 MaxJobs=50
    sacctmgr list qos
          Name   Priority    Preempt PreemptMode  GrpCPUs  MaxJobs     MaxWall
    ---------- ---------- ---------- ----------- -------- -------- -----------

Running Jobs
To get resource characteristics, select a partition. For example, to get nodes with hyperthreads:
    srun -p MxThrd
To get priority, use the appropriate QOS. For example, to get high priority:
    srun --qos=hiprio --account=vip

Outline: Advanced Topics

Site Functionality for SLURM
Site optional scripts: prolog (before an event) and epilog (after an event):
- Before and after a job on the controller (slurmctld)
- Before and after a job on a compute node
- Before and after each task on a compute node
- Before and after srun (on the client machine)
SPANK plugin:
- C code in a shared library.
- No need to modify the SLURM source.
- Called at specific life cycle events.
- API to get job characteristics.
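The per-task prolog (TaskProlog in slurm.conf) can also shape the task's environment: slurmd reads the script's standard output, where a line "export NAME=value" sets a variable for the task and "print ..." writes a line to the task's output. The sketch below is a minimal Python task prolog under that assumption. The SCRATCH_DIR variable and the /tmp/job_<id> path are hypothetical examples, and SLURM_JOB_ID is read from the environment the task prolog runs in.

```python
#!/usr/bin/env python3
# Minimal TaskProlog sketch. SCRATCH_DIR and the /tmp path are hypothetical.
import os


def prolog_lines(env):
    """Lines slurmd would read from this script's standard output."""
    job_id = env.get("SLURM_JOB_ID", "unknown")
    return [
        f"export SCRATCH_DIR=/tmp/job_{job_id}",  # set a variable for the task
        f"print task prolog ran for job {job_id}",  # echoed to the task's output
    ]


if __name__ == "__main__":
    for line in prolog_lines(os.environ):
        print(line)
```

Keeping the logic in a function that takes the environment as a dict makes the script testable outside SLURM; the same pattern works in a shell script.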
