Transcription

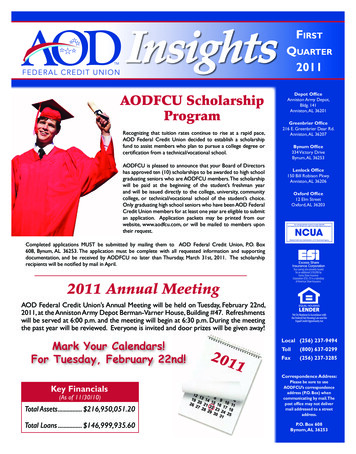

AOD-Net: All-in-One Dehazing NetworkBoyi Li1 , Xiulian Peng2 , Zhangyang Wang3 , Jizheng Xu2 , Dan Feng11Wuhan National Laboratory for Optoelectronics, Huazhong University of Science and Technology2Microsoft Research, Beijing, China3Department of Computer Science and Engineering, Texas A&M .cnAbstractAverage PSNR on Various State-of-the-art Methods22This paper proposes an image dehazing model built witha convolutional neural network (CNN), called All-in-OneDehazing Network (AOD-Net). It is designed based on are-formulated atmospheric scattering model. Instead of estimating the transmission matrix and the atmospheric lightseparately as most previous models did, AOD-Net directlygenerates the clean image through a light-weight CNN.Such a novel end-to-end design makes it easy to embedAOD-Net into other deep models, e.g., Faster R-CNN, forimproving high-level tasks on hazy images. Experimentalresults on both synthesized and natural hazy image datasetsdemonstrate our superior performance than the state-ofthe-art in terms of PSNR, SSIM and the subjective visualquality. Furthermore, when concatenating AOD-Net withFaster R-CNN, we witness a large improvement of the object detection performance on hazy 321AVERAGE 414ATMBCCRFVRNLDDCPMSCNNMethod(a) Comparison on PSNRAverage SSIM on Various State-of-the-art Methods0.950.92720.90.8879AVERAGE od(b) Comparison on SSIM1. IntroductionThe existence of haze dramatically degrades the visibility of outdoor images captured in the inclement weather andaffects many high-level computer vision tasks such as detection and recognition. These all make single-image hazeremoval a highly desirable technique. Despite the challengeof estimating many physical parameters from a single image, many recent works have made significant progress towards this goal [1, 3, 17]. Apart from estimating a global atmospheric light magnitude, the key to achieve haze removalis to recover a transmission matrix, towards which variousstatistical assumptions [8] and sophisticated models [3, 17]have been adopted. However, the estimation is not alwaysaccurate, and some common pre-processing such as guildfiltering or softmatting will further distort the hazy imagegeneration process [8], causing sub-optimal restoration performance. Moreover, the non-joint estimation of two criti Thework was done at Microsoft Research Asia.Figure 1. The PSNR and SSIM comparisons on dehazing 800 synthetic images from Middlebury stereo database. The results certifythat AOD-Net presents more faithful restorations of clean images.cal parameters, transmission matrix and atmospheric light,may further amplify the error when applied together.In this paper, we propose an efficient end-to-end dehazing convolutional neural network (CNN) model, called Allin-One Dehazing Network (AOD-Net). While some previous haze removal models discussed the “end-to-end” concept [3], we argue the major novelty of AOD-Net as thefirst to optimize the end-to-end pipeline from hazy imagesto clean images, rather than an intermediate parameter estimation step. AOD-Net is designed based on a re-formulatedatmospheric scattering model. It is trained on synthesizedhazy images, and tested on both synthetic and real naturalimages. Experiments demonstrate the superiority of AODNet over several state-of-the-art methods, in terms of not14770

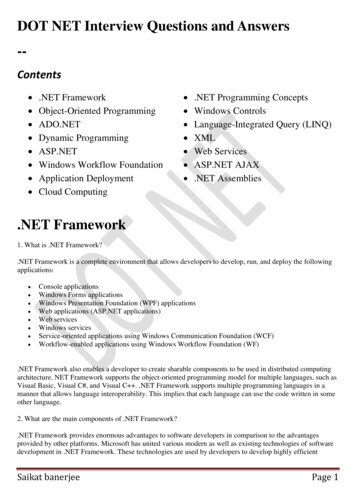

(a) Inputs(b) DCP [8](c) DehazeNet [3](d) MSCNN [17](e) AOD-NetFigure 2. Visual quality comparison between AOD-Net and several state-of-the-art methods on a natural hazy image. Please amplify figuresto view the detail differences in bounded regions.only PSNR and SSIM (see Figure 1), but also visual quality (see Figure 2). As a lightweight model, AOD-Net hasachieved a fast processing speed, costing as low as 0.026second to process one 480 640 image with a single GPU.Furthermore, we are the first to examine how a haze removalmodel could be utilized to assist the subsequent high-levelvision task. Benefiting from the end-to-end formulation,AOD-Net is easily embedded with Faster R-CNN [16] andimproves the object detection performance on hazy imageswith a large margin.2. Related WorkPhysical Model: The atmospheric scattering model hasbeen the classical description for the hazy image generationprocess [11, 13, 14].I (x) J (x) t (x) A (1 t (x)) ,(1)where I (x) is observed hazy image, J (x) is the scene radiance (“clean image”) to be recovered. There are two critical parameters: A denotes the global atmospheric light, andt (x) is the transmission matrix defined as:t (x) e βd(x) ,(2)where β is the scattering coefficient of the atmosphere, andd (x) is the distance between the object and the camera.Traditional Methods: [23] coped with haze removal bymaximizing the local contrast. [6] proposed a physicallygrounded method by estimating the albedo of the scene.[8, 24] discovered the effective dark channel prior (DCP) tomore reliably calculate the transmission matrix. [12] furtherenforced the boundary constraint and contextual regularization for sharper restored images. An accelerated methodfor the automatic recovery of the atmospheric light was presented in [22]. [32] developed a color attenuation prior andcreated a linear model of scene depth for the hazy image,and then learned the model parameters in a supervised way.Deep Learning Methods: CNNs have witnessed prevailing success in computer vision tasks, and are recentlyintroduced to haze removal. [17] exploited a multi-scaleCNN (MSCNN), that first generated a coarse-scale transmission matrix and later refined it. [3] proposed a trainable end-to-end model for medium transmission estimation,called DehazeNet. It takes a hazy image as input, and outputs its transmission matrix. Combined with the global atmospheric light estimated by empirical rules, a haze-freeimage is recovered via the atmospheric scattering model.All above methods share the same belief, that in orderto recover a clean scene from haze, it is the key to estimate an accurate medium transmission map. The atmospheric light is calculated separately, and the clean imageis recovered based on (1). Albeit being intuitive and physically grounded, such a procedure does not directly measure or minimize the reconstruction distortions. As a result, it will undoubtedly give rise to the sub-optimal imagerestoration quality. The errors in each separate estimationstep will accumulate and potentially amplify each other. Incontrast, AOD-Net is built with our different belief, that thephysical model could be formulated in a “more end-to-end”fashion, with all its parameters estimated in one unifiedmodel. AOD-Net will output the dehazed clean image directly, without any intermediate step to estimate parameters.Different from [3] that performs end-to-end learning fromthe hazy image to the transmission matrix, the fully endto-end formulation of AOD-Net bridges the ultimate targetgap, between the hazy image and the clean image.3. Modeling and ExtensionIn this section, the proposed AOD-Net is explained.We first introduce the transformed atmospheric scatteringmodel, based on which the AOD-Net is designed. The structure of AOD-Net is then described in detail. Further, wediscuss the extension of the proposed model to high-leveltasks on hazy images by embedding it directly with otherexisting deep models, thanks to its end-to-end design.3.1. Transformed FormulaBy the atmospheric scattering model in (1), the clean image is obtained byJ (x) 2477111I (x) A A.t (x)t (x)(3)

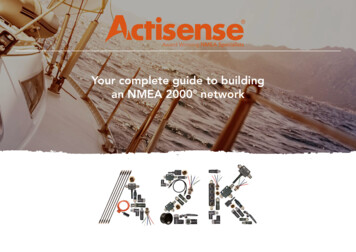

As explained in Section 2, previous methods such as [17]and [3] estimate t (x) and A separately and get the cleanimage by (3). They do not directly minimize the reconstruction errors on J (x), but rather optimize the quality oft (x). Such an indirect optimization causes a sub-optimalsolution. Our core idea is to unify the two parameters t (x)and A into one formula, i.e. K (x) in (4), and directly minimize the reconstruction errors in the image pixel domain.To this end, the formula in (3) is re-expressed asJ (x) K (x) I (x) K (x) b, whereK (x) 1t(x) (I(x) A) (A b)I (x) 1.(4)1and A are integrated into the newIn that way, both t(x)variable K (x). b is the constant bias with the default value1. Since K (x) is dependent on I (x), we then aim to buildan input-adaptive deep model, and train the model by minimizing the reconstruction errors between its output J (x)and the groundtruth clean image.A naive baseline that might be argued for is to learn t (x)(or 1/t (x)) from end to end by minimizing the reconstruction errors, with A estimated with the traditional method[8]. That requires no re-formulation of (3). To justify whyjointly learning t (x) and A in one is important, we compare the two solutions in experiments (see Section 4 forthe synthetic settings). As observed in Figure 3, the baseline tends to overestimate A and cause overexposure visualeffects. AOD-Net clearly produces more realistic lightingconditions and structural details, since the joint estimation1and A enables them to mutually refine each other. Inof t(x)addition, the inaccurate estimate of other hyperparameters(e.g., the gamma correction), can also be compromised andcompensated in the all-in-one formulation.3.2. Network DesignThe proposed AOD-Net is composed of two parts (SeeFigure 4): a K-estimation module that uses five convolutional layers to estimate K (x), followed by a clean imagegeneration module that consists of an element-wise multiplication layer and several element-wise addition layers togenerate the recovery image via calculating (4).The K-estimation module is the critical component ofAOD-Net, being responsible for estimating the depth andrelative haze level. As depicted in Figure 4 (b), we use fiveconvolutional layers, and form multi-scale features by fusing varied size filters. [3] used parallel convolutions withvarying filter sizes. [17] concatenated the coarse-scale network features with an intermediate layer of the fine-scalenetwork. Inspired by them, the “concat1” layer of AODNet concatenates features from the layers “conv1” and“conv2”. Similarly, “concat2” concatenates those from“conv2” and “conv3”; “concat3” concatenates those from(a) Inputs(b) AOD-Net using (4) (c) Baseline using (3)Figure 3. Visual comparison between AOD-Net using (4), and thenaive baseline using (3). The images are selected from the Challenging Real Photos: see more setting details in Section 4.“conv1”, “conv2”, “conv3”, and “conv4”. Such a multiscale design captures features at different scales, and theintermediate connections also compensate for the information loss during convolutions. As a simple baseline to justify concatenation, we tried on TestSetA (to be introducedin Section 4) using the structure ”“conv1” “conv2” “conv3” “conv4” “conv5”, with no concatenation.The resulting average PSNR is 19.0674 dB and SSIM is0.7707, both lower than current results in Table 1 (noticethe large SSIM drop in particular). Notably, each convolutional layer of AOD-Net uses only three filters. As a result,our model is much light-weight, compared to existing deepmethods such as [3] and [17].3.2.1Necessity of K-estimation moduleMost deep learning approaches for image restoration andenhancement have fully embraced end-to-end modeling:training a model to directly regress the clean image from thecorrupted image. Examples include image denoising [29],deblurring [20], and super resolution [28]. In comparison,there has been no end-to-end deep model for dehazing sofar1 . While that might appear weird at the first glance, oneneeds to realize that haze essentially brings in non-uniform,signal-dependent noise: the scene attenuation of a surfacecaused by haze is correlated with the physical distance between the surface and the camera (i.e., the pixel depth).That is different from common image degradation modelsthat assume signal-independent noise, in which case all signals go through the same parameterized degradation process. Their restoration models could thus be easily modeled1 [3] performed end-to-end learning from the hazy image to the transmission matrix, which is completely different from what we define here.34772

K-estimation module Clean image generation moduleAOD-Net ModelHazy imagesClean images(a) The diagram of AOD-Net3H azyImages3Conv1 63Concat1Conv3 3Conv2 6Concat23Conv4 12Concat33Conv5(K) (b) K-estimation module of AOD-NetFigure 4. The network diagram and configuration of AOD-Net.with one static mapping function. The same is not directlyapplicable to dehazing: the degradation process varies bysignals, and the restoration model has to be input-adaptiveas well.3.3. Incorporation with High-Level TasksHigh-level computer vision tasks, such as object detection and recognition, concern visual semantics and have received tremendous attentions [16, 30]. However, the performance of those algorithms is largely jeopardized by various degradations. The conventional approach first resorts toa separate image restoration step as pre-processing, beforefeeding into the target high-level task. Recently, [27, 4] validated that a joint optimization of restoration and recognition steps would boost the performance over the traditionaltwo-stage approach.Previous works [31] have examined the effects and remedies for common degradations such as noise, blur and lowresolution. However, to our best knowledge, there has beenno similar work to quantitatively study how the existenceof haze would affect high-level vision tasks, and how toalleviate its impact. Whereas current dehazing models focused merely on the restoration quality, we take the first steptowards this important mission. Owing to its unique endto-end design, AOD-Net can be seamlessly embedded withother deep models, to constitute one pipeline that performshigh-level tasks on hazy images, with an implicit dehazingprocess. Such a pipeline can be jointly optimized from endto end for improved performance, which is infeasible if replacing AOD-Net with other deep hehazing models [3, 17].4. Evaluations on Dehazing4.1. Datasets and ImplementationWe create synthesized hazy images by (1), using theground-truth images with depth meta-data from the indoorNYU2 Depth Database [21]. We set different atmosphericlights A, by choosing each channel uniformly between[0.6, 1.0], and select β {0.4, 0.6, 0.8, 1.0, 1.2, 1.4, 1.6}.For the NYU2 database, we take 27, 256 images as the training set and 3,170 as the non-overlapping TestSet A. We alsotake the 800 full-size synthetic images from the Middlebury stereo database [19, 18, 9] as the TestSet B. Besides,we also collect a set of natural hazy images to evaluate ourmodel generalization performance.During the training process, the weights are initialized44773

Table 1. Average PSNR and SSIM results on TestSet A.MetricsPSNRSSIMATM [22]14.14750.7141BCCR [12]15.76060.7711FVR [25]16.03620.7452NLD [1, 2]16.76530.7356DCP [8]18.53850.8337MSCNN [17]19.11160.8295DehazeNet [3]18.96130.7753CAP [32]19.63640.8374AOD-Net19.69540.8478DehazeNet [3]21.30460.8756CAP [32]21.45440.8879AOD-Net21.54120.9272Table 2. Average PSNR and SSIM results TestSet B.MetricsPSNRSSIMATM [22]14.33640.7130BCCR [12]17.02050.8003FVR [25]16.84880.8556NLD [1, 2]17.44790.7463Figure 5. Visual results on dehazing synthetic images. From leftto right columns: hazy images, DehazeNet results [3], MSCNNresults [17], AOD-Net results, and the groundtruth images. Pleaseamplify to view the detail differences in bounded regions.using Gaussian random variables. We utilize ReLU neuronas we found it more effective than the BReLU neuron proposed by [3], in our specific setting. The momentum andthe decay parameter are set to 0.9 and 0.0001, respectively.We adopt the simple Mean Square Error (MSE) loss function, and are pleased to find that it boosts not only PSNR,but also SSIM as well as visual quality.The AOD-Net model takes around 10 training epochsto converge, and usually performs sufficiently well after 10epochs. It is also found helpful to clip the gradient to constrain the norm within [ 0.1, 0.1]. The technique has beenpopular in stabilizing the recurrent network training [15].4.2. Quantitative Results on Synthetic ImagesWe compared the proposed model with several stateof-the-art dehazing methods: Fast Visibility Restoration(FVR) [25], Dark-Channel Prior (DCP) [8], BoundaryConstrained Context Regularization (BCCR) [12], Automatic Atmospheric Light Recovery (ATM) [22], Color Attenuation Prior (CAP) [32], Non-local Image Dehazing(NLD) [1], DehazeNet [3], and MSCNN [17]. Amongprevious experiments, few quantitative results about therestoration quality were reported, due to the absence ofhaze-free ground-truth when testing on real hazy images.DCP [8]18.97810.8584MSCNN [17]20.96530.8589Our synthesized hazy images are accompanied with groundtruth images, enabling us to measure the PSNR and SSIMand to examine if the dehazed results remain faithful.Tables 1 and 2 display the average PSNR and SSIM results on TestSets A and B, respectively. Since AOD-Net isoptimized from end to end under the MSE loss, it is notsurprising to see its higher PSNR performance than others.More appealing is the observation that AOD-Net obtainseven greater SSIM advantages over all competitors, eventhough SSIM is not directly referred to as an optimizationcriterion. As SSIM measures beyond pixel-wise errors andis well-known to more faithfully reflect the human perception, we become curious through which part of AOD-Net,such a consistent SSIM improvement is achieved.We conduct the following investigation: each image inTestSet B is decomposed into the sum of a mean image anda residual image. The former is constructed by all pixel locations taking the same mean value (the average 3-channelvector across the image). It is easily justified that the MSEbetween the two images equals the MSE between theirmean images added with that between two residual images.The mean image roughly corresponds to the global illumination and is related to A, while the residual concerns morethe local structural variations and contrasts, etc. We observethat AOD-Net produces the similar residual MSE (averagedon TestSet B) to a few competitive methods such as DehazeNet and CAP. However, the MSEs of the mean parts ofAOD-Net results are drastically lower than DehazeNet andCAP, as shown in Table 3. Implied by that, AOD-Net couldbe more capable to correctly recover A (global illumination), thanks to our joint parameter estimation scheme underan end-to-end reconstruction loss. Since the human eyes arecertainly more sensitive to large changes in global illumination than to any local distortion, it is no wonder why thevisual results of AOD-Net are also evidently better, whilesome other results often look unrealistically bright.The above advantage also manifests in the illumination(l) term of computing SSIM [26], and partially interpretsour strong SSIM results. The other major source of SSIMgains seems to be from the contrast (c) term. As exam54774

(a) Inputs(b) FVR(c) DCP(d) BCCR(e) ATM(f) CAP(g) NLD [1] (h)DehazeNet [3](i)MSCNN [17](j) AOD-NetFigure 6. Challenging natural images results compared with the state-of-art methods.MetricsMSEATM [22]4794.40BCCR [12]917.20FVR [25]849.23NLD [1]2130.60DCP [8]664.30MSCNN [17]329.97DehazeNet [3]424.90CAP [32]356.68AOD-Net260.12Table 3. Average MSE between the mean images of the dehazed image and the groundtruth image, on TestSet B.ples, we randomly select five images from TestSetB, onwhich the mean of contrast values of AOD-Net resultsis 0.9989, significantly higher than ATM (0.7281), BCCR(0.9574), FVR (0.9630), NLD(0.9250), DCP (0.9457) ,MSCNN (0.9697), DehazeNet (0.9076), and CAP (0.9760).4.3. Qualitative Visual ResultsSynthetic Images Figure 5 shows the dehazing resultson synthetic images from TestSet A. We observe that AODNet results generally possess sharper contours and richercolors, and are more visually faithful to the ground-truth.Challenging Natural Images Although trained withsynthesized indoor images, AOD-Net is found to generalizewell on outdoor images. We evaluate it against the stateof-the-art methods on a few natural image examples, thatwere found to be highly challenging to dehaze [8, 7, 3].The challenges lie the dominance of highly cluttered objects, fine textures, or illumination variations. As revealedby Figure 6, FVR suffers from overly-enhanced visual artifacts. DCP, BCCR, ATM, NLD, and MSCNN produce un-realistic color tones on one or several images, such as DCP,BCCR and ATM results on the second row (notice the skycolor), or BCCR, NLD and MSCNN results on the fourthrow (notice the stone color). CAP, DehazeNet, and AODNet have the most competitive visual results among all, withplausible details. Yet by a closer look, we still observe thatCAP sometimes blurs image textures, and DehazeNet darkens some regions. AOD-Net recovers richer and more saturated colors (compare among third- and fourth-row results),while suppressing most artifacts.White Scenery Natural Images White scenes or objecthas always been a major obstacle for haze removal. Manyeffective priors such as [8] fail on white objects since for objects of similar color to the atmospheric light, the transmission value is close to zero. DehazeNet [3] and MSCNN [17]both rely on carefully-chosen filtering operations for postprocessing, which improve their robustness to white objectsbut inevitably sacrifice more visual details.Although AOD-Net does no explicitly consider the handling of white scenes, our joint optimization scheme seems64775

(a) Input(b) DCP [8](c) CAP [32](d) DehazeNet [3](e) MSCNN [17](f) AOD-NetFigure 7. White scenery image dehazing results. Please amplify figures to view the detail differences in bounded regions.Table 4. Comparison of average model running time (in seconds).Image SizeATM [22]DCP [8]FVR [25]NLD [1, 2]BCCR [12]MSCNN [17]CAP [32]DehazeNet (Matlab) [3]DehazeNet (Pycaffe)2 [3]AOD-Net480 bPycaffePycaffeFigure 8. Examples for anti-halation enhancement. Left column:real photos with halation. Right column: results by AOD-Net.to contribute stronger robustness here. Figure 7 displaystwo hazy images of white scenes and their dehazing results.It is easy to notice the intolerable artifacts of DCP results,especially in the sky region of the first row. The problemis alleviated, but persists in CAP, DehazeNet and MSCNNresults, while the AOD-Net results are almost artifact-free.Moreover, CAP seems to blur the textural details on whiteobjects, while MSCNN creates the opposite artifact of overenhancement: see the cat head region for a comparison.AOD-Net is able to remove the haze, without introducingfake color tones or distorted object contours.Image Anti-Halation We try AOD-Net on another image enhancement task, called image anti-halation, withoutre-training. Halation is a spreading of light beyond properboundaries, forming an undesirable fog effect in the brightareas of photos. Being related to dehazing but followingdifferent physical models, the anti-halation results by AODNet are decent too: see Figure 8 for a few examples.to run, on the same machine (Intel(R) Core(TM) i7-6700CPU@3.40GHz and 16GB memory), without GPU acceleration. The per-image average running time of all models areshown in Table 4. Despite other slower Matlab implementations, it is fair to compare DehazeNet (Pycaffe version)and ours. The results illustrate the promising efficiency ofAOD-Net, costing only 1/10 time of DehazeNet per image.5. Improving High-level Tasks with Dehazing4.4. Running Time ComparisonWe study the problem of object detection and recognition [16, 30] in the presence of haze, as an example forhow high-level vision tasks can interact with dehazing. Wechoose the Faster R-CNN model [16] as a strong baseline3 ,and test on both synthetic and natural hazy images. Wethen concatenate the AOD-Net model with the Faster RCNN model, to be jointly optimized as a unified pipeline.General conclusions drawn from our experiments are: asthe haze turns heavier, the object detection becomes less reliable. In all haze conditions (light, medium or heavy), ourjointly tuned model constantly improves detection, surpassing both naive Faster R-CNN and non-joint approaches.The light-weight structure of AOD-Net leads to faster dehazing. We select 50 images from TestSet A for all models3 We use the VGG16 model pre-trained based on 20 classes of PascalVOC 2007 dataset provided by the Faster R-CNN authors.74776

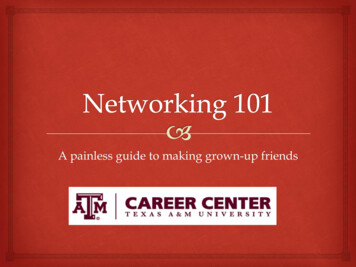

SettingmAPHeavy F0.5155Heavy A0.5794Medium F0.6046Medium A0.6401Light F0.6410Light A0.6701Goundtruth0.6990Table 5. mAP comparison on all seven settings: “Heavy F” and “Heavy A” are short for “Heavy Faster R-CNN” and “Heavy AOD-Net followed by Faster R-CNN”, respectively; similarly for the other two groups.(a) Faster R-CNN(b) DehazeNet Faster R-CNN(c) AOD-Net Faster R-CNN(d) AOD-Faster R-CNNFigure 9. Comparison of detection and recognition results on natural hazy images, using a threshold of 0.6.Quantitative Results on Pascal-VOC 2007 with Synthetic Haze We create three synthetic sets from the PascalVOC 2007 dataset (referred to as Groundtruth) [5]: HeavyHaze (A 1, β 0.1), Medium Haze (A 1, β 0.06),and Light Haze (A 1, β 0.04). The depth mapsare predicted via the method described in [10]. We calculate the mean average precision (mAP) on the sets (including Groundtruth), using both Faster R-CNN and AOD-Netconcatenated with Faster R-CNN (without joint tuning), ascompared in Table 5. The heavy haze degrades mAP fornearly 0.18. By appending AOD-Net, the mAP improvesby 4.54% for object detection in the light haze condition,5.88% in the medium haze, and 12.39% in the heavy haze.Furthermore, we jointly tune the end-to-end pipeline ofAOD-Net concatenated with Faster R-CNN in the HeavyHaze condition, with a learning rate of 0.0001. It furtherboosts the mAP from 0.5794 to 0.6819, showing the impressive power of joint tuning.Visualized Results Figure 9 displays a visual comparison of object detection results on web-source natural hazyimages. Four approaches are compared: (1) naive FasterRCNN: directly apply pre-trained Faster-RCNN to the hazyimage; (2) DehazeNet Faster R-CNN: DehazeNet concatenated with Faster R-CNN, without any joint tuning; (3)AOD-Net Faster R-CNN: AOD-Net concatenated withFaster R-CNN without joint tuning; (4) JAOD-Faster RCNN: jointly tuning the pipeline of AOD-Net and FasterR-CNN from end to end. We observe that haze can causemissing detections, inaccurate localizations and unconfidentcategory recognitions for Faster R-CNN. DehazeNet tendsto darken images, which often impacts detection negatively(see the first row, column (b)). While AOD-Net FasterR-CNN already show visible advantages over naive FasterRCNN, the performance is further dramatically improved inJAOD-Faster R-CNN results.Note that JAOD-Faster R-CNN benefits from joint optimization in two-folds: the AOD-Net itself jointly estimatesall parameters in one, and the entire pipeline tunes the lowlevel (dehazing) and high-level (detection and recognition)tasks from end to end.6. ConclusionThe paper proposes AOD-Net, an all-in-one pipelinethat direct reconstructs haze-free images via an end-to-endCNN. We compare AOD-Net with a variety of state-of-theart methods, on both synthetic and natural haze images, using both objective (PSNR, SSIM) and subjective measurements. Extensive experimental results confirm the superiority, robustness, and efficiency of AOD-Net. Moreover,we present the first-of-its-kind study, on how AOD-Net canboost the object detection and recognition performance onnatural hazy images, by joint tuning the pipeline.AcknowledgementBoyi Li and Dan Feng’s research are in part supported bythe National High Technology Research and DevelopmentProgram (863 Program) No.2015AA015301, and the NSFCgrant No. 61502191.84777

References[1] D. Berman, S. Avidan, et al. Non-local image dehazing. InProceedings of the IEEE conference on computer vision andpattern recognition, pages 1674–1682, 2016.[2] D. Berman, T. Treibitz, and S. Avidan. Air-light estimationusing haze-lines. In Computational Photography (ICCP),2017 IEEE International Conference on, pages 1–9, 2017.[3] B. Cai, X. Xu, K. Jia, C. Qing, and D. Tao. Dehazenet:An end-to-end system for single image haze removal. IEEETransactions on Image Processing, 25(11), 2016.[4] S. Diamond, V. Sitzmann, S. Boyd, G. Wetzstein, andF. Heide.Dirty pixels: Optimizing image classification architectures for raw sensor data. arXiv preprintarXiv:1701.06487, 2017.[5] M. Everingham, L. Van Gool, C. K. I. Williams, J. Winn,and A. Zisserman. The PASCAL Visual Object ClassesChallenge 2007 (VOC2007) Results. 7/workshop/index.html.[6] R. Fattal. Single image dehazing. ACM transactions ongraphics (TOG), 27(3):72, 2008.[7] R. Fattal. Dehazing using color-lines. ACM Transactions onGraphics (TOG), 34(1):13, 2014.[8] K. He, J. Sun, and X. Tang. Single image haze removal usingdark channel prior. IEEE transactions on pattern analysisand machine intelligence, 33(12):2341–2353, 2011.[9] H. Hirschmuller and D. Scharstein. Evaluation of cost functions for stereo matching. In Computer Vision and PatternRecognition, 2007. CVPR’07. IEEE Conference on, pages1–8. IEEE, 2007.[10] F. Liu, C. Shen, G. Lin, and I. Reid. Learning depth from single monocular images using deep convolutional neural fields.IEEE transactions on pattern analysis and machine intelligence, 38(10):2024–2039, 2016.[11] E. J. McCartney. Optics of the atmosphere: scattering bymolecules and particles. New York, John Wiley and Sons,Inc., 197

AOD-Net: All-in-One Dehazing Network Boyi Li1 , Xiulian Peng 2, Zhangyang Wang3, Jizheng Xu2, Dan Feng1 1Wuhan National Laboratory for Optoelectronics, Huazhong University of Science and Technology 2Microsoft Research, Beijing, China 3Department of Computer Science and Engineering, Texas A&M University mu.edu,jzxu@microsoft.com,dfeng@hust.edu.cn