Transcription

A Data-Driven CHC SolverHe ZhuStephen MagillSuresh JagannathanGalois, Inc., USAhezhu@galois.comGalois, Inc., USAstephen@galois.comPurdue University, USAsuresh@cs.purdue.eduAbstractcorrespond to unknown inductive loop invariants and inductive pre- and post-conditions of recursive functions. Ifadequate inductive invariants are given to interpret eachunknown predicate, the problem of checking whether a program satisfies its specification can be efficiently reduced todetermining the logical validity of the VCs, and is decidable with modern automated decision procedures for somefragments of first-order logic. However inductive invariantinference is still very challenging, and is even more so in thepresence of multiple nested loops and arbitrary recursion;these challenges pose a major impediment towards the useof fully automated verification methods.Constrained Horn clauses (CHC) are a very popular VCformalism for specifying and verifying safety properties ofprograms written in a variety of programming languagesand styles [6, 13, 15, 18]. Given a set of CHC constraints,with unknown predicate symbols, the goal is to produce aninterpretation to solve each unknown predicate symbol asa formula such that each constraint is logically valid. Manypowerful CHC solvers have been proposed to automaticallyand efficiently solve CHC constraints [1, 13, 16, 17, 19, 23ś25,32]; these systems typically rely on sophisticated first-ordermethods such as interpolation [22] and property-directedreachability [17].Consider the program1 shown in Fig. 1. The CHCs for thisprogram, generated by the SeaHorn C program verifier [15],can be expressed as:We present a data-driven technique to solve ConstrainedHorn Clauses (CHCs) that encode verification conditions ofprograms containing unconstrained loops and recursions.Our CHC solver neither constrains the search space fromwhich a predicate’s components are inferred (e.g., by constraining the number of variables or the values of coefficientsused to specify an invariant), nor fixes the shape of the predicate itself (e.g., by bounding the number and kind of logical connectives). Instead, our approach is based on a novelmachine learning-inspired tool chain that synthesizes CHCsolutions in terms of arbitrary Boolean combinations of unrestricted atomic predicates. A CEGAR-based verification loopinside the solver progressively samples representative positive and negative data from recursive CHCs, which is fed tothe machine learning tool chain. Our solver is implementedas an LLVM pass in the SeaHorn verification framework andhas been used to successfully verify a large number of nontrivial and challenging C programs from the literature andwell-known benchmark suites (e.g., SV-COMP).CCS Concepts · Software and its engineering Formal software verification; Automated static analysis;Keywords Constrained Horn Clauses (CHCs), InvariantInference, Program Verification, Data-Driven AnalysisACM Reference Format:He Zhu, Stephen Magill, and Suresh Jagannathan. 2018. A DataDriven CHC Solver. In Proceedings of 39th ACM SIGPLAN Conferenceon Programming Language Design and Implementation (PLDI’18).ACM, New York, NY, USA, 15 pages. https://doi.org/10.1145/3192366.3192416x 1 y 0 p(x, y)′′′′(1)′′p(x, y) x x y y y 1 p(x , y )′p(x, y) x x y y y 1 x yx 1 y 0 x y1 Introduction(2)′(3)(4)Here p(x, y) encodes the unknown loop invariant over theprogram variables x and y. Constraint (1) ensures that p(x, y)is established when the loop head is initially reached; constraint (2) guarantees that it is inductive with the loop body.Constraint (3) uses the loop invariant to show that the execution of the loop body does not violate the post condition. Constraint (4) corresponds to the trivial case when theloop body is never executed. Notably, a state-of-the-art CHCsolver, Spacer [19], times-out when attempting to infer aninterpretation of p because it diverges and fails to generalizean inductive invariant from the iteratively generated counterexamples produced by the solver, despite the simplicityof the formulas.Automated program verification typically encodes programcontrol- and data-flow using a number of first-order verification conditions (VCs) with unknown predicates, whichPermission to make digital or hard copies of all or part of this work forpersonal or classroom use is granted without fee provided that copies are notmade or distributed for profit or commercial advantage and that copies bearthis notice and the full citation on the first page. Copyrights for componentsof this work owned by others than ACM must be honored. Abstracting withcredit is permitted. To copy otherwise, or republish, to post on servers or toredistribute to lists, requires prior specific permission and/or a fee. Requestpermissions from permissions@acm.org.PLDI’18, June 18ś22, 2018, Philadelphia, PA, USA 2018 Association for Computing Machinery.ACM ISBN 978-1-4503-5698-5/18/06. . . 15.00https://doi.org/10.1145/3192366.31924161 In707our examples, we use to denote a nondeterministically chosen value.

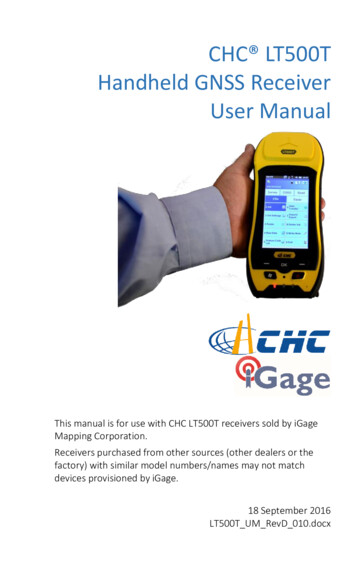

PLDI’18, June 18ś22, 2018, Philadelphia, PA, USAHe Zhu, Stephen Magill, and Suresh Jagannathanlearn invariants as arbitrary Boolean combinations of features learned via linear classification, the shape of whosecomponents are not pre-determined.main(){int x,y;x 1; y 0;while(*){x x y;y ;}assert(x y);}Generality vs. Safety. Machine learning algorithms striveto avoid over-fitting concepts to training data by relaxing therequirement that a proposed concept must be fully consistentwith given samples. Verification tasks, on the other hand,are centered on safety - their primary goal is to ensure thata hypothesis is correct, even if this comes at the expense ofgenerality. In other words, verification expects any classifierto be perfect, even if that entails over-fitting, while machinelearning algorithms expect classifiers to generalize, evenif that compromises precision. This tension between thedesire for producing general hypotheses, central to machinelearning, and the need to ensure these hypotheses producea perfect classification, central to verification, is a primaryobstacle to seamlessly adapting existing learning frameworksto solve general verification problems. To illustrate, considerthe example given below:Figure 1. Data-driven Invariant InferenceIn recent years, data-driven techniques have gained popularity to complement logic-based VC solvers. These approaches are constrained to find a solution that is consistentwith a finite number of concrete samples agnostic of the underlying logics used to encode VCs [10, 11, 29, 35, 37, 38, 40].Using these methods, one can reason about p(x, y) by sampling x and y, as depicted on the right-side of Fig. 1. Intuitively, the data labeled with are positive samples on theloop head that validate the assertion; data labeled with are negative loop head samples that cause the program totrigger an assertion violation. An interpretation of p(x, y)can be learned as a classifier of and data with off-theshelf machine learning algorithms. For example, supposewe constrain the interpretation of p(x, y) that we sampleto be drawn from the Box abstract interpretation domain(bounds on at most one variable x d where d is a constant). We can consider this domain as playing the role offeatures in machine learning parlance. In this case, standardlearning algorithms incorporated within data-driven verification frameworks [29, 35, 37] can be used to interpret theunknown loop invariant as x 1 y 0. Plugging thisinvariant as the concrete interpretation of p(x, y) in CHCconstraints (1)-(4) proves the program safe.Despite the promising prospect of learning invariantspurely from data as informally captured by this example,there are several key limitations of existing frameworks thatmake realizing this promise challenging; these challengesare addressed by the techniques described below.Here, it might not be possible to draw a linear classifierthat can separate all positive samples ({1, 3, 4, 5}) from allnegative samples ({0, 2}), or even all positives ({1, 3, 4, 5})from a single negative ({2}).Rather than strictly requiring a data-driven verificationframework to always produce a perfect classifier as a learnedfeature [38], we instead propose a technique that accepts precision loss. Our approach exploits a linear classification algorithm that may return a classifier which misclassifies eitherpositive data or negative data or both in order to facilitategeneralization. To recover the loss of precision, we develop anew data-driven algorithm, which we call LinearArbitrarythat applies a linear classification algorithm iteratively onunseparated (misclassified) samples, allowing us to learn afamily of linear classifiers that separate all samples correctlyin the aggregate; such classifiers are conceptually logicallyconnected with appropriate boolean operators and .Combating Over- and Under-fitting. Data-driven analysis techniques that suffer from over- and under-fitting arelikely to produce large, often unintuitive, invariants. Wheninput samples are not easily separable because of outliersand corner cases, inference may yield invariants with complex structure that arise because of the need to compensatefor the inability to discover a clean and general separator.Our approach is based on the simple observation that eachlearned linear classifier defines a hyperplane that separates asubset of positive samples from a subset of negative samples.Thus, each learned feature has a different information gainin splitting the current collection of samples, given in termsof Shannon Entropy [34], a well-established information theoretic concept, which can measure how homogeneous theLearning Features. A notable challenge in applying existing learning techniques to program verification is determining the set of atomic predicates (as features in machine learning) that should comprise an invariant. In some cases, simply choosing a suitably constrained domain (e.g., the Boxdomain as used in Fig. 1) is sufficient. However, for manyprogram verification tasks, richer domains (e.g., Polyhedra)are required; the feature space of these domains have highdimensionality, requiring techniques that go beyond exhaustive enumeration of feature candidates [29]. Additionally,realistic programs will have invariants that are expressed interms of arbitrary Boolean combinations of these features.We propose a new data-driven framework that allows us to708

A Data-Driven CHC SolverPLDI’18, June 18ś22, 2018, Philadelphia, PA, USAalgorithms. We show how to extract such predicateseven when samples are not linearly separable. We propose a layered machine learning toolchain tocombat over- and under-fitting of linear classification.We use decision tree learning on top of linear classification to improve the quality of learned hypotheses. We propose counter-example guided CHC samplingto verify CHC-modeled programs with complex loopand recursive structure.We have implemented this framework inside the SeaHornverification framework [15] as an LLVM pass. Evaluationresults over a large number of challenging and nontrivial Cprograms collected from the literature and SV-COMP benchmarks [39] demonstrate that our solver is both efficient inproving that a CHC system is satisfiable (a program is safe)and effective in generating concrete counterexamples whena CHC system is unsatisfiable (a program is unsafe).Figure 2. The overall framework of our approach.samples are after choosing a specific feature as a classifier.This observation motivates the idea of using standard Decision Trees to further generalize LinearArbitrary. Decisiontrees allow us to select high-quality features while dropping unnecessarily complex ones, based on the same datafrom which feature predicates are learned, by heuristicallyselecting features that lead to the greatest information gain.A learned decision tree defines a Boolean function over selected feature predicates and can be converted to a first-orderlogic formula for verification.2 OverviewIn this section, we present the main technical contributionsof the paper using a number of simple programs that nonetheless have intricate invariants that confound existing inference techniques.Recursive CHC Structure. In the presence of loops andrecursive functions, a CHC constraint (such as constraint (2)in the example above) may take the form:ϕ p1 (T1 ) p2 (T2 ) · · · pk (Tk ) pk 1 (Tk 1 )2.1( )Learning Arbitrarily-Shaped InvariantsOur predicate search space includes the infinite Polyhedradomain: wT ·v b 0 where v is a vector of variables and theweight values of w and the bias value of b are unrestricted.The search space defines the concept class from which invariants are generated. We discuss the basic intuition of howPolyhedral predicates are discovered from data using theprogram shown in Fig. 3.We draw positive and negative samples collected in theloop head of this program, as depicted in Fig. 6(i). Intuitively,the positive samples ( ) are obtained by running the loopwith{(x, y) (0, 2), (0, 1), (0, 0), (0, 1)}which do not raise a violation of the assertion in Fig. 3; thenegative samples ( ) are derived from running the loop withwhere each pi (1 i k 1) is an unknown predicate symbolover a vector of first-order terms Ti and ϕ is an unknownpredicate-free formula with respect to some backgroundtheory. In this paper, we assume ϕ is expressible using lineararithmetic.A formula like ( ) is a recursive CHC constraint if, forsome pi (1 i k), pk 1 is identical to pi or pi is recursivelyimplied by pk 1 in some other CHCs.Dealing with recursive CHCs is challenging because thereare occurrences of mutually dependent unknown predicatesymbols on both sides of a CHC. Using a novel counterexample driven sampling approach, we iteratively solve a CHC system with recursive CHCs like ( ) by exploring the interplayof either strengthening the solutions of some pi (1 i k)with new negative data or weakening the solution of pk 1with new positive data. This process continues until either avalid interpretation of each unknown predicate or a counterexample to validity is derived.{(x, y) (3, 3), ( 3, 3)}which do throw an assertion violation.2As opposed to previous approaches [38] that tune linearclassification techniques to obtain a perfect classifier thatmust classify all positive samples correctly, our algorithmLinearArbitrary simply applies standard linear classification techniques (e.g. SVM [30] and Perceptron [9]) to thesamples in Fig. 6(i), to yield the linear classifier:1.1 Main ContributionsWe depict our overall framework in Fig. 2. Our machinelearning toolchain efficiently learns CHC interpretations,which are discharged by an SMT solver, in a fully automatedcounterexample-guided manner. This paper makes the following contributions: x y 1 0Notably, this classifier only partially classifies the positivedata, as depicted in Fig. 6(ii). In particular, the three positive We propose to learn arbitrarily shaped invariants withfeature predicates learned from linear classification2 We709describe how to obtain positive ( ) and negative ( ) data in Sec. 2.3.

PLDI’18, June 18ś22, 2018, Philadelphia, PA, USA// Program needsWV-invariantmain(){int x,y;x 0; y *;while(y! 0){if (y 0) {x--; y ;}else {x ; y--;}assert(x! 0);}}He Zhu, Stephen Magill, and Suresh Jagannathan// Program needs Polyhedral invariantmain(){int x,y,i,n;x y i 0; n *;while(i n) {i ; x ;if(i%2 0) y ;}assert(i%2! 0 x 2*y);}// Program with recursive functionfibo(int x) {if (x 1) return 0;else if (x 1) return 1;elsereturn fibo(x-1) fibo(x-2);}main(int x) {assert(fibo(x) x-1);}Figure 3. Program (a)Figure 4. Program (b)Figure 5. Program (c)(i) Samples of Program (a) in Fig. 3(ii) φ : x y 1 0(iii) φ : x y 1 0 x y 1 0(iv) φ : x y 1 0 x y 1 0 x y 1 0(v) φ : x y 1 0 x y 1 0 (x y 1 0 x y 1 0)Figure 6. Learning arbitrarily shaped invariants with linear classification on Program (a) in Fig. 3.samples that are above the classification line x y 1 0(in blue) are clearly misclassified.Because we are solving a verification problem, we cannottolerate any misclassification of a positive sample if we wishto have our presumed invariant pass the verification oracle(i.e., an SMT solver). We therefore apply the linear classification technique again on the misclassified positive samplesand all the negative samples; this results in the classificationshown in Fig. 6(iii), represented by the formula x y 1 0.The combined classifier is thus:in Fig. 6(iv) to yield the classifier shown in Fig. 6(v). FromFigs. 6(iv) and Fig. 6(v), we realize the conjunctive classifierx y 1 0 x y 1 0that fully separates {x 0, y 0} from all negative samples.Finally, combining the classifiers learnt from Figs. 6(ii),6(iii), 6(iv) and 6(v), we obtain the classifier x y 1 0 x y 1 0 (x y 1 0 x y 1 0)that separates all positive samples from all negative data.In summary, our machine learning algorithm LinearArbitrary uses off-the-shelf linear classification algorithms toseparate samples even when they are not linearly separable,and accepts classification candidates in arbitrary Booleancombinations. As a result, LinearArbitrary inherits allthe benefits of linear classification, explored over the yearsin the machine learning community, with well-understoodtrade-offs between precision and generalization. LinearArbitrary can efficiently search from the Polyhedra abstractdomain, which among the many numerical domains introduced over the years, is one of the most expressive (andexpensive). We reduce searching Polyhedral invariants towell-optimized linear classification tasks to gain efficiency;but, because we do not bound the Boolean form of invariants x y 1 0 x y 1 0that separates all positive samples except {x 0, y 0} fromall the negative data.Our only concern for the moment is how to deal withthe remaining unseparated positive data {x 0, y 0}. Weapply our learning algorithm again on this sample and allthe negative data. As shown in Fig. 6(iv), this yields anotherlinear classifier x y 1 0, which unfortunately nowmisclassifies a number of negative samples below the classification line x y 1 0 (in blue). Based on our goal thatevery positive sample must be separated from any negativeone, we again apply linear classification on the positive sample {x 0, y 0} and the misclassified negative samples710

A Data-Driven CHC SolverPLDI’18, June 18ś22, 2018, Philadelphia, PA, USAgenerated, we do not lose expressivity in the process. Observe that the learned classifier shown above requires bothconjunctions and disjunctions, a capability which is out ofthe reach of existing linear classification-based verificationapproaches [38].2.2information gain attributes that lead to a split that causes twopartitions, one with more positive samples and the other withmore negative. However, there are no guarantees on learninga high information gain split at every internal classificationtask. Leveraging information gain as a measure, we aim touse Decision Tree learning [31] to generalize the classification result produced by LinearArbitrary to heuristicallychoose attributes to build classifiers with higher informationgains. When used for classification, such attributes usuallygeneralize better and can yield simpler classifiers than lowinformation gain ones. Empirically a simple invariant is morelikely to generalize than a complex one [29].Decision Trees. A decision tree (DT) is a binary tree thatrepresents a Boolean function. Each inner node of the tree islabeled by a decision of the form f (v) c where f (v) is afeature attribute over a vector of variables v and c is a threshold. In our context, f (v) is learned from LinearArbitrary.Each leaf of the tree is labeled either positive or negative .To evaluate an input x, we trace a path down from the rootnode of the tree, going to a true branch (t) or a false branch(f) at each inner node depending on whether its feature f (x)is less or equal to its threshold c. The output of the tree on xis the label of the leaf reached by this process.For example, applying a DT learning algorithm [31] withfeature attributes drawn from atomic predicates in ρ, { 10i x 5y 6n 7, i x, i x, i x 4y, 2i 3x 4y 2n 34},which are all learned from LinearArbitrary, yields thefollowing decision tree:A Layered Machine-Learning ToolchainAn important design consideration of data-driven methodslike LinearArbitrary’s is how to best combat over-fittingand under-fitting of data, allowing learned invariants to beas general as possible. If the linear classifier imposes a highpenalty for misclassified points it may only produce overfitted classifiers; otherwise it may under-fit. In either case, aclassification-based verification algorithm may never reacha correct program invariant or may depend on a sufficientlylong cycle of sampling, learning, and validation to converge.The problem is exacerbated as the complexity of the conceptclass (here Polyhedra) increases.Ideally, we would like to learn a classifier within the sweetspot between under-fitting and over-fitting. Consider theexample program (b) in Fig. 4.3 Using LinearArbitrary, weobtain the following candidate invariant for the unknownloop invariant ρ (i, x, y, n) of this program:ρ (( 10i x 5y 6n 7 0 ) i x 0 i x 0 i 2x 2y 0) 2i 3x 4y 2n 34 0This candidate does not generalize and is not a loop invariantof the program. The first and last atomic predicates shownabove are unnecessarily complex and restrictive. We cancertainly ask for more samples from the verification oracleto refine ρ. However, a more fruitful approach is to exploreways to produce simpler and more generalizable invariantsfrom the same amount of data.Observe that each learned Polyhedral classifier implies ahyperplane in the form of f (v) 0 where f (v) wT · v b.We call f (v) a feature attribute selected for classification.For example, the first classifier of the invariant ρ above isbased on the feature attribute 10i x 5y 6n 7. Usingit as a separator leads to binary partitions of data each ofwhich contains a subset of positive and negative samples,causing ρ to be a disjunctive conjunctive formula. Clearly,the learning algorithm has made a trade-off, misclassifyingsome sample instances to avoid over-fitting based on itsbuilt-in generalization measure.Is the choice always reasonable? To generalize the invariant ρ, we first need to quantify the goodness of a featureattribute. In machine learning theory, information gain isoften used to evaluate the quality of an attribute f (v) fora particular classification task. Informally, the informationgain of an attribute evaluates how homogeneous the samplesare after choosing the attribute as a classifier. We prefer highAt the root node, the DT does not choose the complex attribute 10i x 5y 6n 7 as in LinearArbitrary. Instead,DT learning chooses the simpler attribute i x and learns athreshold 1 to bound the attribute because such a decisioncan lead to a higher information gain split as the left childnode of the root now contains only negative samples. Eventually, DT learning is able to pick two concise attributes i xand i 2x 2y to separate all the positive and negative dataand equip them with properly adjusted thresholds. As thereis a single decision path that leads to positive samples in theabove DT, combining all decisions along that path yields anew loop invariant for program (b) in Fig. 4: ( i x 1) i x 0 ( i 2x 2y 1) i 2x 2y 1which suffices to verify the program. Importantly, the use ofDT learning generalizes ρ on the same data from which ρwas learned before by LinearArbitrary, without having toask for more samples.3 We do not draw the samples of this program because they are complex tovisualize, involving constraints over four dimensions.711

PLDI’18, June 18ś22, 2018, Philadelphia, PA, USA2.3He Zhu, Stephen Magill, and Suresh JagannathanCounterexample Guided CHC SamplingThe prior sections assume the existence of positive and negative data sampled from the program to bootstrap learning.However, sampling from a program is challenging when thecode base is large. To make data-driven methods of the kindwe propose practical for scalable verification, we need toefficiently sample positive and negative data directly fromCHCs in an automatic manner, that nonetheless can scale toprograms with complex loops and recursive functions.The program (c) in Fig. 5 shows why recursion might confound existing learning based tools, and complicate samplingdata directly from CHCs. We show the CHCs of the program:x 1 y 0 p(x, y)(5)x 1 x 1 y 1 p(x, y)(6)Figure 7. Learning the invariant for Program (c) in Fig. 5with bounded positive samples that form derivation trees.Instead of explicitly unwinding recursive CHCs in H , westudy the problem from a data perspective. Our approachonly implicitly unwinds H by considering positive data sampled from a finite unwinding. A positive sample s is obtainedfrom a bounded unwinding of H if we are able to recursivelyconstruct a derivation tree using other positive samples, explaining how s is derived. For example, we draw the positivesamples collected for p using this approach in Fig. 7. For thecounterexample generated above, we can add (x 3, y 2)as a positive example of p(x, y) because both (x 2, y 1)and (x 1, y 1) are already labeled as positive, which canbe used to explain how (x 3, y 2) is derived. Note thatin Fig. 7 every positive sample can be explained by how it isderived from other positive samples except data points generated from initial CHC (5) and (6). Samples that do not satisfythis condition are labeled negative to allow strengtheningCHC (7) until it becomes inductive. If p gets strengthened toomuch, it can be weakened later with more positive samplesfrom CHC (5) and (6).Similar to how solutions of recursive CHC systems arederived from unwound recursion-free CHC systems in modern CHC solvers, our verification framework uses a machinelearning algorithm to explain why p has a valid interpretation by separating positive samples of p which are derivedfrom implicit unwindings of the CHCs (5)-(8), from samplednegative data, relying on the underlying machine learning algorithm to generalize our choice of the solution to p. For thepositive data and negative data depicted in Fig. 7, applyingLinearArbitrary yields a classifier,x 1 x , 1 p(x 1, y1 ) p(x 2, y2 ) y y1 y2 p(x, y)p(x, y) y x 1(7)(8)To prove this program correct, it is sufficient to find an interpretation of p(x, y) which encodes the input and outputbehavior of the function fibo. CHC (5) and (6) correspondto the initial cases of the function while constraint (8) encodes the safety property to be satisfied by p(x, y). CHC (7)corresponds to the inductive case. Given an interpretationof the unknown predicate p, it is straightforward to samplepositive data from CHC (5) and (6) and negative data fromconstraint (8), but is less clear how to deal with the recursive CHC (7) because there are occurrences of the unknownpredicate symbol p on both sides of the constraint.For example, suppose that we interpreted p(x, y) to be: 23x 25y 22 0 y 1 0and we used an SMT solver to check the validity of constraint(7) under this interpretation. The following counterexamplemight be returned:p(x 1, y1 ) p(2, 1), p(x 2, y2 ) p(1, 1),p(x, y) p(3, 2)This is a counterexample because the second conjunct inthe above interpretation of p(x, y) is false when y 2. Atthis point, however, it is not clear whether we should add(3, 2) as a new positive sample, given that p(2, 1) and p(1, 1)are true, thus implying p(3, 2) is true because of CHC (7);or, whether we should add (2, 1) and/or (1, 1) as negativesamples, given that p(3, 2) is a counterexample.4Our approach is inspired by modern CHC solvers [1, 25,32]. They solve a recursive CHC system H by iterativelyconstructing a series of recursion-free unwindings of H ,which can be considered as derivation trees of H that areessentially logic program executions [25], whose solutionsare then generalized as candidate solutions of H . x y 1 0 x 2y 0that correctly interprets p(x, y) in CHCs (5)-(8) and henceproves that the program (c) in Fig. 5 is safe.Of course, we may have to iteratively increase the implicit unwinding bound based on newly discovered positivesamples until we can prove the satisfiability of the CHCs. Empirically, we find that our counterexample guided samplingapproach is efficient. For example, consider changing the annotated assertion in Fig. 5 to assert (x 9 fibo(x) 34),a difficult verification task in the SV-COMP benchmarks [39].4 Notablythe ICE framework [10, 11] is not suitable to deal with this counterexample as p occurs more than once on the left-hand side of CHC (7).712

A Data-Driven CHC SolverPLDI’18, June 18ś22, 2018, Philadelphia, PA, USATo verify the program, our tool needs to sample positive dataof the fibo function using at least inputs from 0 to 10. Itproves the program in less than one minute with a complicated disjunctive and conjunctive invariant learned whilethe best two tools in the recursive category of SV-COMP’17either timeout or run out of memory [39] .is deemed to be better than one which closely approachesexamples of one or both classes.In practice, data is complex and may not be separated perfectly with a hyperplane. In these cases, we must relax ourgoal of maximizing the margin of the hyperplane that separates the classes . To generalize, some training data should beallowed to violate the hyperplane. To constrain the amountof margin viol

A formula like ( ) is a recursive CHC constraint if, for somepi (1 i k),p k 1 is identical topi orpi is recursively implied byp k 1 in some other CHCs. Dealing with recursive CHCs is challenging because there are occurrences of mutually dependent unknown predicate symbols on both sides of a CHC. Using a novel counterexam-