Transcription

Fast Fine-Grained Global Synchronization on GPUsKai WangUniversity of Texas at Austinkaiwang@cs.utexas.eduDon FussellUniversity of Texas at Austinfussell@cs.utexas.eduAbstractThis paper extends the reach of General Purpose GPUprogramming by presenting a software architecture thatsupports efficient fine-grained synchronization overglobal memory. The key idea is to transform global synchronization into global communication so that conflictsare serialized at the thread block level. With this structure, the threads within each thread block can synchronize using low latency, high-bandwidth local scratchpadmemory. To enable this architecture, we implement ascalable and efficient message passing library.Using Nvidia GTX 1080 ti GPUs, we evaluate our newsoftware architecture by using it to solve a set of fiveirregular problems on a variety of workloads. We findthat on average, our solutions improve performanceover carefully tuned state-of-the-art solutions by 3.6 .CCS Concepts Computer systems organization Single instruction, multiple data; Software and itsengineering Mutual exclusion; Message passing.Keywords GPU; Synchronization; Irregular WorkloadsACM Reference Format:Kai Wang, Don Fussell, and Calvin Lin. 2019. Fast Fine-GrainedGlobal Synchronization on GPUs. In 2019 Architectural Supportfor Programming Languages and Operating Systems (ASPLOS’19), April 13–17, 2019, Providence, RI, USA. ACM, New York,NY, USA, 14 pages. onGPUs provide significant performance advantages overtraditional CPUs, particularly for highly regular dataparallel workloads. Given the potential performancegains, there has been strong interest in applying GPUs toirregular computations. For example, there has been significant work in graph and tree-based algorithms [7, 8,Permission to make digital or hard copies of all or part of this workfor personal or classroom use is granted without fee provided thatcopies are not made or distributed for profit or commercial advantageand that copies bear this notice and the full citation on the firstpage. Copyrights for components of this work owned by others thanACM must be honored. Abstracting with credit is permitted. To copyotherwise, or republish, to post on servers or to redistribute to lists,requires prior specific permission and/or a fee. Request permissionsfrom permissions@acm.org.ASPLOS ’19, April 13–17, 2019, Providence, RI, USA 2019 Association for Computing Machinery.ACM ISBN 978-1-4503-6240-5/19/04. . . 15.00https://doi.org/10.1145/3297858.3304055Calvin LinUniversity of Texas at Austinlin@cs.utexas.edu18, 22, 33], and Nvidia’s recently released Volta [29] GPUsupports a new SIMT execution model that makes it easier for programmers to use fine-grained synchronizationwithout worrying about SIMT-induced deadlocks [12].Unfortunately, despite hardware support for atomicinstructions, more general forms of fine-grainedsynchronization—such as those that use fine-grainedlocks to provide mutual exclusion to global memoryupdates—are one of the most expensive operations thatcan be performed on a GPU. Two properties of GPUscontribute to this problem. First, GPUs typically runthousands of threads, which can lead to massive lockcontention. Second, unlike CPUs, GPUs do not havecoherent caches that could allow lock retries to be confined to local caches, so GPU retries must access the lastlevel cache. With these two properties, lock contentioncan trigger a massive number of retries that need totouch the last level cache, creating a serious bottleneck.Moreover, fine-grained synchronization is often usedfor irregular workloads, so these retries often involvenon-coalesced accesses that further degrade global memory throughput. Finally, lock retries also increase thelatency of lock operations, which can significantly limitperformance when many threads contend for a smallnumber of locks. Thus, fine-grained synchronization cancause both throughput and latency problems. As a result,GPU programmers typically avoid fine-grained synchronization, often resorting to less efficient algorithms thatadmit less parallelism.In this paper, we present a new software architecture that supports efficient fine-grained synchronizationon GPUs by moving lock operations from slow globalmemory to the GPU’s faster word-addressable localscratchpad memories (i.e. shared memory in CUDA orlocal memory in OpenCL). However, these scratchpadmemories are not visible to all threads, so our solutionviews the GPU chip as a distributed system, whereeach thread block (TB) is a node equipped with a fastprivate memory (i.e., scratchpad memory), and the multiple nodes share a communication medium (i.e., globalmemory). Thus, our architecture decouples a baselineGPU kernel into two types of thread blocks that runconcurrently on the GPU. Server TBs handle updates tocritical section data1 . Client TBs execute all other aspectsof the baseline kernel, with added procedure calls to the1 Wedefine critical section data to be the data accessed in a criticalsection.

server TBs, which access the critical sections on behalfof the client TBs.More specifically, our solution partitions critical section data among server TBs so that each server TBworks on an exclusive set of data without conflicts.When a client TB needs to update some critical sectiondata, it sends a message to the appropriate server TB.Threads within a server TB use locks implemented inthe scratchpad memory to maintain mutual exclusionwhen processing the clients’ requests. To support thisarchitecture, we implement a software message passinglibrary so that client TBs can efficiently communicatewith server TBs via global memory. The benefit of oursolution is that clients send these messages just onceover global memory—subsequent lock requests and retries are performed by the server TBs without accessingglobal memory.From the programmer’s perspective, our softwarearchitecture is straightforward to implement—in mostcases, the code in critical sections is moved to serverthreads, while the remaining code remains in Clientthreads.This paper makes the following contributions: We implement a software client-server architecture that supports efficient global synchronizationon GPUs by moving lock operations from globalmemory to fast scratchpad memories. We describe a highly optimized software inter-TBmessage passing system that supports this clientserver architecture. Using Nvidia GTX 1080 ti GPUs, we evaluateour solution on a set of irregular problems, including graph-based problems and microbenchmarks, showing that our approach yields an average speedup of 3.6 over the best known GPUsolutions for these same problems. We provide a performance analysis that explainswhy our solution is profitable.The remainder of this paper is organized as follows.In Section 2, we place our work in the context of priorwork. After describing our solution in Section 3, wepresent our evaluation methodology in Section 4 andour empirical evaluation in Section 5, before concluding.2Related WorkWe now describe relevant prior work in the area offine-grained synchronization for both CPUs and GPUs.2.1CPU-Based SolutionsWe begin by comparing our solution with previous workthat applies similar ideas to CPUs.The idea of designating one or more threads as servers(or delegates) to handle critical sections has been proposed for multi-core and many-core CPUs [9, 14, 20, 27,32, 34, 35, 41, 43] The server threads can be chosen eitherstatically [9, 27, 35, 41] or dynamically [14, 20, 32, 34].While their designs differ, these solutions share theprinciple of transforming synchronization into communication, that is, to let clients offload (via messagepassing) the updates for the same data to the same serverso that critical section updates can be serialized at theserver. Our work is the first to apply similar principlesfor GPUs. Since GPU architectures differs significantlyfrom that of CPUs, our solution differs from previouswork in a number of key ways.First, CPUs have fewer hardware threads, so previous work uses individual threads as servers, and sinceconflicts are serialized to a single thread, no furthersynchronization is needed for processing requests. SinceGPUs have large thread or warp counts, our solutionuses thread blocks (TBs) as servers so that when requestsare processed, threads in the TB synchronize via the fastscratchpad memory.Second, CPUs have implicit hardware inter-core communication mechanisms in the form of cache coherenceand, in many cases, sophisticated on-chip interconnects.So previous solutions employ software message passingsystems on top of these mechanisms for a relativelysmall number of threads. By contrast, our solution mustscale to the much larger number of threads on GPUs,which lack such hardware support for inter-SM (interTB) communication.2.2GPU SolutionsYilmazer, et al. [46] propose a hardware-accelerated finegrained lock scheme for GPUs, which adds support forqueuing locks in L1 and L2 caches and uses a customizedcommunication protocol to enable faster lock transfer and to reduce lock retries for non-coherent caches.ElTantawy, et al. [13] propose a hardware warp scheduling policy that reduces lock retries by de-prioritizingwarps whose threads are spin waiting. In addition, hardware accelerated locks have also been proposed forCPUs [4, 25, 42, 47].By contrast, our solution does not require hardwaremodification. Moreover, a rough comparison with theirpublished results (see Section 5.4) suggests that oursolution performs as well if not better than previoushardware solutions, likely because our solution solvesthe problem at higher level by using scratchpad memories for global synchronization.Li, et al [24] propose a lightweight scratchpad memorylock design in software for older Nvidia GPUs (Fermiand Kepler) that uses software atomics for scratchpadmemories. Their solution improves local (i.e. intra-TB)

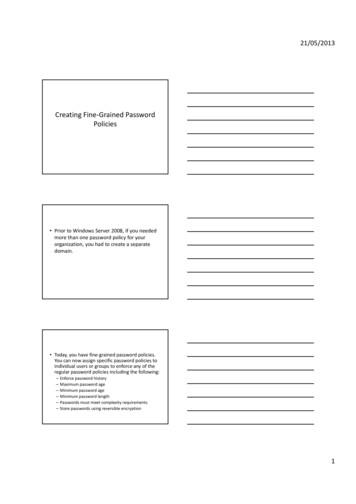

synchronization performance, whereas our solutionsolves global (i.e. inter-TB) synchronization problems.Instead of focusing on performance, others have focused on the programmer productivity aspect of finegrained synchronization. ElTantawy, et al [12] introducea compiler and a hardware scheme that avoids SIMTinduced deadlocks. Xu, et al [44] present a lock designthat reduces memory storage and uses lock stealingto avoid deadlock. Liu, et al [26] describe a compilerscheme that eliminates redundant locks in the program.In addition, previous work attempts to improve theperformance and programmability of GPUs by supporting transactional memory [10, 11, 15, 16, 37, 45] and byproviding memory consistency and memory coherenceon GPUs [5, 19, 36, 38–40].3Our SolutionOur solution is a software architecture that uses synchronization servers to enable efficient global fine-grainedsynchronization. The key idea is to replace global lockoperations with (1) scratchpad memory lock operationsand (2) message passing implemented in global memory.We describe our architecture in Section 3.1.Our software message passing system, described inSection 3.2, makes efficient use of global memory byemploying optimizations that reduce the overhead ofmessage passing operations and that promote coalescedmemory accesses.The synchronization server design described in Section 3.1 handles cases where the original kernel codedoes not have nested lock acquisition. Additional components and optimizations are needed to handle nestedlocks, and these are described in Section 3.3.3.1Synchronization Server ArchitectureConsider a baseline kernel that uses locks to protectsome data for mutually exclusive updates. In the schemeshown in Figure 1(a), the protected data can be referenced by threads in any TB, so locks are implementedin global memory. The slow global memory becomes athroughput bottleneck for lock retries on conflicts, andit becomes a latency bottleneck for transferring lockownership among threads.Our solution separates the original kernel into twokernels—the client kernel, which handles the non-criticalsections, and the server kernel, which executes the criticalsection on behalf of the client kernel. The two kernelsrun concurrently; each kernel launches a number of TBs,where the combined TB count of the two kernels doesnot exceed the maximum occupancy of the GPU. Anoverview of our scheme is shown in Figure 1(b).On the client side, client threads can still updatearbitrary protected data items, but they do so indirectly(a) Global LocksThreadBlocks(b) Our SolutionTB0TB1T0 T1 T2 T3T0 T1 T2 taTB1T0 T1 T2 T3T0 T1 T2 T3offloadby data idlock,(retry,)modify data,unlockL0TB0T0 T1 T2 T3T0 T1 T2 T3lock,(retry,)unlockServerTBsTB0ScratchPad LocksTB1ScratchPad Locksmodify dataGlobalD0 D1MemOwner:Server TB0D2D3ProtectedDataOwner:Server TB1Figure 1. Fine-grained mutual exclusion with (a) global locks(baseline) and (b) our solution.by sending messages to server TBs; this is handled byour software message passing system.On the server side, the ownership of protected datais partitioned among server TBs so that each data itemis accessible exclusively through a unique server TB.Client threads choose the appropriate server TBs asdestinations of messages based on data IDs. Threadswithin each server TB service clients’ requests in paralleland use scratchpad memory locks to maintain mutualexclusion.In essence, our solution replaces global lock operations with a two-level mutual exclusion scheme. At theglobal level, instead of synchronizing among themselveswith locks, client threads send messages to server TBswhen they need to update protected data items. Sincedata item IDs have a one-to-one mapping to server TBs,all update requests for the same data are guaranteedto be sent to the same server TB, so mutual exclusionis maintained at the global level, which allows threadswithin each server TB to synchronize using scratchpadmemory locks at the local level. Scratchpad memories arehigh-bandwidth, low-latency on-chip SRAM that support word-granularity accesses, where accesses wasteno bandwidth due to unused cache-line data. Thus,scratchpad memories are ideal for lock accesses andretries with irregular memory accesses.Compared to a baseline kernel with global lock operations, our solution makes better use of global memorybandwidth. Instead of contending for locks throughglobal memory, contentions are handled in scratchpadmemories. In addition, since fine-grained locks are usually used for irregular workloads, lock accesses tendto be non-coalesced memory accesses; by contrast, ourmessage passing system has various optimizations thatallows clients and servers to access global memory in amore coalesced manner (as we will show in Section 3.2).Aside from bandwidth benefits, our solution also avoids

transferring lock ownership in the high latency globalmemory, since locks are now implemented in the lowlatency scratchpad memories. Because the latency oftransferring lock ownership is on the critical path, the reduced latency is beneficial when protected data updatesare distributed in a manner that concentrates a largenumber of updates to a small number of data items.3.1.1ImplementationOur solution is implemented at the source code levelwithout modifications to the compiler or the hardware.This section shows how a baseline kernel with globallocks (Listing 1) would be modified to use our synchronization server architecture (Listing 2).Listing 1. Original Kernel with Global Locks12345678910111213void k e r n e l ( . . . ) {/ / begin c r i t i c a l se ct io nbool s u c c e s s f a l s e ;do {i f ( try lock ( data id ) ) {c r i t i c a l s e c ( data id , arg0 , arg1 ) ;threadfence ( ) ;unlock ( d a t a i d ) ;success true ;}} while ( ! s u c c e s s ) ;/ / end c r i t i c a l s e c t i o n}Listing 1 shows how a critical section is encapsulatedinto a function (line 6) and is protected by a try-lockloop. Data id, which can be a single word or a datastructure, refers to the data item to be updated, and arg#are additional arguments—generated by computationsin the non-critical sections—passed to the critical section.Listing 2 shows how our software architecture usestwo new procedures, send msg and recv msg (lines 2-3),to pass messages from a client TB to a server TB, wheredst in send msg denotes the server TB. The messagesize (in terms of words) corresponds to the number ofarguments of the critical section function.The client kernel (lines 5-16) corresponds to the original baseline kernel in Listing 1, where the critical sectionin the try-lock loop has been replaced with messagesending to server TBs. The code at line 8 maps offloadedwork to server TBs based on data IDs. The mapping interleaves the ownership of data items to server TBs. Thisfine-grained partitioning provides better load balanceamong server TBs than a coarse-grained partitioning.However, data IDs are dynamically generating by clientTBs depending on data inputs, so load imbalance canstill occur when a large number of threads serialize onrelative a small number of data items. Even in thesescenarios, the original kernel with global locks suffersmuch more due to high latency global memory and theinterference caused by lock retries.Listing 2. Pseudocode Code For Our 62728293031323334353637383940/ / procedure c a l l s f o r message passingvoid send msg ( i n t dst , i n t data id , any arg0,.) ;bool recv msg ( i n t& data id , any& arg0 , . . . ) ;void c l i e n t k e r n e l ( . . . ) {/ / e x e c u t e non c r i t i c a l s e c t i o n./ / map d a t a t o s e r v e ri n t s e r v e r i d d a t a i d % num server TB ;/ / offload c r i t i c a l section executionsend msg ( s e r v e r i d , data id , arg0 , arg1 ) ;/ / e x e c u t e non c r i t i c a l s e c t i o n.}void s e r v e r k e r n e l ( . . . ) {/ / s c r a t c h p a d memory l o c k sshared i n t l o c k s [ 4 0 9 6 ] ;/ / loop to handle c l i e n t requestsbool t e r m i n a t e f a l s e ;while ( ! t e r m i n a t e ) {i n t data id , arg0 , arg1 ;i f ( recv msg ( data id , arg0 , arg1 ) ) {/ / r e c e i v e d msg , do c r i t i c a l s e c t i o nbool s u c c e s s f a l s e ;do {i f ( t r y l o c k l o c a l ( data id ) ) {c r i t i c a l s e c ( data id , arg0 , arg1 ) ;threadfence block ( ) ;unlock local ( data id ) ;success true ;}} while ( ! s u c c e s s ) ;}t e r m i n a t e c h e c k t e r m i n a t i o n ( ) ;}}The server kernel (lines 18-40) executes the criticalsection on behalf of the clients, so any try-lock loop in theoriginal kernel is now in the server kernel (lines 29-36),which uses locks implemented in scratchpad memoriesrather than global memory. Since scratchpad memorieshave limited size, there can be a limited number oflocks; multiple data IDs can be mapped (aliased) to thesame lock. On modern Nvidia GPUs, for example, themaximum TB is 1K threads; we use 4K locks per serverTB to reduce the chance of unnecessary serializationscaused by aliasing.Server threads execute a loop (lines 19-34) that listensto clients’ messages and terminates when all clientsare finished. The termination condition is a flag (setby clients) in global memory, which is only checked

periodically by servers; the overhead of checking fortermination is negligible because only one thread perTB checks the flag and then informs the other threads ofthe condition.Our solution is mostly straightforward for programmers. For most cases, our code in Listing 2 can be usedas a template; the programmer needs to insert codefor both non-critical sections and critical sections intothe indicated places. The server architecture for nestedlocks (discussed in Section 3.3) is more complex, but atemplate is also provided for that case.The maximum occupancy of the GPU (i.e. the numberof TBs that can be executed concurrently) can be determined by API calls. Some of those TBs are used by theserver kernel, and remaining TBs are used by the clientkernel. The ratio of server to client TBs is based on therelative amount of work performed in the critical sections versus the non-critical sections. The programmeris responsible for setting this parameter based on thecharacteristics of the specific application. This may alsorequire some tuning by the programmer.3.2Our Message Passing SystemThis section describes our software message passingsystem, where client threads send messages and serverthreads receive messages. Message passing is achievedby reading and writing buffers that reside in globalmemory. There is one message buffer per receiver TB,which is shared by all threads of that TB and not accessedby other receiver TBs. To send a message, a thread writesto the specific buffer associated with the receiving TB.We first describe our basic algorithm for sending andreceiving messages, before describing optimizations forefficiently using global memory.3.2.1Our Basic AlgorithmEach message buffer is a large array accessed as a circularbuffer (we use 4K message entries as a default); our datastructure is lock-free and does not use global barriers.The message buffer has the following metadata as shownin Figure 2.The write idx is atomically incremented by the senderto reserve a buffer index for writes. To determinewhether the reserved index is free to write, the senderchecks the read idx, which is atomically incremented bythe receiver after reads. The bit-mask has one bit corresponding to each buffer location; it is set by the senderafter the message data has been successfully written toL2 (i.e. after the memory barrier2 ), and it is checked bythe receiver to find available messages for reads. The2 Memorybarriers are supported in CUDA by calls to threadfence(),and they are supported in OpenCL by calls to mem fence(CLK GLOBAL MEM FENCE).(a) Send One Messageread idxwrite idxInitial20State:❶ atomicIncto reserveidx 2write idxAfter3State:Writer2:data arrayn/a n/a n/a r0bit-mask0001❷ check if ❸ write data,❹ set bit 2idx 2 is free (idx%buf size), atomicOrthen membarread idxbit-maskdata array0101n/a r2 n/a r00(b) Receive One MessageInitialState:bit-mask0101Readr0:❶ find an availablemsg (set bit), thenatomicAnd to clearAfterState:bit-mask0100data arrayr2 n/a r0read idx0❷ read data❸ Inc tofree upidx 0data arrayr2 n/a n/aread idx1n/an/aFigure 2. The data structure of a single message buffer andthe basic algorithm for reading and writing.bitmask is needed because concurrent sender threadsmay finish writing out-of-order, e.g. in the figure, bufferlocation 1 is reserved before location 2, but location 2 iswritten before location 1, so the receiver must be able todetermine whether a specific location is valid.3.2.2Our Optimized AlgorithmEach message buffer is concurrently accessed by tens ofthousands of threads, so our system’s buffer accessesneed to be highly efficient. Unfortunately, our basic algorithm is inefficient, because threads access the messagebuffer individually, so each message send or receiveincurs the overhead of accessing metadata in globalmemory (e.g. bit-mask, etc.); moreover, the lanes of asender warp may have different destination buffers (receiver TBs), and the lanes of the receiver warp might notread consecutive buffer locations, so memory accessescan be non-coalesced.Our optimized solution amortizes the cost of globalmemory accesses by aggregating both messages andtheir metadata. Senders aggregate messages by collecting them in local buffers residing in scratchpad memorybefore being written to global message buffers in bulk.Receivers aggregate message passing metadata accessby having a single warp, known as a leader warp, manipulate the metadata on behalf of the other warps, whichwe refer to as the follower warps.For senders, each TB has a set of small message buffersin the scratchpad memory, with each local buffer corresponding to one receiver TB. Messages from multiplewarps are aggregated in local message buffers beforebeing written to global message buffers, so metadataoverhead is amortized and global memory accesses are

typically coalesced. In addition, the metadata overheadof accessing read idx (Figure 2(a)) can be further reducedby keeping a local scratchpad copy and updating it lazily.The design is described in Section 3.2.4.For receivers, with one warp-granularity global memory access, the leader warp reads multiple bit-masksto find available messages and then uses a set of assignment buffers in local scratchpad memory to assignthese messages to follower warps. The leader warp onlyreads the bit-mask, while the actual message data isread by follower warps. The messages assigned to eachfollower warp are stored in consecutive buffer locations,so accesses to message data are coalesced memory accesses. Since the warp can read 32 bit-masks which eachrepresent 32 buffer locations, the leader warp can withone memory access read the bit-mask of up to 1024messages. The design is described in Section 3.2.3.(a) leader warp operations(b) follower warps operationsstep a1: the leader warp reads bit-maskshead idx:0last msg:12step b1: follower warps read msg datafw0fw1fw2fw3asg-bufs0 4 0 0 4 4 0 4 8 0 1 1181011 .1216followerwarp 0:Lane0 Lane1 Lane2 Lane3msg data(global):calculate num of valid msgs to readnum of msgs: 133.2.3Receiver DesignFor receiving messages, the heavy lifting is performedby the leader warp. Figure 3(a) shows the operationsperformed by the leader warp in one iteration. In stepa1, 4 lanes of the leader warp read 4 bit-masks (words)from global memory. Here, head idx denotes the current progress of bit-mask read. The leader warp onlyhandles consecutive available messages (i.e. consecutive 1s) starting from head idx; in the figure, the lastmessage to handle is at location 12. This requirementsimplifies buffer management for two reason. First, theleader warp does not have to backtrack to check previously unavailable messages. Second, when assigningmessages to follower warps, the set of valid messagescan be simply represented as a range starting from thehead idx.To derive the total number of consecutive availablemessages, the leader warp performs a reduction onbit-masks read by individual lanes, which is achieved3 Of course, our overall software architecture has the added advantagethat it does not perform lock retries in global et current3.idx:3reset counter 0: 13 4step a2: assign msgs to follower warps (FWs)for actual msg (buffer) data readsincrementcurrentcount by 4step b2: when current matches target,update read idx and reset bit-masks tofree up buffer locationsasg-bufs0 4 0 0 4 4 0 4 8 0 1 12(local):fw1fw3fw2reset counter 0: 13 13leaderwarp:reset assign assigntarget currentcounter sizeptrID (3bit) (6bit) (23bit)reset13 0countersn/a n/a(local):Lane0 Lane1 Lane2 Lane3bit-mask0000 0000 0000 0xxx .(global):0481216read idx(global):.Benefits Over Global Locking. Our message passingsystem makes much more efficient use of global memory than codes that access global locks.3 The key insight is that global lock operations must directly accessspecific lock variables that are spread throughout theaddress space, so memory accesses are inherently noncoalesced. By contrast, because our solution handleslocking indirectly and locally in the server TBs’ scratchpad memories, a client’s send messages are not boundto specific global memory addresses; therefore, thesemessages can be placed consecutively in circular buffers,allowing our optimized solution to perform coalescedreads and writes of global memory.Lane0 Lane1 Lane2 Lane313increment by 13Figure 3. Operations performed by (a) the leader and (b) thefollower warps when reading messages—assuming 4 bits perbit-mask words, 4 lanes per warp, and 4 follower warps perTB.by two intra-warp communication functions—vote ballot and shuffle idx, which are register-like operationssupported by hardware. Vote ballot accepts a 0 or 1bit (ballot) from each lane as its argument and returnsthe entire warp’s vote as a bit-vector (all lanes get thesame bit-vector), so each lane knows how other laneshave voted. For our purpose, any lane that has a 0 in itsbit-mask (i.e. unavailable messages) votes 1; otherwiseit votes 0. In the figure, lane 3 votes 1, and other lanesvote 0, so we know that the sequence of consecutive1s ends somewhere in lane 3’s bit-mask. Next, we justneed to know exact bit position of the first 0 in lane 3’sbit-mask, and this is done by using shuffle idx, whichallows all lanes to read a register value from a specificlane. With this information, the warp determines thatthere are 13 consecutive valid messages starting fromthe head idx (at 0).In step a2, these valid messages are assigned to follower warps via assignment buffers, which are packed32-bit words in scratchpad memory. Each assignmentbuffer corresponds to one follower warp. The assign idxand the assign size fields indicate the starting locationand the number of messages to read. As shown in step b1(Figure 3(b)), each follower warp reads message data according to its assignment buffer, where assign size, thenumber of assigned messages for each follower warp,has a maximum value of the GPU’s warp size (e.g. 32).To wait for work from the leader warp, the followerwarps poll the assignment buffers. Similarly, when follower warps are busy, the leader warp polls assignment

buffers to wait for available follower warps. Both typesof polling only generate accesses to scratchpad memory.Once messages are read, their buffer locations are freedby resetting the bit-masks and updating the read idx(see Figure 2(b)). Reset counters in scratchpad memoryare used to aggregate these global memory metadataoperations, so the overhead can be paid once for a largenumber of messages. Step a2 shows that when assigningmessages, the leader warp initializes the target field of areset counter to the number of message assigned and

fine-grained synchronization for both CPUs and GPUs. 2.1 CPU-Based Solutions We begin by comparing our solution with previous work that applies similar ideas to CPUs. The idea of designating one or more threads as servers (or delegates) to handle critical sections has been pro-posed for multi-core and many-core CPUs [9, 14, 20, 27,