
UNITED STATES POSTAL SERVICE

Postal Regulatory Commission
Submitted 1/26/2018 4:12:03 PM
Filing ID: 103608
Accepted 1/26/2018

Performance Audit Plan
Internal Service Performance Measurement
January 23, 2018

INTRODUCTION

The Postal Service is developing the Internal Service Performance Measurement (SPM) System to consolidate Commercial Mail and Single-Piece First-Class Mail (SPFC) measurement into a single internal system. Internal SPM is supported by data feeds from multiple postal systems so that it can store all of the data relevant to the measurement of Market Dominant Products.

The Postal Service contracted a third-party vendor to develop an audit approach for reviewing the Internal SPM system and processes. The Postal Service is in the process of procuring a third-party vendor to conduct the Internal SPM audit, which will include these four major tasks:

Figure 1. Internal SPM Audit Major Tasks
1. Define scope and objectives.
2. Determine metrics to be measured.
3. Obtain information and review results.
4. Report findings and recommendations.

Audit tasks will be performed iteratively: activities within a task may be repeated as new information becomes available and throughout the lifecycle of the audit. Likewise, the findings of an audit report may lead to revisions of the audit objectives, metrics, analysis, or reporting tasks.

Each of the audit tasks is described in more detail below.

1. DEFINE SCOPE AND OBJECTIVES

The first step in conducting an audit is to confirm the scope and determine the objectives. A solid understanding of the audit's scope and objectives is needed to define and complete the subsequent steps of the audit.

The objectives of this audit are to evaluate the accuracy, reliability, and representativeness of the Internal SPM results.
As background, the Government Accountability Office (GAO) published a report on September 30, 2015, entitled Actions Needed to Make Delivery Performance Information More Complete, Useful and Transparent.¹ In response, the Postal Regulatory Commission issued Order No. 2491² on October 29, 2015, inviting public comment on the quality and completeness of SPM data measured by the USPS. This included a request for a description of potential deficiencies in the accuracy, reliability, and representativeness of SPM data.

In PRC Order No. 3490,³ published on August 26, 2016, one of the Commission's requirements is to provide information on current methodologies for verifying the accuracy, reliability, and representativeness of SPM data. Furthermore, Order No. 3490 cited stakeholder comments, such as the Public Representative's use of definitions from the Handbook on Data Quality Assessment Methods and Tools,⁴ including:
- Accuracy: "denotes the closeness of computations of estimates to the 'unknown' exact or true values."
- Reliability: defined as "reproducibility and stability (consistency) of the obtained measurement estimates and/or scores."
- Representativeness: defined as "how well the sampled data reflects the overall population."

This audit plan addresses these concerns by framing the audit metrics around accuracy, reliability, and representativeness, as detailed below.

¹ U.S. Government Accountability Office, Actions Needed to Make Delivery Performance Information More Complete, Useful and Transparent, 2015, http://www.gao.gov/products/GAO-15-756.
² Postal Regulatory Commission, Notice Establishing Docket Concerning Service Performance Measurement Data, 2015, http://www.prc.gov/dockets/document/93660.
³ Postal Regulatory Commission, Order Enhancing Service Performance Reporting Requirements and Closing Docket, 2016, http://www.prc.gov/dockets/document/96994.

Regarding scope, this audit will cover specific products, measurement phases, and major components of Internal SPM.

The audit will include a review of measurement results for letters and flats for the following products:
- Domestic First-Class Mail:
  o Single-Piece letters and cards
  o Presort letters and cards
  o Single-Piece and Presort flats
- USPS Marketing Mail:
  o High Density and Saturation letters
  o High Density and Saturation flats
  o Carrier Route
  o Letters
  o Flats
  o Every Door Direct Mail-Retail flats
- Periodicals
- Package Services:
  o Bound Printed Matter flats

Throughout this document, "Commercial Mail" refers to all of the products listed above excluding Single-Piece First-Class letters, cards, and flats. "Standard Mail" was renamed "USPS Marketing Mail" in January 2017.

The audit will evaluate each of the following phases of internal measurement:

First Mile

The First Mile phase covers the time mail spends in collection and the time from collection to initial automated processing on mail sorting equipment. For mail accepted at retail counters, First Mile is measured from acceptance of mail to initial processing.
As part of the measurement process, Postal Service personnel scan mailpieces from randomly selected collection points. They also scan barcodes on pieces with Special Services to accept them at retail counters. First Mile applies only to Single-Piece First-Class Mail (SPFC), which includes Single-Piece First-Class Mail letters and cards and the portion of First-Class Mail flats that are single-piece.

⁴ Id., quoting Manfred Ehling and Thomas Korner (eds.), Handbook on Data Quality Assessment Methods and Tools, at 9 (Eurostat, 2007).

The audit will verify whether Internal SPM provides First Mile data that are accurate, reliable, and representative. This verification will include a review of requirements, business rules, and inputs compared to sampling group inputs, the collection point inventory, the statistical framework, sampling targets, sampling request generation, scan requests, and actual scan results, among other components. For example, the sampling results will be reviewed for representativeness to determine whether there are under-coverage issues and whether bias may exist. Likewise, First Mile data will be assessed for reliability, including how samples are taken throughout each fiscal quarter and how proxy data and imputations affect results.

Processing Duration

The Processing Duration phase begins with the determination of "start-the-clock" information for measurement. Input data are used to determine the values of new start-the-clock fields, which include Induction Method, Actual Entry Time, Critical Entry Time, and the Start-the-Clock Date. For SPFC, calculating Processing Duration involves processing scan records to determine which records belong together to form the history of a single mailpiece, followed by determining similar critical fields required for measurement.

The audit will verify that the Internal SPM system has adequate processes in place to confirm that critical fields have been accurately calculated for both Commercial Mail and SPFC.
The audit will assess whether the processing duration and overall measurement processes yield representative and reliable results. For example, the audit will evaluate the representativeness of the Processing Duration data by summarizing the applicable Full Service or non-Full Service mail volumes measured in Internal SPM by product and mail entry type and comparing them with the population mail volumes from Revenue, Pieces, and Weights (RPW). Similarly, the audit organization will determine whether quality controls have been established to confirm that data are stored, associated, aggregated, and excluded according to established business rules and requirements.

Last Mile

The Last Mile phase measures the last leg of mail delivery, from the latest observed processing scan to delivery (or the initial delivery attempt). In Internal SPM, time in Last Mile is estimated by having Postal Service personnel scan mailpieces at randomly selected delivery points.

As with First Mile, the audit organization will assess the accuracy, reliability, and representativeness of Last Mile data and processes. The audit will verify the processes used to validate the quality and accuracy of calculations and data storage for delivery point coverage, sampling targets, sampling request generation, scan requests, and actual scan results, among other aspects. For example, the audit will evaluate whether carrier sampling training procedures and data collection processes support accurate measurement results. Likewise, by measuring the Last Mile response rate by postal administrative District, the audit will evaluate representativeness and determine whether nonresponse is immaterial or may indicate a potential bias based on what was and was not sampled.

Scoring and Reporting

The Internal SPM system calculates service performance estimates and produces reports of Market Dominant product performance scores.

The audit will assess whether appropriate processes have been established to produce accurate and reliable data for use in reports. Similarly, by reviewing rules and processes for data exclusions, documentation, and coverage, the audit will assess the representativeness of the data.

System Controls

Additionally, the audit will consider how business rules and administrative rights are applied within the Internal SPM measurement processes and will review the data recording and operating procedures for Postal personnel executing measurement processes. The audit will evaluate whether there are potential risks of manipulation or error due to insufficient restrictions or inadequate controls and/or procedures.

2. DETERMINE METRICS TO BE MEASURED

Using the defined objectives and scope, the next step in the audit plan is to determine which metrics should be measured. Three initial questions frame the metrics:
- Does the Internal SPM system produce results that are accurate?
- Does the Internal SPM system produce results that are reliable?
- Does the Internal SPM system produce results that are representative?

The third-party vendor that developed the audit plan reviewed materials from various organizations to develop the approach. One key input was a publication titled Designing performance audits: setting the audit questions and criteria,⁵ developed by the International Organization of Supreme Audit Institutions (INTOSAI). INTOSAI leveraged the Minto Pyramid Principle⁶ to define relevant sub-questions and sub-sub-questions.
All questions were developed to align high-level audit objectives with the Internal SPM phases and aspects.

The figure below shows an example of the initial accuracy question, followed by sub-questions focused on the measurement phases, followed by sample sub-sub-questions about specific Internal SPM processes to be examined. For further detail, see the Audit Measures Attachment to this document. Each question is aimed at providing support for answering the first-level question, "Does the Internal SPM system produce results that are accurate?"

⁵ Designing performance audits: setting the audit questions and criteria, rformance-audits-setting-audit-questions.pdf.
⁶ Minto, Barbara, The Minto Pyramid Principle, Ed. Minto International, Inc., 2003.

Figure 2. Example of Minto Pyramid Principle approach used to determine metrics for Internal SPM
Does Internal SPM produce accurate results?
- Are Design and Execution of First Mile processes accurate?
  o Is First Mile sampling accurately completed by carriers?
  o Is the collection box density data accurate and complete?
- Are Design and Execution of Processing Duration processes accurate?
  o Is Internal SPM handling PD system changes to provide data integrity and accuracy?
- Are Design and Execution of Last Mile processes accurate?
  o Is Internal SPM accurately capturing and associating all LM scan data?
  o Is Internal SPM accurately calculating and storing LM Profile data?

Following this approach for all three questions and across the phases of measurement, an initial set of audit questions was developed.

Once the questions were developed, the audit organization used INTOSAI's basic design matrix model to define the following for each sub-sub-question:
- Audit criteria: the expectation or "yardstick" to be used when answering the sub-sub-question.
- Audit information: the specific data or report to review or assess.
- Methods: the high-level steps the auditor will use to review information.

The figure below provides an example from the sub-sub-questions related to reliability. For further detail regarding the audit information, criteria, and compliance thresholds, please see Appendix B.
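To make the pyramid concrete, the sketch below models each question as a tree node, with only the sub-sub-questions (the leaves) carrying a design matrix entry. It is a minimal illustration under stated assumptions: the `AuditQuestion` and `DesignMatrix` classes and their fields are hypothetical and are not the audit organization's tooling, the grouping of sub-sub-questions under phases is read from the box labels in Figure 2, and the single matrix entry shown borrows the criteria and information of Measure 1 in Appendix A (the methods field is left open). The `leaves()` walk shows how each sub-sub-question traces back to the Level 1 question it supports.

```python
from dataclasses import dataclass, field
from typing import Iterator, List, Optional, Tuple


@dataclass
class DesignMatrix:
    """Hypothetical INTOSAI-style design matrix entry for one sub-sub-question."""
    criteria: str                   # the "yardstick" used to answer the question
    information: str                # the data or report to review or assess
    methods: Optional[str] = None   # high-level review steps (not filled in here)


@dataclass
class AuditQuestion:
    """One node in a Minto-style question pyramid (illustrative structure)."""
    text: str
    matrix: Optional[DesignMatrix] = None
    children: List["AuditQuestion"] = field(default_factory=list)

    def add(self, text: str, matrix: Optional[DesignMatrix] = None) -> "AuditQuestion":
        """Attach a sub-question and return it so it can receive its own children."""
        child = AuditQuestion(text, matrix)
        self.children.append(child)
        return child

    def leaves(self, path: Tuple[str, ...] = ()) -> Iterator[Tuple[Tuple[str, ...], "AuditQuestion"]]:
        """Yield (path from the Level 1 question, leaf) for every sub-sub-question."""
        here = path + (self.text,)
        if not self.children:
            yield here, self
        for child in self.children:
            yield from child.leaves(here)


# Rebuild the Figure 2 example.
root = AuditQuestion("Does Internal SPM produce accurate results?")
fm = root.add("Are Design and Execution of First Mile processes accurate?")
pdur = root.add("Are Design and Execution of Processing Duration processes accurate?")
lm = root.add("Are Design and Execution of Last Mile processes accurate?")

fm.add(
    "Is First Mile sampling accurately completed by carriers?",
    DesignMatrix(
        criteria="Procedures for sampling should be written and training provided "
                 "to employees responsible for performing sampling.",
        information="Validate that the sampling procedures are up-to-date and comprehensive.",
    ),
)
fm.add("Is the collection box density data accurate and complete?")
pdur.add("Is Internal SPM handling PD system changes to provide data integrity and accuracy?")
lm.add("Is Internal SPM accurately capturing and associating all LM scan data?")
lm.add("Is Internal SPM accurately calculating and storing LM Profile data?")

for path, leaf in root.leaves():
    print(" > ".join(path), "| matrix defined:", leaf.matrix is not None)
```

Keeping the full path from each leaf to the root makes it simple to report which Level 1 question is affected when a sub-sub-question is flagged.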

Figure 3. Example Decision Tree

Table 1. Example of Audit Measures for Reliability: Does the Internal SPM System Produce Reliable First Mile Results?

Row 1
Phase: First Mile
Level 1: Is FM data reliable?
Level 2: Are First Mile results designed and executed to produce reliable results?
Level 3: Is the use of imputations for FM Profile results limited so that FM measurement represents the District's performance?
Audit Criteria (Yardstick): Most districts should have a limited amount of volume for which imputed results are used within the quarter.
Audit Information: Review the volume of mail for which imputations are required.

Row 2
Phase: First Mile
Level 1: Is FM data reliable?
Level 2: Are First Mile results designed and executed to produce reliable results?
Level 3: Is the use of proxy data for FM Profile results limited so that FM measurement represents the District's performance?
Audit Criteria (Yardstick): Most districts should have a limited amount of volume for which proxy results are used within the quarter.
Audit Information: Review the volume of mail where proxy data are used.

3. OBTAIN INFORMATION AND REVIEW RESULTS

After the measures are established, the next step in the audit plan is to obtain information and review results. The audit organization will use the Quality and Audit computing environment to collect and analyze data. This includes:
- Taking snapshots of key system tables for analysis
- Pulling samples of data from very large system tables for review
- Compiling data aggregates to summarize data for review and/or analysis
- Producing reports and files needed for information

In addition, information may be gathered by conducting interviews or reviewing available reports and other documents.

Once the information is obtained, the audit organization will review it and compare it to the audit criteria. This includes organizing all of the measures, audit criteria, audit information, and methods into a logical, analytical flow or decision tree. The audit organization will use this process to review data for the highest-level question and answer the initial question. If the quarterly data indicate possible issues with accuracy, reliability, or representativeness, additional information will then be gathered and reviewed as needed. Throughout this portion of the audit, results will be documented and potential issues will be flagged. Likewise, the audit organization will quantify the impact or potential impact of flagged accuracy, reliability, and representativeness issues whenever possible. When the review is complete, next steps will be determined.

4. REPORT FINDINGS AND RECOMMENDATIONS

The final step in conducting the audit is to report the findings and recommendations for the fiscal quarter under evaluation. This will include a high-level summary of achieved, partially achieved, and not achieved metrics. A dashboard using stoplight metrics will likely be one high-level visual to pinpoint the severity of issues and the level of risk and impact associated with any not achieved results.

Table 2. Example of Stoplight Metrics
- Green: Measures marked in green would indicate that no issues were identified with the accuracy, reliability, or representativeness for that measure.
- Yellow: Measures marked in yellow would indicate potential risks that should be looked into as soon as feasible.
- Red: Measures marked in red would indicate major concerns that need to be reviewed and addressed promptly.

There will also be a more detailed report of the findings, which will describe what was measured and what the results were. The audit report may also include recommendations to improve Internal SPM, such as refining business rules or methodology if issues are identified. Recommendations could also result in change requests for system and process modifications. Likewise, the audit report may include recommendations for changes to the audit process itself, including alterations to scope, objectives, metrics, information collection, or reporting.

The audit report will be provided to the USPS on a quarterly basis. The quarterly audit results will feed into an annual audit summary report for USPS leadership. If the SPM system is approved as the basis for reporting market-dominant product service performance to the Commission, information from the audit report may also be used to support reporting requirements in the Annual Compliance Report (ACR).
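The plan does not spell out how a measure's result becomes a stoplight color. The sketch below assumes that achieved maps to green, partially achieved to yellow, and not achieved to red, and classifies a higher-is-better metric against two cut-offs. The `achieved_at` values echo Appendix A (80 percent and 70 percent); the `partial_at` values, the measure values, and all names (`Outcome`, `categorize`, `dashboard`) are hypothetical stand-ins for the Appendix B compliance thresholds.

```python
from collections import Counter
from enum import Enum
from typing import Dict


class Outcome(Enum):
    ACHIEVED = "achieved"
    PARTIALLY_ACHIEVED = "partially achieved"
    NOT_ACHIEVED = "not achieved"


# Assumed outcome-to-color mapping for the Table 2 dashboard.
STOPLIGHT = {
    Outcome.ACHIEVED: "green",
    Outcome.PARTIALLY_ACHIEVED: "yellow",
    Outcome.NOT_ACHIEVED: "red",
}


def categorize(value: float, achieved_at: float, partial_at: float) -> Outcome:
    """Classify a higher-is-better metric: at or above achieved_at is achieved,
    at or above partial_at is partially achieved, anything lower is not achieved.
    Both cut-offs here are illustrative, not the Appendix B thresholds."""
    if partial_at > achieved_at:
        raise ValueError("partial_at must not exceed achieved_at")
    if value >= achieved_at:
        return Outcome.ACHIEVED
    if value >= partial_at:
        return Outcome.PARTIALLY_ACHIEVED
    return Outcome.NOT_ACHIEVED


def dashboard(results: Dict[str, Outcome]) -> Counter:
    """Count measures per stoplight color for the high-level quarterly summary."""
    return Counter(STOPLIGHT[outcome] for outcome in results.values())


# Hypothetical quarter: values are illustrative only.
results = {
    "Measure 2": categorize(0.85, achieved_at=0.80, partial_at=0.70),
    "Measure 19": categorize(0.74, achieved_at=0.80, partial_at=0.70),
    "Measure 23": categorize(0.55, achieved_at=0.70, partial_at=0.60),
}
print(dashboard(results))
```

Because the colors are derived from outcomes rather than entered by hand, the dashboard and the detailed report cannot disagree about a measure's status.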

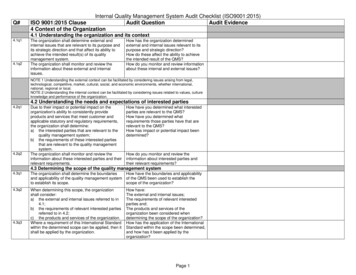

APPENDIX A - AUDIT PLAN MEASURES

Table A-1 below displays the audit questions, criteria, and information used to evaluate the compliance of the sampling process.

Table A-1. Audit Plan Measures

Measure 1
Phase: First Mile
Level 1: Is First Mile (FM) data accurate?
Level 2: Are Design (e.g., requirements, SOPs, business rules) and Execution of First Mile processes accurate?
Level 3: Do carriers accurately complete First Mile sampling?
Audit Criteria (Yardstick): Procedures for sampling should be written and training provided to employees responsible for performing sampling.
Audit Information: Validate that the sampling procedures are up-to-date and comprehensive.

Measure 2
Phase: First Mile
Level 1: Is FM data accurate?
Level 2: Are Design (e.g., requirements, SOPs, business rules) and Execution of First Mile processes accurate?
Level 3: Do carriers accurately complete First Mile sampling?
Audit Criteria (Yardstick): Carrier sampling weekly compliance rates should consistently exceed 80 percent for most districts.
Audit Information: Validate whether processes exist to verify the accuracy of the sampling responses.

Measure 3
Phase: First Mile
Level 1: Is FM data accurate?
Level 2: Are Design (e.g., requirements, SOPs, business rules) and Execution of First Mile processes accurate?
Level 3: Is the collection box density data accurate and complete?
Audit Criteria (Yardstick): Density tests should be performed on every active collection point annually, and the data collected should accurately reflect the volume in the boxes during the testing period.
Audit Information: Verify that there is a process to load/use Collection Point Management System (CPMS) density data.

Measure 4
Phase: Last Mile
Level 1: Is Last Mile (LM) data accurate?
Level 2: Are Design (e.g., requirements, SOPs, business rules) and Execution of Last Mile processes accurate?
Level 3: Do carriers accurately complete Last Mile sampling?
Audit Criteria (Yardstick): Procedures for sampling should be written and training provided to employees responsible for performing sampling.
Audit Information: Validate that the sampling procedures are up-to-date and comprehensive.

Measure 5
Phase: Last Mile
Level 1: Is LM data accurate?
Level 2: Are Design (e.g., requirements, SOPs, business rules) and Execution of Last Mile processes accurate?
Level 3: Do carriers accurately complete Last Mile sampling?
Audit Criteria (Yardstick): Carrier sampling weekly compliance rates should consistently exceed 80 percent for most districts.
Audit Information: Validate whether processes exist to verify the accuracy of the sampling responses.

Measure 6
Phase: Reporting/Processing Duration Data
Level 1: Is Reporting/Data accurate?
Level 2: Are Design (e.g., requirements, SOPs, business rules) and Execution of Reporting processes accurate?
Level 3: Are reporting procedures and requirements established and executed per design to produce accurate results?
Audit Criteria (Yardstick): Reporting requirements should be documented and aligned with regulatory reporting requirements. Quarterly verification of requirements and report contents should occur.

Measure 7
Phase: Reporting/Processing Duration Data
Level 1: Is Reporting/Data accurate?
Level 2: Are Design (e.g., requirements, SOPs, business rules) and Execution of Reporting processes accurate?
Level 3: Are reporting procedures and requirements established and being executed per design to produce accurate results?
Audit Criteria (Yardstick): Exclusions, exceptions, and limitations should be documented in the Internal Service Performance Measurement (SPM) system and the final reports.
Audit Information: Validate whether Attachments A (Exclusion Reasons Breakdown) and B (Total Measured/Unmeasured) are accurately produced for Internal SPM.
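Measures 2 and 5 hinge on two undefined words, "consistently" and "most." The sketch below is one possible reading, not the audit organization's method: it assumes a weekly compliance rate is completed sampling requests divided by issued requests, that "consistently" means every week of the quarter exceeds 80 percent, and that "most districts" means more than half. The `Week` alias, the function names, the district names, and the weekly counts are hypothetical.

```python
from typing import Dict, List, Tuple

Week = Tuple[int, int]  # (sampling requests completed, sampling requests issued)


def weekly_rate(week: Week) -> float:
    """Share of issued sampling requests that carriers completed in one week."""
    completed, issued = week
    if issued <= 0:
        raise ValueError("a week must have at least one issued request")
    return completed / issued


def consistently_compliant(weeks: List[Week], threshold: float = 0.80) -> bool:
    """Assumption: 'consistently' means every week in the quarter exceeds the threshold."""
    return all(weekly_rate(w) > threshold for w in weeks)


def measure_met(districts: Dict[str, List[Week]], threshold: float = 0.80,
                most: float = 0.5) -> bool:
    """Assumption: 'most districts' means more than `most` of all districts."""
    flags = [consistently_compliant(weeks, threshold) for weeks in districts.values()]
    if not flags:
        raise ValueError("no districts supplied")
    return sum(flags) / len(flags) > most


districts = {
    "District A": [(90, 100), (85, 100)],
    "District B": [(95, 100), (80, 100)],  # exactly 80% does not *exceed* 80%
    "District C": [(81, 100), (88, 100)],
}
print({d: consistently_compliant(w) for d, w in districts.items()})
print(measure_met(districts))
```

A stricter or looser reading (for example, allowing one low week per quarter) would only change `consistently_compliant`.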

Measure 8
Phase: Reporting/Processing Duration Data
Level 1: Is Reporting/Data accurate?
Level 2: Are Design (e.g., requirements, SOPs, business rules) and Execution of Reporting processes accurate?
Level 3: Do non-automated exclusions and special exceptions (e.g., local holidays, noncertified mail, proxy data, low volume exclusions) create unbiased performance estimates?
Audit Criteria (Yardstick): A documented approval process should be in place and be followed for all manual/special exclusions and exceptions and for adding or changing exclusions or other business rules.
Audit Information: Review the approval process for all manual exclusions and special exceptions. Review the process and decisions for any exclusions to confirm that the focus is on measurement accuracy and not biased.

Measure 9
Phase: First Mile
Level 1: Is FM data reliable?
Level 2: Are First Mile results designed and executed to produce reliable results?
Level 3: Is the use of imputations for FM Profile results limited so that FM measurement represents the district's performance?
Audit Criteria (Yardstick): Most districts should have a limited volume for which imputed results are used within the quarter.
Audit Information: Review the volume of mail for which imputations are required.

Measure 10
Phase: First Mile
Level 1: Is FM data reliable?
Level 2: Are First Mile results designed and executed to produce reliable results?
Level 3: Is the use of proxy data for FM Profile results limited so that FM measurement represents the district's performance?
Audit Criteria (Yardstick): Most districts should have a limited volume for which proxy results are used within the quarter.
Audit Information: Review the volume of mail where proxy data are used.
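For Measures 9 and 10 (and the Last Mile counterparts, Measures 11 and 12), the audit information is the volume of mail for which imputations or proxy data are used. A minimal sketch, assuming volumes are available per district as (affected pieces, total pieces), that "limited" is expressed as a hypothetical cap on the affected share, and that "most districts" means more than half. The function names, district names, volumes, and the 5 percent cap are illustrative; the same functions apply unchanged to proxy volumes.

```python
from typing import Dict, List, Tuple


def shares(volumes: Dict[str, Tuple[int, int]]) -> Dict[str, float]:
    """{district: (imputed_or_proxy_pieces, total_pieces)} -> share of volume affected.

    Districts with no measured volume are skipped rather than divided by zero.
    """
    return {d: part / total for d, (part, total) in volumes.items() if total > 0}


def over_cap(district_shares: Dict[str, float], cap: float) -> List[str]:
    """Districts whose imputed (or proxy) share exceeds the hypothetical cap."""
    return sorted(d for d, s in district_shares.items() if s > cap)


def most_within_cap(district_shares: Dict[str, float], cap: float, most: float = 0.5) -> bool:
    """True when more than `most` of the districts stay at or under the cap."""
    if not district_shares:
        raise ValueError("no districts with measured volume")
    within = sum(1 for s in district_shares.values() if s <= cap)
    return within / len(district_shares) > most


fm_imputed = {"District A": (20, 1000), "District B": (150, 1000), "District C": (0, 500)}
fm_shares = shares(fm_imputed)
print(over_cap(fm_shares, cap=0.05), most_within_cap(fm_shares, cap=0.05))
```

Listing the districts over the cap, rather than only returning a pass/fail flag, gives the auditor a starting point for the follow-up review described in Section 3.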

Measure 11
Phase: Last Mile
Level 1: Is Last Mile (LM) data reliable?
Level 2: Are Last Mile results designed and executed to produce reliable results?
Level 3: Is the use of imputations for LM Profile results limited so that LM measurement represents the district's performance?
Audit Criteria (Yardstick): Most districts should have a limited volume for which imputed results are used within the quarter.
Audit Information: Review the volume of mail for which imputations are required.

Measure 12
Phase: Last Mile
Level 1: Is LM data reliable?
Level 2: Are Last Mile results designed and executed to produce reliable results?
Level 3: Is the use of proxy data for LM Profile results limited so that LM measurement represents the district's performance?
Audit Criteria (Yardstick): Most districts should have a limited volume for which proxy results are used within the quarter.
Audit Information: Review the volume of mail where proxy data are used.

Measure 13
Phase: Reporting/Processing Duration Data
Level 1: Is Reporting/Data reliable?
Level 2: Does the Internal SPM system produce reliable results?
Level 3: Are changes to SPM documented and available for reference?
Audit Criteria (Yardstick): Program and SPM changes are documented in an Internal SPM repository for reference.
Audit Information: Review documentation of systems' modifications and validate availability and robustness.

Measure 14
Phase: Reporting/Processing Duration Data
Level 1: Is Reporting/Data reliable?
Level 2: Does the Internal SPM system produce reliable results?
Level 3: Are changes to SPM documented and available for reference?
Audit Criteria (Yardstick): PRC Reports denote major methodology and process changes in quarterly results.
Audit Information: Review method and process changes as well as PRC Report narratives.

Measure 15
Phase: Reporting/Processing Duration Data
Level 1: Is Reporting/Data reliable?
Level 2: Does the Internal SPM system produce reliable results?
Level 3: Does the Internal SPM system produce reliable results?
Audit Criteria (Yardstick): For each product measured, the on-time performance scores should have margins of error lower than the designed maximums for the quarter.
Audit Information: Review statistical precision by product and reporting level.

Measure 16
Phase: Reporting/Processing Duration Data
Level 1: Is Reporting/Data reliable?
Level 2: Does the Internal SPM system produce reliable results?
Level 3: Do processes exist to store and maintain official
Audit Criteria (Yardstick): Processes should be established for storing final quarterly results.
Audit Information: Validate that vital scoring data are "frozen" for quarter close and that these data are maintained in accordance with the data retention policy.

Measure 17
Phase: Reporting/Processing Duration Data
Level 1: Is Reporting/Data reliable?
Level 2: Does the Internal SPM system produce reliable results?
Level 3: Does the schedule allow for the production of reliable quarterly results given data and system constraints?
Audit Criteria (Yardstick): All critical defects and data repairs should be completed for the quarter before results are finalized. All data loading, ingestions, associations, consolidations, and aggregations should be completed.
Audit Information: Validate that there is a process to close the quarterly reporting period that includes: 1) reviewing outstanding defects to determine their impact or potential impact; 2) reviewing completed data repairs/defect repairs for comprehensiveness; and 3) reviewing data processing backlogs affecting the quarter.
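Measure 15 compares each product's margin of error with a designed maximum. As an illustration only, the sketch below uses the textbook normal-approximation half-width for a proportion, z * sqrt(p(1 - p)/n), with z = 1.96 for 95 percent confidence. This assumes simple random sampling; the actual Internal SPM estimator and its designed maximums are not described in this plan, so the 0.02 and 0.015 limits below, like the function names, are hypothetical. With 900 of 1,000 pieces on time, the margin of error works out to about 1.86 percentage points.

```python
import math


def margin_of_error(on_time: int, measured: int, z: float = 1.96) -> float:
    """Normal-approximation half-width for an on-time proportion.

    Assumes simple random sampling; a stratified or weighted SPM estimator
    would need its own variance formula.
    """
    if measured <= 0 or not 0 <= on_time <= measured:
        raise ValueError("need 0 <= on_time <= measured and measured > 0")
    p = on_time / measured
    return z * math.sqrt(p * (1 - p) / measured)


def within_design(on_time: int, measured: int, design_max: float, z: float = 1.96) -> bool:
    """True when the margin of error is lower than the (hypothetical) designed maximum."""
    return margin_of_error(on_time, measured, z) < design_max


moe = margin_of_error(900, 1000)  # 90% on time out of 1,000 measured pieces
print(f"{moe:.4f}")
print(within_design(900, 1000, design_max=0.02), within_design(900, 1000, design_max=0.015))
```

Because the half-width shrinks with the square root of the sample size, a product that misses its designed maximum would need roughly four times the measured volume to halve its margin of error under this approximation.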

Measure 18
Phase: First Mile
Level 1: Is FM data representative?
Level 2: Does the execution of the First Mile measurement process yield results that are representative?
Level 3: Do the sampling results indicate that all collection points were included (districts, ZIP Codes, box types, box locations)?
Audit Criteria (Yardstick): Between the first quarter and the end of the current quarter, the percentage of boxes selected for sampling at least one time should be more than the quarterly target percentage.
Audit Information: Across the fiscal year, measure the total number of collection points that were selected for sampling and that resulted in valid samples, to identify whether there is systematic non-coverage of boxes.

Measure 19
Phase: First Mile
Level 1: Is FM data representative?
Level 2: Does the execution of the First Mile measurement process yield results that are representative?
Level 3: Are the sampling response rates sufficient to indicate that non-response biases are immaterial? If not, does the data indicate differences in performance for under-represented groups?
Audit Criteria (Yardstick): Most response rates should exceed 80% at a district level.
Audit Information: Calculate the sampling response rate for each district.

Measure 20
Phase: First Mile
Level 1: Is FM data representative?
Level 2: Does the execution of the First Mile measurement process yield results that are representative?
Level 3: If the sampling response rates do not meet the district threshold, are there differences in performance for under-represented groups?
Audit Criteria (Yardstick): Coverage ratios should meet acceptable thresholds at the 3-digit ZIP Code level for districts with poor coverage.
Audit Information: For district response rates below thresholds, calculate coverage ratios for the 3-digit ZIP Codes.
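Measure 18 tracks, from the first quarter through the current quarter, the share of active collection points selected for sampling at least once, and its audit information asks which points produced valid samples. The sketch below computes both shares and lists active points with no valid sample, which is where systematic non-coverage would show up. The record layout (point ID, fiscal quarter, valid flag), the function name, the point IDs, and the quarterly target value are all hypothetical.

```python
from typing import Iterable, List, Set, Tuple

Sample = Tuple[str, int, bool]  # (collection point ID, fiscal quarter 1-4, valid sample?)


def cumulative_box_coverage(
    active: Set[str], samples: Iterable[Sample], through_quarter: int
) -> Tuple[float, float, List[str]]:
    """Shares of active points selected, and validly sampled, from Q1 through the given quarter.

    Returns (selected_share, valid_share, points_with_no_valid_sample).
    """
    if not active:
        raise ValueError("the collection point frame is empty")
    selected: Set[str] = set()
    valid: Set[str] = set()
    for point, quarter, is_valid in samples:
        # Ignore points outside the active frame and samples outside the window.
        if point in active and 1 <= quarter <= through_quarter:
            selected.add(point)
            if is_valid:
                valid.add(point)
    n = len(active)
    return len(selected) / n, len(valid) / n, sorted(active - valid)


active_points = {"CP1", "CP2", "CP3", "CP4"}
samples = [
    ("CP1", 1, True),
    ("CP2", 1, False),  # selected, but no valid sample in Q1
    ("CP2", 2, True),
    ("CP3", 3, True),   # outside the Q1-Q2 window
    ("CP9", 1, True),   # not in the active frame, ignored
]
selected_share, valid_share, gaps = cumulative_box_coverage(active_points, samples, through_quarter=2)
quarterly_target = 0.45  # hypothetical target percentage, as a fraction
print(selected_share, valid_share, gaps, selected_share > quarterly_target)
```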

Measure 21
Phase: First Mile
Level 1: Is FM data representative?
Level 2: Does the execution of the First Mile measurement process yield results that are representative?
Level 3: Are all valid collection points included in the collection profile (collection points, ZIP Codes, and collection dates)?
Audit Criteria (Yardstick): Most eligible collection points in CPMS should be measured in the profile.
Audit Information: Assemble the full frame of collection points and assess whether all are represented in the profile. If not, determine the extent of missing points.

Measure 22
Phase: First Mile
Level 1: Is FM data representative?
Level 2: Does the execution of the First Mile measurement process yield results that are representative?
Level 3: Are all retail locations included in the final retail results for all shapes, dates, and ZIP Codes?
Audit Criteria (Yardstick): Most eligible retail locations should contribute data to the profile for some dates and mail types in the quarter.
Audit Information: Assemble a full frame of eligible retail locations and measure how many have at least one piece measured during the quarter.

Measure 23
Phase: Processing Duration
Level 1: Is Processing Duration data representative?
Level 2: Does the execution of the Processing Duration and overall measurement process yield results that are representative?
Level 3: How much of the volume is included in measurement for each measured product?
Audit Criteria (Yardstick): At least 70% of the volume is measured for each product.
Audit Information: Take the total measured volume for the quarter and the total population pieces for each product (PRC product reporting levels) and calculate the percent of mail in measurement.

Measure 24
Phase: Processing Duration
Level 1: Is Processing Duration data representative?
Level 2: Does the execution of the Processing Duration and overall measurement process yield results that are representative?
Level 3: Are all destinating ZIP Codes and dates represented in the final data?
Audit Criteria (Yardstick): Most active ZIP Codes should have mail receipts for all products during the quarter.
Audit Information: Summarize the final data from the quarter by destination 5-digit ZIP Code and product and assess against the full frame.
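Measure 23 divides measured volume by population volume for each product and checks the result against the 70 percent floor, while Measures 21, 22, and 24 compare observed data against a full frame. A minimal sketch, assuming both volumes are available as per-product piece counts (for example, population volumes from RPW, as the Processing Duration discussion suggests) and that each frame is a simple list of IDs. Function names, product names, volumes, and ZIP Codes are hypothetical.

```python
from typing import Dict, Iterable, List


def percent_measured(measured: Dict[str, int], population: Dict[str, int]) -> Dict[str, float]:
    """Per-product share of population volume (e.g., RPW pieces) that is in measurement."""
    return {p: measured.get(p, 0) / n for p, n in population.items() if n > 0}


def below_floor(shares: Dict[str, float], floor: float = 0.70) -> List[str]:
    """Products that miss the 'at least 70% of volume measured' criterion."""
    return sorted(p for p, s in shares.items() if s < floor)


def missing_from_frame(frame: Iterable[str], observed: Iterable[str]) -> List[str]:
    """Frame members (collection points, retail sites, 5-digit ZIP Codes) with no data."""
    return sorted(set(frame) - set(observed))


population = {"Product X": 1_000_000, "Product Y": 200_000}  # hypothetical volumes
measured = {"Product X": 820_000, "Product Y": 120_000}
shares = percent_measured(measured, population)
print(shares, below_floor(shares))
print(missing_from_frame(["20001", "20002", "20003"], ["20001", "20003", "20003"]))
```

Returning the missing frame members, not just a count, supports the "determine the extent of missing points" step in Measure 21.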

Measure 25
Phase: Last Mile
Level 1: Is LM data representative?
Level 2: Does the execution of the Last Mile measurement process yield results that are representative?
Level 3: Are the sampling response rates sufficiently high to indicate that nonresponse biases are immaterial?
Audit Criteria (Yardstick): Most response rates should exceed 80% at a District level.
Audit Information: Measure the Last Mile sampling response rate by district.

Measure 26
Phase: Last Mile
Level 1: Is LM data representative?
Level 2: Does the execution of the Last Mile measurement process yield results that are representative?
Level 3: If the sampling response rates do not meet the district threshold, does the data indicate differences in performance for under-represented groups?
Audit Criteria (Yardstick): Coverage ratios should meet acceptable thresholds at the 3-digit ZIP Code level for districts with poor coverage.
Audit Information: For district response rates below thresholds, calculate coverage ratios for the 3-digit ZIP Codes.
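Measures 25 and 26 (like Measures 19 and 20 for First Mile) first compute a response rate per district and then, for districts that do not exceed 80 percent, drill down to 3-digit ZIP Codes. The plan does not define "coverage ratio"; the sketch below assumes it is a 3-digit ZIP Code's share of responses divided by its share of delivery points, so values well below 1 flag under-represented areas. That definition, the function names, the districts, and all counts are hypothetical.

```python
from collections import Counter
from typing import Dict, Iterable, List, Tuple


def response_rates(requests: Iterable[Tuple[str, bool]]) -> Dict[str, float]:
    """(district, responded) per sampling request -> response rate per district."""
    sent: Counter = Counter()
    answered: Counter = Counter()
    for district, responded in requests:
        sent[district] += 1
        answered[district] += int(responded)
    return {d: answered[d] / sent[d] for d in sent}


def below_threshold(rates: Dict[str, float], threshold: float = 0.80) -> List[str]:
    """Districts whose response rate does not exceed the threshold."""
    return sorted(d for d, r in rates.items() if r <= threshold)


def coverage_ratios(response_zip3: Iterable[str], delivery_points: Dict[str, int]) -> Dict[str, float]:
    """Assumed definition: (share of responses in a 3-digit ZIP) / (share of delivery points there).

    A ratio well below 1 suggests that 3-digit ZIP Code is under-represented.
    """
    counts = Counter(response_zip3)
    n_resp = sum(counts.values())
    n_pop = sum(delivery_points.values())
    if n_resp == 0 or n_pop == 0:
        return {}
    return {z: (counts[z] / n_resp) / (dp / n_pop) for z, dp in delivery_points.items() if dp > 0}


requests = [("D1", True)] * 9 + [("D1", False)] + [("D2", True)] * 7 + [("D2", False)] * 3
rates = response_rates(requests)
print(rates, below_threshold(rates))
# Drill into the low-response district with hypothetical 3-digit ZIP Code data.
print(coverage_ratios(["200"] * 5 + ["201"] * 2, {"200": 5000, "201": 5000}))
```

Comparing performance between ZIP Codes with low and normal ratios would then address the Level 3 question about differences for under-represented groups.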

APPENDIX B - AUDIT COMPLIANCE EVALUATION SCHEME

Table B-1 below presents the audit compliance evaluation scheme and relevant compliance thresholds. These thresholds should be used to categorize each compliance measure as achieved, parti
