
Big Data Hadoop and Spark Developer

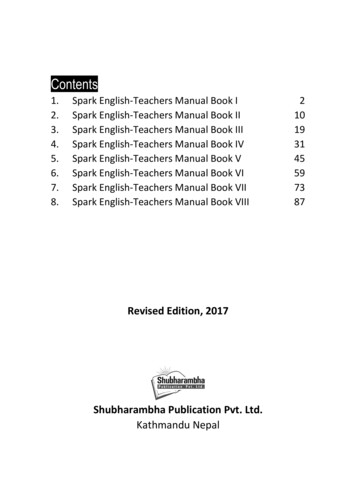

Table of Contents:
- Program Overview
- Program Features
- Delivery Mode
- Prerequisites
- Target Audience
- Key Learning Outcomes
- Certification Alignment
- Certification Details and Criteria
- Course Curriculum
- Course End Projects
- Customer Reviews
- About Us

Program Overview:
This Big Data Hadoop Certification course is designed to give you in-depth knowledge of the big data framework using Hadoop and Spark. In this hands-on big data course, you will execute real-life, industry-based projects using Simplilearn’s integrated labs.

Program Features:
- 74 hours of blended learning
- 22 hours of online self-paced learning
- 52 hours of instructor-led training
- Four industry-based course-end projects
- Interactive learning with integrated labs
- Curriculum aligned to Cloudera CCA175 certification exam
- Training on essential big data and Hadoop ecosystem tools, and Apache Spark
- Dedicated mentoring session from faculty of industry experts

Delivery Mode:
Blended - Online self-paced learning and live virtual classroom

Prerequisites:
It is recommended that you have knowledge of:
- Core Java
- SQL

Target Audience:
- Analytics professionals
- Senior IT professionals
- Testing and mainframe professionals
- Data management professionals
- Business intelligence professionals
- Project managers
- Graduates looking to begin a career in big data analytics

Key Learning Outcomes:
This Big Data Hadoop and Spark Developer course will enable you to:
- Learn how to navigate the Hadoop ecosystem and understand how to optimize its use
- Ingest data using Sqoop, Flume, and Kafka
- Implement partitioning, bucketing, and indexing in Hive
- Work with RDD in Apache Spark
- Process real-time streaming data
- Perform DataFrame operations in Spark using SQL queries
- Implement User-Defined Functions (UDF) and User-Defined Aggregate Functions (UDAF) in Spark

Certification Alignment:
Our curriculum is aligned to the Cloudera CCA175 certification exam.

Certification Details and Criteria:
- Completion of at least 85 percent of online self-paced learning or attendance of one live virtual classroom
- A score of at least 75 percent in the course-end assessment
- Successful evaluation in at least one project

Course Curriculum:

Lesson 01 - Introduction to Big Data and Hadoop
- Introduction to Big Data
- Big Data Analytics
- What is Big Data?
- Four Vs of Big Data
- Case Study: Royal Bank of Scotland
- Challenges of Traditional System
- Distributed Systems
- Introduction to Hadoop
- Components of Hadoop Ecosystem Part One
- Components of Hadoop Ecosystem Part Two
- Components of Hadoop Ecosystem Part Three
- Commercial Hadoop Distributions
- Demo: Walkthrough of Simplilearn Cloudlab
- Key Takeaways
- Knowledge Check

Lesson 02 - Hadoop Architecture Distributed Storage (HDFS) and YARN
- What is HDFS
- Need for HDFS
- Regular File System vs HDFS
- Characteristics of HDFS
- HDFS Architecture and Components
- High Availability Cluster Implementations
- HDFS Component File System Namespace
- Data Block Split
- Data Replication Topology
- HDFS Command Line
- Demo: Common HDFS Commands
- Practice Project: HDFS Command Line
- YARN Introduction
- YARN Use Case
- YARN and its Architecture
- Resource Manager
- How Resource Manager Operates
- Application Master
- How YARN Runs an Application
- Tools for YARN Developers
- Demo: Walkthrough of Cluster Part One
- Demo: Walkthrough of Cluster Part Two
- Key Takeaways
- Knowledge Check
- Practice Project: Hadoop Architecture, Distributed Storage (HDFS) and YARN

Lesson 03 - Data Ingestion into Big Data Systems and ETL
- Data Ingestion Overview Part One
- Data Ingestion Overview Part Two
- Apache Sqoop
- Sqoop and Its Uses
- Sqoop Processing
- Sqoop Import Process
- Sqoop Connectors
- Demo: Importing and Exporting Data from MySQL to HDFS
- Practice Project: Apache Sqoop
- Apache Flume
- Flume Model
- Scalability in Flume
- Components in Flume’s Architecture
- Configuring Flume Components
- Demo: Ingest Twitter Data
- Apache Kafka
- Aggregating User Activity Using Kafka
- Kafka Data Model
- Partitions
- Apache Kafka Architecture
- Demo: Setup Kafka Cluster
- Producer Side API Example
- Consumer Side API
- Consumer Side API Example
- Kafka Connect
- Demo: Creating Sample Kafka Data Pipeline Using Producer and Consumer
- Key Takeaways
- Knowledge Check
- Practice Project: Data Ingestion Into Big Data Systems and ETL

Lesson 04 - Distributed Processing MapReduce Framework and Pig
- Distributed Processing in MapReduce
- Word Count Example
- Map Execution Phases
- Map Execution Distributed Two Node Environment
- MapReduce Jobs
- Hadoop MapReduce Job Work Interaction
- Setting Up the Environment for MapReduce Development
- Set of Classes
- Creating a New Project
- Advanced MapReduce
- Data Types in Hadoop
- Output Formats in MapReduce
- Using Distributed Cache
- Joins in MapReduce
- Replicated Join
- Introduction to Pig
- Components of Pig
- Pig Data Model
- Pig Interactive Modes
- Pig Operations
- Various Relations Performed by Developers
- Demo: Analyzing Web Log Data Using MapReduce
- Demo: Analyzing Sales Data and Solving KPIs Using Pig
- Practice Project: Apache Pig
- Demo: Wordcount
- Key Takeaways
- Knowledge Check
- Practice Project: Distributed Processing - MapReduce Framework and Pig

Lesson 05 - Apache Hive
- Hive SQL over Hadoop MapReduce
- Hive Architecture
- Interfaces to Run Hive Queries
- Running Beeline from Command Line
- Hive Metastore
- Hive DDL and DML
- Creating New Table
- Data Types
- Validation of Data
- File Format Types
- Data Serialization
- Hive Table and Avro Schema
- Hive Optimization Partitioning Bucketing and Sampling
- Non-Partitioned Table
- Data Insertion
- Dynamic Partitioning in Hive
- Bucketing
- What Do Buckets Do?
- Hive Analytics UDF and UDAF
- Other Functions of Hive
- Demo: Real-time Analysis and Data Filtration
- Demo: Real-World Problem
- Demo: Data Representation and Import Using Hive
- Key Takeaways
- Knowledge Check
- Practice Project: Apache Hive

Lesson 06 - NoSQL Databases HBase
- NoSQL Introduction
- Demo: YARN Tuning
- HBase Overview
- HBase Architecture
- Data Model
- Connecting to HBase
- Practice Project: HBase Shell
- Key Takeaways
- Knowledge Check
- Practice Project: NoSQL Databases - HBase

Lesson 07 - Basics of Functional Programming and Scala
- Introduction to Scala
- Demo: Scala Installation
- Functional Programming
- Programming With Scala
- Demo: Basic Literals and Arithmetic Programming
- Demo: Logical Operators
- Type Inference Classes Objects and Functions in Scala
- Demo: Type Inference Functions Anonymous Function and Class
- Collections
- Types of Collections
- Demo: Five Types of Collections
- Demo: Operations on List
- Scala REPL
- Demo: Features of Scala REPL
- Key Takeaways
- Knowledge Check
- Practice Project: Apache Hive

Lesson 08 - Apache Spark Next-Generation Big Data Framework
- History of Spark
- Limitations of MapReduce in Hadoop
- Introduction to Apache Spark
- Components of Spark
- Application of In-memory Processing
- Hadoop Ecosystem vs Spark
- Advantages of Spark
- Spark Architecture
- Spark Cluster in Real World
- Demo: Running Scala Programs in Spark Shell
- Demo: Setting Up Execution Environment in IDE
- Demo: Spark Web UI
- Key Takeaways
- Knowledge Check
- Practice Project: Apache Spark Next-Generation Big Data Framework

Lesson 09 - Spark Core Processing RDD
- Introduction to Spark RDD
- RDD in Spark
- Creating Spark RDD
- Pair RDD
- RDD Operations
- Demo: Spark Transformation Detailed Exploration Using Scala Examples
- Demo: Spark Action Detailed Exploration Using Scala
- Caching and Persistence
- Storage Levels
- Lineage and DAG
- Need for DAG
- Debugging in Spark
- Partitioning in Spark
- Scheduling in Spark
- Shuffling in Spark
- Sort Shuffle
- Aggregating Data With Paired RDD
- Demo: Spark Application With Data Written Back to HDFS and Spark UI
- Demo: Changing Spark Application Parameters
- Demo: Handling Different File Formats
- Demo: Spark RDD With Real-world Application
- Demo: Optimizing Spark Jobs
- Key Takeaways
- Knowledge Check
- Practice Project: Spark Core Processing RDD

Lesson 10 - Spark SQL Processing DataFrames
- Spark SQL Introduction
- Spark SQL Architecture
- DataFrames
- Demo: Handling Various Data Formats
- Demo: Implement Various DataFrame Operations
- Demo: UDF and UDAF
- Interoperating With RDDs
- Demo: Process DataFrame Using SQL Query
- RDD vs DataFrame vs Dataset
- Practice Project: Processing DataFrames
- Key Takeaways
- Knowledge Check
- Practice Project: Spark SQL - Processing DataFrames

Lesson 11 - Spark MLlib Modeling Big Data with Spark
- Role of Data Scientist and Data Analyst in Big Data
- Analytics in Spark
- Machine Learning
- Supervised Learning
- Demo: Classification of Linear SVM
- Demo: Linear Regression With Real World Case Studies
- Unsupervised Learning
- Demo: Unsupervised Clustering K-means
- Reinforcement Learning
- Semi-supervised Learning
- Overview of MLlib
- MLlib Pipelines
- Key Takeaways
- Knowledge Check
- Practice Project: Spark MLlib - Modeling Big Data With Spark

Lesson 12 - Stream Processing Frameworks and Spark Streaming
- Streaming Overview
- Real-time Processing of Big Data
- Data Processing Architectures
- Demo: Real-time Data Processing
- Spark Streaming
- Demo: Writing Spark Streaming Application
- Introduction to DStreams
- Transformations on DStreams
- Design Patterns for Using foreachRDD
- State Operations
- Windowing Operations
- Join Operations: Stream-Dataset Join
- Demo: Windowing of Real-time Data Processing
- Streaming Sources
- Demo: Processing Twitter Streaming Data
- Structured Spark Streaming
- Use Case: Banking Transactions
- Structured Streaming Architecture Model and Its Components
- Output Sinks
- Structured Streaming APIs
- Constructing Columns in Structured Streaming
- Windowed Operations on Event-time
- Use Cases
- Demo: Streaming Pipeline
- Practice Project: Spark Streaming
- Key Takeaways
- Knowledge Check
- Practice Project: Stream Processing Frameworks and Spark Streaming

Lesson 13 - Spark GraphX
- Introduction to Graph
- GraphX in Spark
- GraphX Operators
- Join Operators
- GraphX Parallel System
- Algorithms in Spark
- Pregel API
- Use Case of GraphX
- Demo: GraphX Vertex Predicate
- Demo: Page Rank Algorithm
- Key Takeaways
- Knowledge Check
- Practice Project: Spark GraphX
- Project Assistance

Course End Projects:
The course includes four real-world, industry-based projects. The successful evaluation of one of the following projects is a part of the certification eligibility criteria:

Project 1: Analyzing Historical Insurance Claims
Use Hadoop features to predict patterns and share actionable insights for a car insurance company.
This project uses New York Stock Exchange data from 2010 to 2016, captured from 500 listed companies. The data set consists of each listed company’s intraday prices and volume traded. The data is used in both machine learning and exploratory analysis projects for the purposes of automating the trading process and predicting the next trading-day winners or losers. The scope of this project is limited to exploratory data analysis.
Domain: BFSI

Project 2: Employee Review of Comment Analysis
Use Hive features for data analysis and share the actionable insights with the HR team for the purpose of taking corrective actions.
The HR team is surfing social media to gather current and ex-employee feedback and sentiments. This information will be used to derive actionable insights and take corrective actions to improve the employer-employee relationship. The data is web-scraped from Glassdoor and contains detailed reviews of 67K employees from Google, Amazon, Facebook, Apple, Microsoft, and Netflix.
Domain: Human Resources

Project 3: K-Means Clustering for Telecommunication Domain
LoudAcre Mobile is a mobile phone service provider that has introduced a new open network campaign. As a part of this campaign, the company has invited users to complain about mobile phone network towers in their area if they are experiencing connectivity issues with their present mobile network. LoudAcre has collected the dataset of users who have complained.
Domain: Telecommunication

Project 4: Market Analysis in Banking Domain
Our client, a Portuguese banking institution, ran a marketing campaign to convince potential customers to invest in a bank term deposit promotion. The marketing campaign pitches were delivered by phone calls. Often, however, the same customer was contacted more than once. You have to perform the marketing analysis of the data generated by this campaign, keeping in mind the redundant calls.
Domain: Banking (Market Analysis)

Tools Covered:

Customer Reviews:

Hari Harasan
Technical Architect at Infosys
The session on Map reducer was really interesting, a complex topic was very well explained in an understandable manner.

Vignesh Balasubramanian
Senior Operations Professional @ IBM
I have enrolled for Big Data Hadoop Spark developer course from Simplilearn. The course was well organized, covering all the root concepts and relevant real-time experience. The trainer was well equipped to solve all the doubts during the training. Cloud lab facility and materials provided were on point.

Anusha T S
Software Developer at Zibtek
I have enrolled in Big Data Hadoop and Spark Developer from Simplilearn. I like the teaching method of the trainers. He was very helpful and knowledgeable. Overall I am very happy with Simplilearn. Their cloud labs are also very user-friendly. I would highly recommend my friends to take a course from here and upskill themselves.

Permoon Ansari
Project Manager at IBM
Gautam has been the best trainer throughout the session. He took ample time to explain the course content and ensured that the class understands the concepts. He's undoubtedly one of the best in the industry. I'm delighted to have attended his sessions.

About Us:
Simplilearn is a leader in digital skills training, focused on the emerging technologies that are transforming our world. Our blended learning approach drives learner engagement and is backed by the industry’s highest completion rates. Partnering with professionals and companies, we identify their unique needs and provide outcome-centric solutions to help them achieve their professional goals.

For more information, please visit our n.com

Founded in 2009, Simplilearn is one of the world’s leading providers of online training for Digital Marketing, Cloud Computing, Project Management, Data Science, IT Service Management, Software Development and many other emerging technologies. Based in Bangalore, India, San Francisco, California, and Raleigh, North Carolina, Simplilearn partners with companies and individuals to address their unique needs, providing training and coaching to help working professionals meet their career goals. Simplilearn has enabled over 1 million professionals and companies across 150 countries to train, certify, and upskill their employees. Simplilearn’s 400 training courses are designed and updated by world-class industry experts. Their blended learning approach combines e-learning classes, instructor-led live virtual classrooms, applied learning projects, and 24/7 teaching assistance. More than 40 global training organizations have recognized Simplilearn as an official provider of certification training. The company has been named the 8th most influential education brand in the world by LinkedIn.

India – United States – Singapore
2009-2019 - Simplilearn Solutions. All Rights Reserved.
The certification names are the trademarks of their respective owners.
