
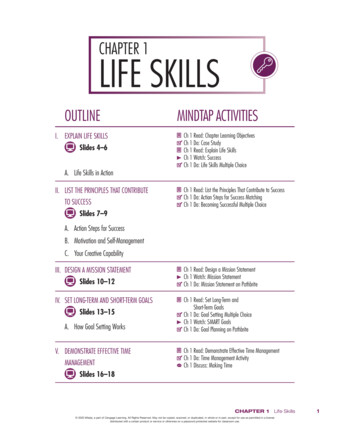

The Case against Social Media Content Regulation

Reaffirming Congress’ Duty to Protect Online Bias, “Harmful Content,” and Dissident Speech from the Administrative State

By Clyde Wayne Crews, Jr.

June 2020

ISSUE ANALYSIS 2020 NO. 4

Executive Summary

As repeatedly noted by most defenders of free speech, expressing popular opinions never needs protection. Rather, it is the commitment to protecting dissident expression that is the mark of an open society. On the other hand, no one has the right to force people to transmit one’s ideas, much less agree with them.

However, the flouting of these principles is now commonplace across the political spectrum. Government regulation of media content has recently gained currency among politicians and pundits of both left and right. In March 2019, for example, President Trump issued an executive order directing that colleges receiving federal research or education grants promote free inquiry. And in May 2020 he issued another addressing alleged censorship and bias by allegedly monopolistic social media companies.

In this political environment, policy makers, pressure groups, and even some technology sector leaders—whose enterprises have benefited greatly from free expression—are pursuing the imposition of online content and speech standards, along with other policies that would seriously burden their emerging competitors.

The current social media debate centers on competing interventionist agendas. Conservatives want social media titans regulated to remain neutral, while liberals tend to want them to eradicate harmful content and address other alleged societal ills. Meanwhile, some maintain that Internet service should be regulated as a public utility.

Blocking or compelling speech in reaction to governmental pressure would not only violate the Constitution’s First Amendment—it would require immense expansion of constitutionally dubious administrative agencies.
These agencies would either enforce government-affirmed social media and service provider deplatforming—the denial to certain speakers of the means to communicate their ideas to the public—or coerce platforms into carrying any message by actively policing that practice. When it comes to protecting free speech, the brouhaha over social media power and bias boils down to one thing: The Internet—and any future communications platforms—needs protection from both the bans on speech sought by the left and the forced conservative ride-along speech sought by the right.

In the social media debate, the problem is not that big tech’s power is unchecked. Rather, the problem is that social media regulation—by either the left or right—would make it that way. Like banks, social media giants are not too big to fail, but regulation would make them that way.

American values strongly favor a marketplace of ideas where debate and civil controversy can thrive. Therefore, the creation of new regulatory oversight bodies and filing requirements to exile politically disfavored opinions on the one hand, and efforts to force the inclusion of conservative content on the other, should both be rejected.

Much of the Internet’s spectacular growth can be attributed to the immunity from liability for user-generated content afforded to social media platforms—and other Internet-enabled services such as discussion boards, review and auction sites, and commentary sections—by Section 230 of the 1996 Communications Decency Act. Host takedown or retention of undesirable or controversial content by “interactive computer services,” in the Act’s words, can be contentious, biased, or mistaken. But Section 230 does not require neutrality in the treatment of user-generated content in exchange for immunity. In fact, it explicitly protects non-neutrality, albeit exercised in “good faith.”

Section 230’s broad liability protection represented an acceleration of a decades-long trend in courts narrowing liability for publishers, republishers, and distributors. Section 230 has already been amended, for example with respect to sex trafficking, but deeper, riskier change is in the air today, advocated by both Republicans and Democrats. Some content removals may happen in bad faith, or companies may violate their own terms of service, but addressing those on a case-by-case basis would be a more fruitful approach. Section 230 notwithstanding, laws addressing misrepresentation or deceptive business practices already impose legal discipline on companies.

Regime-changing regulation of dominant tech firms—whether via imposing online sales taxes, privacy mandates, or speech codes—is likely not to discipline them, but to make them stronger and more impervious to displacement by emerging competitors.

The vast energy expended on accusing purveyors of information, either on mainstream or social media, of bias or of inadequate removal of harmful content should be redirected toward the development of tools that empower users to better customize the content they choose to access.

Existing social media firms want rules they can live with—which translates into rules that future social networks cannot live with. Government cannot create new competitors, but it can prevent their emergence by imposing barriers to market entry.

At risk, too, is the right of political—as opposed to commercial—anonymity online.
Government has a duty to protect dissent, not regulate it, but a casualty of regulation would appear to be future dissident platforms.

The Section 230 special immunity must remain intact for others, lest Congress turn social media’s economic power into genuine coercive political power. Competing biases are preferable to pretended objectivity. Given that reality, Congress should acknowledge the inevitable presence of bias, protect competition in speech, and defend the conditions that would allow future platforms and protocols to emerge in service of the public.

The priority is not that Facebook or Google or any other platform should remain politically neutral, but that citizens remain free to choose alternatives that might emerge and grow with the same Section 230 exemptions from which the modern online giants have long benefited. Policy makers must avoid creating an environment in which Internet giants benefit from protective regulation that prevents the emergence of new competitors in the decentralized infrastructure of the marketplace of ideas.

Introduction

[A]t some level the question of what speech should be acceptable and what is harmful needs to be defined by regulation.1
—Facebook CEO Mark Zuckerberg, to reporters after meeting with French President Emmanuel Macron in Paris, May 10, 2019

Congress shall make no law … abridging the freedom of speech, or of the press.
—U.S. Constitution, Amendment I

As repeatedly noted by most defenders of free speech, expressing popular opinions never needs protection. Rather, it is the commitment to protecting dissident expression that is the mark of an open society. On the other hand, no one has the right to force people to transmit one’s ideas, much less agree with them.

However, the flouting of these principles is now commonplace across the political spectrum. Government regulation of media content seemed unthinkable not so long ago, yet it has recently gained currency among politicians and pundits of both left and right. In March 2019, for example, President Trump issued an executive order directing that colleges receiving federal research or education grants promote free inquiry.2 In May 2020 he issued another addressing alleged censorship and bias by allegedly “monopolistic” social media companies.3

In this political environment, policy makers, pressure groups, and even some technology sector leaders—whose enterprises have benefited greatly from free expression—are pursuing the imposition of online content and speech standards, along with other policies that would seriously burden their emerging competitors. Examples of what many wish to regulate include political neutrality, bias, harmful content, bots, advertising practices, privacy standards, election “meddling,” and more.4

The current social media debate boils down to competing interventionist agendas.
Conservatives want social media titans regulated to remain neutral, while liberals tend to want them to eradicate harmful content and address other alleged societal ills. Meanwhile, Google and Facebook now hold that Internet service should be regulated as a public utility.

Either blocking or compelling speech in reaction to governmental pressure would not only violate the Constitution’s First Amendment—it would require immense expansion of constitutionally dubious administrative agencies.5 These agencies would either enforce government-affirmed social media and service provider deplatforming—the denial to certain speakers of the means to communicate their ideas to the public—or coerce platforms into carrying any message by actively policing that practice. When it comes to protecting free speech, the brouhaha over social media power and bias boils down to one thing: The Internet needs protection from both the bans on speech sought by the left and the forced conservative ride-along speech sought by the right. So do future communications platforms.

As all-encompassing as today’s social media platforms seem, they are only a snapshot in time, prominent parts of a virtually boundless and ever-changing Internet and media landscape. The Internet represents a transformative leap in communications not because of top-down rules and codes governing acceptable expression, but because it has radically expanded broadcast freedom to mankind. More is published in a day than could formerly be produced in months or even years.6

How Public Policy Has Protected Online Free Speech

In the early days of the commercial Internet, different moderating policies of firms like Prodigy and CompuServe raised serious concerns over who could be held liable for defamation, libel, and other harms: Would the responsible party be the host or the person who wrote or uploaded the content?7 This was a question ultimately resolved by Congress rather than the courts.

Much of the Internet’s spectacular growth can be attributed to the immunity from liability for user-generated content afforded to social media platforms—and other Internet-enabled services such as discussion boards, review and auction sites, and commentary sections—by Section 230 of the 1996 Communications Decency Act.8 Host takedown or retention of undesirable or controversial content by “interactive computer services,” in the Act’s words, can be contentious, biased, or mistaken. But Section 230 does not require neutrality in the treatment of user-generated content in exchange for immunity.
In fact, it explicitly protects non-neutrality, albeit exercised in “good faith.” The law reads that providers will not be held liable for:

[A]ny action voluntarily taken in good faith to restrict access to or availability of material that the provider or user considers to be obscene, lewd, lascivious, filthy, excessively violent, harassing, or otherwise objectionable, whether or not such material is constitutionally protected.9

In other words, you can say it, but no one is obligated to help you do so.

Jeff Kosseff, Assistant Professor of Cybersecurity Law at the U.S. Naval Academy and author of The Twenty-Six Words that Created the Internet, maintains that without Section 230 “the two most likely options for a risk-averse platform would be either to refrain from proactive moderation entirely, and only take down content upon receiving a complaint, or not to allow any user-generated content.”10 Similarly, Internet Association President Michael Beckerman argues, “Eliminating the ability of platforms to moderate content would mean a world full of 4chans and highly curated outlets—but nothing in between.”11 (The online message board 4chan has become infamous for its bare-bones moderation and toleration of bigoted content.) Platforms are not required to act in a fair or neutral manner, and government cannot require them to do so.12

Section 230’s “broad liability shield for online content distributors”—in the words of Mercatus Center scholars Brent Skorup and Jennifer Huddleston—represented an acceleration of a decades-long trend in courts narrowing liability for publishers, republishers, and distributors more generally,13 as well as a concept of “conduit immunity” imparted to intermediaries.14 Section 230 has already been amended, for example with respect to sex trafficking.15 But deeper, riskier change is in the air today, advocated by both Republicans and Democrats.16

Some content removals may happen in bad faith, or companies may violate their own terms of service, but addressing those on a case-by-case basis would be a more fruitful approach. Section 230 notwithstanding, laws addressing misrepresentation or deceptive business practices already expose companies to legal discipline.
Still, some officials have called for using laws barring deceptive business practices to charge social media platforms with making false statements about viewpoint neutrality.17

Regime-changing regulation of dominant tech firms—whether via imposing online sales taxes,18 privacy mandates,19 or speech codes—is likely not to discipline them, but to make them stronger and more impervious to displacement by emerging competitors.20 Given the proliferation of media competition across platforms and online infrastructure, none of these firms can be considered essential, much less monopolistic, as some critics claim. However, regulation can backfire and make them so. For example, Facebook CEO Mark Zuckerberg acknowledged, in testimony to Congress in 2018, that privacy regulation would benefit Facebook.21 That sentiment was reiterated by Facebook policy head Richard Allan, who favors a “regulatory framework” to address disinformation and fake news.22

From a given corporation’s perspective, such concessions are strategically more favorable alternatives to the threatened corporate breakups, large fines, or even personal liability for management.23

While LinkedIn and Reddit leaders have cautioned against social media regulation,24 several leading tech corporate chiefs—including Apple’s Tim Cook, Twitter’s Jack Dorsey, Snap, Inc.’s Evan Spiegel, Y Combinator’s Sam Altman, and former Instagram CEO Kevin Systrom—have expressed support for regulating elements of big tech, in social media and elsewhere.25 None has been as prominent and unambiguous in support for top-down regulation as Facebook’s Zuckerberg, who in a March 2019 Washington Post op-ed called for governments to bar certain kinds of “harmful” speech and require detailed official reporting obligations for tech firms. This proposal is in direct conflict with the protections of Section 230.26

Conservatives fixated on social media bias are reluctant to appreciate the immeasurable benefit they receive from Section 230.27 It has never acted as a subsidy to anyone, applying equally to all—publishers, like newspapers, have websites, too. Even if biases by some platforms were a valid concern, there is no precedent for the reach conservatives enjoy now.28 Those who complain of bias or censorship on YouTube—such as Prager University—pay nothing for the hosting that can reach millions and stand to benefit from similar freedom.
Just this year, the Ninth Circuit Court of Appeals ruled that, “[D]espite YouTube’s ubiquity and its role as a public-facing platform, it remains a private forum, not a public forum subject to judicial scrutiny under the First Amendment.”29 This disintermediation—the rapid erosion of the influence of major media gatekeepers—has enabled the growth of a more diverse landscape, thanks in large part to the collapse of barriers to entry, including cost.

Most ominously, conservatives fail to appreciate the dangers of common cause with advocates of content or operational regulation on the left. Given the ideologically liberal near-monoculture at publicly funded universities, major newspapers, and networks, and within the vast preexisting administrative apparatus of the federal government—and in the management and corporate culture of most of Silicon Valley, for that matter—it is the left that has more to lose from not regulating the Internet, the sole cultural medium not largely dominated by liberal perspectives.

Nothing is more fully open to independent or dissenting voices than the Internet; regulation would change that. For that reason alone, tomorrow’s conservatives need to rely upon Section 230 immunities more than ever to preserve their voices. Any expansion of federal agency oversight of Internet content standards stands to be repurposed to the aims of the left in the long run.

Yet, the threat of online speech regulation now comes in bipartisan clothing. The Deceptive Experiences to Online Users Reduction (DETOUR) Act (S. 1084), cosponsored by Sens. Mark Warner (D-VA) and Deb Fischer (R-NE), seeks to address “dark patterns” and the alleged tricking of users into handing over data.30 Warner seeks still broader regulation of tech firms, including changes to Section 230,31 but probably will not agree with conservatives on what constitutes disinformation or valid exercise of the civil right of anonymous communication.32

At bottom, neither left nor right now defends the right to platform bias. Both sides must hit pause in order to protect the right to express controversial viewpoints enshrined in the First Amendment and affirm the property rights of “interactive computer services” of today and tomorrow. The opponents, superficially in conflict, agree in principle that government should have the final say. In the wake of any bipartisan tech regulation “victory,” entrenched unelected bureaucrats at Washington regulatory agencies will have free rein to define “objectivity” as they see fit—highly likely to the detriment of conservatives and classical liberals.

Countering the Tech Regulatory Campaign of the Right

Unlike the left and its emphasis on culling “harmful content,” conservatives seek forced carriage in service of political neutrality; they want content included, not removed. Many on the right insist bias prevails and that monopolistic social media routinely censor search results and user-generated content. To supposedly remedy that situation, they would alter Section 230’s immunities and require objectivity and even official certifications of non-bias—to be determined by tomorrow’s bureaucrats.

On Twitter, President Donald Trump thundered at “the tremendous dishonesty, bias, discrimination and suppression practiced by certain companies.
We will not let them get away with it much longer.”33 He has also expressed a desire to sue social media firms.34

Then in July 2019, Sens. Ted Cruz (R-TX) and Josh Hawley (R-MO) wrote to the Federal Trade Commission (FTC) seeking investigation into how major tech companies curate content, while—echoing progressive calls for regulation—accusing social media of being “powerful enough to—at the very least—sway elections.” “They control the ads we see, the news we read, and the information we digest,” they complain. “And they actively censor some content and amplify other content based on algorithms and intentional decisions that are completely nontransparent.”35

Meanwhile, many on the left hold the same elitist opinion of people’s faculties. Sen. Hawley has now introduced several pieces of nanny-state legislation that should be of concern in today’s environment.36

Lost in all this are the simple facts that social media cannot censor and that a non-depletable Internet cannot be monopolized unless government circumscribes it. Control, amplification, or content removal by private actors are not coercion or censorship, but elemental to free speech. Manipulating an algorithm is likewise an exercise of free speech, even if the July 2019 White House Social Media Summit maintained otherwise.37 Censorship requires government force to either block or compel speech, and there can be no private media monopoly so long as there is no government censorship.38 One has a right to speak, but no one exercising that right has a right to force others to supply them with a Web platform, newspaper, venue, or microphone.39

The Constitution places limits on state actors, yet Hawley asserts social media must “abide by principles of free speech and First Amendment.”40 These vital principles apply to government, not to the population generally or to communications enabled by private companies.

Hawley’s own legislation, the Ending Support for Internet Censorship Act (S. 1914), would limit Section 230 immunities, deny property rights, compel transmission of speech, and create a large administrative bureaucracy by requiring that a “covered company” obtain an “immunity certification” from the Federal Trade Commission, assuring a supermajority of the unelected commissioners every two years that it does not moderate content provided by others in a politically biased way.41 In similar spirit, the Stop the Censorship Act (H.R. 4027), sponsored by Rep.
Paul Gosar (R-AZ), would limit moderation of “objectionable” content.42 These legislative proposals are highly problematic. One cannot prove a negative. The premise is inoperable, susceptible to partisanship, and hostile to free speech.

Discrimination and bias are the essence of healthy debate, and mandating neutrality by altering Section 230—or via any other means—would remove the right of conservatives to “discriminate” on potentially dominant alternative platforms that could emerge in coming years or decades (just as Google and Facebook emerged to displace prior leaders).

But that is not all. Sen. Hawley also has set his sights on many tech companies’ business models. Hawley has complained: “These companies and others exploit this harvested data to build massive profiles on users and then rake in hundreds of billions of dollars monetizing that data.”43

Indeed, big tech firms use data in extraordinarily sophisticated ways, but so do popular conservative media sites. The Drudge Report looks like 1990s vintage, but it sports a sophisticated architecture under the hood.44 Perhaps “information wants to be free,” but it also wants to be monetized.45 Another Hawley-sponsored bill, the Social Media Addiction Reduction Technology (SMART) Act (S. 2314), would mean future conservative platforms could not monetize and survive as easily.46 Specifically, it would require online content providers to tread carefully in how they write headlines. The bill stipulates:

Not less frequently than once every 3 years, the [Federal Trade] Commission shall submit to Congress a report on the issue of internet addiction and the processes through which social media companies and other internet companies, by exploiting human psychology and brain physiology, interfere with free choices of individuals on the internet (including with respect to the amount of time individuals spend online).47

The current antitrust debate is inextricably bound up with the online speech controversy. Yet, concepts like “common carrier” and “essential facility,” which have been used to rationalize regulation, are not applicable either to a virtually limitless Internet or to its future capabilities.48 Like the expanding universe, the healthy Internet, with all its potential, remains unbounded and not monopolizable. Legislation would change that.

In today’s environment, big tech firms should defend the principle of and right to bias both for themselves and for others, even as they articulate that they strive toward some measure of objectivity, which can be a selling feature.

Competing Biases Are Preferable to Pretended Objectivity

Social media firms deny they are biased.
Google Vice President Karan Bhatia, for example, denies bias in content display or search results.49 He testified as such in July 2019 to the Senate Judiciary Committee, pointing to external validating studies.50 Bhatia reasonably asserts: “Our users overwhelmingly trust us to deliver the most helpful and reliable information out there. Distorting results for political purposes would be antithetical to our mission and contrary to our business interests.”51 To an unappreciated degree, people’s own online behavior, not externally imposed bias, will influence algorithm functionality and what they see.52

Twitter has likewise proclaimed itself an “open communications platform.” Twitter CEO Jack Dorsey claimed, in testimony to Congress in 2018, that the company does not base decisions on political ideology.53 In April 2019 testimony, Twitter Public Policy and Philanthropy Director Carlos Monje told the Senate Judiciary Committee: “We welcome perspectives and insights from diverse sources and embrace being a platform where the open and free exchange of ideas can occur.”54

Google’s 2018 report “The Good Censor,” described in the Senate Judiciary hearing as an internal assessment and marketing exercise, defended “balance” and “well ordered spaces for safety and civility.” These are worthy goals, but, as we have seen, “censoring” can only be a term of art as Google used it in the report (and for which it drew avoidable criticism).55 In any event, many policy makers will never be convinced of non-bias. A negative cannot be proven, nor can total neutrality be achieved given the inevitability of bias in human communication.56 Unknown, undiscovered, or unrealized emergence of bias will likely increase as human knowledge increases. Algorithms understandably boost content that generates likes, shares, and other interactions.57 They also undertake the error-prone and thankless task of looking for and rooting out “toxicity.”58

The upshot is that Google results can never be objective in a way that can satisfy everybody, and there is nothing wrong with that.
Policies will always be in flux, and there can be vagueness and lack of transparency ripe for misunderstanding.59 The only remedy that could satisfy everyone, “All search results must appear first,” is an absurdity.60 In a fluid environment, it is not reasonable to say bias is not reflected in decisions made or in algorithmic results, but revealed or overt bias can be more honest than an insincere, pretended objectivity. Besides, search has evolved into swiping and talking as means of interface, which do not always involve Google and typing text.61

In the July Senate hearing, the promise extracted from Google to grant access to its records on pulled advertisements and videos sets a terrible precedent. While moves like Facebook’s response of appointing former Sen. Jon Kyl to lead a bias audit62 may yield some helpful transparency proposals, they will resolve nothing as far as competing complaints of bias are concerned.63

If conservatives, liberals, or some alliance of them triumph in the quest for content regulation, that would impose control and censorship on everyone.64 That is why competing biases and algorithms are paramount as a matter of principle, whether or not bias exists in select circumstances.

The Danger of Progressive Regulation of “Harmful Content”

“I think a lot of regimes around the world would welcome the call to shut down political opposition on the grounds that it’s harmful speech.”
—Federal Communications Commissioner Brendan Carr65

Some politicians, dominant social media firms, and activists propose to expunge what they see as objectionable content online. They point to hate speech, disinformation, misinformation, and objectionable, harmful, or dehumanizing content.66 Since “misinformation” can translate into “things we disagree with,” this inventory can be expected to grow. Disagreeable or hateful speech is nonetheless constitutionally protected.67

The leading edge of social media regulation consists of a March 2019 proposal from Facebook founder and CEO Mark Zuckerberg and a white paper from Sen. Mark Warner entitled “Potential Policy Proposals for Regulation of Social Media and Technology Firms.”68

Zuckerberg asserts, “I believe we need a more active role for governments and regulators.” He endorses alliances with governments around the world to police harmful online speech, a move that would erect impenetrable global barriers to future social media alternatives to Facebook. In his March 2019 Washington Post op-ed, “The Internet Needs New Rules,” Zuckerberg said:

Lawmakers often tell me we have too much power over speech, and frankly I agree. I’ve come to believe that we shouldn’t make so many important decisions about speech on our own. So we’re creating an independent body so people can appeal our decisions. We’re also working with governments, including French officials, on ensuring the effectiveness of content review systems.

One idea is for third-party bodies to set standards governing the distribution of harmful content and measure companies against those standards. Regulation could set bas
