Transcription

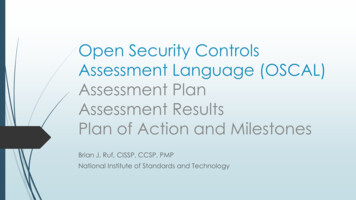

Open Security Controls Assessment Language (OSCAL)
Assessment Plan, Assessment Results, and Plan of Action and Milestones
Brian J. Ruf, CISSP, CCSP, PMP
National Institute of Standards and Technology

Overview

Three new models: Assessment Plan, Assessment Results, and Plan of Action and Milestones.

- Catalog Layer: Catalog Model
- Profile Layer: Profile Model
- Implementation Layer: System Security Plan Model, Component Model
- Assessment Layer: Assessment Plan Model, Assessment Activity Model(s) (Future)
- Assessment Results Layer: Assessment Results Model, Plan of Action & Milestones (POA&M) Model, Possible Other Assessment Results Models (Future)

Background

- Assessment layers were intended to be addressed in OSCAL 2.0.
- FedRAMP has an immediate need to receive a complete ATO package in OSCAL.
- NIST and FedRAMP agreed to expand OSCAL now to enable OSCAL modeling of the FedRAMP Security Assessment Plan (SAP), Security Assessment Report (SAR), and POA&M.
- These models were developed with FedRAMP as the focus, but also in anticipation of other uses, such as continuous assessment.
- Additional assessment layer features, such as additional mechanisms to automate assessment inspections and testing, will still be addressed in OSCAL 2.0.

Importance of Import

Import chains:
- OSCAL Catalog → Control Baseline (OSCAL Profile) → System Security Plan → Assessment Plan → Assessment Results
- OSCAL Catalog → Control Baseline (OSCAL Profile) → System Security Plan → Plan of Action and Milestones (POA&M)

- OSCAL is designed for traceability.
- In most cases, models to the right refer to content in models on the left instead of duplicating that content.
- There are a few exceptions.
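A minimal sketch of import-based traceability, assuming a simplified in-memory stand-in for OSCAL documents. The `Model` class, its `content` and `imports` fields, and the `lookup` helper are hypothetical illustrations, not the OSCAL schema or any OSCAL tool's API. Each model stores only its own content and resolves everything else by following its import chain to the left.

```python
from dataclasses import dataclass, field
from typing import Any, Optional, Tuple


@dataclass
class Model:
    """Hypothetical stand-in for an OSCAL document (not the real OSCAL schema)."""
    name: str
    content: dict = field(default_factory=dict)
    # The model "to the left" that this one imports, e.g. an AP imports an SSP.
    imports: Optional["Model"] = None

    def lookup(self, key: str) -> Tuple[Any, str]:
        """Return (value, defining model name): search locally first, then
        follow the import chain leftward instead of duplicating content."""
        model: Optional[Model] = self
        while model is not None:
            if key in model.content:
                return model.content[key], model.name
            model = model.imports
        raise KeyError(f"{key!r} not found in {self.name} or its imports")


# Illustrative chain: catalog <- profile <- SSP <- assessment plan <- assessment results
catalog = Model("catalog", {"AC-2": "Account Management (control definition)"})
profile = Model("profile", {"baseline": ["AC-2"]}, imports=catalog)
ssp = Model("ssp", {"system-description": "Example system"}, imports=profile)
ap = Model("assessment-plan", {"planned-controls": ["AC-2"]}, imports=ssp)
ar = Model("assessment-results", {"assessed-controls": ["AC-2"]}, imports=ap)

print(ar.lookup("system-description"))  # ('Example system', 'ssp')
print(ar.lookup("AC-2")[1])             # catalog
```

The design choice mirrors the slide: the Assessment Results never copies the system description, so a change in the SSP is seen by every model that imports it.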

Importance of Import: FedRAMP Example

- The SSP refers to the profile and catalog for control definitions.
- The Assessment Plan and Assessment Results refer to the SSP for the system description and architecture.

Content by model:
- OSCAL Catalog: NIST SP 800-53 Control Definitions; NIST SP 800-53A Assessment Objectives (by Control); NIST SP 800-53A Assessment Actions (by Control): TEST, INSPECT, INTERVIEW
- Control Baseline (OSCAL Profile): Controls in this Baseline; FedRAMP Modifications; Empty Test Case Workbook
- System Security Plan: Basic System Information; System Description and Architecture; Users; System Components & Inventory; Locations; CSP's Control Implementation
- Assessment Plan: Planned In-Scope Controls for Assessment; Empty Test Case Workbook (With Adjustments); Planned In-Scope Assessment Objectives and Actions; Organizationally Required Assessment Actions for Each Objective; Planned In-Scope System Details; Rules of Engagement; Planned Schedule and Activities; Planned Tools
- Assessment Results: Actual In-Scope Controls Assessed; Populated Test Case Workbook; Assessment Actions and Findings; Findings for Each Objective; SSP Discrepancies Found; Risk Exposure Table; POA&M Entries; Deviations: FP, OR, RA; Assessed System Details; Rules of Engagement; Actual Events and Activities; Tools Used
- Plan of Action and Milestones (POA&M): POA&M Entries; Deviations: FP, OR, RA

(FP = False Positive, OR = Operational Requirement, RA = Risk Adjustment.)

Overlapping Syntax (AP and AR)

Assessment Plan (AP) vs. Assessment Results (AR), planned vs. actual:
- Metadata (Author: Assessor): in both
- Import: the AP imports the SSP; the AR imports the Assessment Plan
- Objectives: [Planned] in the AP, [Actual] in the AR
- Assessment Subject: [Planned] in the AP, [Actual] in the AR
- Assets: Tools, Teams, ROE, etc. [Planned] in the AP; Tools, Teams, etc. [Actual] in the AR
- Assessment Activities: [Planned] in the AP, [Actual] in the AR
- Results: AR only
- Back Matter: in both

Traditional snapshot approach:
- Assessment Plan: what the assessor plans to do.
- Assessment Results: what the assessor actually did.

Continuous assessment approach:
- Assessment Plan: what should be tested or inspected, how, and at what frequency.
- Assessment Results: a time slice of results.
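The planned-vs.-actual pairing can be illustrated with a small comparison, assuming each model's objectives are reduced to a set of control IDs. The function `planned_vs_actual` and its output keys are hypothetical; the AP and AR record the two sides, and a tool could compare them along these lines.

```python
def planned_vs_actual(planned: set[str], actual: set[str]) -> dict[str, set[str]]:
    """Compare planned objectives (AP) with actual objectives (AR).

    Hypothetical helper: inputs are plain sets of control IDs, not OSCAL documents.
    """
    return {
        "as-planned": planned & actual,             # planned and performed
        "planned-not-performed": planned - actual,  # dropped from scope
        "performed-not-planned": actual - planned,  # added during the assessment
    }


plan_controls = {"AC-2", "AU-6", "SC-7"}    # Objectives [Planned] in the AP
result_controls = {"AC-2", "SC-7", "IA-2"}  # Objectives [Actual] in the AR
diff = planned_vs_actual(plan_controls, result_controls)
for category, controls in diff.items():
    print(category, sorted(controls))
```

Sets are used because only membership matters here; requires Python 3.9+ for the `set[str]` annotations.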

Assessment Plan and Assessment Results

- Common to AP and AR: Objectives, Assessment Subject, Assets, Assessment Activities, Back Matter (general)
- Unique to AR: Results, Evidence in Back Matter

- Assessment Subject: Components and Inventory
- Assets: Test Team; System Owner Test POCs; Rules of Engagement (ROE)
- Results (Current): Findings / Observations; Identified Risks, Calculations; Deviations; Recommendations and Remediation Plans; Evidence Descriptions and Links; Disposition
- Back Matter: Attachments and Evidence (Screen Shots, Photos, Interview Notes)

Assessment Results: Time Slices

Assessment Results (AR):
- Import Assessment Plan
- Objectives
- Assessment Subject
- Assets
- Assessment Activities
- Results (Current): Findings / Observations; Identified Risks, Calculations; Deviations; Recommendations and Remediation Plans; Evidence Descriptions and Links; Disposition Status
- Results (Last Cycle)
- Results (Earlier Cycle)

Traditional snapshot approach:
- The entire current assessment is in one results assembly.
- Each past assessment cycle is in its own results assembly.

Continuous assessment approach:
- Each results assembly is a snapshot in time.
- Example: if testing occurs once per hour, each results assembly represents the testing for that hour.
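A minimal sketch of the continuous-assessment time slices, assuming hourly testing as in the example above. The `Results` dataclass and the helpers `hourly_slices`, `current`, and `slice_at` are hypothetical names; each `Results` instance stands in for one results assembly covering the half-open interval [start, end).

```python
from dataclasses import dataclass, field
from datetime import datetime, timedelta
from typing import List, Optional


@dataclass
class Results:
    """Hypothetical results assembly covering one time slice [start, end)."""
    start: datetime
    end: datetime
    findings: List[str] = field(default_factory=list)


def hourly_slices(first_start: datetime, hours: int) -> List[Results]:
    """Continuous assessment: one results assembly per hour of testing."""
    return [
        Results(first_start + timedelta(hours=h), first_start + timedelta(hours=h + 1))
        for h in range(hours)
    ]


def current(results: List[Results]) -> Results:
    """The most recent results assembly, i.e. "Results (Current)"."""
    return max(results, key=lambda r: r.end)


def slice_at(results: List[Results], moment: datetime) -> Optional[Results]:
    """Find the results assembly whose time slice contains `moment`."""
    for r in results:
        if r.start <= moment < r.end:
            return r
    return None


day = hourly_slices(datetime(2020, 6, 1), 24)
print(len(day), current(day).start)  # 24 2020-06-01 23:00:00
```

In the traditional snapshot approach the same structure holds, but each element would cover an entire assessment cycle rather than an hour.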

Findings, Risks, Analysis, and Flow

1. Gather findings. Some findings demonstrate compliance. Other findings demonstrate a lack of compliance and represent a risk.
2. While performing risk analysis, some risks are closed during the assessment period. Others are identified as false positives. Some open risks have mitigating factors, resulting in a risk adjustment. The remaining open and adjusted risks are typically populated in a risk exposure table.
3. All residual risks are typically entered into the POA&M by the system owner, where they are tracked until closure.

Flow:
- GATHER FINDINGS (Assessment Results Model: Results/Finding Assembly): Assessment Activities; Test Case Workbook (Satisfied, Other Than Satisfied); Automated Tool Results; Penetration Testing Results → Findings
- RISK ANALYSIS (Assessment Results Model: Results/Finding/Risk Assembly): Findings → Identified Risks, which may be Closed, identified as a False Positive, or Risk Adjusted; open and adjusted risks → Risk Exposure Table (Residual Risk)
- RISK TRACKING (POA&M Model: Results/Finding/Risk Assembly): Tracked Risks (including Risk Adjusted and False Positive) → POA&M Items
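The three-step flow can be sketched as a filter pipeline. This is an illustration under stated assumptions: the `RiskStatus` values and the `Finding` fields are hypothetical labels chosen to mirror the slide, not OSCAL's defined risk-status vocabulary.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List, Optional


class RiskStatus(Enum):
    """Hypothetical risk dispositions mirroring the three-step flow."""
    OPEN = "open"
    CLOSED = "closed"                # remediated during the assessment period
    FALSE_POSITIVE = "false-positive"
    RISK_ADJUSTED = "risk-adjusted"  # still open, but with mitigating factors


@dataclass
class Finding:
    id: str
    satisfied: bool                           # True = demonstrates compliance
    risk_status: Optional[RiskStatus] = None  # only meaningful when not satisfied


def identified_risks(findings: List[Finding]) -> List[Finding]:
    """Step 1: findings that show a lack of compliance represent risks."""
    return [f for f in findings if not f.satisfied]


def residual_risks(findings: List[Finding]) -> List[Finding]:
    """Step 2: drop closed and false-positive risks; open and adjusted remain.

    In this sketch the same list feeds the risk exposure table and, in
    step 3, the POA&M entries the system owner tracks until closure.
    """
    keep = {RiskStatus.OPEN, RiskStatus.RISK_ADJUSTED}
    return [f for f in identified_risks(findings) if f.risk_status in keep]


findings = [
    Finding("f1", satisfied=True),
    Finding("f2", satisfied=False, risk_status=RiskStatus.OPEN),
    Finding("f3", satisfied=False, risk_status=RiskStatus.CLOSED),
    Finding("f4", satisfied=False, risk_status=RiskStatus.FALSE_POSITIVE),
    Finding("f5", satisfied=False, risk_status=RiskStatus.RISK_ADJUSTED),
]
print([f.id for f in residual_risks(findings)])  # ['f2', 'f5']
```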

Overlapping Syntax (AR and POA&M)

Assessment Results (AR):
- Metadata
- Import AP
- Objectives
- Assessment Subject
- Assets
- Assessment Activities
- Results:
  - Finding: Objective Status (Assessment Objective ID); Observations; Risk Information (Title, Source, CVE#, Calculations, Severity, Recommendations); Status (open); Vendor Dependencies (Status and Evidence); Deviations Justification (False Positive (FP), Operational Requirement (OR), Risk Adjustment (RA)); SSP Implementation Statement Differential
  - Finding (From Automated Tools / Scanners)
  - Finding (From Penetration Testing)
- Back Matter

Plan of Action and Milestones (POA&M):
- Metadata
- Import SSP
- System Identifier
- Local Definitions
- POA&M Items:
  - POA&M Item: Unique ID, Impacted Control; Observations; Risk Information (Title, Source, CVE#, Severity); Remediation Activities (Plan, Schedule, Resolution Date, Remediation Status); Vendor Dependencies (Evidence and Check-Ins); Deviations (Status: Investigating, Pending, Approved; False Positive (FP); Operational Requirement (OR); Risk Adjustment (RA)); CVSS Metrics
  - POA&M Item
  - POA&M Item
- Back Matter

- Risks with status "open" at the end of testing are transferred to the POA&M using the same OSCAL syntax. The corresponding observations must also be transferred.
- Typically, all remaining assessment risks (those not closed during testing and not verified as false positives) are entered into the POA&M.
- To facilitate this, the syntax is the same for an individual AR finding and an individual POA&M item.
- While some detail, such as objective status, may be filtered out, it can also travel to the POA&M along with the risk information if appropriate.
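Because the finding and POA&M item syntax is shared, a transfer can be a structural copy rather than a translation. The sketch below assumes a hypothetical dict layout (`id`, `risk` with `status`, `observation-ids`, optional `objective-status`); these are illustrative keys, not the actual OSCAL JSON field names, and `transfer_to_poam` / `build_poam_items` are hypothetical helpers.

```python
import copy
from typing import Dict, List


def transfer_to_poam(finding: dict, observations: Dict[str, dict],
                     keep_objective_status: bool = False) -> dict:
    """Copy an open AR finding's risk and observations into a POA&M item.

    Hypothetical dict layout; not the actual OSCAL JSON field names.
    """
    risk = finding["risk"]
    if risk["status"] != "open":
        raise ValueError(f"finding {finding['id']} is not open; not transferred")
    item = {
        "id": finding["id"],
        # Same structure on both sides, so the risk is copied, not re-modeled.
        "risk": copy.deepcopy(risk),
        # The corresponding observations must travel with the risk.
        "observations": [copy.deepcopy(observations[o]) for o in finding["observation-ids"]],
    }
    # Objective status may be filtered out, or carried along if appropriate.
    if keep_objective_status and "objective-status" in finding:
        item["objective-status"] = copy.deepcopy(finding["objective-status"])
    return item


def build_poam_items(findings: List[dict], observations: Dict[str, dict]) -> List[dict]:
    """Transfer every finding whose risk is still open at the end of testing."""
    return [transfer_to_poam(f, observations) for f in findings if f["risk"]["status"] == "open"]


observations = {"obs-1": {"description": "Example observation"}}
findings = [
    {"id": "fnd-1", "risk": {"title": "Example risk A", "status": "open"},
     "observation-ids": ["obs-1"], "objective-status": "not-satisfied"},
    {"id": "fnd-2", "risk": {"title": "Example risk B", "status": "closed"},
     "observation-ids": []},
]
items = build_poam_items(findings, observations)
print(len(items), items[0]["observations"][0]["description"])  # 1 Example observation
```

Deep copies keep the POA&M independent of later edits to the Assessment Results data.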

Plan of Action and Milestones (POA&M)

POA&M Model:
- Ideally, the POA&M imports an SSP.
- The System Identifier is used when a POA&M is delivered without its corresponding SSP.
  - Example: a monthly Continuous Monitoring (ConMon) delivery of a POA&M, where the SSP is only delivered annually.
  - This enables another tool to re-link the POA&M and a previously delivered SSP.
- Scanning tools and missing SSP content are defined in the Local Definitions assembly.
- The structure provides robust remediation planning and tracking activities.
- The structure also provides risk metrics and deviation management for multiple compliance frameworks. OSCAL enables these to coexist in a single POA&M item entry.

Structure:
- Metadata: Title, Version, Date; Roles, People, Organizations
- Import SSP: Pointer to FedRAMP System Security Plan
- System Identifier: Unique system ID
- Local Definitions: For content not defined in the SSP
- POA&M Items:
  - POA&M Item: Unique ID, Impacted Control; Observations; Risk Information (Title, Source, CVE#, Severity); Remediation Activities (Plan, Schedule, Resolution Date, Remediation Status); Vendor Dependencies (Evidence and Check-Ins); Deviations (Status: Investigating, Pending, Approved; False Positive (FP); Operational Requirement (OR); Risk Adjustment (RA)); CVSS Metrics
  - POA&M Item
  - POA&M Item
- Back Matter: Citations and External Links; Attachments and Embedded Images; Evidence (Vendor Check-Ins, DR Evidence)
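The re-linking and Local Definitions behavior described above can be sketched as two lookups. Everything here is an assumption for illustration: the dict keys `import-ssp` and `system-id`, the helpers `resolve_ssp` and `resolve_component`, and the sample identifiers and file name are hypothetical, not OSCAL field names or a real tool's API.

```python
from typing import Dict, Optional


def resolve_ssp(poam: dict, delivered_ssps: Dict[str, str]) -> Optional[str]:
    """Find the SSP a POA&M belongs to.

    Prefer the POA&M's own SSP import; otherwise fall back to matching its
    system identifier against SSPs delivered earlier (e.g. annually).
    """
    if poam.get("import-ssp"):
        return poam["import-ssp"]
    return delivered_ssps.get(poam.get("system-id", ""))


def resolve_component(component_id: str, ssp_components: Dict[str, dict],
                      local_definitions: Dict[str, dict]) -> Optional[dict]:
    """Look up a component in the SSP, else in the POA&M's local definitions
    (where scanning tools and content missing from the SSP are defined)."""
    if component_id in ssp_components:
        return ssp_components[component_id]
    return local_definitions.get(component_id)


# Hypothetical sample data: an annual SSP keyed by its system identifier.
delivered = {"sys-123": "ssp-annual.json"}
monthly_poam = {"system-id": "sys-123"}  # ConMon delivery without an SSP import
print(resolve_ssp(monthly_poam, delivered))  # ssp-annual.json

ssp_components = {"web-server": {"title": "Web server"}}
local_defs = {"scanner": {"title": "Vulnerability scanner"}}
print(resolve_component("scanner", ssp_components, local_defs)["title"])  # Vulnerability scanner
```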

Questions?

Thank you! We want your feedback!
- OSCAL Repository: https://github.com/usnistgov/OSCAL
- Project Website: https://www.nist.gov/oscal
- How to / FedRAMP Implementation Guides: https://github.com/gsa/fedramp-automation (Available in July)
