Transcription

The OPS Domain Specific Abstraction forMulti-Block Structured Grid ComputationsIstván Z. Reguly, Gihan R. Mudalige, Michael B. GilesDan Curran, Simon McIntosh-SmithOxford e-Research Centre, University of Oxford,7 Keble Road, Oxford, UKEmail: giles@maths.ox.ac.ukDepartment of Computer Science, University of Bristol,The Merchant Venturers Building, Bristol, UKEmail: dc8147@bristol.ac.uk, simonm@cs.bris.ac.ukAbstract—Code maintainability, performance portability andfuture proofing are some of the key challenges in this era ofrapid change in High Performance Computing. Domain SpecificLanguages and Active Libraries address these challenges byfocusing on a single application domain and providing a high-levelprogramming approach, and then subsequently using domainknowledge to deliver high performance on various hardware.In this paper, we introduce the OPS high-level abstraction andactive library aimed at multi-block structured grid computations,and discuss some of its key design points; we demonstrate howOPS can be embedded in C/C and the API made to look like atraditional library, and how through a combination of simple textmanipulation and back-end logic we can enable execution on adiverse range of hardware using different parallel programmingapproaches.Relying on the access-execute description of the OPS abstraction, we introduce a number of automated execution techniquesthat enable distributed memory parallelization, optimizationof communication patterns, checkpointing and cache-blocking.Using performance results from CloverLeaf from the Mantevosuite of benchmarks, we demonstrate the utility of OPS.Keywords—Domain Specific Languages, Software Design,Structured Grid, High Performance ComputingI.I NTRODUCTIONIncreasingly complex multicore CPU systems, as well asrecently emerged massively parallel platforms, such as graphical processing units (GPUs) or the Xeon Phi, require moreand more hardware-specific knowledge and low-level programming techniques for applications to be able to exploit theirfull performance potential. Even more importantly varioushardware platforms often require very different programmingapproaches and low-level optimizations, which has prompteda lot of research into the area [1], [2], exploring how differentalgorithmic classes map to different hardware.At the same time, most scientific codes are primarilydeveloped by domain scientists, who are often self-educatedin programming. While many codes are well designed withsoftware sustainability in mind, in some cases the developmentstrategy is ”as soon as it starts working, it’s done”. In manycases these codes are used for 10-20 years, with new featuresconstantly being added and the code being patched, as newresearch and methods are integrated - these applications arethen heavily relied upon to deliver scientific results, thus thesoftware engineering approach is critical to the extensibilityand maintainability of the code. It is increasingly necessary toapply more and more involved optimisations to achieve highperformance on modern hardware, but the current approach ofporting an application to a specific platform is very expensivein terms of developer effort, and, perhaps more importantly,involves a high amount of risk, because it is not clear whichplatforms and programming approaches will “win” in thelong term. Therefore, the “future proof” design of scientificapplications has been receiving increasing attention.Future proofing software for re-use and longevity is not anew concept in software engineering, there is a general pushtowards raising the level of abstraction for programming; tobe able to design any code in a productive way and achievehigh performance. Despite decades of research no language orprogramming environment exists that would deliver generality,productivity and performance. Below, we discuss some ofthe most prominent software design approaches aimed ataddressing this challenge.Classical numerical and software libraries give access tohigh performance implementations of a set of algorithms,such as MKL [3] or MAGMA [4] to Basic Linear AlgebraSubprograms (BLAS), or to a wider set of algorithms, such asPETSc for the solution of PDEs [5]. By only providing a set ofbuilding blocks, this approach restricts the kinds of algorithmsthat can be constructed, furthermore it is very rigid in terms ofdata structures, because input data is generally expected to belaid out in a particular fashion. Some approaches support highperformance general-purpose computations, but they actuallylower the level of abstraction in terms of expressing parallelism, thereby degrading productivity; languages or languageextensions like OpenCL [6], CUDA [7] or Cilk Plus [8] expecta very specific, comparatively low-level, programming style.Other approaches aim to compile high-level languages likePython down to modern architectures, such as Copperhead [9].One of the most promising directions for research isDomain Specific Languages [10] or Active Libraries [11] thatexploit knowledge about a specific problem domain, such asstructured grids, to provide a high-level abstraction that canbe easily used by domain scientists, and at the same timeaddress the aforementioned programming challenges. Thereis a wide range of DSLs being developed, targeting variousproblem domains, each using a slightly different programming approach. There are two main classes of DSLs [10],standalone ones define an entire new language, and embeddedones are embedded in the host language, utilizing its syntax

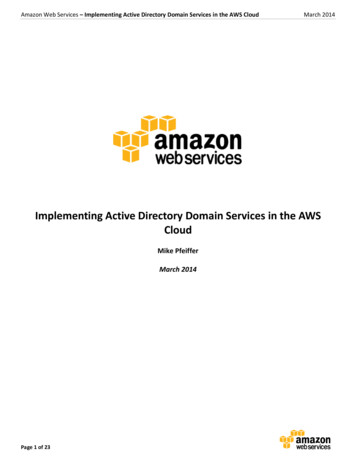

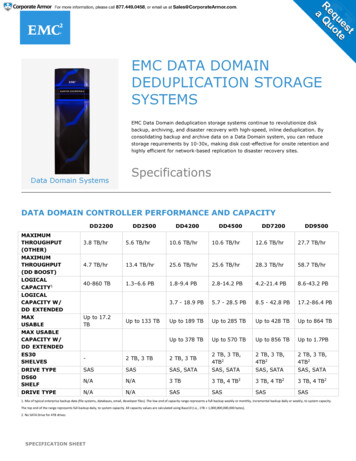

and compilation tools. Most DSLs for scientific computationsare embedded, to various degrees; some rely entirely on thefeatures of the host language, such as templates and objectoriented programming, and often appear to be classical software libraries, but most rely on compilation techniques toeither directly generate machine code, or to generate sourcecode (via source-to-source translation) that is then fed toa traditional compiler. Active Libraries look like traditionallibraries, but use code generation to transform an applicationcode written once to different parallel implementations. Theextent to which DSLs modify the host language varies widely:some do not change anything (TIDA [12], OP2 [13]), some addnew keywords (STELLA[14]), and most define various newlanguage constructs: Delite [15], Halide [16], Pochoir [17],PATUS [18].The use of DSLs as a development strategy was previouslyshown to have significant benefits both for developer productivity and gaining near-optimal performance [19], [20], [21].However, currently most of these still remain as experimentalresearch projects and have not yet been adopted by a widerHPC community. Partly the reason is a lack of DSLs or highlevel frameworks that are actively used at creating productionlevel applications.In this paper, we further explore the utility of high-levelabstraction frameworks, and study our approach to designingand implementing DSLs, and its inherent advantages anddrawbacks. We introduce the OPS (Oxford Parallel libraryfor Structured mesh solvers) Domain Specific Active Librarytargeting the development of parallel multi-block structuredmesh applications. The domain of multi-block structured meshapplications can be viewed as an unstructured collection ofstructured-mesh blocks. It is distinct from the single-blockstructured mesh and unstructured mesh applications domainswhich are supported by a number of well established DSLs andactive libraries [14], [16], [19], [20]. Thus, the challenges indeveloping a good abstraction to represent the description anddeclaration of multi-block problems and their efficient solutionon modern massively parallel hardware platforms is unique.More specifically we make the following contributions:1)2)3)4)We introduce the OPS abstraction and API for multiblock structured grid computations.We present how, through this abstraction, automaticparallelization and data movement can be achieved inshared memory and distributed memory systemsWe introduce a novel lazy execution scheme anddiscuss what runtime optimizations this enables.We conclude by discussing the benefits and the drawbacks of our approach to designing a DSL, from theperspective of both domain scientists and computerscientists.We argue that the OPS abstraction covers a wide rangeof structured mesh applications and at the same time theassumptions made and our approach to implementing thisactive library are strong enough that OPS can apply a widerange of optimisations and deliver near-optimal performance.The remainder of the paper presents evidence to support bothclaims; Section II introduces the OPS abstraction and givesan example of how to use its API. Section III describes howrelying on the abstraction it is possible to utilize various parallel programming approaches to execute on different hardware,Dataset 1on block 1blockhaloDataset 2on block 2 yxhalo areaof dataset 1yxFig. 1: Halos in a multi-block settingintroduces a lazy execution scheme used by OPS, and describesa number of optimizations that rely on the loop chainingabstraction [22]. Section IV gives an overview of the designchoices of OPS discussing their benefits and drawbacks, andfinally Section VI draws conclusion.II.T HE OPS ABSTRACTION AND APIThe Oxford Parallel library for Structured grid computations (OPS) is a Domain Specific Active Library embeddedin C/C , targeting computations on multi-block structuredmeshes. The abstraction consists of four principal components:1)2)3)4)Blocks: a collection of structured grid blocks. Thesehave a dimensionality but no size.Datasets: data defined on blocks, with explicit size.Halos: description of the interface between datasetsdefined on different blocks.Computations: description of an elemental operationapplied to grid points, accessing datasets on a givenblock.Given blocks, datasets and halos, an unstructured collectionof structured meshes can be fully described. The principalassumption of the OPS abstraction is that the order in whichelemental operations are applied to individual grid points during a computation may not change the results, within machineprecision (OPS does not enforce bitwise reproducibility). Thisis the key assumption that enables OPS to parallelize executionusing a variety of programming techniques.From a programming perspective, OPS looks like a traditional software library, with a number of Application Programming Interface (API) calls that facilitate the definitionof blocks, datasets and halos, as well as the definition ofcomputations. From the user point of view, using OPS is likeprogramming a traditional single-threaded sequential application, which makes development and testing intuitive - data andcomputations are defined at a high level, making the resultingcode easy to read and maintain.Take for example a simple multi-block scenario, shown inFigure 1, with two blocks, one dataset each that are of size2 6 and 3 4 respectively, and with one layer of halo. Theblocks are oriented differently with respect to each other, anda halo connection is defined between them, as shown in the

int range[4] {12,50,12,50};for (int j range[2]; j range[3]; j ) {for (int i range[0]; i range[1]; i ) {a[j][i] b[j][i] b[j 1][i] b[j][i 1];}}Fig. 3: A classical 2D stencil computationops block block1 ops decl block(2,”block1”);ops block block2 ops decl block(2,”block2”);int halo neg[] {0,0}; int halo pos[] {1,0};int size[] {2,6}; int base[] {0,0};ops dat dataset1 ops decl dat(block1, 1, size,base, halo pos, halo neg,“double”,”dataset1”);size[0] 3;size[1] 4;halo pos[0] 0;halo pos[1] 1;ops dat dataset2 ops decl dat(block2, 1, size,base, halo pos, halo neg,“double”,”dataset2”);//user kernelvoid calc(double *a, const double *b) {a[OPS ACC0(0,0)] b[OPS ACC1(0,0)] b[OPS ACC1(0,1)] b[OPS ACC1(1,0)];}.int range[4] {12,50,12,50};ops par loop(calc, block, 2, range,ops arg dat(a,S2D 0,”double”,OPS WRITE),ops arg dat(b,S2D 1,”double”,OPS READ));int halo iter[] {3,1};int base from[] {0,3}; int axes from[] {0,1};int base to[] {2,2}; int axes to[] {1,0};ops halo halo0 ops decl halo(dataset2,dataset1,base from, base to,axes from, axes to);Fig. 2: OPS definition of blocks, datasets and halos as shown inFigure 1figure. Here we briefly introduce the key components of theOPS API and give a sample code that defines the necessarydata structures to describe the multi-block situation in Figure1, using a sequence of OPS calls, as shown in Figure 2. Furtherdetails can be found in [23]:ops block ops decl block(num dims,.) defines a structured block, which will serve to link datasetsdefined on the same block together.ops dat ops decl dat(block,size[],.) defines a dataset on a specific block with a given size; not alldatasets have to have the same size (consider data on cells vs.faces, or a multigrid setup). Ownership of data is transferred toOPS, it may not be accessed directly, only via the ops datopaque handles.ops halo ops decl halo(.) defines a halo interface between two datasets with arbitrary range and orientation, as illustrated in Figure 1. Currently, this is restricted toa one-to-one matching between grid points.void ops halo transfer(halos)halo exchange between the listed datasets.triggersthevoid ops par loop( void (*kernel)(.),block, ndim, range[], arg1,., argN) definesa parallel loop over a given block with a specific iterationrange, applying the user kernel kernel (a function pointer)to every grid point in the iteration range, passing data pointers,described by the ops arg arguments:ops arg ops arg dat(dataset, stencil,type, accss) gives access to a dataset, passing apointer to the user kernel that may be dereferenced with thegiven stencil points, and read, written, read and written, orincremented according to the access specification.ops arg ops arg gbl(data, size, type,access) facilitates passing data to the user kernel that is notdefined on any block, such as global variables, and enablesglobal reductions.Fig. 4: A parallel loop defined using the OPS APITake for example a classic nested loop performing astencil operation as shown in Figure 3. The description of thisoperation using the OPS API is shown in Figure 4; it definesan iteration over the grid points specified by range, executingthe user kernel calc on each, passing pointers to datasets aand b, a is written using a one-point stencil and b is read, usinga three point stencil - these stencils are described by the datastructures S2D 0 and S2D 1 respectively. The OPS ACCmacros are used to compute the index offsets required toaccess the different stencil points, these are set up by OPSautomatically.An application implemented once using the above APIcan be immediately compiled using a common C compiler(such as GNU g or Intel icpc), and tested for accuracy andcorrectness - this is facilitated by a header file that providesa single-threaded implementation of the parallel loops and thehalo exchanges.The high-level application code is built to rely entirely onthe OPS API to carry out computations and to access data;after an initial setup phase where data is passed to OPS usingeither existing pointers or HDF5 files, OPS takes ownershipof all data, and it may only be accessed via API calls. Thisenables OPS to make transformations to data structures thatfacilitate efficient parallel execution.This abstraction and API can be viewed as an instantiation of the AEcute (Access-Execute descriptor) programmingmodel [24] that separates the abstract definition of a computation from how it is executed and how it accesses data, this inturn gives OPS the opportunity to apply powerful optimisationsand re-organize execution. The basic semantics and rules ofexecution are as follows:1)2)3)4)For any given ops par loop, the order in whichgrid points are executed may be arbitrary.Subsequent parallel loops over the same block respectdata dependencies (e.g. one loop writes data that theother reads).Subsequent parallel loops over different blocks do nothave a fixed order of execution.Only halo exchanges between blocks introduce a

#define OPS ACC0(j,i) j*xdim0 i#define OPS ACC1(j,i) j*xdim1 i//user kernelvoid calc(double *a, const double *b) {.}Fig. 5: OPS code generation and build processdata dependency and therefore a prescribed order ofexecution between blocks.In this paper, we argue that a wide range of structuredmesh applications can be implemented using this API, whichwe demonstrate with two industrially representative codes,CloverLeaf [25] and ROTORSIM [26]. Furthermore, we claimthat the above API is expressive enough that no languageextensions or custom compilers are required to enable execution on a variety of hardware platforms, utilizing a range ofdifferent parallel programming abstractions and environments.Finally, we demonstrate near-optimal performance matchingor outperforming the hand-coded original, substantiating theseclaims.III. W HAT THE ABSTRACTION LETS US DOThe principal idea of the OPS abstraction and API is theseparation of the abstract definition of computations from theirparallel implementation and execution. Because computationsare described in such a descriptive manner, explicitly statinghow and what data is accessed, we show that it is possible to dynamically organise parallelism and data movement,tailoring it to different target architectures. While input datastructures are fixed, based on their description OPS can applytransformations to have them better suited for different hardware. OPS uses two fundamental techniques in combinationto utilise different parallel programming environments andto organize execution and data movement; code generationand back-end logic. Code generation is used to produce theparallel implementation of individual parallel loops targeted atdifferent hardware architectures and programming languages,and back-end logic is used to factor out common operations,and to organize execution and communication in a way thatsatisfies data dependencies and improves parallelism, localityor resilience. Figure 5 gives an overview of the OPS workflow;a structured mesh application is implemented using the OPSC/C API, handed to the code generator which creates theplatform specific optimized implementations. This can then becompiled using conventional compilers, such as icc, gcc ornvcc, and linked against the platform specific back-end. Theresulting executable may use the built-in capabilities of OPSto read HDF5 files in parallel, and is executed on the targetarchitecture(s).A. Code generationAlthough an OPS application can be immediately compiled, the header file implementation of ops par loop isvery general, and prohibits a number of optimizations that avoid ops par loop calc(int ndim, int range,ops arg arg0, ops arg arg1){//set up pointers and stridesdouble *p a0 (double*)ops base ptr(range, arg0);double *p a1 (double*)ops base ptr(range, arg1);xim0 arg0.dat- size[0]; xim1 arg1.dat- size[0];//do the computationfor(int j 0; j range[3]-range[2]; j ) {for(int i 0; i range[1]-range[0]; i ) {calc(&p a0[j*xdim0 i],&p a1[j*xdim1 i]);}}Fig. 6: A simple example of code generated by OPScompiler would carry out on hand-coded nested for loopswith stencil computations, such as the one shown in Figure3. However, a key point in designing the OPS API was tosupply all the necessary information that could be used to reconstruct this formulation, thereby enabling the same, if notfurther, compiler optimizations.Figure 6 shows a simple example of code generated forsequential execution of the loop in Figure 4; observe that allthe information required to generate this code is present inthe body of the ops par loop call. This is a key propertyof the OPS API: it is sufficient to extract information fromthe ops par loop call, therefore we have chosen not toimplement a full-fledged compiler system, rather to have asimple python script that looks for parallel loop calls in thesour

halo halo area of dataset 1 Fig. 1: Halos in a multi-block setting introduces a lazy execution scheme used by OPS, and describes a number of optimizations that rely on the loop chaining abstraction [22]. Section IV gives an overview of the design choices of OPS discussing their benefits a