Transcription

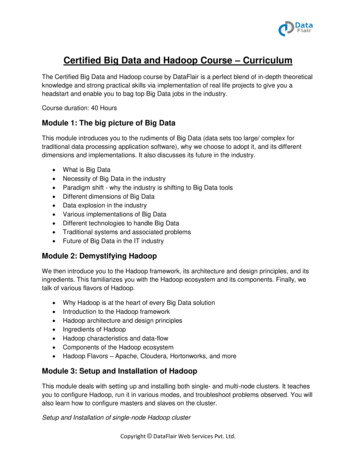

Certified Big Data and Hadoop Course – Curriculum

The Certified Big Data and Hadoop course by DataFlair is a perfect blend of in-depth theoretical knowledge and strong practical skills via implementation of real-life projects to give you a head start and enable you to bag top Big Data jobs in the industry.

Course duration: 40 Hours

Module 1: The big picture of Big Data

This module introduces you to the rudiments of Big Data (data sets too large or complex for traditional data processing application software), why we choose to adopt it, and its different dimensions and implementations. It also discusses its future in the industry.

- What is Big Data
- Necessity of Big Data in the industry
- Paradigm shift - why the industry is shifting to Big Data tools
- Different dimensions of Big Data
- Data explosion in the industry
- Various implementations of Big Data
- Different technologies to handle Big Data
- Traditional systems and associated problems
- Future of Big Data in the IT industry

Module 2: Demystifying Hadoop

We then introduce you to the Hadoop framework, its architecture and design principles, and its ingredients. This familiarizes you with the Hadoop ecosystem and its components. Finally, we talk of various flavors of Hadoop.

- Why Hadoop is at the heart of every Big Data solution
- Introduction to the Hadoop framework
- Hadoop architecture and design principles
- Ingredients of Hadoop
- Hadoop characteristics and data-flow
- Components of the Hadoop ecosystem
- Hadoop Flavors – Apache, Cloudera, Hortonworks, and more

Module 3: Setup and Installation of Hadoop

This module deals with setting up and installing both single- and multi-node clusters. It teaches you to configure Hadoop, run it in various modes, and troubleshoot problems observed. You will also learn how to configure masters and slaves on the cluster.

- Setup and Installation of single-node Hadoop cluster

Copyright DataFlair Web Services Pvt. Ltd.

- Hadoop environment setup and pre-requisites
- Installation and configuration of Hadoop
- Working with Hadoop in pseudo-distributed mode
- Troubleshooting encountered problems
- Setup and Installation of Hadoop multi-node cluster
- Hadoop environment setup on the cloud (Amazon cloud)
- Installation of Hadoop pre-requisites on all nodes
- Configuration of masters and slaves on the cluster
- Playing with Hadoop in distributed mode

Module 4: HDFS – The Storage Layer

Next, we discuss HDFS (Hadoop Distributed File System), its architecture and mechanisms, and its characteristics and design principles. We also take a good look at HDFS masters and slaves. Finally, we discuss terminologies and some best practices.

- What is HDFS (Hadoop Distributed File System)
- HDFS daemons and architecture
- HDFS data flow and storage mechanism
- Hadoop HDFS characteristics and design principles
- Responsibility of HDFS Master – NameNode
- Storage mechanism of Hadoop meta-data
- Work of HDFS Slaves – DataNodes
- Data Blocks and distributed storage
- Replication of blocks, reliability, and high availability
- Rack-awareness, scalability, and other features
- Different HDFS APIs and terminologies
- Commissioning of nodes and addition of more nodes
- Expanding clusters in real-time
- Hadoop HDFS Web UI and HDFS explorer
- HDFS best practices and hardware discussion

Module 5: A Deep Dive into MapReduce

After finishing this module, you will be comfortable with MapReduce, the processing layer of Hadoop, and will be aware of its need, components, and terminologies. MapReduce lets you process and generate big data sets with a parallel, distributed algorithm on a cluster with map and reduce methods. We will demonstrate using examples as we move on to optimization of MapReduce jobs and will introduce you to combiners as we move on to the next module.

- What is MapReduce, the processing layer of Hadoop
- The need for a distributed processing framework
- Issues before MapReduce and its evolution

- List processing concepts
- Components of MapReduce – Mapper and Reducer
- MapReduce terminologies: keys, values, lists, and more
- Hadoop MapReduce execution flow
- Mapping and reducing data based on keys
- MapReduce word-count example to understand the flow
- Execution of Map and Reduce together
- Controlling the flow of mappers and reducers
- Optimization of MapReduce Jobs
- Fault-tolerance and data locality
- Working with map-only jobs
- Introduction to Combiners in MapReduce
- How MR jobs can be optimized using combiners

Module 6: MapReduce – Advanced Concepts

Time to dig deeper into MapReduce! This module takes you to more advanced concepts of MapReduce, such as its data types and constructs like InputFormat and RecordReader.

- Anatomy of MapReduce
- Hadoop MapReduce data types
- Developing custom data types using Writable & WritableComparable
- InputFormat in MapReduce
- InputSplit as a unit of work
- How Partitioners partition data
- Customization of RecordReader
- Moving data from mapper to reducer – shuffling & sorting
- Distributed cache and job chaining
- Different Hadoop case-studies to customize each component
- Job scheduling in MapReduce

Module 7: Hive – Data Analysis Tool

Halfway through the course now, we begin to explore Hive, a data warehouse software project. We take a look at its architecture, various DDL and DML operations, and meta-stores. Then, we talk of where this would be useful. After finishing this module, you will be able to perform data query and analysis.

- The need for an ad hoc SQL-based solution – Apache Hive
- Introduction to and architecture of Hadoop Hive
- Playing with the Hive shell and running HQL queries
- Hive DDL and DML operations
- Hive execution flow
- Schema design and other Hive operations
- Schema-on-Read vs Schema-on-Write in Hive

- Meta-store management and the need for RDBMS
- Limitations of the default meta-store
- Using SerDe to handle different types of data
- Optimization of performance using partitioning
- Different Hive applications and use cases

Module 8: Pig – Data Analysis Tool

This module teaches you all about Pig, a high-level platform for developing programs for Hadoop. We will take a look at its execution flow and various operations, and will then compare it to MapReduce. Pig can execute its jobs in MapReduce.

- The need for a high-level query language - Apache Pig
- How Pig complements Hadoop with a scripting language
- What is Pig
- Pig execution flow
- Different Pig operations like filter and join
- Compilation of Pig code into MapReduce
- Comparison - Pig vs MapReduce

Module 9: NoSQL Database – HBase

We move on to HBase, an open-source, non-relational, distributed NoSQL database. In this module, we talk of its rudiments, architecture, datastores, and the Master and Slave model. We also compare it to both HDFS and RDBMS. Finally, we discuss data access mechanisms.

- NoSQL databases and their need in the industry
- Introduction to Apache HBase
- Internals of the HBase architecture
- The HBase Master and Slave Model
- Column-oriented, 3-dimensional, schema-less datastores
- Data modeling in Hadoop HBase
- Storing multiple versions of data
- Data high-availability and reliability
- Comparison - HBase vs HDFS
- Comparison - HBase vs RDBMS
- Data access mechanisms
- Working with HBase using the shell

Module 10: Data Collection using Sqoop

Apache Sqoop is a command-line interface application that lets you transfer data from a relational database into Hadoop, or the other way around.

- The need for Apache Sqoop
- Introduction and working of Sqoop

- Importing data from RDBMS to HDFS
- Exporting data to RDBMS from HDFS
- Conversion of data import/export queries into MapReduce jobs

Module 11: Data Collection using Flume

Apache Flume is reliable, distributed software that lets us efficiently collect, aggregate, and move large amounts of log data. Here, we talk about its architecture and the various tools it has to offer.

- What is Apache Flume
- Flume architecture and aggregation flow
- Understanding Flume components like data Sources and Sinks
- Flume channels to buffer events
- Reliable & scalable data collection tools
- Aggregating streams using Fan-in
- Separating streams using Fan-out
- Internals of the agent architecture
- Production architecture of Flume
- Collecting data from different sources to Hadoop HDFS
- Multi-tier Flume flow for collection of volumes of data using AVRO

Module 12: Apache YARN & advanced concepts in the latest version

Version 2 of Hadoop brought with it Yet Another Resource Negotiator (YARN), which lets you allocate cluster resources efficiently.

- The need for and the evolution of YARN
- YARN and its eco-system
- YARN daemon architecture
- Master of YARN – Resource Manager
- Slave of YARN – Node Manager
- Requesting resources from the application master
- Dynamic slots (containers)
- Application execution flow
- MapReduce version 2 application over YARN
- Hadoop Federation and NameNode HA

Module 13: Processing data with Apache Spark

This module deals with Apache Spark, an open-source, distributed, general-purpose cluster-computing framework, and its features. We also discuss RDDs (Resilient Distributed Datasets) and their operations. Then, we cover the Spark programming model and the entire ecosystem.

- Introduction to Apache Spark

- Comparison - Hadoop MapReduce vs Apache Spark
- Spark key features
- RDD and various RDD operations
- RDD abstraction, interfacing, and creation of RDDs
- Fault Tolerance in Spark
- The Spark Programming Model
- Data flow in Spark, The Spark Ecosystem
- Hadoop compatibility & integration
- Installation & configuration of Spark
- Processing Big Data using Spark

Module 14: Real-Life Project on Big Data

We conclude this course with a live Hadoop project to prepare you for the industry. Here, we make use of various Hadoop components like Pig, HBase, MapReduce, and Hive to solve real-world problems in Big Data Analytics.

- Web Analytics - Weblogs are web server logs where web servers like Apache record all events along with a remote IP, timestamp, requested resource, referral, user agent, and other such data. The objective is to analyze weblogs to generate insights like user navigation patterns, top referral sites, and highest/lowest traffic times.
- Sentiment Analysis - Sentiment analysis is the analysis of people's opinions, sentiments, evaluations, appraisals, attitudes, and emotions in relation to entities like individuals, products, events, services, organizations, and topics. It is achieved by classifying the observed expressions as positive or negative opinions.
- Crime Analysis - Learn to analyze US crime data and find the most crime-prone areas along with the time of crime and its type. The objective is to analyze crime data and generate patterns like time of crime, district, type of crime, latitude, and longitude, so that additional security measures can be taken in crime-prone areas.
- IVR Data Analysis - Learn to analyze IVR (Interactive Voice Response) data and use it to generate multiple insights. IVR call records are analyzed to help optimize the IVR system so that as many calls as possible complete at the IVR itself, without needing a call center.
- Titanic Data Analysis - The sinking of the Titanic was one of the most colossal disasters in the history of mankind, and it happened because of both natural events and human mistakes. The objective of this project is to analyze multiple Titanic data sets to generate essential insights pertaining to age, gender, survival, class, and port of embarkation.
- And many more projects in retail, telecom, media, etc.
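As a rough illustration of the Web Analytics project described above, the following minimal Python sketch counts top referral sites and requests per hour from weblog lines. It is not the course's Hadoop implementation: it assumes the logs follow the Apache "combined" log format, and the names `LOG_PATTERN`, `parse_line`, `summarize`, and the `SAMPLE` lines are hypothetical, made up for illustration.

```python
import re
from collections import Counter

# Assumed layout (Apache "combined" log format):
# ip identity user [timestamp] "request" status size "referrer" "user agent"
LOG_PATTERN = re.compile(
    r'(?P<ip>\S+) \S+ \S+ \[(?P<ts>[^\]]+)\] '
    r'"(?P<request>[^"]*)" (?P<status>\d{3}) \S+ '
    r'"(?P<referrer>[^"]*)" "(?P<agent>[^"]*)"'
)


def parse_line(line):
    """Return a dict of fields for one log line, or None if it does not match."""
    match = LOG_PATTERN.match(line)
    if match is None:
        return None
    record = match.groupdict()
    # Timestamp looks like "10/Oct/2023:13:55:36 +0000"; the hour is the
    # second colon-separated field.
    record["hour"] = int(record["ts"].split(":")[1])
    return record


def summarize(lines):
    """Count requests per referrer and per hour of day, skipping bad lines."""
    referrers = Counter()
    hours = Counter()
    for line in lines:
        record = parse_line(line)
        if record is None:
            continue
        hours[record["hour"]] += 1
        # "-" means the client sent no referrer.
        if record["referrer"] not in ("", "-"):
            referrers[record["referrer"]] += 1
    return referrers, hours


# Hypothetical sample data, invented for this sketch.
SAMPLE = [
    '203.0.113.5 - - [10/Oct/2023:13:55:36 +0000] "GET /index.html HTTP/1.1" 200 2326 "https://example.com/" "Mozilla/5.0"',
    '203.0.113.9 - - [10/Oct/2023:13:58:02 +0000] "GET /about.html HTTP/1.1" 200 1200 "https://example.com/" "Mozilla/5.0"',
    '198.51.100.7 - - [10/Oct/2023:21:05:10 +0000] "GET /index.html HTTP/1.1" 200 2326 "https://example.org/" "curl/8.0"',
    '198.51.100.7 - - [10/Oct/2023:21:06:10 +0000] "GET /x HTTP/1.1" 404 - "-" "curl/8.0"',
]

if __name__ == "__main__":
    top_referrers, per_hour = summarize(SAMPLE)
    print(top_referrers.most_common(3))
    print(sorted(per_hour.items()))
```

In a Hadoop setting, the per-line parsing would roughly correspond to the map step and the counting to the reduce step; this single-process version only shows the shape of the analysis.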
