Transcription

Accelerator-level ParallelismMark D. Hill, Wisconsin & Vijay Janapa Reddi, Harvard@ Technion (Virtually), June 2020Aspects of this work on Mobile SoCs and Gables were developed whilethe authors were “interns” with Google’s Mobile Silicon Group. Thanks!1

Accelerator-level Parallelism Call to ActionFuture apps demand much more computingStandard tech scaling & architecture NOT sufficientMobile SoCs show a promising approach:ALP Parallelism among workload componentsconcurrently executing on multiple accelerators (IPs)Call to action to develop “science” for ubiquitous ALP4

OutlineI.Computer History & X-level ParallelismII. Mobile SoCs as ALP HarbingerIII. Gables ALP SoC ModelIV. Call to Action for Accelerator-level Parallelism5

20th Century Information & Communication TechnologyHas Changed Our World long list omitted Required innovations in algorithms, applications,programming languages, , & system softwareKey (invisible) enablers (cost-)performance gains Semiconductor technology (“Moore’s Law”) Computer architecture ( 80x per Danowitz et al.)6

Enablers: Technology ArchitectureArchitectureTechnologyDanowitz et al., CACM 04/20129

How did Architecture Exploit Moore’s Law?MORE (& faster) transistors è even faster computersMemory – transistors in parallel Vast semiconductor memory (DRAM) Cache hierarchy for fast memory illusionProcessing – transistors in parallelBit-, Instruction-, Thread-, & Data-level ParallelismNow Accelerator-level Parallelism10

X-level Parallelism in Computer ArchitectureP busMi/fdev1 CPUBLP ILPBit/Instrn-LevelParallelism11

Bit-level Parallelism (BLP)Early computers: few switches (transistors) è compute a result in many steps E.g., 1 multiplication partial product per cycleBit-level parallelism More transistors è compute more in parallel E.g., Wallace Tree multiplier (right)Larger words help: 8bà16bà32bà64bImportant: Easy for softwareNEW: Smaller word size, e.g. machine learning inference accelerators12

Instruction-level Parallelism (ILP)Processors logically do instructions sequentially (timeà)addloadActually do instructions in parallel è ILPIBM Stretch [1961]addloadbranchPredict direction: target or fall thruandSpeculate!storeSpeculate more!E.g., Intel Skylake has 224-entry reorder buffer w/ 14-19-stage pipelineImportant: Easy for software13

X-level Parallelism in Computer ArchitectureP busMi/fdev1 CPUBLP ILPBit/Instrn-LevelParallelismMultiprocessor TLPThread-LevelParallelism14

Thread-level Parallelism (TLP)Thread-level Parallelism HW: Multiple sequential processor cores SW: Each runs asynchronous threadSW must partition work, synchronize,& manage communication E.g. pThreads, OpenMP, MPICDC 6600, 1964,(TLP via multithreaded processor)On-chip TLP called “multicore” – forced choiceLess easy for software but More TLP in cloud than desktop à cloud!!Intel Pentium Pro Extreme Edition,early 2000s Bifurcation: experts program TLP; others use it15

X-level Parallelism in Computer ArchitectureP busMi/fdev1 CPUBLP ILPBit/Instrn-LevelParallelismMulticore TLPThread-LevelParallelism17

Data-level Parallelism (DLP)Need same operation on many data itemsDo with parallelism è DLP Array of single instruction multiple data (SIMD) Deep pipelines like Cray vector machines Intel-like Streaming SIMD Extensions (SSE)Broad DLP success awaited General-Purpose GPUs1. Single Instruction Multiple Thread (SIMT)2. SW (CUDA) & libraries (math & ML)3. Experimentation as 1-10K not 1-10MIllinois ILLIAC IV, 1966NVIDIA TeslaBifurcation again: experts program SIMT (TLP DLP); others use it18

X-level Parallelism in Computer ArchitectureP busdev-MMi/fGPUdev1 CPUBLP ILPBit/Instrn-LevelParallelismMulticore TLPThread-LevelParallelism Discrete GPU DLPData-LevelParallelism19

X-level Parallelism in Computer ArchitecturePGPU busMi/fdev1 CPUBLP ILPBit/Instrn-LevelParallelismMulticore TLPThread-LevelParallelism Integrated GPU DLPData-LevelParallelism20

X-level Parallelism in Computer Architecture21

OutlineI.Computer History & X-level ParallelismII. Mobile SoCs as ALP HarbingerIII. Gables ALP SoC ModelIV. Call to Action for Accelerator-level Parallelism22

X-level Parallelism in Computer ArchitecturePGPU busMi/fdev1 CPUBLP ILPBit/Instrn-LevelParallelismMulticore TLPThread-LevelParallelism Integrated GPU DLPData-LevelParallelismSystem on a Chip(SoC) ALPAccelerator-LevelParallelism23

Potential for Specialized Accelerators (IPs)Accelerator is a hardware component thatexecutes a targetedvcomputation class faster & usually with (much) less energy.v16 Encryption17 Hearing Aid18 FIR for disk read19 MPEG Encoder20 802.11 Baseband[Brodersen & Meng, 2002]25

CPU, GPU, xPU (i.e., Accelerators or IPs)42 Really?The Hitchhiker'sGuide to theGalaxy?2019 Apple A12 w/ 42 accelerators26

Example Usecase(recording 4K video)Janapa Reddi, et al.,IEEE Micro, Jan/Feb 2019ALP Parallelism among workload componentsconcurrently executing on multiple accelerators (IPs)27

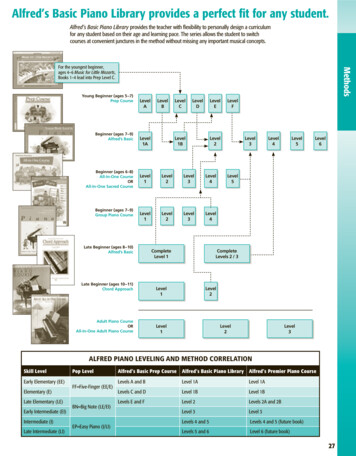

Mobile SoCs Run XXXXXXVideo Capture HDRXXXXXVideo PlaybackXXXXImage RecognitionXXXXAccelerators (IPs) èUsecases (rows)CPUs(AP)DisplayPhoto EnhancingXVideo reXMust run each usecase sufficiently fast -- no need fasterA usecase uses IPs concurrently: more ALP than serialFor each usecase, how much acceleration for each IP?28

ALP(t) #IPs concurrently active at time tActiveIPs109876543210Disclaimer:Made up DataTime to perform usecase (sec)29

OutlineI.Computer History & X-level ParallelismII. Mobile SoCs as ALP HarbingerIII. Gables ALP SoC Model [HPCA’19]IV. Call to Action for Accelerator-level Parallelism30

Mobile SoCs Hard To Program For and SelectEnvision usecases(years ahead)Port to many SoCs?Diversity hinders use[Facebook, HPCA’19]How to reason aboutSoC performance?31

Mobile SoCs Hard To DesignEnvision usecases(2-3 years ahead)Select IPsSize IPsDesign UncoreWhich accelerators? How big? How to even start?32

Computer Architecture & Performance ModelsAccuracyEffortInsightMultiprocessor &Amdahl’s LawModels vs Simulation More insight Less effortanswer But less accuracyMulticore &RooflineModels give first answer, not finalGables extends Roofline è first answer for SoC ALP33

Roofline for Multicore Chips, 2009Multicore HW Ppeak peak perf of all cores Bpeak peak off-chip bandwidthMulticore SW I operational intensity #operations/#off-chip-bytes E.g., 2 ops / 16 bytes à I 1/8Output Patt upper bound on performance attainable34

Roofline for Multicore Chips, 2009Ppeak(Patt)Bpeak* :Example of a naive Roofline model.svgCompute v. Communication: Op. Intensity (I) #operations / #off-chip bytes35

ALP System on Chip (SoC) Model: NEW Gables2019 Apple A12 w/ 42 acceleratorsGables uses Roofline per IP to provide first answer! SW: performance model of a “gabled roof?” HW: select & size accelerators36

Gables for N IP SoCCPUsIP[0]A0 1A0*PpeakB0A1*PpeakIP[1]AN-1*PpeakIP[N-1]B1BN-1 Share off-chip Bpeak Usecase at each IP[i] Operational intensity Ii operations/byte Non-negative work fi (fi’s sum to 1) w/ IPs in parallel37

Example Balanced Design Start w/ GablesTWO-IP SoCA1*Ppeak 5*40 200Ppeak 40IP[0]CPUsB0 6Bpeak 10DRAMIP[1]GPUB1 15Workload (Usecase):f0 1 & f1 0I0 8 good cachingI1 0.1 latency tolerantPerformance?38

Perf limited by IP[0] at I0 8IP[1] not used à no rooflineLet’s Assign IP[1] work: f1 0 à 0.75Ppeak 40Bpeak 10A1 5B0 6B1 15f1 0I0 8I1 0.13939

IP[1] present but Perf drops to 1! Why?I1 0.1 à memory bottleneckEnhance Bpeak 10 à 30(at a cost)Ppeak 40Bpeak 10A1 5B0 6B1 15f1 0.75I0 8I1 0.14040

Perf only 2 with IP[1] bottleneckIP[1] SRAM/reuse I1 0.1 à 8Reduce overkill Bpeak 30 à 20Ppeak 40Bpeak 30A1 5B0 6B1 15f1 0.75I0 8I1 0.14141

Usecases using K accelerators àGables has K 1 rooflinesPerf 160 A*Ppeak 200Can you do better?It’s possible!Ppeak 40Bpeak 20A1 5B0 6B1 15f1 0.75I0 8I1 84242

Into Synopsys design flow 6 months of publication!44

Case Study: IT Company SynopsysTwo cases where: Gables Actual1. Communication between two IP blocks Root: Too few buffers to cover communication latency Little’s Law: # outstanding msgs avg latency * avg BW uter-system-performance-part-1/ Solution: Add buffers; actual performance à Gables2. More complex interaction among IP blocks Root: Usecase work (task graph) not completely parallel Solution: No change, but useful double-check45

Case Study: Allocating SRAMIP0IP2IP1SHAREDWhere SRAM? Private w/i each IP Shared resource48

Does more IP[i] SRAM help Op. Intensity (Ii)?Compute v. Communication: Op. Intensity (I) #operations / #off-chip itsW/S working setIP[i] SRAMNon-linear function that increases when new footprint/working-set fitsShould consider these plots when sizing IP[i] SRAMLater evaluation can use simulation performance on y-axis50

Gables Home Page[HPCA’19]Model ExtensionsInteractive toolGables Android Source at s/51

Mobile System on Chip (SoC) & GablesSW: Map usecase to IP’s w/ many BWs & accelerationHW: IP[i] under/over-provisioned for BW or acceleration?Gables—like Amdahl’s Law—gives intuition & a first answerBut still missing is SoC “architecture” & programming model52

OutlineI.Computer History & X-level ParallelismII. Mobile SoCs as ALP HarbingerIII. Gables ALP SoC ModelIV. Call to Action for Accelerator-level Parallelism53

Future Apps Demand Much More Computing54

Accelerator-level Parallelism Call to ActionFuture apps demand much more computingStandard tech scaling & architecture NOT sufficientMobile SoCs show a promising approach:ALP Parallelism among workload componentsconcurrently executing on multiple accelerators (IPs)Call to action to develop “science” for ubiquitous ALP An SoC architecture that exposes & hides? A whole SoC programming model/runtime?55

ALP/SoCNo visibleparallelismPLocal SW stack abstractsSoftware Descent to Hellfire! each accelerator.But no good, general SWAny thread-levelabstraction for SoC ALP!parallelism, e.g., Thought bridge:Accelerate eachhomogeneous Must divide workdifferently withheterogeneously unique HLLsAll of above &P P P P(DSLs) & SDKshide in manyUniprocessorP P P PP P P PP P P MulticoreHellfire!Key: P processor core; A-E orskernel drivers LPAAAPAABDCCBECCBToday: DeviceAccelerators57

SW HW Lessons from GP-GPUs?Programming for data-level parallelism: four decadesSIMDàVectorsàSSEàSIMT!Nvidia GK110BLP TLP DLPFeatureThenNow1. ProgrammingGraphics OpenGLSIMT (Cuda/OpenCL/HIP)2. ConcurrencyEither CPU or GPU only;Intra-GPU mechanismsFiner-grain interactionIntra-GPU mechanisms3. CommunicationCopy data betweenhost & device memoriesMaybe shared memory,sometimes coherence4. DesignDriven by graphics only;GP: 0B marketGP major player, e.g.,deep neural networks58

SW HW Directions for ALP?Need programmability for broad success!!!!In less than four decades?Apple A12: BLP ILP TLP DLP ALPFeatureNowFuture?1. ProgrammingLocal: Per-IP DSL & SDK Abstract ALP/SoC likeGlobal: Ad hocSIMT does for GP-GPUs2. ConcurrencyAd hoc3. CommunicationSW: Up/down OS stackSW HW for queue pairs?HW: Via off-chip memory Want control/data planes4. Design, e.g., select,combine, & size IPsAd hocGP-GPU-like scheduling?Virtualize/partition IP?Make a “science.” Speedwith tools/frameworks59

OpportunitiesChallenges1. ProgrammabilityWhither globalmodel/runtime?DAG of streamsfor SoCs?3. CommunicationHow should SWstack reason aboutlocal/global memory,caches, queues, &scratchpads?HW assist for scheduling?Virtualize & partition?2. ConcurrencyWhen combine“similar” accelerators?Power vs. area?4. Design SpaceScienceHennessy & Patterson: A New Golden Age for Computer Architecture60

New Feb 2020!61

Data-Level Parallelism Bit/Instrn-Level Parallelism Thread-Level Parallelism ALP Accelerator-Level Parallelism. Potential for Specialized Accelerators (IPs) 25 [Brodersen& Meng, 2002] v v 16 Encryption 17 Hearing Aid 18 FIR for disk read 19 MPEG Encoder 20 802.11 Baseband