Transcription

DOCUMENT RESUME

ED 366 650    TM 021 065

AUTHOR: Loftin, Lynn Baker
TITLE: Factor Analysis of the IDEA Student Rating Instrument for Introductory College Science and Mathematics Courses.
PUB DATE: Nov 93
NOTE: 34p.; Paper presented at the Annual Meeting of the Mid-South Educational Research Association (19th, New Orleans, LA, November 10-12, 1993).
PUB TYPE: Reports - Research/Technical (143) - Speeches/Conference Papers (150)
EDRS PRICE: MF01/PC02 Plus Postage.
DESCRIPTORS: Academic Achievement; College Students; *Course Evaluation; Course Selection (Students); *Factor Analysis; Higher Education; Instructional Effectiveness; *Mathematics Instruction; Persistence; Questionnaires; Rating Scales; Research Methodology; *Science Instruction; Student Attitudes; Student Characteristics; Student Evaluation of Teacher Performance; *Teacher Effectiveness; Test Use; Validity
IDENTIFIERS: *Instructional Development Effectiveness Assessment; Kansas State University

ABSTRACT

This study illustrates how a factor analysis of a well-designed student rating instrument can increase its utility. Factor analysis of a student rating instrument was used to reveal constructs that would explain student attrition in science, mathematics, and engineering majors. The Instructional Development and Effectiveness Assessment (IDEA) instrument, developed at Kansas State University, was used to rate college courses in science or mathematics. It is used to gather students' reactions to instructors, personal progress, and courses, as well as students' attitudes, and to obtain an overall rating. Subjects for the factor analysis were 141 upperclass students (56.7 percent male). The factor analysis reveals constructs about which students in science and mathematics have expressed concern. Of seven identified factors, the first three, interpreted as instructor presentation skills, student perception of personal progress, and student-teacher interaction, are particularly important in distinguishing instructor presentation from the personal aspects of course takers.
Three tables present analysis results. (Contains 23 references.)

Factor Analysis of the IDEA Student Rating Instrument for Introductory College Science and Mathematics Courses

Lynn Baker Loftin
University of New Orleans

Paper presented at the annual meeting of the Mid-South Educational Research Association, New Orleans, LA, November 9-12, 1993.

Most students attend college to prepare for a career. Most have already chosen majors and have a good idea of what professional field they expect to enter. However, of the students who initially choose science, mathematics, or engineering majors, over 40 percent graduate with a major in a nonscience field (Tobias, 1990). Students switch from science, mathematics, and engineering majors at a much higher rate than from any other major. At a time when careers requiring science and technology expertise are increasing, this loss of talent to the field has become critical. [...] persistence in preparation for science-related careers have come from several fronts. Most solutions are aimed at restructuring curriculum and instruction in elementary and secondary schools. However necessary these changes may be, addressing the problem at the college level could show results more quickly. One approach is to assess college science and mathematics curriculum and instruction to discern problems that would deter students from their career goals. Confronting and resolving problems at the college level would have an almost immediate effect on the numbers of college graduates in science, mathematics, and engineering.

If college instruction contributes to the decline in the number of science, mathematics, and engineering majors, a direct approach to assessing the instruction is to ask the students. Colleges and universities often do this by using in-house or commercially prepared student rating forms.

These rating forms commonly consist of a series of items regarding the instruction and instructor in a particular course. The student responses are usually on a Likert scale. Results are typically given as average scores on individual items with an overall rating average. These ratings are primarily used by instructors for self-improvement and by administrators as contributing information for promotion and tenure decisions. However useful the item average scores might be to instructors and administrators, the student rating instrument could also render further valuable information. Factor analysis techniques executed on the item responses can often reveal underlying constructs within the rating instrument. Interpretable factors provide additional information not readily apparent from individual item averages. Factor scores are often more meaningful in evaluating instruction than average scores on individual items. In addition, factor analysis could reveal constructs that are important in influencing student persistence in a major and suggest more clearly avenues for improvement. Using a student rating instrument is a positive step in evaluating instruction, and a factor analysis of the item responses could increase its utility in discerning how to help students persist in their career goals.

Few studies have focused on college instruction as a contributing factor to the decline in the number of science majors. Tobias (1990) conducted a study of freshman science courses to determine why the classes are considered so "hard" and why so many students are "turned off" by science. Subjects were enrolled in freshman chemistry or physics courses, attended classes, took examinations, received grades, and completed the courses.

The data were accumulated from subject journals and interview sessions. The courses were reported to be interesting and the teaching adequate; however, the courses emphasized technique without explanation of how it came about and failed to connect content with the real world. The subjects complained of large, impersonal lecture classes with a distinct lack of student enthusiasm. At the same time, the courses were intensely competitive.

Hewitt and Seymour's ethnographic studies (1991, 1992; Seymour, 1992a, 1992b) interviewed college students who were described as "switchers," those who started college initially majoring in science but switched to another major, and "non-switchers," those who persisted in science. Results suggest that the two groups were not greatly different. Both groups mentioned changing interests, conceptual difficulties, inadequate high school preparation, etc. as concerns. However, the concern most often mentioned by all the students was the poor teaching and unapproachability of the faculty in the sciences. In fact, poor teaching and remoteness of the faculty was the number one complaint of the non-switchers but only the number seven complaint for the switchers. Both groups experienced difficulties with instruction, but one group persisted and the other switched majors.

Schlipak (1988) interviewed female and male physics and engineering students and faculty at Harvard University to discern differences from other fields in an ethnological study.

Interviews with college physics and engineering students and faculty revealed five themes. The science/engineering community was united by language that excluded nonscientists. The male-dominated faculty were perceived as strong authority figures who were brilliant, elitist, and uninterested in undergraduates. Poor advising and lack of support from faculty were usual, but women students voiced particular dissatisfaction. Separation of the science community from other fields was seen by men students as a decision to be autonomous, but by women students as isolation. Women students, but not men, expressed concerns about the intense competition.

In a series of reports, Light (1990, 1992) explored teaching and learning in many fields at Harvard University. Information about undergraduates and the physical sciences was generated from questionnaires. The data revealed several perceptions. Contrary to most expectations, students expressed a strong interest in taking courses in physical science; students were not frustrated by faculty emphasis on research in these areas but hoped to participate; freshmen expressed confidence about taking science, and seniors expressed regrets for not taking more science; students perceived a high workload in science classes; and students perceived more grade competition in science classes. The sample size was modest, and the students were superior Harvard undergraduates.

Even though the sample sizes in the preceding studies were small, the results point out recurring themes in science [...] and engineering courses is often distinct from instruction in other fields.

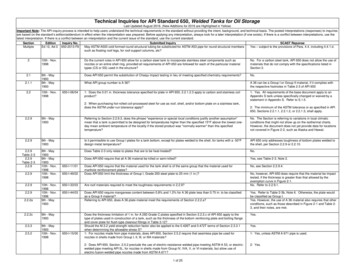

This suggests that science and mathematics curriculum and instruction may be instrumental in deterring some students from their career goals. Assessing science and mathematics instruction by using student ratings may provide some answers. Augmenting rating questionnaires with factor analysis could communicate elements of instruction in a way that may be directly addressed. In the present study, students at a public urban university in the South were asked to rate their first college course in science or mathematics using the IDEA¹ (Instructional Development and Effectiveness Assessment) rating items. All students were juniors or seniors who had been initially or were currently science, mathematics, or engineering majors. The courses rated included introductory biology, chemistry, geology, physics, computer science, or nonremedial mathematics for mathematics or engineering majors. A factor analysis utilizing principal components techniques was used to discern underlying interpretable factors.

Methods and Materials

The IDEA

The IDEA student rating of instruction questionnaire is part of a faculty evaluation system developed at Kansas State University by Donald P. Hoyt and expanded by William E. Cashin. The IDEA had been used in over 90,000 classes in 300 institutions by 1988 (Cashin, 1988). Its long history and extensive use provide a broad database supporting reliability and validity.

¹ Available from the Center for Faculty Evaluation and Development, Kansas State University, Manhattan, KS 66502-1604.

The current IDEA is the 1988 edition.

The IDEA Survey form for student reactions to instruction and courses is divided into six information sections and one identification section. For this study only the 45 items of the first five sections were considered.

1. The Instructor includes twenty statements describing the instructor's teaching. Responses to the items are on a five-point Likert scale: (1) hardly ever, (2) occasionally, (3) sometimes, (4) frequently, and (5) almost always.

2. Progress includes ten objectives in a college course. Responses reflect the progress in this course compared with other college courses. For each stated objective, students use Likert-scale responses to the question, "In this course my progress was: (1) Low (lowest 10 percent of courses I have taken), (2) Low Average (next 20 percent of courses I have taken), (3) Average (middle 40 percent of courses), (4) High Average (next 20 percent of courses I have taken), and (5) High (highest 10 percent of courses I have taken)."

3. The Course includes four items about assignments, subject matter, and workload as compared with other courses. Likert-scale responses are: (1) much less than most courses, (2) less than most, (3) about average, (4) more than most, and (5) much more than most.

4. Self-rating includes four items about the student's own attitudes and behaviors in the course. The Likert-scale responses are: (1) definitely false, (2) more false than true, (3) in between, (4) more true than false, and (5) definitely true.

5. Overall rating includes seven statements about the course, the instructor, and the student's learning. Likert-scale responses are: (1) definitely false, (2) more false than true, (3) in between, (4) more true than false, and (5) definitely true.

Reliability concerns consistency, and it can be affected by the number of raters. Cashin, in a draft technical report on the IDEA system (1992), reported the following average item reliabilities: .69 for 10 raters, .83 for 20 raters, and .91 for 40 raters. These reliabilities are typical of well-designed student rating forms.

Stability concerns the agreement of a student's ratings repeated over time. Marsh & Overall (1979) and Overall & Marsh (1980), in a longitudinal study, compared student ratings at the end of a course with ratings of the course one to several years later by the same students. The average correlation was .83. This stability is particularly pertinent to the present study because it establishes that seniors, or even graduate students, rating courses taken up to several years before will not have changed their responses to a great degree.

Generalizability concerns how well the ratings reflect an instructor's effectiveness overall and not just for a particular course during a particular term. Marsh (1982) studied data from 1,364 courses, dividing them into four categories: same instructor, same course; same instructor, different course; different instructor, same course; and different instructor, different course.
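The rise in average item reliability with the number of raters (.69 for 10, .83 for 20, .91 for 40) follows the pattern of the Spearman-Brown prophecy formula, r_k = k·r / (1 + (k - 1)·r), where k is the factor by which the number of raters grows. The paper does not say how Cashin computed the IDEA figures, so the sketch below is only an illustration of the general relationship; the function names are hypothetical.

```python
def spearman_brown(reliability: float, factor: float) -> float:
    """Projected reliability after multiplying the number of raters by `factor`."""
    if not 0.0 <= reliability <= 1.0 or factor <= 0:
        raise ValueError("reliability must be in [0, 1] and factor must be positive")
    return factor * reliability / (1 + (factor - 1) * reliability)


def single_rater_reliability(reliability: float, raters: int) -> float:
    """Invert Spearman-Brown: the one-rater reliability implied by a group figure."""
    return reliability / (raters - (raters - 1) * reliability)


if __name__ == "__main__":
    base_raters, base_reliability = 10, 0.69  # average item reliability reported for 10 raters
    for raters in (10, 20, 40):
        projected = spearman_brown(base_reliability, raters / base_raters)
        print(f"{raters:>2} raters: projected {projected:.2f}")
    implied = single_rater_reliability(base_reliability, base_raters)
    print(f"implied single-rater reliability: {implied:.2f}")
```

Projecting from .69 at 10 raters gives about .82 for 20 raters and .90 for 40, close to, though not identical with, the reported .83 and .91, so the formula captures the trend rather than reproducing the published values.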

The items were separated into those concerned specifically with the instructor and those considered background. In correlating the instructor ratings for the four groups, "same instructor, same course" had a correlation of .71. For "same instructor, different course," the correlation was .52. For "different instructor, same course," the correlation was .14, and for "different instructor, different course," the correlation was .06. These data suggest that the generalizability of student rating items is tied to the instructor more than to the course. The background items, such as the student's reason for taking the course and the workload of the course, were found to be more highly correlated with the course than with the instructor. For the same course, these items had higher [...] regardless of [...] ratings are generalizable for instructors and for courses.

Validity concerns whether items measure what they are designed to measure. If the criterion for measuring instructor effectiveness is student learning, achievement should be greater with effective than with ineffective instructors. Cohen (1981, 1986), in a meta-analysis, reviewed validity studies using student grades on an independently derived examination as a measure of student learning. The students were enrolled in the same courses using the same text and syllabus, but with different instructors. The examinations for the course were identical but constructed by a third party. The correlations of actual student grades on the examinations with student ratings of the instructor ranged across the various items from .22 to .50.

Cashin (1988) suggests that, unlike reliability, validity correlations above .70 are very useful but rare when studying complex phenomena. Student ratings with validity correlations between .00 and .19, even when statistically significant, are not usually practical to use; however, those above .20 are generally useful. Cashin submits that students rate instructors higher in classes where they learn more; however, one must realize that other factors not related to the instructor, such as student preparation and motivation, also enter into the equation.

Cashin (1988, 1992) reviewed sources of bias in student ratings. Instructor characteristics reviewed included age, sex, experience, personality, and research productivity. These factors generally show little or no relationship to student ratings. However, faculty rank was often found to have an effect. The IDEA system reports a correlation of .10 with academic rank (including graduate teaching assistants). Style of presentation, including expressiveness and enthusiasm, often affects student ratings. Cashin (1988) suggests that style and enthusiasm are related to an instructor's effectiveness and should not be considered a source of bias.

Cashin (1988) reviewed student characteristics relative to student ratings and reported that the student's age, sex, level (freshman, sophomore, etc.), GPA, and personality were not related to ratings. A student variable that was related to ratings was the reason for taking the course.

The IDEA system uses a motivation item, "I had a strong desire to take this course," which has an average correlation of .37 with the other items on the questionnaire, to account for this factor. In the present study all subjects were incipient science majors.

Expected grades are known to influence student ratings. Using IDEA data, Howard and Maxwell (1980, 1982) suggest that students who are motivated and learn more earn higher grades, which in turn suggests that student ratings are valid.

Course and administrative variables reviewed and reported to have little or no effect were class size, time of day, and the time during the term the survey was taken (Cashin, 1988). In a later study, comparative data from four class sizes, from fewer than 15 students to more than 100, showed a modest correlation with student ratings (Cashin, 1992). Level of course and academic field have been reported in some studies to affect student ratings. IDEA ratings correlate, on average, .07 with course level. The present study used only introductory courses for rating.

Student ratings in 44 academic fields have been reported for IDEA items. In general, humanities and arts courses are rated highest, followed by social science courses, then math-science courses. Cashin & Clegg (1987) found that differences in ten academic fields accounted for 12 percent or more of the variance for half of the IDEA items. For a third of the items, differences in academic field accounted for 20 percent of the item variance. The IDEA has published tables of means for 44 academic fields on three global items (Cashin, Noma, & Hanna, 1987). By consulting the tables, comparisons can be made for ratings in each field.
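One common way to express a "percent of item variance accounted for by academic field," like the Cashin & Clegg (1987) figures above, is eta-squared from a one-way layout: the between-field sum of squares divided by the total sum of squares. The paper does not say which statistic was used, so this is an assumed illustration; the function name, field labels, and ratings below are hypothetical.

```python
from typing import Mapping, Sequence


def eta_squared(groups: Mapping[str, Sequence[float]]) -> float:
    """Share of the total variance in one rating item explained by group membership."""
    scores = [x for values in groups.values() for x in values]
    grand_mean = sum(scores) / len(scores)
    ss_total = sum((x - grand_mean) ** 2 for x in scores)
    if ss_total == 0:
        raise ValueError("ratings have no variance")
    # Between-group sum of squares: each group mean's squared distance from the
    # grand mean, weighted by the number of ratings in that group.
    ss_between = sum(
        len(values) * (sum(values) / len(values) - grand_mean) ** 2
        for values in groups.values()
    )
    return ss_between / ss_total


if __name__ == "__main__":
    # Hypothetical class-average ratings on one item, grouped by academic field.
    ratings = {
        "humanities": [4.4, 4.1, 4.3, 3.9],
        "social science": [4.0, 3.8, 4.2, 3.9],
        "math-science": [3.6, 3.9, 3.5, 3.8],
    }
    print(f"field accounts for {eta_squared(ratings):.0%} of item variance")
```

A value of .12 would correspond to the "12 percent or more" threshold reported for half of the IDEA items.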

The reason for variation by academic field is unclear, but users of student ratings are cautioned about comparing student ratings for courses in different fields. In the present study this difference is at a minimum, since all courses evaluated are introductory science and mathematics courses.

Workload and difficulty have been found to be positively correlated with student ratings. Average correlations of the overall rating with specific IDEA items are .14 for "amount of reading," .17 for "amount of assignments," .22 for "difficulty of subject matter," and .29 for "worked harder in this course than on most courses I have taken." Cashin (1988) suggests that these results support the validity of student ratings rather than bias.

The Subjects

The subjects for the survey were upperclassmen at a public urban university in the South. The questionnaires were given to students in classes where possible and took approximately ten minutes. Instructors cooperated in allowing class time for the survey. Other subjects not easily accessible in classes were contacted and surveyed at meetings set up in an empty classroom. All subjects were volunteers, and confidentiality was observed. The students were asked to respond to the IDEA standard form rating the instruction in their first college science course (in the case of mathematics or engineering majors, their first mathematics course).

The sciences included [...] chemistry, earth science, [...] sciences and mathematics. Analysis of [...] software package.

Results

Description of the Sample

The sample of 141 students was 56.7 percent male. The average age was 25 years (SD = 4.64, range 19-42). The sample was 71.6 percent white, 9.2 percent black, 6.4 percent Hispanic, 9.9 percent Asian, 0.7 percent American Indian, and 2.1 percent other groups. Natural science majors (biology, chemistry, earth science, physics) made up 69.6 percent of the sample, computer science majors 12.7 percent, and engineering/mathematics majors 17.7 percent. For the course evaluated, the average grade the students reported was 2.70, with a median of 3.00 on a 4-point scale. Table 1 presents the means and standard deviations for each of the 45 IDEA items.

[Insert Table 1 about here.]

Statistical Analysis of Data

Sampling adequacy was assessed using the Kaiser-Meyer-Olkin (KMO) statistic (Kaiser, 1978). Small values indicate that the correlations between pairs of variables cannot be explained by other variables; in that case a factor analysis would be inappropriate. A sampling adequacy of .93 indicated that the data consisting of the IDEA items were appropriate for factor analysis.
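The KMO statistic compares the zero-order correlations among items with their partial (anti-image) correlations: when the partials are small relative to the correlations, the items share common factors and the measure approaches 1. Below is a minimal NumPy sketch, assuming the overall (not per-item) KMO and complete data; the function name and the simulated data are illustrative, not the study's.

```python
import numpy as np


def kmo(data: np.ndarray) -> float:
    """Overall Kaiser-Meyer-Olkin measure of sampling adequacy.

    data: respondents x items matrix of numeric item scores.
    """
    corr = np.corrcoef(data, rowvar=False)
    inv = np.linalg.inv(corr)
    scale = np.sqrt(np.diag(inv))
    partial = -inv / np.outer(scale, scale)        # partial correlations between item pairs
    off_diag = ~np.eye(corr.shape[0], dtype=bool)  # ignore the diagonal in both sums
    r2 = np.sum(corr[off_diag] ** 2)
    p2 = np.sum(partial[off_diag] ** 2)
    return float(r2 / (r2 + p2))


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    n, p = 141, 6  # 141 respondents as in the study; 6 hypothetical items
    latent = rng.normal(size=(n, 1))
    correlated = latent * 0.8 + rng.normal(scale=0.6, size=(n, p))  # one common factor
    unrelated = rng.normal(size=(n, p))                             # no common factor
    print(f"KMO, one common factor: {kmo(correlated):.2f}")
    print(f"KMO, unrelated items:   {kmo(unrelated):.2f}")
```

With one strong common factor the statistic comes out well above .80, while for unrelated items the correlations and partial correlations are of similar size and the value typically lands near .5, the kind of result that would argue against factoring.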

The Bartlett test for sphericity was used to test the hypothesis that the correlation matrix was an identity matrix, i.e., that there were no factors. The test of sphericity gave chi-square = 4391.26, p < .001, indicating that factors were present in the correlation matrix.

A factor analysis utilizing principal components extraction was performed on the 45 IDEA items. The number of factors was chosen using the eigenvalue-greater-than-one criterion and the scree test. These criteria yielded eight factors accounting for 67.2 percent of the variance. A varimax rotation enhanced the interpretability of the factors. The rotated factor structure is presented in Table 2.

[Insert Table 2 about here.]

The items associated with the eight factors, and the variance accounted for prior to rotation, are presented in Table 3. The items listed in Table 3 are those with structure coefficients greater than .45 in absolute value.

[Insert Table 3 about here.]
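The Bartlett test, the eigenvalue-greater-than-one extraction, the varimax rotation, and the .45 cutoff described above can be sketched with NumPy. This is a minimal illustration under stated assumptions, not the software the study used: the function names are hypothetical, the varimax routine is the common SVD-based iteration with Kaiser row normalization (an assumption about which variant was applied), the scree test is a visual judgment and is not coded, and the demonstration data are simulated rather than the IDEA responses. Because the rotation is orthogonal, the rotated loadings are also the item-factor structure coefficients.

```python
from __future__ import annotations

import numpy as np


def bartlett_sphericity(data: np.ndarray) -> tuple[float, int]:
    """Bartlett's chi-square statistic for H0: the correlation matrix is an identity matrix."""
    n, p = data.shape
    corr = np.corrcoef(data, rowvar=False)
    _, logdet = np.linalg.slogdet(corr)  # log|R|, numerically safer than log(det(R))
    stat = -(n - 1 - (2 * p + 5) / 6) * logdet
    return float(stat), p * (p - 1) // 2


def principal_components(data: np.ndarray) -> tuple[np.ndarray, np.ndarray]:
    """All eigenvalues (largest first) and loadings of components with eigenvalue > 1."""
    corr = np.corrcoef(data, rowvar=False)
    eigvals, eigvecs = np.linalg.eigh(corr)  # eigh returns ascending order
    order = np.argsort(eigvals)[::-1]
    eigvals, eigvecs = eigvals[order], eigvecs[:, order]
    keep = eigvals > 1.0
    return eigvals, eigvecs[:, keep] * np.sqrt(eigvals[keep])


def varimax(loadings: np.ndarray, max_iter: int = 100, tol: float = 1e-6) -> np.ndarray:
    """Orthogonal varimax rotation with Kaiser row normalization."""
    h = np.sqrt(np.sum(loadings ** 2, axis=1, keepdims=True))  # square roots of communalities
    a = loadings / h
    p, k = a.shape
    rotation = np.eye(k)
    criterion = 0.0
    for _ in range(max_iter):
        lam = a @ rotation
        target = lam ** 3 - lam @ np.diag(np.sum(lam ** 2, axis=0)) / p
        u, s, vt = np.linalg.svd(a.T @ target)
        rotation = u @ vt
        new_criterion = float(np.sum(s))
        if new_criterion < criterion * (1 + tol):  # stop when the criterion stops improving
            break
        criterion = new_criterion
    return (a @ rotation) * h


def salient_items(rotated: np.ndarray, cutoff: float = 0.45) -> dict[int, list[int]]:
    """Item indices whose absolute loading exceeds the cutoff, grouped by factor."""
    return {f: np.flatnonzero(np.abs(rotated[:, f]) > cutoff).tolist()
            for f in range(rotated.shape[1])}


if __name__ == "__main__":
    # Simulated data: 141 respondents, 6 items driven by 2 latent factors.
    rng = np.random.default_rng(0)
    latent = rng.normal(size=(141, 2))
    pattern = np.array([[0.8, 0.8, 0.8, 0.0, 0.0, 0.0],
                        [0.0, 0.0, 0.0, 0.8, 0.8, 0.8]])
    items = latent @ pattern + rng.normal(scale=0.6, size=(141, 6))

    stat, df = bartlett_sphericity(items)
    eigvals, loadings = principal_components(items)
    explained = eigvals[eigvals > 1].sum() / len(eigvals)  # share of total variance retained
    print(f"Bartlett chi-square = {stat:.1f} on {df} df")
    print(f"{loadings.shape[1]} components retained, {explained:.1%} of variance")
    print(salient_items(varimax(loadings)))
```

For a p-value, `scipy.stats.chi2.sf(stat, df)` gives the upper-tail probability; with 45 items the test has 45 × 44 / 2 = 990 degrees of freedom, and the proportion printed as "of variance" corresponds to the paper's 67.2 percent figure for its eight retained factors.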

Conclusions

Interpretation of the Statistics

Factor analysis is a way of parsimoniously describing data by recognizing relationships among variables (Gorsuch, 1974). Factor analysis serves to reduce the number of variables while preserving the maximum amount of variance. Interpretation of the factors also helps to identify underlying constructs of the instrument. The correlations among the student responses on the IDEA items rating their introductory science courses can be used to generalize separate factors, each describing a distinct facet of the course experience and each uncorrelated with the other factors. The interpretation of each factor is a generalization of the items most closely associated with it.

The IDEA was designed using a series of factor analyses as a tool to describe the separate aspects of the course experience. The instrument is divided into sections reflecting the authors' interpretation of six factors (Hoyt & Cashin, 1977). Items 1-20 were related to the skill of the instructor, items 21-30 to the progress of the student, items 31-34 to the structure of the course, items 35-38 to the attitude of the student, and items 40-46 to the overall rating.

A factor analysis of the accumulated data from the IDEA student rating instrument was completed in 1992 at KSU. In a personal communication, W. E. Cashin (1992) reported obtaining seven factors with [...] extraction using a varimax rotation. The seven factors explained 76.2 percent of the [...]graduate classes from all different subject areas, drawn from the KSU database of many hundreds of classes of 30 students or more from colleges and universities nationwide.

Factor I (items 2, 3, 4, 5, 7, 8, 9, 10, 13, 14, 15, 16, 17, 18, 20, 21, 34, 37, 38, 40, 42, 44, 45, 46) was interpreted as instructor skill; Factor II (items 11, 26, 27, 28, 29, 30, 41) was interpreted as creative expression; Factor III (items 21, 22, 23, 24, 25) was interpreted as cognitive skills; Factor IV (items 12, [...]) was interpreted as examinations; Factor V [...] (items 6, 19, 33, and 43) [...] was interpreted as motivation to take the course; and Factor VII (item 1 and an item on the size of the class) was interpreted as discussion. These factors and associated items are comparable to those obtained in the present study. The factors for the present study are presented in Table 3.

In the present study, Factor I was interpreted as "instructor presentation skills." The factor was most highly saturated with items regarding instructor enthusiasm, expressiveness, [...] intellectual stimulation, and overall rating of the instructor. Example items on Factor I are "The instructor explained the course material clearly, and explanations were to the point" and "The instructor made presentations which were dry and dull."

Factor II dealt with the student's "perception of personal progress in learning and development" from the course.

The items most highly correlated with this factor asked the student to compare this course with other college courses on the basis of learning facts, principles, applications, skills, how professionals utilize this knowledge, developing creativity, self-discipline, understanding, positive attitudes, and total [...] factor of personal accomplishment, learning, and competence in cognitive areas gained from taking the course. The focus of the items was on the personal experience of the course rather than on the instructor. Example items on this factor are the student's rating of progress on "learning to apply course material to improve rational thinking, problem-solving and decision making" and "developing skill in expressing myself orally or in writing."

Factor III dealt with teacher-student interactions that helped the student develop creatively and intellectually. The associated items concerned how the instructor helped the students answer their own questions, encouraged self-expression, promoted discussion, and encouraged thinking. Example items for this factor are "The instructor encouraged students to express themselves freely and openly" and "The instructor promoted teacher-student discussion (as opposed to mere responses to questions)." This factor was interpreted as "creative and intellectual development."

Factor IV involved the course examinations: the quality of questions, the amount of memorization, and the detail and importance of material on examinations and assignments. An example item on this factor is "The instructor gave examination questions which were unreasonably detailed (picky)." This factor was interpreted as the "nature of examinations."

Factor V evaluated the student's "interest in the [...]." The associated items were again concerned with the course, not the instructor. An example item is "I had a strong desire to take this course."

Factor VI was concerned with the difficulty and effort involved in the course. The associated items were about the amount of non-reading assignments, difficulty, and how hard the student worked compared to other courses. This factor was interpreted as "course rigor." An example item is "I worked harder on this course than on most courses I have taken."

Only one item, involving the instructor's explanation of criticisms of students' answers, was highly correlated with Factor VII. This item may be more related to a course involving considerable free discussion, such as a literature or social science course, than to an introductory science course.

Only one item, concerned with the amount of reading required, was highly correlated with Factor VIII. Although the reading in introductory science courses is often intense, requiring reading for details and concepts, the reading assignments are usually not as extensive as in literature or social science courses.

Factor VII and Factor VIII are not considered reliable, since each factor was strongly associated with only one item.

The factor analyses from the KSU database and the present study rendered similar factors. The slight differences could be due to sample size and make-up.

The rating units for Cashin's factor analysis were class averages, while for the present study the unit was the individual student. The Cashin sample included all courses from many different schools, whereas the present sample included only science, mathematics, and engineering courses. In spite of these differences in samples, the factors were remarkably similar, suggesting that the factors are stable.

Discussion

The importance of these factors, particularly the first three, is that they distinguish instructor presentation from the personal aspect of the student taking the course. In the work cited (Light, 1990, 1992; Hewitt & Seymour, 1991, 1992; Seymour, 1992a, [...]) presentation of content was not seen as a problem for most students. The personal feelings of intense competition, isolation, and personal development were most often pointed out as problems in science [...] presentation skills of the instructor and accounted for most of the total variation. However, most items in the IDEA were directed to this aspect of instruction, as are those of most student rating questionnaires. Presentation is the aspect of teaching into which instructors put much energy, such as preparing lectures and audio-visuals, choosing texts, etc. It is an important part of teaching because it is necessary to present content in a coherent way. In addition, presentation is the aspect of teaching most readily judged by peers, students, and administrators.

However, student ratings should not dwell on [...] the exclusion of other facets of [...]
