Transcription

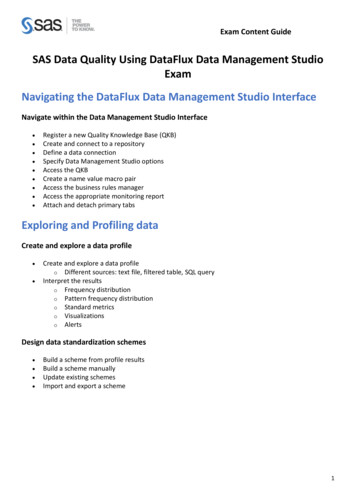

Exam Content Guide

SAS Data Quality Using DataFlux Data Management Studio Exam

Navigating the DataFlux Data Management Studio Interface

- Navigate within the Data Management Studio Interface
  - Register a new Quality Knowledge Base (QKB)
  - Create and connect to a repository
  - Define a data connection
  - Specify Data Management Studio options
  - Access the QKB
  - Create a name value macro pair
  - Access the business rules manager
  - Access the appropriate monitoring report
  - Attach and detach primary tabs

Exploring and Profiling data

- Create and explore a data profile
  - Create and explore a data profile
    - Different sources: text file, filtered table, SQL query
  - Interpret the results
    - Frequency distribution
    - Pattern frequency distribution
    - Standard metrics
    - Visualizations
    - Alerts
- Design data standardization schemes
  - Build a scheme from profile results
  - Build a scheme manually
  - Update existing schemes
  - Import and export a scheme

Data Jobs

- Create Data Jobs
  - Rename output fields
  - Add nodes and preview nodes
  - Run a data job
  - View a log and settings
  - Work with data job settings and data job displays
  - Best practices (ensure you are following a particular best practice such as inserting notes, establishing naming conventions)
  - Work with branching
  - Join tables
  - Apply the Field layout node to control field order
  - Work with the Data Validation node:
    - Add it to the job flow
    - Specify properties/review properties
    - Edit settings for the Data Validation node
  - Work with data inputs
  - Work with data outputs
  - Profile data from within data jobs
  - Interact with the Repository from within Data Jobs
  - Debug levels for logging
  - Determine how data is processed
  - Set sorting properties for the Data Sorting Node
- Apply a Standardization definition and scheme
  - Use a definition
  - Use a scheme
  - Determine the differences between definition and scheme
  - Explain what happens when you use both a definition and scheme
  - Review and interpret standardization results
  - Explain the different steps involved in the process of standardization
- Apply Parsing definitions
  - Distinguish between different data types and their tokens
  - Review and interpret parsing results
  - Explain the different steps involved in the process of parsing
  - Use parsing definition
  - Interpret parse result codes

- Apply Casing definitions
  - Describe casing methods: upper/lower/proper
  - Explain different techniques for accomplishing casing
  - Use casing definition
- Compare and contrast the differences between identification analysis and right fielding nodes
  - Review results
  - Explain the technique used for identification (process of definition)
- Apply the Gender Analysis node to determine gender
  - Use gender definition
  - Interpret results
  - Explain different techniques for conducting gender analysis
- Create an Entity Resolution Job
  - Use a clustering node in a data job and explain its use
  - Survivorship (surviving record identification)
    - Record rules
    - Field rules
    - Options for survivorship
  - Discuss and apply the Cluster Diff node
  - Apply Cross-field matching
  - Entity resolution file output node
  - Use the Match Codes Node to select match definitions for selected fields.
    - Outline the various uses for match codes (join)
    - Use the definition
    - Interpret the results
    - Match versus match parsed
    - Explain the process for creating a match code
    - Select sensitivity for a selected match definition
    - Apply matching best practices
- Use data job references within a data job
  - Use of external data provider node
  - Use of data job reference node
  - Define a target node
  - Explain why you would want to use a data job reference (best practice)
  - Real-time data service

- Understand how to use an Extraction definition
  - Interpret the results
  - Explain the process of the definition
- Explain the process of the definition of pattern analysis

Business Rules Monitoring

- Define and create business rules
  - Use Business Rules Manager
  - Create a new business rule
    - Name/label rule
    - Specify type of rule
    - Define checks
    - Specify fields
  - Distinguish between different types of business rules
    - Row
    - Set
    - Group
  - Apply business rules
    - Profile
    - Execute business rule node
  - Use of Expression Builder
  - Apply best practices
- Create new tasks
  - Understand events
    - Log error to repository
    - Set a data flow/key value
    - Log error to a text file
    - Write the row to a table
  - Applying tasks
    - Explain purpose of the data monitoring node
  - Review a data monitoring job log
  - Review a monitoring report
    - Trigger values
    - Filters

Data Management Server

- Interact with the Data Management Server
  - Import/export jobs (special case profile)
  - Test service
  - Run history/job status
  - Identify the required configuration components (QKB, data, reference sources, and repository)
  - Security, the access control list
  - Creation and use of WSDL

Expression Engine Language (EEL)

- Explain the basic structure of EEL (components and syntax)
  - Identify basic structural components of the code
    - Statements
    - Functions
    - Declarations
- Use EEL
  - Profile
  - Expression node (data job)
    - Tabs (expression, grouping, etc)
    - Order of Operations (pre/post, etc)
  - Expression node (process job)
  - Business rules
  - Custom metrics
    - Use in profile
    - Use in data job (execute custom metric node)
    - Use in business rule
  - Use in data validation node

Process Jobs

- Work with and create process jobs
  - Add nodes and explain what nodes do
  - Interpret the log
  - Parameterizing process jobs
  - Identify Run options
  - Using different functionality in process jobs
    - If/then logic
    - Echo
    - Fork
    - Parallel iterator
    - Events and event handling (event listener)
    - Global get/set
    - Expression code features
    - Declaration of events
    - Set output slot
  - Embedded data job and data job reference
  - Using Work tables, process flow worktable reader
  - SAS code execution
  - SQL

Macro Variables and Advanced Properties and Settings

- Work with and use macro variables in data profiles, data jobs and data monitoring
  - Define macro variables:
    - In DM studio
    - In Configuration files
    - With Command line
    - Dynamic
  - Use macro variables:
    - In a profile
    - In expression code
    - In a data job
    - In a process job
    - In business rules
  - Determine Scoping/precedence (order in which macros are read)
  - Compare/Contrast DM Studio versus DM Server

- Determine uses for advanced properties
  - Multi-locale
    - Use locale guessing
    - Use with a scheme
    - Locale list and locale field
  - Apply setting for Max output rows

Quality Knowledge Base (QKB)

- Describe the organization, structure and basic navigation of the QKB
  - Identify and describe locale levels (global, language, country)
  - Navigate the QKB (tab structure, copy definitions, etc)
  - Identify data types and tokens
- Be able to articulate when to use the various components of the QKB. Components include:
  - Regular expressions
  - Schemes
  - Phonetics library
  - Vocabularies
  - Grammar
  - Chop Tables
- Define the processing steps and components used in the different definition types.
  - Identify/describe the different definition types
    - Parsing
    - Standardization
    - Match
    - Identification
    - Casing
    - Extraction
    - Locale guess
    - Gender
    - Patterns
  - Explain the interaction between different definition types (with one another, parse within match, etc)

Note: All nine sections and 24 main objectives will be tested on every exam. The expanded objectives are provided for additional explanation and define the entire domain that could be tested.
