Transcription

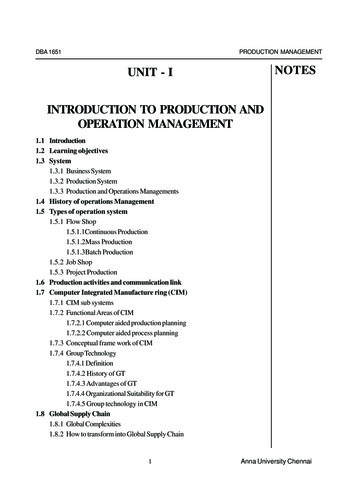

Microsoft’s Production Configurable Cloud

Derek Chiou, Microsoft Azure Cloud Silicon and UT Austin

H2RC, Nov 14, 2016

Today’s Data Centers
- O(100K) servers per data center
  - Very dense, to maximize the number of servers
  - Tens of megawatts
  - Strict power and cooling requirements
  - Secure, hot, noisy
- Incrementally upgraded
  - 3-year server depreciation, upgraded quarterly
- Applications change very rapidly (weekly, monthly)
- Many advantages, including economies of scale, data all in one place, etc.
- At data center scale, an improvement does not need to be an order of magnitude to make sense
  - Positive ROI is easier to achieve at large scale
- How can we improve efficiencies?

Efficiency via Specialization

[Figure: chart with FPGAs and ASICs labeled. Source: Bob Brodersen, Berkeley Wireless group]

What Does a Data Center Server With an FPGA Look Like?

Depends on your point of view

Classic View of Computer

[Diagram: CPU, DRAM, storage, network]

Networking View of Computer

[Diagram: CPU, DRAM, storage, network, and a network “offload” accelerator (Acc)]

“Offload” Accelerator View of Server

[Diagram: CPU, DRAM, storage, NIC, network, Intel MCP, and three accelerators (Acc)]

Our View of a Data Center

Benefits
- Software receives packets slowly
  - Interrupt or polling
  - Parse packet, start the right work
- FPGA processes every packet anyway
  - Packet arrival is an event that the FPGA deals with
  - Identify FPGA work, pass CPU work to the CPU
- Map common-case work to the FPGA
  - Processor never sees the packet
  - Can read/modify system memory to keep app state consistent
  - CPU is a complexity offload engine for the FPGA!
- Many possibilities
  - Distributed machine learning
  - Software-defined networking
  - Memcached get
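The "map common-case work to the FPGA, pass CPU work to the CPU" idea above can be sketched as a small dispatch loop. This is only an illustration in software, not the deck's implementation: the packet kinds (`get`, `set`), the `PacketFrontEnd` class, and the `cpu_slow_path` function are all hypothetical names, and a Python dict stands in for app state held in system memory.

```python
from dataclasses import dataclass


@dataclass
class Packet:
    kind: str          # hypothetical labels: "get", "set", or anything else
    key: str = ""
    value: str = ""


def cpu_slow_path(pkt, store):
    """Complex or rare work stays in software (the 'complexity offload')."""
    if pkt.kind == "set":
        store[pkt.key] = pkt.value
        return "STORED"
    if pkt.kind == "get":
        return "MISS"
    return "UNSUPPORTED"


class PacketFrontEnd:
    """Toy stand-in for an FPGA that sees every packet before the CPU does."""

    def __init__(self, store):
        self.store = store   # shared state, standing in for system memory
        self.fast = 0        # packets answered without involving the CPU
        self.slow = 0        # packets handed to the CPU

    def on_packet(self, pkt):
        # Common case: a get for a key that is present. The CPU never sees it.
        if pkt.kind == "get" and pkt.key in self.store:
            self.fast += 1
            return self.store[pkt.key]
        # Everything else is passed to the CPU.
        self.slow += 1
        return cpu_slow_path(pkt, self.store)


front = PacketFrontEnd({})
replies = [front.on_packet(p) for p in (
    Packet("set", "a", "1"),
    Packet("get", "a"),
    Packet("get", "b"),
)]
```

In this toy run the second packet is the only one served on the fast path; the set and the missing-key get both fall through to the software handler.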

Converged Bing/Azure Architecture

[Diagram: WCS 2.0 server blade with a Catapult v2 mezzanine card. Labels: two CPUs linked by QPI, DRAM, FPGA with its own DRAM, NIC, Gen3 2x8, Gen3 x8, QSFP ports, 40Gb/s links, switch]

[Photo: WCS Gen4.1 blade with Mellanox NIC and Catapult FPGA. Labels: Pikes Peak, option card mezzanine connectors, WCS tray backplane]

Completely flexible architecture
1. Local compute accelerator
2. Remote compute accelerator
3. Network/storage accelerator

Network Connectivity (IP)

Case 1: Local compute accelerator

Bing Ranking as a Service

Bing Document Ranking Flow

[Diagram: Selection as a Service and Ranking as a Service (RaaS) stages with IFM blocks, ending in the “10 blue links”]

Selection-as-a-Service (SaaS)
- Find all docs that contain query terms
- Filter and select candidate documents for ranking

Ranking-as-a-Service (RaaS)
- Compute scores for how relevant each selected document is for the search query
- Sort the scores and return the results
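The two-stage flow described above (select candidates, then score and sort them) can be sketched in a few lines. This is a toy illustration only: the scoring function here is a made-up term count, not Bing's ranking model, and the choice to require every query term during selection is an assumption.

```python
def select(docs, query_terms):
    """Selection stage: keep docs containing every query term (an assumption)."""
    terms = [t.lower() for t in query_terms]
    return [d for d in docs if all(t in d.lower().split() for t in terms)]


def score(doc, query_terms):
    """Hypothetical relevance score: total occurrences of the query terms."""
    words = doc.lower().split()
    return sum(words.count(t.lower()) for t in query_terms)


def rank(candidates, query_terms, top_k=10):
    """Ranking stage: score each candidate, sort by score, return the top results."""
    scored = [(score(d, query_terms), d) for d in candidates]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return scored[:top_k]


docs = [
    "fpga cloud fpga",
    "cloud storage",
    "fpga fpga fpga cloud",
    "fpga only",
]
query = ["fpga", "cloud"]
candidates = select(docs, query)
results = rank(candidates, query)
```

Only the two documents containing both terms survive selection, and the one with more term occurrences sorts first.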

FE: Feature Extraction

[Figure: feature extraction for an example query beginning “FPGA. Recoverable labels: NumberOfOccurrences, NumberOfTuples, 4K DynamicFeatures, 2K SyntheticFeatures, L2Score, Score]

Feature Extraction Accelerator

[Diagram labels: compressed document, PCIe, Free Form Expression (FFE), stream preprocessing FSM, control/data tokens, distribution latches, feature gathering network]

Bing Production Results

[Plots: “HW vs. SW Latency and Load” and “99.9% Query Latency versus Queries/sec”, comparing software and FPGA. Series: 99.9% software latency, 99.9% FPGA latency, average FPGA query load, average software load]

Case 2: Remote accelerator

Feature Extraction FPGA faster than needed
- A single feature extraction FPGA is much faster than a single server
- Wasted capacity and/or wasted FPGA resources
- Two choices
  - Somehow reduce performance and save FPGA resources
  - Allow multiple servers to use a single FPGA?
- Use the network to transfer requests and return responses
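The capacity argument above comes down to simple arithmetic. The sketch below uses invented rates (10,000 and 1,500 documents per second), since the slides give no figures; only the shape of the calculation is meant to carry over.

```python
def servers_per_fpga(fpga_rate, server_rate):
    """How many servers one FPGA can serve without being oversubscribed."""
    return fpga_rate // server_rate


def utilization(fpga_rate, server_rate, servers):
    """Fraction of FPGA capacity used when `servers` servers share it."""
    return servers * server_rate / fpga_rate


# Hypothetical rates in documents per second; not taken from the slides.
FPGA_RATE = 10_000
SERVER_RATE = 1_500

local_only = utilization(FPGA_RATE, SERVER_RATE, 1)
sharers = servers_per_fpga(FPGA_RATE, SERVER_RATE)
shared = utilization(FPGA_RATE, SERVER_RATE, sharers)
```

Under these made-up numbers a locally attached FPGA would sit mostly idle, while sharing it among several servers over the network uses most of its capacity.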

Inter-FPGA communication
- FPGAs can encapsulate their own UDP packets
- Low-latency inter-FPGA communication (LTL)
- Can provide strong network primitives
- But this topology opens up other opportunities
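To make "encapsulate their own UDP packets" concrete, here is a minimal sketch that builds a standard 8-byte UDP header (source port, destination port, length, checksum) around a message. The inner message header (connection id plus sequence number) is purely hypothetical; the slides do not describe the LTL wire format. The checksum is left at zero, which IPv4 permits to mean "not computed".

```python
import struct

UDP_HEADER = struct.Struct("!HHHH")  # src port, dst port, length, checksum
MSG_HEADER = struct.Struct("!HI")    # hypothetical: connection id, sequence number


def encapsulate(src_port, dst_port, conn_id, seq, payload):
    """Wrap a payload in the hypothetical message header, then in a UDP header."""
    body = MSG_HEADER.pack(conn_id, seq) + payload
    length = UDP_HEADER.size + len(body)   # UDP length covers header plus data
    return UDP_HEADER.pack(src_port, dst_port, length, 0) + body


def decapsulate(datagram):
    """Recover the fields written by encapsulate()."""
    src_port, dst_port, length, _checksum = UDP_HEADER.unpack_from(datagram, 0)
    conn_id, seq = MSG_HEADER.unpack_from(datagram, UDP_HEADER.size)
    payload = datagram[UDP_HEADER.size + MSG_HEADER.size:length]
    return src_port, dst_port, conn_id, seq, payload


datagram = encapsulate(5000, 5001, 7, 42, b"rank-request")
fields = decapsulate(datagram)
```

The port numbers and payload are placeholders; a real deployment would also need the IP and Ethernet layers, which this sketch omits.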

Lightweight Transport Layer (LTL) Latencies

[Plot: round-trip latency (us) versus number of reachable hosts/FPGAs. Series: LTL average latency, LTL 99.9th percentile, and 6x8 torus latency (the 6x8 torus can reach up to 48 FPGAs). Regions: LTL L0 (same TOR), LTL L1, LTL L2. Insets: example L0 latency histogram, example L1 latency histogram, examples of L2 latency histograms for different pairs]
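For comparison with the 6x8 torus mentioned in the plot, the sketch below counts nodes and hop distances in a 6x8 2D torus. It assumes shortest-path routing with wraparound in each dimension, which is a textbook model and not something the slides specify.

```python
def ring_distance(a, b, size):
    """Shortest distance between two positions on a ring of `size` nodes."""
    d = abs(a - b) % size
    return min(d, size - d)


def torus_hops(src, dst, rows=6, cols=8):
    """Minimum hop count between (row, col) nodes in a rows x cols torus."""
    return (ring_distance(src[0], dst[0], rows)
            + ring_distance(src[1], dst[1], cols))


nodes = [(r, c) for r in range(6) for c in range(8)]
node_count = len(nodes)
# By symmetry, the farthest node from (0, 0) gives the network diameter.
diameter = max(torus_hops((0, 0), n) for n in nodes)
corner_hops = torus_hops((0, 0), (5, 7))
```

The node count matches the 48 FPGAs quoted on the slide; the opposite corner is only two hops away because both dimensions wrap around.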

Hardware Acceleration as a Service Across Data Center (or even across Internet)

[Diagram: CS and ToR switches; workloads labeled HPC, Bing Ranking HW, Speech to text, Large-scale deep learning, Bing Ranking SW]

BrainWave: Scaling FPGAs To Ultra-Large DNN Models
- Distribute NN models across as many FPGAs as needed (up to thousands)
- Use HaaS and LTL to manage multi-FPGA execution
- Very close to live production
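One simple way to picture "distribute NN models across as many FPGAs as needed" is a greedy split of consecutive layers into groups that each fit one device. The slides do not say how BrainWave partitions models, so the strategy, the `partition_layers` name, and the size units below are all assumptions made for illustration.

```python
def partition_layers(layer_sizes, capacity):
    """Greedily pack consecutive layers into groups that fit one device.

    layer_sizes: per-layer resource cost in arbitrary (hypothetical) units.
    capacity: resource budget of a single device in the same units.
    Returns a list of groups, each a list of layer indices.
    """
    groups, current, used = [], [], 0
    for index, size in enumerate(layer_sizes):
        if size > capacity:
            raise ValueError(f"layer {index} does not fit on one device")
        if used + size > capacity:
            # Current device is full: close its group and start a new one.
            groups.append(current)
            current, used = [], 0
        current.append(index)
        used += size
    if current:
        groups.append(current)
    return groups


groups = partition_layers([4, 3, 5, 2, 6, 1], capacity=8)
devices_needed = len(groups)
```

Keeping layers contiguous means each device only has to forward activations to the next one, which is the kind of traffic a low-latency inter-FPGA link would carry.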

BrainWave Publicly Demoed
- Ignite 2016
- Translation DNN running on FPGAs
- 2 orders of magnitude lower latency than CPU implementation
- 10% of power

Case 3: Networking accelerator

FPGA SmartNIC for Cloud Networking
- Azure runs Software Defined Networking on the hosts
  - Software Load Balancer, Virtual Networks: new features each month
- Before, we relied on ASICs to scale and to be COGS-competitive at 40G
- But the 12 to 18 month ASIC cycle time to roll out new HW is too slow to keep up with SDN
- SmartNIC gives us the agility of SDN with the speed and COGS of HW
- Base SmartNIC provides common functions like crypto, GFT, QoS, RDMA on all hosts

[Diagram: VFP rule/action layers (SLB Decap, SLB NAT, VNET, ACL, Metering) feeding a flow/action table on the SmartNIC. Example entry: flow 1.2.3.1 -> 1.3.4.1, 62362 -> 80 with actions Decap, DNAT, Rewrite, Meter. Other labels: VMSwitch, VM, SR-IOV (Host Bypass), Crypto, RDMA, QoS, 50G]
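The rule/action layers and the per-flow action list in the diagram suggest a match-action pipeline with a flow cache: the first packet of a flow walks every layer, and later packets reuse the cached action list. The sketch below models that reading in software. The layer functions, their rules, and the `FlowTable` class are hypothetical, and the caching behavior is an interpretation of the diagram, not a description of the actual SmartNIC.

```python
# A flow is (source IP, destination IP, source port, destination port).
# Each layer returns an action name, None for "no action", or "Deny".

def slb_decap(flow):
    return "Decap"

def slb_nat(flow):
    return "DNAT" if flow[3] == 80 else None

def vnet(flow):
    return "Rewrite"

def acl(flow):
    # Allowed traffic adds no packet action; anything else is denied.
    return None if flow[3] in (80, 443) else "Deny"

def metering(flow):
    return "Meter"

LAYERS = [slb_decap, slb_nat, vnet, acl, metering]


class FlowTable:
    def __init__(self, layers):
        self.layers = layers
        self.cache = {}             # flow -> composed action list
        self.slow_path_lookups = 0  # how often the full layer walk ran

    def actions_for(self, flow):
        if flow in self.cache:      # later packets of a known flow
            return self.cache[flow]
        self.slow_path_lookups += 1
        actions = []
        for layer in self.layers:   # first packet: evaluate every layer
            action = layer(flow)
            if action == "Deny":
                actions = ["Drop"]
                break
            if action is not None:
                actions.append(action)
        self.cache[flow] = actions
        return actions


table = FlowTable(LAYERS)
flow = ("1.2.3.1", "1.3.4.1", 62362, 80)
first = table.actions_for(flow)
second = table.actions_for(flow)
blocked = table.actions_for(("1.2.3.1", "1.3.4.1", 62362, 23))
```

The example flow reuses the addresses and ports from the slide and composes the same four actions; the port-23 flow is an invented case showing a deny rule short-circuiting the pipeline.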

Azure Accelerated Networking
- SR-IOV turned on
  - VM accesses NIC hardware directly, sends messages with no OS/hypervisor call
- FPGA determines the flow of each packet, rewrites the header to make it data center compatible
- Reduces latency to roughly bare metal
- Azure now has the fastest public cloud network
  - 25Gb/s at 25us latency
- Fast crypto developed

[Diagram: VM guest OS, hypervisor with VFP, NIC, GFT/FPGA]

We Are Hiring and Collaborating
- We are hiring FPGA and software folks
- Academic engagements
  - Research.Microsoft.com/catapult
  - Will provide boards to a limited number of academics (1 page proposal)
  - Will be giving access to clusters of up to 48 at TACC
  - Research grants
  - Internships
- Please contact me if you’re interested
  - dechiou@microsoft.com
  - catapult@Microsoft.com

Will Configurable Clouds Change the World?
- Being deployed for all new Azure and Bing machines
  - Many other properties as well
- Ability to reprogram a datacenter’s hardware
  - Specialized compute acceleration
  - Networking, storage, security
  - Can turn homogeneous machines into specialized SKUs dynamically
- Hyperscale performance with low latency communication
  - Exa-ops of performance with an O(10us) diameter
- What should we do with the world’s most powerful configurable fabric?

