Transcription

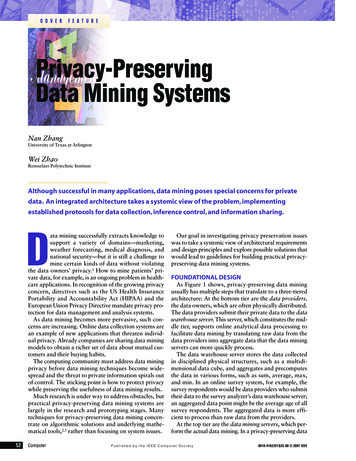

C O V E RF E A T U R EPrivacy-PreservingData Mining SystemsNan ZhangUniversity of Texas at ArlingtonWei ZhaoRensselaer Polytechnic InstituteAlthough successful in many applications, data mining poses special concerns for privatedata. An integrated architecture takes a systemic view of the problem, implementingestablished protocols for data collection, inference control, and information sharing.Data mining successfully extracts knowledge tosupport a variety of domains—marketing,weather forecasting, medical diagnosis, andnational security—but it is still a challenge tomine certain kinds of data without violatingthe data owners’ privacy.1 How to mine patients’ private data, for example, is an ongoing problem in healthcare applications. In recognition of the growing privacyconcern, directives such as the US Health InsurancePortability and Accountability Act (HIPAA) and theEuropean Union Privacy Directive mandate privacy protection for data management and analysis systems.As data mining becomes more pervasive, such concerns are increasing. Online data collection systems arean example of new applications that threaten individual privacy. Already companies are sharing data miningmodels to obtain a richer set of data about mutual customers and their buying habits.The computing community must address data miningprivacy before data mining techniques become widespread and the threat to private information spirals outof control. The sticking point is how to protect privacywhile preserving the usefulness of data mining results.Much research is under way to address obstacles, butpractical privacy-preserving data mining systems arelargely in the research and prototyping stages. Manytechniques for privacy-preserving data mining concentrate on algorithmic solutions and underlying mathematical tools,2,3 rather than focusing on system issues.52ComputerOur goal in investigating privacy preservation issueswas to take a systemic view of architectural requirementsand design principles and explore possible solutions thatwould lead to guidelines for building practical privacypreserving data mining systems.FOUNDATIONAL DESIGNAs Figure 1 shows, privacy-preserving data miningusually has multiple steps that translate to a three-tieredarchitecture: At the bottom tier are the data providers,the data owners, which are often physically distributed.The data providers submit their private data to the datawarehouse server. This server, which constitutes the middle tier, supports online analytical data processing tofacilitate data mining by translating raw data from thedata providers into aggregate data that the data miningservers can more quickly process.The data warehouse server stores the data collectedin disciplined physical structures, such as a multidimensional data cube, and aggregates and precomputesthe data in various forms, such as sum, average, max,and min. In an online survey system, for example, thesurvey respondents would be data providers who submittheir data to the survey analyzer’s data warehouse server;an aggregated data point might be the average age of allsurvey respondents. The aggregated data is more efficient to process than raw data from the providers.At the top tier are the data mining servers, which perform the actual data mining. In a privacy-preserving dataPublished by the IEEE Computer Society0018-9162/07/ 25.00 2007 IEEE

mining system, these serversdo not have free access to allInformation sharingdata in the data warehouse.Data Mining System 1Data Mining System 2In a hospital system, theaccounting department canmine patients’ financial data,for example, but cannotData mining serversData mining serversaccess patients’ medicalrecords. Developing and validating effective rules for thedata mining servers’ accessto the data warehouse is anData warehouse serverData warehouse serveropen research problem.4Besides constructing datamining models on its localdata warehouse server, adata mining server mightData providersData providersshare information with datamining servers from othersystems. The motivation forthis sharing is to build data Figure 1. Basic architecture for privacy-preserving data mining.The architecture typicallymining models that span has three tiers: data providers, which are the data owners; the data warehouse server, whichsystems. For example, sev- supports online analytical processing; and the data mining servers that perform data miningeral retail companies might tasks and share information. The challenge is to control private information transmittedopt to share their local data among entities without impeding data mining.mining models on customerrecords to build a global data mining model about con- Minimum thus means that privacy disclosure is on asumer behavior that would benefit all the companies. need-to-know basis. Many privacy regulations, includAs Figure 1 shows, sharing occurs in the top tier, where ing HIPAA, mandate this minimum necessary rule.each data mining server holds the data mining model ofits own system. Thus, “sharing” means sharing local Privacy protocolsdata mining models rather than raw data.On the basis of the architecture in Figure 1 and theminimum necessary design principle, we have evolved“Minimum necessary” design principlea basic strategy for building a privacy-preserving dataAny design of a privacy-preserving data mining system mining system. Central to the strategy are three protorequires a clear definition of privacy. The common inter- cols that govern privacy disclosure among entities:pretation is that a data point is private if its owner hasthe right to choose whether or not, to what extent, and Data collection protects privacy during data transfor what purpose to disclose the data point to others. Inmission from the data providers to the data wareprivacy-preserving data mining literature, most authorshouse server.assume (either implicitly or explicitly) that a data owner Inference control manages privacy protection betweengenerally chooses not to disclose its private data unlessthe data warehouse server and data mining servers.data mining requires it. This assumption and the Information sharing controls information sharedaccepted information-privacy definition form the basisamong the data mining servers in different systems.of the “minimum necessary” design principle:Given the minimum necessary rule, a common goalIn a data mining system, disclosed private informationof these protocols is to transmit the minimum private(from one entity to another) should be the minimuminformation necessary for data mining from one entitynecessary for data mining.to another to build accurate data mining models. In reality, it is often difficult to build an efficient system thatMinimum in this context is a qualitative, not a quan- protects private information perfectly. Consequently,titative, measure. Since the quantitative measure of pri- there are always tradeoffs between data privacy and datavacy disclosure varies among systems, minimum mining model accuracy. These protocols are based oncaptures the idea that all unnecessary private informa- established methods that the system designer can tailortion (unnecessary in the context of how accurate the to particular requirements, choosing the most beneficialdata mining results must be) should not be disclosed. tradeoffs. The data collection protocol, for example, canApril 200753

can be effective in guaranteeing the data’s anonymity6—k-anonymity, for example,means that each perturbeddata record is indistinguishValue-based methodDimension-based methodable from the perturbed values of at least k–1 other happroachThe value-based methodassumes that it would be difficult, if not impossible, forFigure 2. Data collection protocol taxonomy. A designer can choose which of two methods—the data warehouse server tovalue- or dimension-based—and its attendant approaches best serve the design.rediscover the original private data from the manipulated values but that the server would still be able todraw from one of two established collection methods, recover the original data distribution from the perturbedeach with its advantages and drawbacks.data, thereby supporting the construction of accuratedata mining models.5Data collection protocolDATA COLLECTION PROTOCOLThe data collection protocol lets data providers identify the minimum necessary part of private information—what must be disclosed to build accurate data miningmodels—and ensures that they transmit only that partof the information to the data warehouse server.Several requirements shape the data collection protocol. First, it must be scalable, since a data warehouseserver can deal with as many as hundreds of thousandsof data providers, as in an online survey system. Second,the computational cost to data providers must be smallbecause they have considerably lower computationalpower than the data warehouse server, and a higher costcould discourage them from participating in data mining.Finally, the protocol must be robust; it must deliver relatively accurate data mining results while protecting dataproviders’ privacy, even if data providers behave erratically. For example, if some data providers in an onlinesurvey system deviate from the protocol or submit meaningless data, the data collection protocol must controlthe influence of such erroneous behavior and ensure thatglobal data mining results remain sufficiently accurate.Figure 2 shows a data collection protocol taxonomybased on two data collection methods.Value-based methodWith the value-based method,5 a data providermanipulates the value of each data attribute or itemindependently using one of two approaches. The perturbation-based approach3 adds noise directly to theoriginal data values, such as changing age 23 to 30 orTexas to California. The aggregation-based approachgeneralizes data according to the relevant domain hierarchy, such as changing age 23 to age range 21-25 orTexas to the US.The perturbation-based approach is highly suitablefor arbitrary data, while the aggregation-based approachrelies on knowledge of the domain hierarchy, but54ComputerDimension-based methodThe dimension-based method is so called because thedata to be mined usually has many attributes, or dimensions. The basic idea is to remove part of the privateinformation from the original data by reducing the number of dimensions. The blocking-based approach3accomplishes this by truncating some private attributeswithout releasing them to the data warehouse server.However, this approach could result in information loss,preventing data mining servers from constructing accurate data mining models. The more complicated projection-based approach7 overcomes this problem byprojecting the original data into a carefully designed,low-dimensional subspace in a way that retains only theminimum information necessary to construct accuratedata mining models.Advantages and drawbacksEach method and attendant approach has pluses andminuses. The value-based method is independent of thedata mining task, which makes it suitable for applications involving multiple data mining tasks or tasksunknown at data collection. In contrast, the dimensionbased method fits better with individual data miningtasks because the information to be retained afterdimension reduction usually depends on the particulartask.So far, research has not defined an effective and universally applicable projection-based approach. Even so,the projection-based approach promises strong advantages over value-based methods in terms of the tradeoffbetween accuracy and privacy protection.Most value-based approaches treat different attributes independently and separately, so at least someattributes that are less necessary for data mining arealways disclosed to the data warehouse server to thesame extent as other attributes. Indeed a recent study

revealed that, with the perturbation-based randomization approach, the data warehouseItemAprilMayJuneJulySumserver could use privacy intrusion techniquesto filter noise from the perturbed data, therebyBook10Known15KnownQ5 25rediscovering part of the original private data.8CD20Known27KnownQ6 47The projection-based approach avoids thisDVDKnown351636Q7 87problem by exploiting the relationship amongGameKnown25Known14Q8 39attributes and disclosing only those necessarySumQ1 30Q2 60Q3 58Q4 50for data mining.Guiding data submission can also reduceunnecessary privacy disclosure, enhancing Figure 3. Inference that discloses private information. If the data miningthe performance of data perturbation. In ear- server becomes an adversary, it might be able to infer from the querylier work,7 we and colleague Shengquan answers and certain cells (Known) the number of DVDs a data providerWang proposed a guidance-based dimension sold in June (which is private and should not be disclosed) by computingreduction scheme for dynamic systems, such Q1 Q3 – (Q5 Q6 ) 88 – 72 16, where Q1 to Q8 are query answers.as online survey systems, in which dataproviders (survey respondents and so on) join the sysFigure 4 shows an inference control protocol taxontem and submit their data asynchronously. To guide omy based on two inference control methods.data providers that have not yet submitted data, thescheme analyzes the data already collected and esti- Query-oriented methodmates the attributes necessary for data mining. TheThe query-oriented method4 is centered on the consystem then sends the estimated useful attributes to cept of a safe query set, which says that query set Q1,data providers as guidance. Our work shows that this Q2, , Qn is safe if a data mining server cannot inferguidance-based scheme is more effective than private data from the answers to Q1, Q2, , Qn. Thus,approaches without such guidance.query-oriented inference control means that when thedata warehouse server receives a query, it will answerINFERENCE CONTROL PROTOCOLthe query only if the union set of query history—theProtecting private data in the data warehouse server set of all queries already answered—and the recentlyrequires controlling the information disclosed to the received query are safe. Otherwise, it will reject thedata mining servers—which is the aim of the inference query. Relative to query-oriented inference control incontrol protocol. Following the minimum necessary statistical databases, inference control in data warerule, the inference control protocol ensures that the data houses involves significantly more data. Consequently,warehouse server answers the queries necessary for data the burden is on inference control protocols to processmining yet minimizes privacy disclosure.queries more efficiently.Several requirements drive the inference control proBecause dynamically determining a query set’s safetytocol’s design and implementation. One is the need to (online query history check) can be time-consuming, ablock inferences. If a data mining server becomes an static version of the query-oriented method might beadversary, it will try to infer private information from more suitable. The static version determines a safe setthe query answers it has already received. Figure 3 gives of queries offline (before any query is actually received).an example.If a query set is safe, then any one of its subsets is alsoFurther, the inference control protocol must be effi- safe. At runtime, when the data warehouse servercient enough to satisfy the datawarehouse server’s required onlineresponse time—the time betweenInference control protocolissuing a query and answering it.The time that an inference controlprotocol uses is part of that responseQuery-oriented methodData-oriented methodtime. It must be controlled so thatthe data warehouse server can maintain its reduced response time.Classify safeDo perturbationCheck queryDo perturbationTo meet these requirements, inferand unsafe setsonline when queryhistory onlineby data collectionofflinereceivedence control protocols must restrictthe information included in thequery answers so that the data mining server cannot infer private data Figure 4. An inference control protocol taxonomy. A designer can choose which of twomethods—query- or data-oriented—best serves the design.from received query answers.April 200755

receives the query, it answers only if the query is in thepredetermined safe set. Otherwise, it will reject thequery. On the downside, the static method is conservative in selecting a safe set, which might cause it to rejectsome queries unnecessarily.Data-oriented methodserver reject some privacy-divulging queries (such asQ3 in Figure 3). This, in turn, would effectively downgrade the data perturbation level yet retain the samedegree of privacy protection. Because the data is perturbed, the server would have to reject far fewer queriesand could thus answer most queries fairly accuratelywhile continuing to protect private information.With the data-oriented method of inference control,9the data warehouse server perturbs the stored raw data INFORMATION SHARING PROTOCOLand estimates the query answers as accurately as possiBecause each data mining server constructs local datable on the basis of the perturbed data. As Figure 4 shows, mining models in its own system, these servers are likelythe data collection protocol can handle perturbation to share their local data mining models rather than theunless the application requires storing original data in the raw data in the data warehouses. Local data mining moddata warehouse server. In that case,els can be sensitive, especially whenthe data warehouse server might havethe local models are not globally valid.The query-oriented methodto perturb the data when processingTo protect the privacy of individthe query.ual data mining systems, some mechcan provide more accurateThe data-oriented method assumesanism must control the disclosure ofanswers than thethat perturbation can protect privateprivate information in local datadata-oriented method.information from being disclosed,mining models. This mechanism isenabling the data warehouse server tothe information sharing protocol,answer all queries freely on the basiswhich again follows the minimumof the perturbed data. Research has shown that the query necessary rule. The protocol’s objective is to enable dataanswers estimated from the perturbed data can still sup- mining servers across multiple systems to constructport the construction of accurate data mining models.5global data mining models while disclosing only the minimum private information about local data mining modAdvantages and disadvantagesels necessary for information sharing.The two methods have unique performance considMany information sharing protocols exist for applierations. The data-oriented method offers query respon- cations other than data mining, such as database intersiveness, since the data warehouse server will answer all operation or data integration.10 Information sharing isqueries. The query-oriented method, in contrast, nor- necessary for most distributed data mining systems, andmally rejects a substantial number of queries,9 which much work has focused on designing specific informameans that some data mining servers might be unable tion sharing protocols for data mining tasks.to complete their data mining tasks.A major design concern of the information sharingOn the plus side, the query-oriented method can pro- protocol is defending against adversaries that behavevide more accurate answers than the data-oriented arbitrarily within the capability allocated to them. Themethod. When the data warehouse server answers a defense strategy depends on the adversary model—thequery, its answer will always be precise. The data-ori- set of assumptions about an adversary’s intent andented method, in contrast, answers queries with esti- behavior. Two of the more popular adversary modelsmation, so it might not be accurate enough to support are semihonest10 and beyond semihonest.data mining, particularly when the construction of datamining models requires highly accurate query answers. Semihonest adversariesEfficiency is an important advantage for the static verAn adversary is semihonest if it properly follows thesion of the query-oriented method, which has the short- designated protocol but records all intermediate comest response time because most of its computational cost putation and communication, thereby providing a wayis offline. The dynamic version must trade off efficiency to derive private information.and query responsiveness: To answer more queries, theCryptographic encryption has proved effective indata warehouse server must spend more time analyzing defending against semihonest adversaries.2,10,11 In thisthe query history. The data-oriented method also suf- method, each data mining server encrypts its local datafers from low efficiency, since the computational over- mining model and exchanges the encrypted model withhead for query estimation can be several orders of other data mining servers.magnitude higher than for query answering.Some encryption scheme properties, such as the RivestOne way to enhance inference control protocol per- Shamir-Adleman (RSA) cryptosystem’s commutativeformance is to integrate query- and data-oriented meth- encryption property, make it possible to design algoods. Introducing the query answer-or-reject scheme to rithms for data mining servers to perform certain datathe data-oriented method would let the data warehouse mining tasks and set operations without knowing the56Computer

private keys of other entities.2,10,11 Tasks include classification, association rule mining, clustering, and collaborative filtering; set operations include set intersection, setunion, and element reduction.Because it is not possible to recover the original (local)data mining models from their encrypted values withoutknowing the private keys, this method is a secure defenseagainst semihonest adversaries. Researchers have alreadyevolved a detailed taxonomy and cryptographic encryption methods for various system settings.2,3Beyond semihonest adversarieserogeneous privacy requirements is a challenge withmuch potential return.Privacy measurementsThe accuracy versus protection tradeoff inherent inprivacy-preserving data mining means that some mechanism must accurately measure the degree of privacyprotection. Although extensive work has focused on privacy measurement, as yet no one has proposed a commonly accepted measurement technique for genericprivacy-preserving data mining systems. Proper privacyprotection measurement has three criteria: It mustAn adversary is considered beyondsemihonest if it deviates from the reflect system settings (adversariesResearch on anomalydesignated protocol, changes itsmight have different levels of interinput data, or both.est in different data values, such asdetection can contribute toBecause it is difficult if not imposbeing more concerned withmultiple disciplines, such assible to defend against an adversarypatients that have contagious dissecurity, biology, and finance.that is behaving arbitrarily, dealingeases than other diseases),with beyond semihonest adversaries account for data providers’ diverserequires more refined models. Oneprivacy concerns (some might consuch model is the intent-based adversary model,12 whichsider age as private information, while others are willformulates an adversary’s intent as combining the intenting to disclose it publicly), andto obtain accurate data mining results with compromis satisfy the minimum necessary rule.ing other entities’ private information. A game-theoreticmethod is then developed to defend against adversariesA comprehensive study of privacy measurement forthat weigh the accuracy of data mining results over com- all three protocols would be a huge step toward improvpromising other parties’ privacy.12ing the performance of privacy-preserving data miningThe basic idea is to design the information sharing pro- techniques.tocol in a way that no adversary can both obtain accurate data mining results and intrude on other servers’ Anomaly detectionprivacy. Adversaries that are more concerned with theA common application of data mining is to detectaccuracy of data mining results will be forced not to data-set anomalies, as in mining log file data to detectintrude on the privacy of others to get that accuracy.intrusions. However, few researchers have consideredprivacy protection in detecting anomalies.OPEN RESEARCH ISSUESResearch on anomaly detection is an important partSeveral issues require additional research to ensure the of data mining and can contribute to multiple discioptimum performance of the techniques described.plines, such as security, biology, and finance. Thoroughlyinvestigating issues related to the design of privacy-preProtocol integrationserving data mining techniques for anomaly detectionMany systems need a seamless integration of the three would be extremely beneficial.protocols, yet little research has addressed this need.Our proposed integrated architecture could serve as Multiple protection levelsa platform for studying protocol interaction. SuchIn some cases, multiple levels of private informationinsights can pave the way for effective and efficient must be protected. The first level might be a data pointintegration.value, and the second level, the data point sensitivity(knowledge of whether or not a data point is private).Heterogeneous privacy requirementsMost existing studies focus on protecting the first levelPrivacy-preserving data mining techniques depend and assume that all entities already know the secondon respecting the privacy protection levels that data level. Research has yet to answer how to protect theproviders require. Most existing studies assume homoge- second level (and higher levels) of private information.nous privacy requirements—that all data owners needthe same privacy level for all their data and its attributes. This assumption is unrealistic in practice and couldur work is an important first step in addressing theeven degrade system performance unnecessarily.critical systemic issues of privacy preservation inDesigning and implementing techniques that exploit hetdata mining. Much research remains to realize theOApril 200757

potential of the architecture and design principles wehave described. Much literature already addresses privacy-preserving data mining, but clearly the ideas mustcross considerable ground to become practical systems.Studies are needed for the design of privacy-preservingdata mining techniques in real-world scenarios, in whichdata owners can freely address their individual privacyconcerns without the data miner’s consent. Also criticalis work that more closely incorporates designs with specialized applications such as healthcare, market analysis, and finance. Our hope is that others will continueefforts in this important area. References1. J. Han and M. Kamber, Data Mining Concepts and Techniques, Morgan Kaufmann, 2001.2. C. Clifton et al., “Tools for Privacy Preserving DistributedData Mining,” SIGKDD Explorations, vol. 4, no. 2, 2003,pp. 28-34.3. V.S. Verykios et al., “State-of-the-Art in Privacy PreservingData Mining,” SIGMOD Record, vol. 33, no. 1, 2004, pp.50-57.4. L. Wang, S. Jajodia, and D. Wijesekera, “Securing OLAP DataCubes against Privacy Breaches,” Proc. 25th IEEE Symp.Security and Privacy, IEEE Press, 2004, pp. 161-175.5. R. Agrawal and R. Srikant, “Privacy-Preserving Data Mining,” Proc. 19th ACM SIGMOD Int’l Conf. Management ofData, ACM Press, 2000, pp. 439-450.6. R.J. Bayardo and R. Agrawal, “Data Privacy through Optimalk-Anonymization,” Proc. 21st Int’l Conf. Data Eng., IEEEPress, 2005, pp. 217-228.7. N. Zhang, S. Wang, and W. Zhao, “A New Scheme on Privacy-Preserving Data Classification,” Proc. 11th ACMSIGKDD Int’l Conf. Knowledge Discovery and Data Mining, ACM Press, 2005, pp. 374-383.8. Z. Huang, W. Du, and B. Chen, “Deriving Private Informationfrom Randomized Data,” Proc. 24th ACM SIGMOD Int’lConf. Management of Data, ACM Press, 2005, pp. 37-48.9. R. Agrawal, R. Srikant, and D. Thomas, “Privacy-PreservingOLAP,” Proc. 25th ACM SIGMOD Int’l Conf. Managementof Data, ACM Press, 2005, pp. 251-262.10. R. Agrawal, A. Evfimievski, and R. Srikant, “InformationSharing across Private Databases,” Proc. 22nd ACM SIGMOD Int’l Conf. Management of Data, ACM Press, 2003,pp. 86-97.11. Y. Lindell and B. Pinkas, “Privacy Preserving Data Mining,”Proc. 12th Ann. Int’l Conf. Advances in Cryptology, SpringerVerlag, 2000, pp. 36-54.12. N. Zhang and W. Zhao, “Distributed Privacy Preserving Information Sharing,” Proc. 31st Int’l Conf. Very Large DataBases, ACM Press, 2005, pp. 889-900.Nan Zhang is an assistant professor of computer scienceand engineering at the University of Texas at Arlington. Hisresearch interests include databases and data mining, information security and privacy, and distributed systems. Zhangreceived a PhD in computer science from Texas A&M University. He is a member of the IEEE. Contact him atnzhang@cse.uta.edu.Wei Zhao is a professor of computer science and the deanfor the School of Science at Rensselaer Polytechnic Institute. His research interests include distributed computing,real-time systems, computer networks, and cyberspace security. Zhao received a PhD in computer and information sciences from the University of Massachusetts, Amherst. He isa Fellow of the IEEE and a member of the IEEE ComputerSociety and the ACM. Contact him at zhaow3@rpi.edu.Engineering and Applying the InternetIEEE Internet Computing reports emerging tools,technologies, and applications implemented through the Internetto support a worldwide computing environment.In 2007, we’ll look at: Autonomic Computing Roaming Distance Learning Dynamic Information Dissemination Knowledge Management Media Searchwww.computer.org/internet/58Computer

preserving data mining systems. FOUNDATIONAL DESIGN As Figure 1 shows, privacy-preserving data mining usually has multiple steps that translate to a three-tiered architecture: At the bottom tier are the data providers, the data owners, which are often physically distributed. The data providers submit their private data to the data warehouse server.