Transcription

A Survey of Peer-to-Peer Storage Techniques for Distributed File SystemsRagib Hasan‡† Zahid Anwar‡† William Yurcik‡ Larry Brumbaugh‡ Roy Campbell†‡National Center for Supercomputing Applications†Department of Computer ScienceUniversity of Illinois at Urbana Champaign{rhasan,anwar, byurcik, ljbrumb}@ncsa.uiuc.edu, roy@cs.uiuc.eduAbstractThe popularity of distributed file systems continuesto grow. Reasons they are preferred over traditionalcentralized file systems include fault tolerance,availability, scalability and performance. In addition,Peer-to-Peer (P2P) system concepts and scalablefunctions are being incorporated into the domain offile systems. This survey paper explores the designparadigms and important issues that relate to suchsystems and discusses the various research activities inthe field of Distributed Peer- to-Peer file systems.1. IntroductionIn the recent years, Peer-to-Peer system researchhas grown significantly. Using a large scale distributednetwork of machines has become an important elementof distributed computing due to the phenomenalpopularity of Peer-to-Peer (P2P) services like Napster[19], Gnutella [10], Kazaa [14] and Morpheus [17].Though these systems are more famous for filesharing, and related legal problems, P2P systems arebecoming a very promising and exciting area ofresearch. P2P systems offer a decentralized, selfsustained, scalable, fault tolerant and symmetricnetwork of machines providing an effective balancingof storage and bandwidth resources.File Systems have been a basic element of Systemsresearch. Efforts have focused on providing a stable,reliable, efficient central storage system with certainperformance constraints. Experience has shown that adistributed approach is better for achieving these goals.Early efforts included SUN NFS, CODA, Plan 9, XFSand SFS. Initial efforts emphasized sharing data in asecure and reliable way. Important features of thesesystems included a client-server architecture that wasfundamental to their design caching, replication andavailability.Internet growth resulted in a new approach, thebuilding of distributed file system. As the host nodesstoring the shared objects became more geographicallydistributed and diverse, new criteria and performanceconstraints like availability, fault tolerance, security,robustness and location mechanisms became importantissues in designing distributed file systems.In recent years, P2P systems have emerged as aviable architecture for implementing distributed filesystems. In a P2P network, end users share resourcesvia direct exchange between computers. Information isdistributed among the member nodes instead ofconcentrated at a single server. A pure peer-to-peersystem is a distributed system without centralizedcontrol, where the software running at each node isequivalent in functionality. A P2P system should behighly scalable, available, distributed and more or lesssymmetric. The attractive properties of a Peer-to-Peerarchitecture have generated many research efforts inbuilding distributed P2P file systems. Because of thesuccess in this area, P2P systems are almost certain tobecome a major part of current and future researchactivities in file systems. This survey paper attempts toexplore the inherent properties of such systems andanalyze the characteristics of some major distributedP2P file systems. Also, the comparative advantage anddisadvantages of each system are discussed in detail.The rest of the paper is organized as follows:Section 2 discusses the benefits of using P2P systemsover other distributed storage mechanisms. Section 3explores design issues and desired properties ofdistributed P2P file systems. Section 4 identifies majorresearch distributed P2P file systems, analyzing theircomparative suitability depending upon selectedcriteria. Section 5 presents an analysis of the openproblems. A summary and conclusions follow inSection 6.

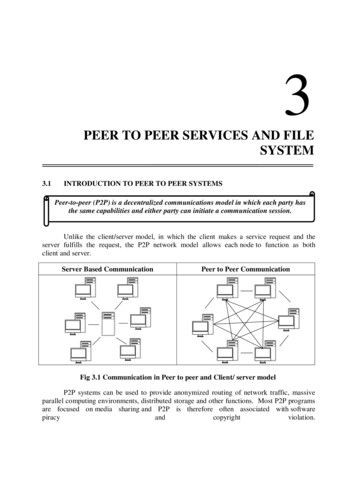

2. Justification of Using P2P Architecturefor File System DesignThe term Peer-to-Peer refers to a class of systemsand applications that employ distributed resources toperform a function in a decentralized manner. Eachnode potentially has the same responsibility. Shareditems can include CPU cycles (SETI@Home) orStorage space (Napster [19], Gnutella [10], OceanStore[15]).Basic P2P system goals are decentralization, ad hocconnectivity, reduced cost of ownership andanonymity. P2P has more decentralized control anddata compared to alternatives. P2P is potentially morescalable than centralized and client-server solutions.The basic defining feature of a P2P system is that it ischaracterized by direct access between peer computers,not through a centralized server.Androutsellis-Theotokis et al. [2] defined P2P as“applications that take advantage of resources (storage,cycles, content, human presence) available at the edgesof the Internet.” According to [2], “the “litmus test'”for P2P is: Does it treat variable connectivity and temporalnetwork addresses as the norm? Does it give the nodes at the edges of the networksignificant autonomy?"In lieu of the above definition, a noticeablecharacteristic of P2P systems is that they haveinteresting self-organizing capacity, in that thetopology of a P2P network can often change as nodesenter or leave the system. Important issues in a P2Psystem are considerably different than in traditionaldistributed systems, However, P2P systems providecertain advantages over conventional file systems thatjustify their usage in building distributed file system.For example, compared to the client-server model, P2Psystems provide inherent scalability and availability ofresources. They take advantage of the redundancy ofthe resources and construct a coherent view of thesystem using decentralized, independent components.The diverse nature of P2P systems and the large-scaledistributed structure ensures the fault tolerance andresolute nature of P2P systems as compared with clientserver systems. The sheer number of nodesparticipating in P2P architecture contributes toadvantage such as being adaptable, scalable and selforganizing. These essential features contrast distinctlywith traditional client-server approaches that arelimited by their lack of scalability and robustness incases of component failures.To make ubiquitous computing become a reality,the computing devices must become reliable, resilientand have distributed access to data. With this view inmind, the P2P system architecture appears to be mostsuitable to ensure the changing storage requirements ofnext-generation computing. The P2P architecture canhelp reduce storage system costs and allow costsharing by using existing infrastructures and bundlingresources from different sites. Resource aggregationadds value beyond the mere accumulation of resourcesand provides a rich, robust platform on which to buildpersistent storage systems. Considering all thesefactors, the P2P model should be very useful indesigning the future generation distributed filesystems.3. Design Issues in P2P File SystemsPeer-to-Peer systems have basic properties thatseparate them from conventional distributed systems.Inherently, P2P systems are loosely coupled, and noperformance guarantee can be provided; but the systemas a whole contains common characteristics that affectits behavior in varying circumstances. This sectiondiscusses different design issues of a P2P file systemand the potential effect of the issues on performance.3.1 SymmetryP2P systems are characterized by symmetry amongthe roles of the participating nodes. It assumes nospecial capability of certain nodes that would markthem separate from the rest of the nodes. Conventionalclient-server systems are asymmetric and the serversare often more powerful than the clients. However, inP2P systems, all peers are symmetric. They have theability to function both as a client and a server.3.2 DecentralizationP2P systems are decentralized by nature. Hence,P2P systems have mechanisms supporting distributedstorage, processing, information sharing, etc. Thisallows increased extensibility, resilience to faults andhigher system availability [16]. However, getting aglobal view of the system state is difficult. Also,system behavior no longer remains deterministic.Another problem is the issue of joining a group anddiscovering the peers belonging to that group.

3.3 Operation with Unmanaged VolunteerParticipantsAn important P2P design issue is that theparticipation of a given element can neither beexpected nor enforced. System elements and storagenodes are not managed by any centralized authority.They are assumed to be prone to failure and removedfrom the system at any time. The system should berobust enough to survive the removal or failure ofnodes at any moment.3.4 Fast Resource LocationOne of the most important P2P design issues is themethod used for resource location. As resources aredistributed in diverse peers, an efficient mechanism forobject location becomes the deciding factor in theperformance of such systems. The mechanism shouldbe capable of adapting to a changing network topology.Contrary to the P2P concept, Napster uses acentralized directory of object locations that proves tobe a bottleneck. Gnutella [10] incorporates objectlocation using non-scalable flooding. Elaborateschemes have been developed to solve this problemefficiently. Notable currently used object location androuting systems include Chord [26], Pastry [24],Tapestry [27] and CAN [23]. Pastry and Tapestry usesPlaxton [22] trees, basing their routing on addressprefixes. This approach is a generalization ofhypercube routing. However, Pastry and Tapestry addrobustness, dynamism and self-organizing properties tothe Plaxton scheme. Chord [26] uses the numericaldifference with the destination address to routemessages. This is unlike Pastry [24] or Tapestry [27]that use successively longer address prefixes with thedestination. The Content Addressable Network orCAN [23] uses a d-dimensional space to routemessages; with each node maintaining a O(d) sizedrouting table and any node within O(dN1/d) hops andthe routing table does not grow with network size.An important location strategy used in severalsystems is Distributed Hash Table (DHT). It useshashing of file or resource names to locate the object.Kelips [12] is a DHT based system, which has theadvantage of being efficient and scalable as well asusing O(n1/2) space per node and O(1) lookup times.3.5 Load BalancingLoad balancing is an important issue in buildingrobust P2P file systems. The system should be able tomake optimal distribution of resources based oncapability and availability of node resources. Thesystem should have mechanisms for preventing thebuild up of hotspots, locations where the load isdisproportionately high. Also, it should be possible torebalance the system based on usage patterns.3.6 Churn ProtectionChurn describes the fast oscillations in the P2Psystem caused by the rapid joining and leaving ofnodes. It occurs when there is a node failure andcorresponding joining of new nodes at the same time.Churn causes reduced performance in any distributedsystem. One form of a denial of service attack is tointroduce churn in a system. Hence, a P2P distributedfile system should be able to resist the churn effect.3.7 AnonymityIn a distributed storage system, anonymity is animportant issue to ensure resistance to censorship.There is need for resistance to attempts by third partiesto deny access to information and provide anonymityfor both the producers and consumers of information.3.8 ScalabilityScalability implies the ability of the system tosupport millions of peers into a peer-to-peer system.Traditional distributed systems usually are not scalablebeyond a few hundreds or thousands of nodes.3.9 Persistence of InformationA P2P system should be able to provide persistentaccess to data. Methods should be present to ensurethat even in the case of untrusted peers, the data storedin the system is safe, protected against destruction, andhighly available in a transparent manner.3.10 SecuritySecurity from attacks and system failure are designgoals for every system. P2P systems are built onunmanaged, geographically distributed hosts and datasecurity is the systems responsibility. Encryption,different coding schemes, etc can help achieve this.4. Some Existing SystemsDesigning a P2P file system that can implement allthe properties described in Section 3 is exceedinglydifficult. Recently, a number of efforts have been madeto achieve most of the goals. However, most of thesesystems utilize specific properties or mechanisms and

specialize in particular fields. This section discussessome existing P2P based distributed file systems.4.1 FreeNetFreenet [3,7] is an adaptive peer-to-peer file systemthat enables the publication, replication and retrieval ofdata while protecting the anonymity of the authors,data location and the readers. It uses probabilisticrouting to preserve the anonymity of its users, datapublishers, and data hosts. Basically, Freenet operatesas a location-independent distributed file system acrossmany individual computers that allow files to beinserted, stored and requested anonymously. Thedesign goals of Freenet are: anonymity; deniability forthe storers of information; resistance to 3rd partyaccess; dynamic storage and routing; and decentralizedpolicy.4.1.2 Location and Access Mechanisms Freenetidentifies files by keys obtained through a hashfunction, Current implementations of Freenet use 160bit SHA1 cryptographic function as the hashingmethod. The key may be keyword signed key (KSK),Signed Subspace key (SSK) or Content Hash Key(CHK). Using any of the hash mappings, the source ofthe search sends queries. The query may be locallyprocessed, or on failure may be routed to thelexicographically closest matching node according tothe routing table. Communications by Freenet nodesare encrypted and are routed through other nodes tomake it extremely difficult to determine who isrequesting the information and what its content is.On receipt of an insert request, a node first checksits own storage to see whether the key is already taken.In case of collisions, the user tries again using adifferent key. If the key is not found, the node looks upthe nearest key in its routing table and forwards theinsert request to the corresponding node thatpropagates through the nodes until the hops-to-livelimit is reached. If there is no key collision, a successmessage is propagated back to the original sender. Thedata follows along the path established and is stored innodes along the way. Data is stored in an LRU fashionand older unused information gradually fades away.Table 1. Freenet TradeoffsAdvantagesFreenet attempts to provideanonymity both for producersand consumers of information.Performance analysis shows: asthe network converges, medianDisadvantagesAnonymity requirements limitreliability and performance, sincetheprobabilisticroutingmechanism stops forming of anycoherent topology among servers.Anunpopularfilemightdisappear from the network if allrequest path length drops.Network is scalable up to amillion nodes with a medianpath length of just 30.Replicate popular data itemstransparently near requestingnode. With time, the networkrouting learns and remembersrequests for better performance.The network is robust againstquite large failures.The popularity of each site'smaterial causes the system toactually alter its topologyHashingrendersFreenetunusable for random searchesRewards popular material andallows unpopular material todisappear quietly.nodes decide to drop its copies.Dictionary attacks to modify ofrequested files en route ispossible for files stored underkeyword-signed keys.Denial-of-Service attack throughinsertion of a large number ofjunk files.The flat namespace producesglobally unique identifiers andversioning might become aproblem as the system growsSuffers from problems ofestablishinginitialnetworkconnection.No search mechanism. Astandard search allows attacks totake out specific content holdersScalability, resilience testing in areal world scenario is lacking.4.2 CFSCooperative File System (CFS) [5] is a peer-to-peerread only storage system developed at MIT with thefollowing design goals: provable guarantee forefficiency, robustness, load balancing and scalability.4.2.1 Mechanism. CFS uses Distributed Hash table(Dhash) for storage of blocks. The file system isdesigned as a set of blocks distributed over the CFSservers. A file is divided into constituent blocks thatare stored among different nodes. CFS has 3 layers: FSwhich interprets blocks as files and presents a filesystem interface to applications, DHash, distributedhash table that stores unstructured data blocks reliablyand Chord [26] which maintains routing tables forlookup and query managementCFS is a read only system from the perspective ofthe users. However, the publishers can update theirwork. Key based authentication is used.Table 2. CFS TradeoffsAdvantagesQuota on publishers provides asecurity advantageDividing a large file intochunks removes the problemthat one node may not have thecapacity to store the whole fileCachingandreplicationsdecreases response timeUsage of Chord allowslogarithmic lookup timesDistributed storage of a fileallows parallel block retrievalDisadvantagesMaintaining a single file in manyblocks introduce overhead offetching the blocksTo enhance performance, CFSsacrifices anonymity. So, it doesnot provide the same censorshipresistance as Freenet

4.3 PASTPAST [25] is a large scale P2P persistent storagemanagement system. It is comprised of self-organizing,Internet Based overlay network of storage nodes whichroute file queries in a cooperative manner, performreplica storage and caching.4.3.1 Mechanism. PAST is built on top of the Pastry[24] lookup system. The nodes form an overlaynetwork. A 128-bit node identifier that is assignedquasi-randomly uniquely identifies each node. Thisuniformly chosen random identifier ensures loadbalancing. Files also have a file id that is a SHA-1 hashof the file name and the public key of the client. ThePastry layer handles the lookup requests. Replicationsenable fast lookup and transmission. To retrieve a file,a client uses its fileID, and in some cases, thedecryption key. For the client, PAST provides threemain sets of operations. Insert: store a file replicated k times, k being auser specified number, Lookup: reliably retrieve a copy of the fileidentified by fileId if it exists in the PAST Reclaim: reclaim the storage occupied by kcopies of the file.4.3.2 Security using Smart-Cards. The system usessmart-card key based techniques for security, loadbalancing and free storage re-allocation by replicadiversion.Table 3. PAST TradeoffsAdvantagesThere is no restriction thatPastry must to be used. Due tomodular design, Chord, CANor others can also be used.Files in PAST are immutable,so multiple files cannot havethe same fileId.Smart cards are not used inother systems.DisadvantagesPAST stores a single large filewithout breaking it into smallerchunks (as in CFS). This is notefficient or fault tolerant.PAST is an archive and storagesystem, rather than a generalpurpose file system utility.4.4 IVYIVY [18] is a read/write peer-to-peer file systemsthat is distributed and decentralized and able to supportmultiple users concurrently. The system is based on aset of logs and the DHash distributed hash. It providesan NFS-like file system view to the users, while at thesame time; it can detect conflicting modifications andrecover from network failure.4.4.1 Mechanism. The IVY file system is based on aset of logs that each participant keeps to record thechanges made to the system. Each user scans andsynchronizes the logs. Snapshot mechanisms preventscanning of all but the most recent log. The logs arethemselves stored in DHash. IVY overcomes theoverhead of multiple accesses and locking. It also usesversion vectors for synchronization. Integrity of eachblock is ensured by either content hash key or publickey. Since logs are stored indefinitely, recovery isalways possible in case of network partitions. The totalsystem state is a composite of all the individual logs.Periodically, each participant takes snapshots to avoidfuture scanning of the entire log.Table 4. IVY TradeoffsAdvantagesIt enables writing with reading.Other systems discussed so farseem to be read only systems.No need for explicit trustbetween the hostsDisadvantagesSlow. Ivy is 2 to 3 times slowerthan NFS tresolution tools have to be used.4.5 OceanStoreOceanStore [15] is a proposed system to providedistributed access to persistent nomadic data in auniform global scenario. It is designed using acooperative utility model in which consumers pay theservice providers certain fees to ensure access topersistent storage. The service providers in turn useutility model to form agreement and resource sharing.Data stored in OceanStore4.5.1 Mechanism. Using mainly untrusted servers,OceanStore caches data anywhere in the network, withencryption. This provides high availability andprevention of denial-of-service type of attacks.Persistent objects are uniquely identified by a GlobalID (GUID) and are located by either a nondeterministic but fast algorithm (Attenuated BloomFilters) or a slower deterministic algorithm (ModifiedPlaxton Trees [22]). OceanStore uses ACL forrestricting write access to data, while read access isavailable with the key. Updates are achieved using theByzantine agreement protocol between the primaryreplica and the secondaries. For high performance,OceanStore also provides self-monitoring introspectionmechanisms for data migration based on accesspatterns. This is also used to detect clusters andimprove routing performance.Table 5. OceanStore TradeoffsAdvantagesIt is suitable for ubiquitousTheDisadvantagessystem is still inthe

systems.Disassociating theinformation from any particularfixed location, and making itavailable everywhere is verybeneficial.implementation phase. The mainweakness is the practicability. Areal world deployment andtesting will bring out many realworld problems.The Introspection mechanism[15] is a learning process, andthe system starts to makessmart decisions based on theaccess patterns."Caching data anywhere andeverywhere", this approach is notsecure enough because readaccess is controlled by only theowner's public encryption key4.6 FarsiteFarsite [1] is a symbiotic, serverless, distributed filesystem. It works among cooperating but notcompletely trusting the clients. The goal is to providehigh availability and reliability for file storage, securityand resistance to Byzantine threats. Reliability andavailability is ensured through replication of the wholefile. Farsite has a collection of interacting andByzantine-fault-tolerant replica groups arranged in atree overlaying the file system namespace. It hascryptographic checksums of indirection pointers thatare replicated in a Byzantine-fault-tolerant manner.Data stored in the servers is encrypted and replicated ina non-byzantine way. Alternatively, the design allowsuse of erasure coding for replication [1].4.6.1 Mechanism. Farsite first encrypts the contents ofthe files to prevent unauthorized reads. Digitalsignatures are used to prevent an unauthorized user towrite a file. After encryption, replicas of the file aremade and they are distributed to other client machines.Replication provides reliability thru long term datapersistence and immediate availability of requested filedata. Directory group members share replicatedmetadata. File data is replicated on multiple file hosts.Table 6. Farsite TradeoffsAdvantagesIt replaces physical security ofa server in a locked room withvirtual security: cryptography,randomized replication, andByzantine fault-toleranceFarsite is designed to supporttypical desktop file I/Oworkloads in academic andcorporate environments, nothigh-performanceI/Oofscientific applications or writesharing of database apps.Minimal administrative effortto initially configure andalmostnocentraladministration to maintain.Designed to be highly scalable,DisadvantagesFarsite uses a lazy updatescheme. The content of newlywritten files will briefly reside ononly one machine. Loss of thatmachine will result in loss of theupdate. The directory servicemust keep track of which replicascontain up-to-date data, so thatusers will not accidentally accessout-of-date versions of files.with capacity for up to 105machines.bandwidth applications, and mustdeal with servers less reliablethan one large-scale file system.4.7 KelipsKelips [12] is a P2P file system that achieves fasterfile look-up time and more stability to failures andchurn, at the cost of increased memory usage andconstant background communication overhead.Specifically, it achieves O(1) lookup time, at the costof memory usage of square root N. This can becompared to other DHT P2P Systems CFS, PAST thatensure log(n) for lookup and memory usage.4.7.1 Mechanism The system hashes a node to kaffinity groups. Each node maintains group views(entries for all nodes within the group), and a constantnumber of contacts for all other groups and file tuplesfor all files stored in the group. This requires squareroot n storage, when k is optimized. Information ation that has logarithmic latency.Inaddition, bandwidth consumed per node is constant.Lookups consist of hashing the file to groupnumber, sending a lookup request to a member of thegroup, and then retrieving the file tuple info for thelookup. Insertions are done by hashing the file to thegroup number, sending an insert request to a memberof the group, and the contact picking up a random nodein the group as the store.Because latency of gossip protocol and bandwidthlimit results in incomplete soft state maintenance, theabove lookup and insertion can fail; in that case multihop rerouting is used.Table 7. Kelips TradeoffsAdvantagesHighChurnResistanceRecovery from failure of halfthe nodes within secondsLookups succeed even underfailuresP2P system with a lookup costequal to Full index duplicationO(1) and a memory utilizationof O(sqrt(N)) O(N)DisadvantagesUses a constant communicationoverhead and data quality maylag if updates occur too rapidly.Memory requirement high.Gossip architectures may onlyallow weak consistency.Locality and Security issues notconsidered4.8 FastTrackUses O(dn (1/d) ) size routingtables to route in O(d) hops, butdoesnotaddressrapidmembership changes.It is not designed for high-FastTrack based systems are more popularly knownas “file sharing” systems because the objects areimmutable, and as a result the vast majority of objects

are fetched at most once per client. The FastTracknetwork is supposedly one of the most popular P2PFile systems today with over 5 million simultaneoususers sharing up to 900 million files [20]. It’s a hybridbetween Gnutella and Napster and takes advantage of“healthier” participants in the system. The underlyingtechnology in Kazaa, KazaaLite, and Grokster(FastTrack clients) is a proprietary protocol, but somedetails are available.objects is much flatter than Zipfwould predictSupernodes liken to Napter’scentralized server techniqueand Gnutella’s flooding.4.9 BitTorrentOne of the more recently developed and immenselystudied P2P file systems is BitTorrent [4]. Studies onInternet backbones indicate that it is one of the mostpopular networks [13]. Its traffic made up 53 per centof all P2P traffic in June 2004 [21]. BitTorrent is afile-download protocol and relies on other (global)components, such as websites, for finding files. Themost popular website for this purpose is suprnova.org.4.8.1 Mechanism Fast Track is built upon activeclients known as ‘supernodes’ that store a directorylisting ( filename,peer pointer ), similar to Napsterservers. Supernode membership changes over time andany peer can become (and stay) a supernode, providedit has earned enough reputation. Peers search bycontacting a nearby supernode.4.9.1 Mechanism In BitTorrent [BITORRENT], filesare split up into fixed-size chunks (on the order of athousand per file), and the downloaders of a file barterfor chunks of it by uploading and downloading them ina tit-for-tat-like manner to prevent parasitic behavior.Each peer is responsible for maximizing its owndownload rate by contacting suitable peers, and peerswith high upload rates will with high probability alsobe able to download with high speeds. When a peer hasfinished downloading a file, it may become a seed bystaying online for a while and sharing the file for free,i.e., without bartering.Kazaa clients supply Kazaa-specific “usernames” asan HTTP header in each transaction- period of time.Kazaa control traffic, primarily consists of queries andtheir responses, and is encrypted. Kazaa file-transfertraffic consists of unencrypted HTTP transfers; alltransfers include Kazaa-specifc HTTP headers (e.g.,“X-Kazaa-IP”). These headers provide information toidentify precisely which object is being transferred in agiven transaction. When a client attempts to downloadan object, that object may be downloaded in pieces(often called “chunks”) from several sources over along period of time. A “transaction” is a single HTTPtransfer between a client and a server, and a “request”is a set of transactions a client participates in todownload an entire object. A failed transaction occurswhen a client successfully contacts a remote peer, butthat remote peer returns an HTTP 500 error code.Table 9. Bittorrent TradeoffsAdvantagesGlobal components ensure ahigh level of integrity of boththe content and the meta-data(less fake-file problems) at theprice of system availability(opposite to a.org ) sometime fail.Mirrors rarely survive longer thana few days due to the highdemands of over 1,200,000 dailyvisitors (Oct 2004). In general,trackers are a frequent target fordenial-of-service

file systems. This survey paper explores the design paradigms and important issues that relate to such systems and discusses the various research activities in the field of Distributed Peer- to-Peer file systems. 1. Introduction In the recent years, Peer-to-Peer system research has grown significantly. Using a large scale distributed