Transcription

Outlier-Robust Optimal Transport:Duality, Structure, and Statistical AnalysisSloan NietertCornell UniversityRachel CummingsColumbia UniversityAbstractThe Wasserstein distance, rooted in optimaltransport (OT) theory, is a popular discrepancy measure between probability distributions with various applications to statisticsand machine learning. Despite their rich structure and demonstrated utility, Wassersteindistances are sensitive to outliers in the considered distributions, which hinders applicabilityin practice. We propose a new outlier-robustWasserstein distance Wpε which allows for εoutlier mass to be removed from each contaminated distribution. Under standard momentassumptions, Wpε is shown to be minimax optimal for robust estimation under the Huberε-contamination model. Our formulation ofthis robust distance amounts to a highly regular optimization problem that lends itselfbetter for analysis compared to previouslyconsidered frameworks. Leveraging this, weconduct a thorough theoretical study of Wpε ,encompassing robustness guarantees, characterization of optimal perturbations, regularity,duality, and statistical estimation. In particular, by decoupling the optimization variables,we arrive at a simple dual form for Wpε thatcan be implemented via an elementary modification to standard, duality-based OT solvers.We illustrate the virtues of our frameworkvia applications to generative modeling withcontaminated datasets.1INTRODUCTIONDiscrepancy measures between probability distributions are a fundamental constituent of statistical inferProceedings of the 25th International Conference on Artificial Intelligence and Statistics (AISTATS) 2022, Valencia,Spain. PMLR: Volume 151. Copyright 2022 by the author(s).Ziv GoldfeldCornell Universityence, machine learning, and information theory. Amongmany such measures, Wasserstein distances (Villani,2003) have recently emerged as a tool of choice formany applications. Specifically, for p [1, ) and apair of probability measures µ, ν on a metric space(X , d), the p-Wasserstein distance between them is1 Wp (µ, ν) : Zinfπ Π(µ,ν)d(x, y)p dπ(x, y) 1/p,X Xwhere Π(µ, ν) is the set of couplings for µ and ν. Thepopularity of these metrics stems from a myriad ofdesirable properties, including rich geometric structure,metrization of the weak topology, robustness to support mismatch, and a convenient dual form. Modernapplications thereof include generative modeling (Arjovsky et al., 2017; Gulrajani et al., 2017; Tolstikhinet al., 2018), domain adaptation (Courty et al., 2014,2016), and robust optimization (Esfahani and Kuhn,2018; Blanchet et al., 2018; Gao and Kleywegt, 2016).Despite their advantages, Wasserstein distances sufferfrom sensitivity to outliers due to the strict marginalconstraints, as even a small outlier mass can contributegreatly to the distance. This has inspired a recentline of work into outlier-robust OT (Balaji et al., 2020;Mukherjee et al., 2021; Le et al., 2021), which relaxesthe marginal constraints in various ways. These buildupon the theory of unbalanced OT (Piccoli and Rossi,2014; Chizat et al., 2018a,b; Liero et al., 2018; Schmitzerand Wirth, 2019) that quantifies the cost-optimal wayto transform one measure into another via a combination of mass variation and transportation. We proposea new framework for outlier-robust OT that arisesas the solution to a principled robust approximationproblem. We conduct an in-depth theoretical study ofthe proposed robust distance, encompassing formal robustness guarantees, duality, characterization of primalminimizers / dual maximizers, regularity, and empiricalconvergence rates.1For p , we set W (µ, ν) : inf π Π(µ,ν) kdkL (π) .

Outlier-Robust Optimal Transport: Duality, Structure, and Statistical Analysis1.1ContributionsWe introduce and study the ε-outlier-robust Wasserstein distance defined by ν0µ0ε,,Wp (µ, ν) : infWpµ0 (X ) ν 0 (X )µ0 ,ν 0 M (X )µ0 µ, kµ µ0 kTV εν 0 ν, kν ν 0 kTV ε(1)where µ0 and ν 0 are positive measures, k·kTV is the totalvariation (TV) norm, and denotes setwise inequalitywhen appropriate. The minimization over µ0 , ν 0 allowsoutliers occupying less than fraction ε of probabilitymass to be omitted from consideration, after whichthe perturbed measures are renormalized. Comparedto prior work employing TV constraints (Balaji et al.,2020; Mukherjee et al., 2021), our definition has severaldistinct features: (1) it is naturally derived as a robustproxy for Wp under the Huber ε-contamination model;(2) it can be reframed as an optimization problem over ahighly regular domain; and, consequently, (3) it admitsa simple and useful duality theory.We show that when the clean distributions havebounded qth moments for q p, Wpε achieves theminimax optimal robust estimation error of ε1/p 1/qunder the Huber ε-contamination model. Moreover,our dual formulation mirrors the classic Kantorovichdual with an added penalty proportional to the rangeof the potential function. This provides an elementaryrobustification technique which can be applied to anyduality-based OT solver: one needs only to compute theargmin and argmax of the discriminative potentials overthe batch samples, which can be done in conjunctionwith existing gradient evaluations. We demonstratethis the utility of this procedure with experiments forgenerative modeling with contaminated datasets usingWasserstein generative adversarial networks (WGANs)(Arjovsky et al., 2017).We also study structural properties of Wpε , characterizing the minimizers of (1) and maximizers of its dual, describing the regularity of the problem in ε, and drawinga connection between Wpε and loss trimming (Shen andSanghavi, 2019). Finally, we study statistical aspectsof Wpε , examining both one- and two-sample empiricalconvergence rates and providing additional robustnessguarantees. The derived empirical convergence ratesare at least as fast as the regular n 1/d rate for standard Wp ; however, faster rates may be possible if onlya small amount of high-dimensional mass is present.1.2and Mukherjee et al. (2021). In Balaji et al. (2020),similar constraints are imposed with respect to (w.r.t.)general f -divergences, but the perturbed measures arerestricted to probability distributions. This results in amore complex dual form (derived by invoking standardKantorovich duality on the Wasserstein distance between perturbations) and requires optimization over asignificantly larger domain. In Mukherjee et al. (2021),robustness w.r.t. the TV distance is added via a regularization term in the objective. This leads to a simplemodified primal problem but the corresponding dual requires optimizing over two potentials, even when p 1.Additionally, Le et al. (2021) and Nath (2020) considerrobustness via Kullback-Leibler (KL) divergence andintegral probability metric (IPM) regularization terms,respectively. The former focuses on Sinkhorn-basedprimal algorithms and the latter introduces a dual formthat is distinct from ours and less compatible with existing duality-based OT computational methods. InStaerman et al. (2021), a median of means approachis used to tackle the dual Kantorovich problem froma robust statistics perspective. None of these worksprovide minimax error bounds for robust estimation.The robust OT literature is intimately related to unbalanced OT theory, which addresses transport problemsbetween measures of different mass (Piccoli and Rossi,2014; Chizat et al., 2018a; Liero et al., 2018; Schmitzerand Wirth, 2019; Hanin, 1992). These formulations arereminiscent of the problem (1) but with regularizersadded to the objective (KL being the most studied)rather than incorporated as constraints. Sinkhornbased primal algorithms (Chizat et al., 2018b) are thestandard approach to computation, and these haverecently been extended to large-scale machine learning problems via minibatch methods (Fatras et al.,2021). Fukunaga and Kasai (2021) introduces primalbased algorithms for semi-relaxed OT, where marginalconstraints for a single measure are replaced with aregularizer in the objective. Partial OT (Caffarelli andMcCann, 2010; Figalli, 2010), where only a fraction ofmass needs to be moved, is another related framework.However, Caffarelli and McCann (2010) consider a different parameterization of the problem, arriving at adistinct dual, and Figalli (2010) is mostly restrictedto quadratic costs with no discussion of duality. Recently, Chapel et al. (2020) has explored partial OT forpositive-unlabeled learning, but dual-based algorithmsare not considered.Related WorkThe robust Wasserstein distance2 in (1) is closely related to the notions considered in Balaji et al. (2020)2Despite calling Wpε a distance, we remark that themetric structure of Wp is lost after robustification.Notation and Preliminaries. Let (X , d) be a complete, separable metric space, and denote the diameterof a set A X by diam(A) : supx,y A d(x, y). TakeCb (X ) as the set of continuous, bounded real functions on X , and let M(X ) denote the set of signed

Sloan Nietert, Rachel Cummings, Ziv GoldfeldRadon measures on X equipped with the TV norm3kµkTV : µ (X ). Let M (X ) denote the space offinite, positive Radon measures on X . The Lebesguemeasure on Rd is designated by λ. For µ, ν M (X )and p [1, ], we consider the standard Lp (µ) space 1/pRwith norm kf kLp (µ) , and we write f p dµµ ν when µ(B) ν(B) for every Borel set B X .Let P(X ) M (X ) denote the space of probabilityon X , and take Pp (X ) : {µ P(X ) :R measuresd(x, x0 )p dµ(x) } to be those with bounded pthmoment. We write P (X ) for probability measureswith bounded support. Given µ, ν P(X ), let Π(µ, ν)denote the set of their couplings, i.e., π P(X X )such that π(B X ) µ(B) and π(X B) ν(B), forevery Borel set B. When X Rd , we write the covariance matrix for µ P2 (X ) as Σµ : E[(X E[X])(X E[X]) ] where X µ. For f : X R, we define therange Range(f ) : supx X f (x) inf x X f (x). Wewrite a b max{a, b} and use ., &, to denoteinequalities/equality up to absolute constants.Recall that Wp (µ, ν) , for any µ, ν Pp (X ). Forany p [1, ), Kantorovich duality states thatZZWp (µ, ν)p supf dµ f c dν,(2)f Cb (X )where the c-transform f c : X R is defined by f c (y) inf x X d(x, y)p f (x) (with respect to the cost c(x, y) d(x, y)p ).2ROBUST ESTIMATION OF WpOutlier-robust OT is designed to address the fact thatWp (1 ε)µ εδx , ν explodes as d(x, x0 ) , nomatter how small ε might be. More generally, oneconsiders an adversary that seeks to dramatically alterWp by adding small pieces of mass to its arguments.To formalize this, we fix p [1, ) and consider theHuber ε-contamination model popularized in robuststatistics (Huber, 1964), where a base measure µ P(X ) is perturbed to obtain a contaminated measureµ̃ belonging to the ballBε (µ) : (1 ε)µ ε P(X ) (1 ε)µ εα : α P(X ) .(3)The goal is to obtain a robust proxy Ŵ : P(X )2 Rwhich, for any clean distributions µ, ν P(X ) withcontaminated versions µ̃ Bε (µ), ν̃ Bε (ν), achieveslow error Ŵ (µ̃, ν̃) Wp (µ, ν) . In general, this errorcan be unbounded, so we require that the base measures belong to some family D capturing distributional3This definition will be convenient but omits a factor of1/2 often present in machine learning literature.assumptions, e.g., bounded moments of some order. Forany ε [0, 1] and D P(X ), we define the minimaxrobust estimation error byE(D, ε) : infsupŴ :P(X )2 R µ,ν Dµ̃ Bε (µ)ν̃ Bε (ν)Ŵ (µ̃, ν̃) Wp (µ, ν) .Our interest in the robust Wasserstein distance Wpε , asdefined in (1), stems from the following theorem, characterizing it as the minimax optimal robust proxy forWp under Huber contamination and standard momentassumptions.Theorem 1 (Robust estimation of Wp ). Fix q p and let Dq : {µ Pq (X ) : kd(·, x)kLq (µ) M for some x X } denote the family of distributionswith centered qth moments uniformly bounded by anabsolute constant M . Then, for 0 ε 1/4, we haveE(Dq , ε) . M ε1/p 1/q .(4)This error rate is obtained by Ŵ Wpε and is optimal solong as X contains two points at distance Θ(M ε 1/q ).The distance assumption is quite mild and is satisfied,e.g., when X has a path connected component withdiameter at least M ε 1/q . We outline the proof below,with full details provided in Appendix A.2.Proof Sketch. The upper bound relies crucially onO(ε)the fact that Wpε (µ̃, ν̃) Wp (µ, ν) Wp (µ, ν) Wp (µ, ν) , allowing one to bound E(Dq , ε) uniformlyover contaminated measures. To control the upper bound, we use that Wp (µ, ν) kd(·, x0 )kLq (µ) kd(·, x1 )kLq (ν) d(x0 , x1 ) for q p, which holds for anyx0 , x1 X and connects Wp to the moment boundsencoded by Dq . The lower bound follows by a standardmodulus of continuity argument which givesE(D, ε) &sup Wp (µ, µ̃)µ,µ̃ Dµ̃ Bε (µ)for any choice of D P(X ). In the case of D Dq ,a simple choice of µ and µ̃ supported on at most twopoints gives a matching lower bound under the distanceassumption. Importantly, the hidden constants in thisresult are absolute and independent of X (in particular,they hold even when X is unbounded).We next specialize Theorem 1 to the common case ofX Rd with base measures whose covariance matricesΣµ , Σν have bounded spectral norms. Such measuresalso have bounded moments in the sense of Theorem 1,so the previous upper bound applies. The lower boundfor this case (given in Appendix A.3) uses the sametechnique as before but with a more careful choice ofmeasures involving a multivariate Gaussian.

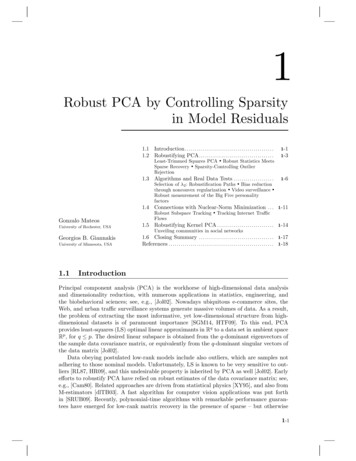

Outlier-Robust Optimal Transport: Duality, Structure, and Statistical AnalysisCorollary 1 (Bounded covariance). Fix X Rd andlet D2cov : {µ P2 (X ) : Σµ Id }. For p 2 and0 ε 1/4, we have E(D2cov , ε) d ε1/p 1/2 ,and this optimal error rate is achieved by Ŵ Wpε .Remark 1 (Comparison with robust mean estimation).In the setting of mean estimation under assumptionsanalogous to Corollary 1 (i.e., Σµ Id , µ̃ Bε (µ)), theoptimal error rate of ε is dimension-free(Chen et al., 2018). We interpret the factor of d present for ourWp rate as reflecting the high-dimensional optimizationinherent to the Wasserstein distance.Remark 2 (Breakdown point). We note that thebound of 1/4 on ε in both results can be substitutedwith any constant less than 1/3. We conjecture thatthis breakdown point of 1/3 can be pushed to itsinformation-theoretic limit of 1/2.Remark 3 (Asymmetric contamination). The robustdistance from (1) readily extends to an asymmetric disε ,εtance Wpµ ν with distinct robustness radii, so thatεWp Wpε,ε . Extensions of our main results (including Theorem 1) to this setting are presented inAppendix B.1. The one-sided version Wpε (µkν) : Wpε,0 (µ, ν) is well-suited for applications such as generative modeling (see Section 6).Our precise error bounds exploit the unique structure ofWpε and do not translate clearly to existing robust proxies for Wp . We note, however, that the TV-robustifiedWp presented in Balaji et al. (2020) can be controlledand approximated to some extent by Wpε , via boundspresented in Appendix B.2.3DUALITY THEORY FOR WpεIn addition to its robustness properties, Wpε enjoysa simple optimization structure that enables a usefulduality theory. Unless stated otherwise, we henceforthassume that X is compact. To begin, we reformulateWpε (µ, ν) as a minimization problem over Huber ballscentered at µ and ν.Proposition 1 (Mass addition). For all p [1, ]and µ, ν P(X ), we haveFigure 1: Huber ε-contamination balls (green) and ε-TVballs (blue), centered at distinct points (red) within the 2dimensional simplex. The Huber balls with different centersare translates of each other, while the rightmost TV ballinteracts non-trivially with the simplex boundary.relies on the symmetric nature of the OT distanceobjective. Roughly, instead of removing a piece massfrom one measure, we may always add it to the other.This reformulation is valuable because the updatedconstraint sets are simple and do not interact with thesimplex boundary. Specifically, definition (3) revealsthat the Huber ball Bδ (µ) is always an affine shift ofP(X ), with scaling independent of µ. Hence, linearoptimization over Bδ (µ) is straightforward:Zinfµ0 Bδ (µ)f dµ0 (1 ε)Zf dµ δ inf f (x). (6)x XThe above stands in contrast to the TV balls (i.e.,sets of the form {µ0 P(X ) : kµ0 µkTV δ}) thatappear in existing robust OT formulations; these exhibit non-trivial boundary interactions as depicted inFig. 1.R Fortunately, Wp is closely tied to the linear formµ 7 f dµ via Kantorovich duality—a cornerstone forvarious theoretical derivations and practical implementations. Combining this with a minimax result, weestablish a related dual form for Wpε .Theorem 2 (Dual form). For p [1, ), ε [0, 1],and µ, ν P(X ), we haveZ(1 ε)Wpε (µ, ν)p supZf dµ f c dν 2εkf k f Cb (X )Z supZf dµ f c dν ε Range(f ),(7)f Cb (X )(5)and the suprema are achieved by f Cb (X ) with f (f c )c .While the original definition (1) involves removing massfrom the base measures and rescaling, (5) is optimizingover mass added to µ and ν (up to scaling). Our proofin Appendix A.4 of this somewhat surprising resultThis new formulation differs from the classic dual (2)by a range penalty for the potential function. Whenp 1, we have f c f and f (f c )c exactly when fis 1-Lipschitz. The theorem is proven in Appendix A.5,where we first apply Proposition 1 and then invokeKantorovich duality for Wp (µ0 , ν 0 ), while verifying thatthe conditions for Sion’s minimax theorem hold true.Wpε (µ, ν) (1 2δ) 1/pinfµ0 Bδ (µ)ν 0 Bδ (ν)00Wp (µ , ν ),where δ ε/(1 ε).

Sloan Nietert, Rachel Cummings, Ziv GoldfeldApplying minimax givesinfµ0 Bδ (µ)ν 0 Bδ (ν)Wp (µ0 , ν 0 ) supZinf0f Cb (X ) µ Bδ (µ)Z0f dµ infν 0 Bδ (ν)cf dν0 ,where δ ε/(1 ε). At this point, we employ (6), alongwith properties of the c-transform, to obtain the desireddual. We stress that if µ0 and ν 0 instead varied withinTV balls, the inner minimization problems would notadmit closed forms due to boundary interactions.Theorem 2 reveals an elementary procedure for robustifying the Wasserstein distance against outliers:regularize its standard Kantorovich dual w.r.t. the supnorm of the potential function. The simplicity of thismodification is its main strength. As demonstrated inSection 6, this enables adjusting popular duality-basedOT solvers, e.g., Arjovsky et al. (2017), to the robust framework and opens the door for applications togenerative modeling with contaminated datasets. Weprovide an interpretation for the maximizing potentialsin Section 4. Some concrete examples for computingWpε are found in Appendix B.3.Remark 4 (TV as a dual norm). Recall that k · kTVis the dual norm corresponding to the Banach space ofmeasurable functions on X equipped with k · k . Aninspection of the proof of Theorem 2 reveals that ourpenalty scales with k · k precisely for this reason.Finally, we describe an alternative dual form which tiesrobust OT to loss trimming—a popular practical toolfor robustifying estimation algorithms when µ and νhave finite support (Shen and Sanghavi, 2019).Proposition 2 (Loss trimming dual). Fix p [1, )and take µ, ν to be uniform distributions over n pointseach. If ε [0, 1] is a multiple of 1/n, thenWpε (µ, ν)p supf Cb (X ) minA supp(µ) A (1 ε)n1 Xf (x) A x AminB supp(ν) B (1 ε)n X1 f c (y) . B y BThe inner minimization problems above clip out theεn fraction of samples whose potential evaluations arelargest. This is similar to how standard loss trimmingclips out a fraction of samples that contribute most tothe considered training loss.4STRUCTURAL PROPERTIESFigure 2: The gridded light blue and green regions eachhave mass ε, respectively, and are removed to obtain optimalµ0 and ν 0 for W1ε . No mass need be removed from the darkregion designating µ ν.4.1Primal and Dual OptimizersWe first prove that there are primal and dual optimizerssatisfy certain regularity conditions.Proposition 3 (Existence of minimizers). For p [1, ] and µ, ν P(X ), the infimum in (1) is achieved,and there are minimizers µ0 µ and ν 0 ν such thatµ0 , ν 0 µ ν and µ0 (X ) ν 0 (X ) 1 ε.We remark that the lower envelope of µ ν, illustratedin Fig. 2, is straightforward when p 1, since W1 (µ, ν)is a function of µ ν. However, this conclusion isnot obvious for p 1, and its proof in Appendix A.6utilizes a discretization argument. For achieving theinfimum, we show for p that the constraint set iscompact w.r.t. the classic Wasserstein topology, whilethe objective is clearly continuous in Wp . For p ,we observe that the constraint set is compact and theobjective is lower semicontinuous w.r.t. the topologyof weak convergence over P (X ).Proposition 4 (Interpreting maximizers). If f Cb (X ) maximizes (7), then any µ0 , ν 0 M (X ) minimizing (1) satisfy supp(µ µ0 ) argmax(f ) andsupp(ν ν 0 ) argmin(f ).Thus, the level sets of the dual potential encode thelocation of outliers in the original measures, as depicted in Fig. 3. In fact, optimal perturbations µ µ0and ν ν 0 are sometimes determined exactly by anoptimal potential f , often taking the form µ argmax(f )and ν argmax(f ) (though not always; we discuss this inAppendix A.11 along with the proof).4.2Regularity in Robustness RadiusWe examine how Wpε depends on the robustness radius.Proposition 5 (Dependence on ε). For any p [1, ],0 ε ε0 1, and µ, ν P(X ), we havekµ νkTV /2(i) Wp(µ, ν) 0, Wp0 (µ, ν) Wp (µ, ν);0We turn to structural properties of Wpε , exploring primal and dual optimizers, regularity of Wpε in , as wellas an alternative (near) coupling-based primal form.(ii) Wpε (µ, ν) Wpε (µ, ν); p1 0 p100 ε(iii) Wpε (µ, ν) 1 εWpε (µ, ν) 4 diam(X ) ε1 ε.1 ε

Outlier-Robust Optimal Transport: Duality, Structure, and Statistical Analysis𝑓𝑓𝜇𝜇of optimal (near) couplings also holds. A proof inAppendix A.9 provides an affirmative answer via aΓ-convergence argument.𝜈𝜈Proposition 7 (Convergence of couplings). Fix p [1, ] and µ, ν P(X ). If εn & 0 and πn P(X X )is optimal for Wpεn (µ, ν) via (8), for each n N, then{πn }n N admits a subsequence converging weakly to anoptimal coupling for Wp (µ, ν).Figure 3: Optimal potentials: (top) 1D densities plottedwith their optimal potential for the W1ε dual problem; (bottom) contour plots for optimal dual potentials to W1 andW1ε between 2D Gaussian mixtures. Observe how optimalpotentials for the robust dual are flat over outlier mass.The proof is given in Appendix A.7. More precise,diameter independent, bounds in the form of (iv) areprovided in the proofs of the robustness results fromSection 2, but these require µ and ν to satisfy certainmoment bounds that do not hold in general.4.3Finally, we consider a case of practical importance: thediscrete setting where µ and ν have finite supports.Like classic OT, computing Wpε (µ, ν) between discretemeasures amounts to a linear program for p and can be solved in polynomial time. The proof inAppendix A.10 starts from the alternative primal formof Proposition 6 and analyzes the feasible polytopewhen the support sizes are equal.Proposition 8 (Finite support). Let µ and ν be uniform discrete measures over n points each. Then thereexist optimal µ0 , ν 0 for Wpε (µ, ν) such that µ0 and ν 0each give mass 1/n to b(1 ε)nc points and assigntheir remaining dεne/n ε mass to a single point.When ε is a multiple of 1/n, the propositions saysthat there exist minimizers which assign equal massto (1 ε)n points, while eliminating the remaining εnthat are identified as outliers.Alternative Primal FormMirroring the primal Kantorovich problem for classicWp , we derive in Appendix A.8 an alternative primalform for Wpε in terms of (near) couplings for µ and ν.Proposition 6 (Alternative primal form). For anyp [1, ] and µ, ν P(X ), we have5We now examine estimation of Wpε from observed data.Throughout this section, we fix ε 1/2.5.1Wpε (µ, ν) infπ P(X X )µ Bε (π1 ), ν Bε (π2 )kdkLp (π) ,(8)where π1 and π2 are the respective marginals of π.Remark 5 (Data privacy). From this, we deduce thatεW (µ, ν) M if and only if there exists a coupling(X, Y ) for µ, ν such that X Y M with probabilityat least 1 Θ(ε). In Appendix C, we state this moreprecisely and leverage this fact for an application todata privacy. Specifically, within the Pufferfish privacyframework, the so-called Wasserstein Mechanism (Songet al., 2017) maximizes W over certain pairs of distributions to provide a strong privacy guarantee. Byεsubstituting W with W , we reach an alternativemechanism that satisfies a slightly relaxed guaranteeand can introduce significantly less noise.Proposition 5 implies that limε 0 Wpε Wp , posing Wpεas a natural extension of Wp . Given the representationin Proposition 6, we now ask whether convergenceSTATISTICAL ANALYSISEmpirical Convergence RatesIn practice, we often have access only to samples fromµ, ν P(X ), which motivates the study of empiriεcal convergence underempiricalPn Wp . Consider thePn 1 1measures µ̂n : ni 1 δXi and ν̂n : ni 1 δYi ,where X1 , . . . , Xn and Y1 , . . . , Yn are i.i.d. samples fromµ and ν, respectively. We examine both the one- andtwo-sample scenarios, i.e., the speed at which Wpε (µ, µ̂n )and Wpε (µ̂n , ν̂n ) Wpε (µ, ν) converge to 0 as n grows.This section assumes that X Rd with d 2; extensions to the non-Euclidean case are provided in theAppendices A.13 to A.15. To state the results, we needsome definitions. Recall the covering number Nδ (γ, τ ),defined for δ 0, τ 0, and γ M (X ) as the minimum number of closed balls of radius δ needed to coverX up to a set A with γ(A) τ . We define the lowerτ -covering dimension for γ asdτ (µ) : lim infδ 0Nδ (γ, τ ). log(δ)

Sloan Nietert, Rachel Cummings, Ziv GoldfeldWe note that lower bounds for standard Wp depend onthe lower Wasserstein dimension given by limτ 0 dτ (µ)(Weed and Bach, 2019). To provide meaningful boundsfor Wpε , on the other hand, we require control of dτ (µ)for some τ ε, which can be understood as a robustnotion of dimension.The proof in Appendix A.15 is a consequence of the dualform from Theorem 2, combined with standard onesample rates for Wp . There, we also discuss obstaclesto extending two-sample lower bounds for standard Wpto the robust setting.Proposition 9 (One-sample rates). Fix p [1, ]and τ (ε, 1]. If p d/2, we have5.2 E Wpε (µ, µ̂n ) Cn 1/dfor a constant C independent of n and ε. Furthermore,if dτ (µ) s , then1/pWpε (µ, γ) C 0 (τ ε)n 1/sfor any γ supported on at most n points, including µ̂n ,where C 0 0 is an absolute constant.The robust τ -covering dimension can be smaller thanstandard notions of intrinsic dimension when all butthe outlier mass is lower dimensional. Our lower boundcaptures this fact. The upper bound depends on theambient dimension d since there is no guarantee thatonly outlier mass is supported on a high-dimensionalset4 . Indeed, the following corollary shows that whena significant portion of mass is high-dimensional, then 1/d rate is sharp.Corollary 2 (Simple one-sample rate). Fix p d/2and ε (0,R 1]. If µ has absolutely continuous partf dλ with f dλ ε and f bounded from above, thendτ (µ) d and E Wpε (µ, µ̂n ) Θ(n 1/d ).In words, if more than the ε mass that can be removed via robustification is absolutely continuous(w.r.t. Lebesgue on Rd ), then the standard n 1/d“curse of dimensionality” applies. Nevertheless, we conjecture that an upper bound that depends on a robustupper dimension can be derived under appropriate assumptions on µ, as discussed in Section 7. The proofs ofProposition 9 and Corollary 2 are found in AppendicesA.13 and A.14, respectively.Moving to the two-sample regime, we again have anupper bound which matches the standard rate for Wp .Proposition 10 (Two-sample rate). For any ε [0, 1]and p d/2, we haveE Wpε (µ̂n , ν̂n )p Wpε (µ, ν)p Cn p/d .for a constant C independent of n and ε.Additional Robustness GuaranteesFinally, we provide conditions under which Wp (µ, ν)can be recovered precisely from Wpε (µ, ν) despite datacontamination. Naturally, these conditions are strongerthan those needed for approximate (minimax optimal)robust approximation, as studied in Section 2. Wereturn to the Huber ε-contamination model, taking µ̃ Bε (µ) and ν̃ Bε (ν). One cannot hope to achieve exactrecovery via Wpε in general, since Wpε (µ̃, ν̃) Wp (µ, ν)when µ̃ µ and ν̃ ν for p . Nevertheless, we canprovide exact recovery guarantees under appropriatemass separation assumptions.Proposition 11 (Exact recovery). Fix µ, ν P(X ),ε [0, 1], and suppose that µ̃ (1 ε)µ εα andν̃ (1 ε)ν εβ, for some α, β P(X ). LetS supp(µ ν). If d(supp(α), S), d(supp(β), S), andd(supp(α), supp(β)) are all greater than diam(S), thenWpε (µ̃, ν̃) Wp (µ, ν).Our proof in Appendix A.16 uses an infinitesimal perturbation argument and shows that, when outliers aresufficiently far away, removing outlier mass is strictlybetter than inlier mass for minimizing Wp . The appendix also discussed more flexible albeit technicalassumptions under which exact recovery is possible,and provides bounds for when the robustness radiusdoes not match the contamination level ε exactly.Proposition 11 relies on the contamination level ε being known, which is often not the case in practice. Toaccount for this, we prove in Appendix A.17 that, under the same assumptions, there exists a principledapproach for selecting the robustness radius when ε isunknown.Proposition 12 (Robustness radius for unknown ε).Assume the setting of Proposition 11 and let D bethe maximum of d(supp(α), S), d(supp(β), S), andd(supp(α), supp(β)). Then, for p [1, ), we have dδp Dp ,δ [0, ε)dδ (1 δ)Wp (µ̃, ν̃) dδppp diam(S) D , δ (ε, 1]dδ (1 δ)Wp (µ̃, ν̃)at the (all but countably many) points where the derivative is defined.As (1 δ)W

scribing the regularity of the problem in ", and drawing a connection between W" p and loss trimming (Shen and Sanghavi,2019). Finally, we study statistical aspects of W" p, examining both one- and two-sample empirical convergence rates and providing additional robustness guarantees. The derived empirical convergence rates