Transcription

The Data WarehouseETL Toolkit

The Data WarehouseETL ToolkitPractical Techniques forExtracting, Cleaning,Conforming, andDelivering DataRalph KimballJoe CasertaWiley Publishing, Inc.

Published byWiley Publishing, Inc.10475 Crosspoint BoulevardIndianapolis, IN 46256www.wiley.comC 2004 by Wiley Publishing, Inc. All rights reserved.Copyright Published simultaneously in CanadaeISBN: 0-764-57923-1Printed in the United States of America10 9 8 7 6 5 4 3 2 1No part of this publication may be reproduced, stored in a retrieval system, or transmitted in anyform or by any means, electronic, mechanical, photocopying, recording, scanning or otherwise, except as permitted under Sections 107 or 108 of the 1976 United States Copyright Act, without eitherthe prior written permission of the Publisher, or authorization through payment of the appropriateper-copy fee to the Copyright Clearance Center, 222 Rosewood Drive, Danvers, MA 01923, (978)750-8400, fax (978) 646-8600. Requests to the Publisher for permission should be addressed to theLegal Department, Wiley Publishing, Inc., 10475 Crosspoint Blvd., Indianapolis, IN 46256, (317)572-3447, fax (317) 572-4355, e-mail: brandreview@wiley.com.Limit of Liability/Disclaimer of Warranty: The publisher and the author make no representationsor warranties with respect to the accuracy or completeness of the contents of this work and specifically disclaim all warranties, including without limitation warranties of fitness for a particularpurpose. No warranty may be created or extended by sales or promotional materials. The adviceand strategies contained herein may not be suitable for every situation. This work is sold withthe understanding that the publisher is not engaged in rendering legal, accounting, or other professional services. If professional assistance is required, the services of a competent professionalperson should be sought. Neither the publisher not the author shall be liable for damages arisingherefrom. The fact that an organization or Website is referred to in this work as a citation and/ora potential source of further information does not mean that the author or the publisher endorsesthe information the organization or Website may provide or recommendations it may make. Further, readers should be aware that Internet Websites listed in this work may have changed ordisappeared between when this work was written and when it is read.For general information on our other products and services please contact our Customer CareDepartment within the United States at (800) 762-2974, outside the United States at (317) 572-3993or fax (317) 572-4002.Wiley also publishes its books in a variety of electronic formats. Some content that appears in printmay not be available in electronic books.Library of Congress Cataloging-in-Publication DataKimball, Ralph.The data warehouse ETL toolkit : practical techniques for extracting, cleaning, conforming, anddelivering data / Ralph Kimball, Joe Caserta.p. cm.Includes index.eISBN 0-7645-7923 -11. Data warehousing. 2. Database design. I. Caserta, Joe, 1965- II. emarks: Wiley, the Wiley Publishing logo, and related trade dress are trademarks or registeredtrademarks of John Wiley & Sons, Inc. and/or its affiliates. All other trademarks are the propertyof their respective owners. Wiley Publishing, Inc., is not associated with any product or vendormentioned in this book.

CreditsVice President and ExecutiveGroup Publisher:Richard SwadleyVice President and Publisher:Joseph B. WikertExecutive Editorial Director:Mary BednarekExecutive Editor:Robert ElliotEditorial Manager:Kathryn A. MalmDevelopment Editor:Adaobi Obi TultonProduction Editor:Pamela HanleyMedia Development Specialist:Travis SilversText Design & Composition:TechBooks Composition Services

ContentsAcknowledgmentsxviiAbout the AuthorsxixIntroductionxxiPart IRequirements, Realities, and Architecture1Chapter 1Surrounding the RequirementsRequirementsBusiness NeedsCompliance RequirementsData ProfilingSecurity RequirementsData IntegrationData LatencyArchiving and LineageEnd User Delivery InterfacesAvailable SkillsLegacy LicensesArchitectureETL Tool versus Hand Coding (Buy a Tool Suite or RollYour Own?)The Back Room – Preparing the DataThe Front Room – Data AccessThe Mission of the Data WarehouseWhat the Data Warehouse IsWhat the Data Warehouse Is NotIndustry Terms Not Used Consistently344456778899910162022222325vii

viiiContentsResolving Architectural Conflict: A Hybrid ApproachHow the Data Warehouse Is ChangingThe Mission of the ETL TeamChapter 2272728ETL Data StructuresTo Stage or Not to StageDesigning the Staging AreaData Structures in the ETL SystemFlat FilesXML Data SetsRelational TablesIndependent DBMS Working TablesThird Normal Form Entity/Relation ModelsNonrelational Data SourcesDimensional Data Models: The Handoff from the BackRoom to the Front RoomFact TablesDimension TablesAtomic and Aggregate Fact TablesSurrogate Key Mapping TablesPlanning and Design StandardsImpact AnalysisMetadata CaptureNaming ConventionsAuditing Data Transformation StepsSummary29293135353840414242Part IIData Flow53Chapter 3ExtractingPart 1: The Logical Data MapDesigning Logical Before PhysicalInside the Logical Data MapComponents of the Logical Data MapUsing Tools for the Logical Data MapBuilding the Logical Data MapData Discovery PhaseData Content AnalysisCollecting Business Rules in the ETL ProcessIntegrating Heterogeneous Data SourcesPart 2: The Challenge of Extracting from DisparatePlatformsConnecting to Diverse Sources through ODBCMainframe SourcesWorking with COBOL CopybooksEBCDIC Character SetConverting EBCDIC to 67678787980

ContentsFlat FilesProcessing Fixed Length Flat FilesProcessing Delimited Flat FilesXML SourcesCharacter SetsXML Meta DataWeb Log SourcesW3C Common and Extended FormatsName Value Pairs in Web LogsERP System SourcesPart 3: Extracting Changed DataDetecting ChangesExtraction TipsDetecting Deleted or Overwritten Fact Records at the 2105106109111111Cleaning and ConformingDefining Data QualityAssumptionsPart 1: Design ObjectivesUnderstand Your Key ConstituenciesCompeting FactorsBalancing Conflicting PrioritiesFormulate a PolicyPart 2: Cleaning DeliverablesData Profiling DeliverableCleaning Deliverable #1: Error Event TableCleaning Deliverable #2: Audit DimensionAudit Dimension Fine PointsPart 3: Screens and Their MeasurementsAnomaly Detection PhaseTypes of EnforcementColumn Property EnforcementStructure EnforcementData and Value Rule EnforcementMeasurements Driving Screen DesignOverall Process FlowThe Show Must Go 25128130131131134134135135136136138139Transferring Data between PlatformsHandling Mainframe Numeric DataUsing PICturesUnpacking Packed DecimalsWorking with Redefined FieldsMultiple OCCURSManaging Multiple Mainframe Record Type FilesHandling Mainframe Variable Record LengthsChapter 4ix

xContentsKnown Table Row CountsColumn NullityColumn Numeric and Date RangesColumn Length RestrictionColumn Explicit Valid ValuesColumn Explicit Invalid ValuesChecking Table Row Count ReasonabilityChecking Column Distribution ReasonabilityGeneral Data and Value Rule ReasonabilityPart 4: Conforming DeliverablesConformed DimensionsDesigning the Conformed DimensionsTaking the PledgePermissible Variations of Conformed DimensionsConformed FactsThe Fact Table ProviderThe Dimension Manager: Publishing ConformedDimensions to Affected Fact TablesDetailed Delivery Steps for Conformed DimensionsImplementing the Conforming ModulesMatching Drives DeduplicationSurviving: Final Step of ConformingDeliveringSummaryChapter 5Delivering Dimension TablesThe Basic Structure of a DimensionThe Grain of a DimensionThe Basic Load Plan for a DimensionFlat Dimensions and Snowflaked DimensionsDate and Time DimensionsBig DimensionsSmall DimensionsOne Dimension or TwoDimensional RolesDimensions as Subdimensions of Another DimensionDegenerate DimensionsSlowly Changing DimensionsType 1 Slowly Changing Dimension (Overwrite)Type 2 Slowly Changing Dimension (Partitioning History)Precise Time Stamping of a Type 2 Slowly ChangingDimensionType 3 Slowly Changing Dimension (Alternate Realities)Hybrid Slowly Changing DimensionsLate-Arriving Dimension Records and Correcting Bad DataMultivalued Dimensions and Bridge TablesRagged Hierarchies and Bridge TablesTechnical Note: POPULATING HIERARCHY BRIDGE 76178180182183183185190192193194196199201

ContentsUsing Positional Attributes in a Dimension to RepresentText FactsSummaryChapter 6Delivering Fact TablesThe Basic Structure of a Fact TableGuaranteeing Referential IntegritySurrogate Key PipelineUsing the Dimension Instead of a Lookup TableFundamental GrainsTransaction Grain Fact TablesPeriodic Snapshot Fact TablesAccumulating Snapshot Fact TablesPreparing for Loading Fact TablesManaging IndexesManaging PartitionsOutwitting the Rollback LogLoading the DataIncremental LoadingInserting FactsUpdating and Correcting FactsNegating FactsUpdating FactsDeleting FactsPhysically Deleting FactsLogically Deleting FactsFactless Fact TablesAugmenting a Type 1 Fact Table with Type 2 HistoryGraceful ModificationsMultiple Units of Measure in a Fact TableCollecting Revenue in Multiple CurrenciesLate Arriving FactsAggregationsDesign Requirement #1Design Requirement #2Design Requirement #3Design Requirement #4Administering Aggregations, Including MaterializedViewsDelivering Dimensional Data to OLAP CubesCube Data SourcesProcessing DimensionsChanges in Dimension DataProcessing FactsIntegrating OLAP Processing into the ETL SystemOLAP 8239241243244245246246247248248249250252253253xi

xiiContentsPart IIIImplementation and operations255Chapter 7DevelopmentCurrent Marketplace ETL Tool Suite OfferingsCurrent Scripting LanguagesTime Is of the EssencePush Me or Pull MeEnsuring Transfers with SentinelsSorting Data during PreloadSorting on Mainframe SystemsSorting on Unix and Windows SystemsTrimming the Fat (Filtering)Extracting a Subset of the Source File Records on MainframeSystemsExtracting a Subset of the Source File FieldsExtracting a Subset of the Source File Records on Unix andWindows SystemsExtracting a Subset of the Source File FieldsCreating Aggregated Extracts on Mainframe SystemsCreating Aggregated Extracts on UNIX and WindowsSystemsUsing Database Bulk Loader Utilities to Speed InsertsPreparing for Bulk LoadManaging Database Features to Improve PerformanceThe Order of ThingsThe Effect of Aggregates and Group Bys on PerformancePerformance Impact of Using Scalar FunctionsAvoiding TriggersOvercoming ODBC the BottleneckBenefiting from Parallel ProcessingTroubleshooting Performance ProblemsIncreasing ETL ThroughputReducing Input/Output ContentionEliminating Database Reads/WritesFiltering as Soon as PossiblePartitioning and ParallelizingUpdating Aggregates IncrementallyTaking Only What You NeedBulk Loading/Eliminating LoggingDropping Databases Constraints and IndexesEliminating Network TrafficLetting the ETL Engine Do the sScheduling and SupportReliability, Availability, Manageability Analysis for ETLETL Scheduling 101301302302303Chapter 94296296297297298299299299300300300

ContentsScheduling ToolsLoad DependenciesMetadataMigrating to ProductionOperational Support for the Data WarehouseBundling Version ReleasesSupporting the ETL System in ProductionAchieving Optimal ETL PerformanceEstimating Load TimeVulnerabilities of Long-Running ETL processesMinimizing the Risk of Load FailuresPurging Historic DataMonitoring the ETL SystemMeasuring ETL Specific Performance IndicatorsMeasuring Infrastructure Performance IndicatorsMeasuring Data Warehouse Usage to Help Manage ETLProcessesTuning ETL ProcessesExplaining Database OverheadETL System SecuritySecuring the Development EnvironmentSecuring the Production EnvironmentShort-Term Archiving and RecoveryLong-Term Archiving and RecoveryMedia, Formats, Software, and HardwareObsolete Formats and Archaic FormatsHard Copy, Standards, and MuseumsRefreshing, Migrating, Emulating, and EncapsulatingSummaryChapter 9MetadataDefining MetadataMetadata—What Is It?Source System MetadataData-Staging MetadataDBMS MetadataFront Room MetadataBusiness MetadataBusiness DefinitionsSource System InformationData Warehouse Data DictionaryLogical Data MapsTechnical MetadataSystem InventoryData ModelsData DefinitionsBusiness RulesETL-Generated 2353354355356359360361362363363364365365366367xiii

xivContentsETL Job MetadataTransformation MetadataBatch MetadataData Quality Error Event MetadataProcess Execution MetadataMetadata Standards and PracticesEstablishing Rudimentary StandardsNaming ConventionsImpact er 10 ResponsibilitiesPlanning and LeadershipHaving Dedicated LeadershipPlanning Large, Building SmallHiring Qualified DevelopersBuilding Teams with Database ExpertiseDon’t Try to Save the WorldEnforcing StandardizationMonitoring, Auditing, and Publishing StatisticsMaintaining DocumentationProviding and Utilizing MetadataKeeping It SimpleOptimizing ThroughputManaging the ProjectResponsibility of the ETL TeamDefining the ProjectPlanning the ProjectDetermining the Tool SetStaffing Your ProjectProject Plan GuidelinesManaging 0391391392393393394401412416Part IV419Real Time Streaming ETL SystemsChapter 11 Real-Time ETL SystemsWhy Real-Time ETL?Defining Real-Time ETLChallenges and Opportunities of Real-Time DataWarehousingReal-Time Data Warehousing ReviewGeneration 1—The Operational Data StoreGeneration 2—The Real-Time PartitionRecent CRM TrendsThe Strategic Role of the Dimension ManagerCategorizing the Requirement421422424424425425426428429430

ContentsData Freshness and Historical NeedsReporting Only or Integration, Too?Just the Facts or Dimension Changes, Too?Alerts, Continuous Polling, or Nonevents?Data Integration or Application Integration?Point-to-Point versus Hub-and-SpokeCustomer Data Cleanup ConsiderationsReal-Time ETL ApproachesMicrobatch ETLEnterprise Application IntegrationCapture, Transform, and FlowEnterprise Information IntegrationThe Real-Time Dimension ManagerMicrobatch ProcessingChoosing an Approach—A Decision 7452456459Chapter 12 ConclusionsDeepening the Definition of ETLThe Future of Data Warehousing and ETL in ParticularOngoing Evolution of ETL Systems461461463464Index467xv

AcknowledgmentsFirst of all we want to thank the many thousands of readers of the Toolkitseries of data warehousing books. We appreciate your wonderful supportand encouragement to write a book about data warehouse ETL. We continueto learn from you, the owners and builders of data warehouses.Both of us are especially indebted to Jim Stagnitto for encouraging Joeto start this book and giving him the confidence to go through with theproject. Jim was a virtual third author with major creative contributions tothe chapters on data quality and real-time ETL.Special thanks are also due to Jeff Coster and Kim M. Knyal for significantcontributions to the discussions of pre- and post-load processing and projectmanaging the ETL process, respectively.We had an extraordinary team of reviewers who crawled over the firstversion of the manuscript and made many helpful suggestions. It is always daunting to make significant changes to a manuscript that is “done”but this kind of deep review has been a tradition with the Toolkit seriesof books and was successful again this time. In alphabetic order, the reviewers included: Wouleta Ayele, Bob Becker, Jan-Willem Beldman, IvanChong, Maurice Frank, Mark Hodson, Paul Hoffman, Qi Jin, David Lyle,Michael Martin, Joy Mundy, Rostislav Portnoy, Malathi Vellanki, PadminiRamanujan, Margy Ross, Jack Serra-Lima, and Warren Thornthwaite.We owe special thanks to our spouses Robin Caserta and Julie Kimball fortheir support throughout this project and our children Tori Caserta, BrianKimball, Sara (Kimball) Smith, and grandchild(!) Abigail Smith who werevery patient with the authors who always seemed to be working.Finally, the team at Wiley Computer books has once again been a realasset in getting this book finished. Thank you Bob Elliott, Kevin Kent, andAdaobi Obi Tulton.xvii

About the AuthorsRalph Kimball, Ph.D., founder of the Kimball Group, has been a leadingvisionary in the data warehouse industry since 1982 and is one of today’smost well-known speakers, consultants, teachers, and writers. His books include The Data Warehouse Toolkit (Wiley, 1996), The Data Warehouse LifecycleToolkit (Wiley, 1998), The Data Webhouse Toolkit (Wiley, 2000), and The DataWarehouse Toolkit, Second Edition (Wiley, 2002). He also has written for Intelligent Enterprise magazine since 1995, receiving the Readers’ Choice Awardsince 1999.Ralph earned his doctorate in electrical engineering at Stanford Universitywith a specialty in man-machine systems design. He was a research scientist, systems development manager, and product marketing manager atXerox PARC and Xerox Systems’ Development Division from 1972 to 1982.For his work on the Xerox Star Workstation, the first commercial productwith windows, icons, and a mouse, he received the Alexander C. Williamsaward from the IEEE Human Factors Society for systems design. From 1982to 1986 Ralph was Vice President of Applications at Metaphor ComputerSystems, the first data warehouse company. At Metaphor, Ralph inventedthe “capsule” facility, which was the first commercial implementation of thegraphical data flow interface now in widespread use in all ETL tools. From1986 to 1992 Ralph was founder and CEO of Red Brick Systems, a providerof ultra-fast relational database technology dedicated to decision support.In 1992 Ralph founded Ralph Kimball Associates, which became known asthe Kimball Group in 2004. The Kimball Group is a team of highly experienced data warehouse design professionals known for their excellence inconsulting, teaching, speaking, and writing.xix

xxAbout the AuthorsJoe Caserta is the founder and Principal of Caserta Concepts, LLC. He is aninfluential data warehousing veteran whose expertise is shaped by years ofindustry experience and practical application of major data warehousingtools and databases. Joe is educated in Database Application Developmentand Design, Columbia University, New York.

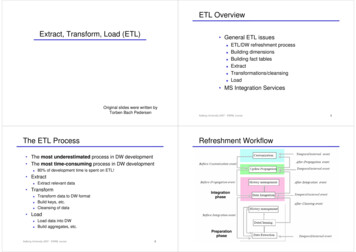

IntroductionThe Extract-Transform-Load (ETL) system is the foundation of the datawarehouse. A properly designed ETL system extracts data from the sourcesystems, enforces data quality and consistency standards, conforms dataso that separate sources can be used together, and finally delivers datain a presentation-ready format so that application developers can buildapplications and end users can make decisions. This book is organizedaround these four steps.The ETL system makes or breaks the data warehouse. Although buildingthe ETL system is a back room activity that is not very visible to end users,it easily consumes 70 percent of the resources needed for implementationand maintenance of a typical data warehouse.The ETL system adds significant value to data. It is far more than plumbing for getting data out of source systems and into the data warehouse.Specifically, the ETL system:Removes mistakes and corrects missing dataProvides documented measures of confidence in dataCaptures the flow of transactional data for safekeepingAdjusts data from multiple sources to be used togetherStructures data to be usable by end-user toolsETL is both a simple and a complicated subject. Almost everyone understands the basic mission of the ETL system: to get data out of the sourceand load it into the data warehouse. And most observers are increasinglyappreciating the need to clean and transform data along the way. So muchfor the simple view. It is a fact of life that the next step in the design ofxxi

xxiiIntroductionthe ETL system breaks into a thousand little subcases, depending on yourown weird data sources, business rules, existing software, and unusualdestination-reporting applications. The challenge for all of us is to toleratethe thousand little subcases but to keep perspective on the simple overallmission of the ETL system. Please judge this book by how well we meetthis challenge!The Data Warehouse ETL Toolkit is a practical guide for building successfulETL systems. This book is not a survey of all possible approaches! Rather,we build on a set of consistent techniques for delivery of dimensional data.Dimensional modeling has proven to be the most predictable and cost effective approach to building data warehouses. At the same time, becausethe dimensional structures are the same across many data warehouses, wecan count on reusing code modules and specific development logic.This book is a roadmap for planning, designing, building, and runningthe back room of a data warehouse. We expand the traditional ETL steps ofextract, transform, and load into the more actionable steps of extract, clean,conform, and deliver, although we resist the temptation to change ETL intoECCD!In this book, you’ll learn to:Plan and design your ETL systemChoose the appropriate architecture from the many possible choicesManage the implementationManage the day-to-day operationsBuild the development/test/production suite of ETL processesUnderstand the tradeoffs of various back-room data structures,including flat files, normalized schemas, XML schemas, and star join(dimensional) schemasAnalyze and extract source dataBuild a comprehensive data-cleaning subsystemStructure data into dimensional schemas for the most effectivedelivery to end users, business-intelligence tools, data-mining tools,OLAP cubes, and analytic applicationsDeliver data effectively both to highly centralized and profoundlydistributed data warehouses using the same techniquesTune the overall ETL process for optimum performanceThe preceding points are many of the big issues in an ETL system. But asmuch as we can, we provide lower-level technical detail for:

IntroductionImplementing the key enforcement steps of a data-cleaning systemfor column properties, structures, valid values, and complex businessrulesConforming heterogeneous data from multiple sources intostandardized dimension tables and fact tablesBuilding replicatable ETL modules for handling the natural timevariance in dimensions, for example, the three types of slowlychanging dimensions (SCDs)Building replicatable ETL modules for multivalued dimensions andhierarchical dimensions, which both require associative bridge tablesProcessing extremely large-volume fact data loadsOptimizing ETL processes to fit into highly constrained loadwindowsConverting batch and file-oriented ETL systems into continuouslystreaming real-time ETL systemsFor illustrative purposes, Oracle is chosen as a common dominator whenspecific SQL code is revealed. However, similar code that presents the same resultscan typically be written for DB2, Microsoft SQL Server, or any popular relationaldatabase system.And perhaps as a side effect of all of these specific recommendations, wehope to share our enthusiasm for developing, deploying, and managingdata warehouse ETL systems.Overview of the Book: Two Simultaneous ThreadsBuilding an ETL system is unusually challenging because it is so heavilyconstrained by unavoidable realities. The ETL team must live with the business requirements, the formats and deficiencies of the source data, the existing legacy systems, the skill sets of available staff, and the ever-changing(and legitimate) needs of end users. If these factors aren’t enough, the budget is limited, the processing-time windows are too narrow, and importantparts of the business come grinding to a halt if the ETL system doesn’tdeliver data to the data warehouse!Two simultaneous threads must be kept in mind when building an ETLsystem: the Planning & Design thread and the Data Flow thread. At thehighest level, they are pretty simple. Both of them progress in an orderlyfashion from left to right in the diagrams. Their interaction makes life veryxxiii

xxivIntroductionRequirements& RealitiesSystemImplementationArchitectureTest & ReleaseFigure Intro-1 The Planning and Design Thread.interesting. In Figure Intro-1 we show the four steps of the Planning &Design thread, and in Figure Intro-2 we show the four steps of the DataFlow thread.To help you visualize where we are in these two threads, in each chapterwe call out process checks. The following example would be used when weare discussing the requirements for data cleaning:P R O C E S S C H E C K Planning & Design:Requirements/Realities Architecture Implementation Test/ReleaseData Flow: Extract Clean Conform DeliverThe Planning & Design ThreadThe first step in the Planning & Design thread is accounting for all therequirements and realities. These include:Business needsData profiling and other data-source realitiesCompliance requirementsSecurity requirementsData integrationData latencyArchiving and lineageMainframeExtractCleanConformDeliverEnd User Applicationsd i gi t a lEnd User ApplicationsProductionSourceFigure Intro-2 The Data Flow Thread.Operations

IntroductionEnd user delivery interfacesAvailable development skillsAvailable management skillsLegacy licensesWe expand these individually in the Chapter 1, but we have to point outat this early stage how much each of these bullets affects the nature of yourETL system. For this step, as well as all the steps in both major threads, wepoint out the places in this book when we are talking specifically about thegiven step.The second step in this thread is the architecture step. Here is where wemust make big decisions about the way we are going to build our ETLsystem. These decisions include:Hand-coded versus ETL vendor toolBatch versus streaming data flowHorizontal versus vertical task dependencyScheduler automationException handlingQuality handlingRecovery and restartMetadataSecurityThe third step in the Planning & Design thread is system implementation.Let’s hope you have spent some quality time on the previous two stepsbefore charging into the implementation! This step includes:HardwareSoftwareCoding practicesDocumentation practicesSpecific quality checksThe final step sounds like administration, but the design of the test andrelease procedures is as important as the more tangible designs of the preceding two steps. Test and release includes the design of the:Development systemsTest systemsxxv

xxviIntroductionProduction systemsHandoff proceduresUpdate propagation approachSystem snapshoting and rollback proceduresPerformance tuningThe Data Flow ThreadThe Data Flow thread is probably more recognizable to most readers because it is a simple generalization of the old E-T-L extract-transform-loadscenario. As you scan these lists, begin to imagine how the Planning & Design thread affects each of the following bullets. The extract step includes:Reading source-data modelsConnecting to and accessing dataScheduling the source system, intercepting notifications anddaemonsCapturing changed dataStaging the extracted data to diskThe clean step involves:Enforcing column propertiesEnforcing structureEnforcing data and value rulesEnforcing complex business rulesBuilding a metadata foundation to describe data qualityStaging the cleaned data to diskThis step is followed closely by the conform step, which includes:Conforming business labels (in dimensions)Conforming business metrics and performance indicators (in zingStaging the conformed data to disk

Introduction xxviiFinally, we arrive at the payoff step where we deliver our wonderful data tothe end-user application. We spend most of Chapters 5 and 6 on deliverytechniques because, as we describe in Chapter 1, you still have to serve thefood after you cook it! Data delivery from the ETL system includes:Loading flat and snowflaked dimensionsGenerating time dimensionsLoading degenerate dimensionsLoading subdimensionsLoading types 1, 2, and 3 slowly changing dimensionsConforming dimensions and conforming factsHandling late-arriving dimensions and late-arriving factsLoading multi-valued dimensionsLoading ragged hierarchy dimensionsLoading text facts in dimensionsRunning the surrogate key pipeline for fact tablesLoading three fundamental fact table grainsLoading and updating aggregationsStaging the delivered data to diskIn studying this last list, you may say, “But most of that list is modeling,not ETL. These issues belong in the front room.” We respectfully disagree.In our interviews with more than 20 data warehouse teams, more thanhalf said that the design of the ETL system took place at the same timeas the design of the target tables. These folks agreed that there were twodistinct roles: data warehouse architect and ETL system designer. But thesetwo roles often were filled by the same person! So this explains why thisbook carries the data all the way from the original sources into each of thedimensional database configurations.The basic four-step data flow is overseen by the operations step, whichextends from the beginning of the extract step to the end of the deliverystep. Operations includes:SchedulingJob executionException handlingRecovery and restartQuality checking

xxviii IntroductionReleaseSupportUnderstanding how to think about these two fundamental threads (Planning & Design and Data Flow) is the real goal of this book.How the Book I

The Data Warehouse ETL Toolkit Practical Techniques for Extracting, Cleaning, Conforming, and Delivering Data Ralph Kimball Joe Caserta Wiley Publishing, Inc. iii. P1: FCH/SPH P2: FCH/SPH QC: FCH/SPH T1: FCH WY046-FM WY046-Kimball-v4.cls