
Studying MOOC Completion at Scale Using the MOOC Replication Framework

Juan Miguel L. Andres, University of Pennsylvania, 3700 Walnut Street, Philadelphia, PA 19104, 1 (877) 736-6473, miglimjapandres@gmail.com
Ryan S. Baker, University of Pennsylvania, 3700 Walnut Street, Philadelphia, PA 19104, 1 (877) 736-6473
Dragan Gašević, University of Edinburgh, Old College, South Bridge, Edinburgh EH8 9YL, UK, 44 (131)
George Siemens, University of Texas Arlington, 701 S Nedderman Drive, Arlington, TX 76019, 1 (817) 272-2011
Scott A. Crossley, Georgia State University, 38 Peachtree Center Ave., Atlanta, GA 30303, 1 (404) 413-5000, sacrossley@gmail.com
Srećko Joksimović, University of Edinburgh, Old College, South Bridge, Edinburgh EH8 9YL, UK, 44 (131)

ABSTRACT
Research on learner behaviors and course completion within Massive Open Online Courses (MOOCs) has been mostly confined to single courses, making the findings difficult to generalize across different data sets and to assess which contexts and types of courses these findings apply to. This paper reports on the development of the MOOC Replication Framework (MORF), a framework that facilitates the replication of previously published findings across multiple data sets and the seamless integration of new findings as new research is conducted or new hypotheses are generated. In the proof of concept presented here, we use MORF to attempt to replicate 15 previously published findings across 29 iterations of 17 MOOCs. The findings indicate that 12 of the 15 findings replicated significantly across the data sets, and that two findings replicated significantly in the opposite direction.
MORF enables larger-scale analysis of MOOC research questions than previously feasible, and enables researchers around the world to conduct analyses on huge multi-MOOC data sets without having to negotiate access to data.

CCS CONCEPTS
Applied computing → Education

KEYWORDS
MOOCs, MORF, MOOC Replication Framework, completion, multi-MOOC analysis, replication, meta-analysis.

Permission to make digital or hard copies of all or part of this work for personal or classroom use is granted without fee provided that copies are not made or distributed for profit or commercial advantage and that copies bear this notice and the full citation on the first page. Copyrights for components of this work owned by others than ACM must be honored. Abstracting with credit is permitted. To copy otherwise, or republish, to post on servers or to redistribute to lists, requires prior specific permission and/or a fee. Request permissions from Permissions@acm.org.
LAK '18, March 7–9, 2018, Sydney, NSW, Australia
© 2018 Association for Computing Machinery.
ACM ISBN 978-1-4503-6400-3/18/03 $15.00
https://doi.org/10.1145/3170358.3170369

ACM Reference format:
Juan Miguel L. Andres, Ryan S. Baker, Dragan Gašević, George Siemens, Scott A. Crossley, and Srećko Joksimović. 2018. Using the MOOC Replication Framework to Examine Course Completion. In Proceedings of the International Conference on Learning Analytics and Knowledge, Sydney, Australia, March 2018 (LAK'18), 8 pages.

1 INTRODUCTION
Massive Open Online Courses (MOOCs) have created new opportunities to study how learning occurs across contexts, with millions of users registered, thousands of courses offered, and billions of student-platform interactions [1]. Both the popularity of MOOCs among students [2] and their benefits to those who complete them [3] suggest that MOOCs present a new, easily scalable, and easily accessible opportunity for learning.
A major criticism of MOOC platforms, however, is their frequently high attrition rates [4], with only 10% or fewer learners completing many popular MOOC courses [1, 5]. As such, a majority of research on MOOCs in the past 3 years has been geared towards understanding and increasing student completion. Researchers have investigated features of individual courses, universities, platforms, and students [2] as possible explanations of why students complete or fail to complete.

A majority of MOOC research has been limited to single courses, often taught by the researchers themselves. This is due in large part to the lack of access to other data, as well as the challenges researchers face in working with data sets much larger than those they are used to. While understandable, the practice of conducting analyses on small samples often leads to inconsistent findings and questions about the generalizability and replicability of what is learned. In the context of MOOCs, for example, one study investigated the possibility of predicting course completion based on forum posting behavior in a 3D graphics course [6]. It found that starting threads more frequently than average was predictive of completion. Another study investigating this relationship in two courses on Algebra and Microeconomics found the opposite to be true; participants who started threads more frequently were less likely to complete [7]. Research in single courses has the risk of producing contradictory findings which are difficult to resolve. Running

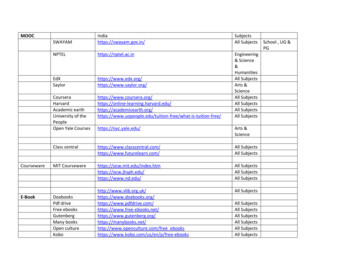

analyses on single-course data sets limits the generalizability of findings, and leads to inconsistency between published reports [8].

In another example of this problem, one study investigating the relationship between students' motivations in taking the course and course completion across three open online learning environments found that students who were taking a course for credit were more likely to complete [4]. An attempt to replicate this finding in a different MOOC found that this feature was not a statistically significant predictor of completion [9].

The limited scope of much of the current research within MOOCs has led to several contradictory findings of this nature, duplicating the "crisis of replication" seen in the social psychology community [10]. The ability to determine which findings generalize across MOOCs, which findings don't, and in what contexts less universal findings are relevant, will lead to trustworthy and ultimately more actionable knowledge about learning and engagement in MOOCs.

While there has been some initial interest in data sharing within MOOCs, prior efforts have not yet changed this state of affairs. Individual universities store data on dozens of MOOCs, but have mostly not yet made this data available to researchers in a fashion that enables large-scale analysis (although individual examples of multi-MOOC analyses exist [cf. 11, 12]). The edX RDX data exchange has made limited data from multiple universities accessible to researchers at other universities [13], but has also restricted the data available due to concerns about privacy, withholding key data necessary to replicate many published analyses. The moocDB data format and moocRP analytics tools were developed with a goal of supporting research in this area [14]. Their tool allows for the implementation of several analytic models, with the goal of facilitating the re-use and replication of an analysis in a new MOOC.
However, the use of moocRP has not yet scaled beyond analyses of single MOOCs, making it uncertain how useful it will be for the types of broad, cross-contextual research that are needed to get MOOC research past its own replication crisis.

In this paper, we present a solution that seeks to address this problem of replicability in the context of MOOCs. We do this by investigating the replicability of findings previously published in articles that leveraged learning analytics methods and data through the use of the MOOC Replication Framework.

2 MORF: GOALS AND ARCHITECTURE
One of the common approaches to resolving the uncertainty caused by contradictory findings is to conduct meta-analyses [15], where the results of several previous findings are integrated together to produce a more general answer to a research question. The meta-analysis research community has developed powerful statistical techniques for synthesizing many studies together despite incomplete information. By definition, however, a meta-analysis must wait on the completion of analyses by multiple research groups.

An alternate approach is to collect large and diverse data sets in which to then test published findings. Such an approach has historically been infeasible in learning contexts, where data sources were, up until relatively recently, disparate, incompatible, and small. Even though large amounts of data have become available for individual intelligent tutoring systems over the last decade [16], the differences in the design of different tutoring systems and the semantics of data fields (even when the data field has the same name across different systems, or the systems share a common data format as in the Pittsburgh Science of Learning Center DataShop [16]) have made statistical analyses across multiple platforms relatively rare.
However, analysis across large ranges of courses becomes more feasible for MOOCs, where a small number of providers generate huge amounts of data on courses with very different content, but relatively similar high-level design.

To leverage this opportunity, we have developed MORF, the MOOC Replication Framework, a framework for investigating research questions in MOOCs within data from multiple MOOC data sets. Our goal is to determine which relationships (particularly, previously published findings) hold across different courses and iterations of those courses, and which findings are unique to specific kinds of courses and/or kinds of participants. In our first report on MORF [9], we discussed the MORF architecture and attempted to replicate 21 published findings in the context of a single MOOC. In this paper, we report the first large-scale use of MORF, attempting to replicate 15 published findings in 29 iterations of 17 MOOCs, listed in Table 1.

Table 1. Courses and Iteration Counts Included in the Current Study

| Course Title | Number of Iterations |
|---|---|
| Artificial Intelligence Planning | 2 |
| Animal Behavior and Welfare | 1 |
| Astrobiology | 2 |
| AstroTech: The Science and Technology Behind Astronomical Discovery | 2 |
| Clinical Psychology | 1 |
| Code Yourself! An Introduction to Programming | 1 |
| E-Learning and Digital Cultures | 3 |
| EDIVET: Do you have what it takes to be a veterinarian? | 2 |
| Equine Nutrition | 2 |
| General Elections 2015 | 1 |
| Introduction to Philosophy | 4 |
| Mental Health: A Global Priority | 1 |
| Fundamentals of Music Theory | 1 |
| Nudge-It | 1 |
| Philosophy and the Sciences | 2 |
| Introduction to Sustainability | 1 |
| The Life and Work of Andy Warhol | 2 |

In its current version, MORF represents findings as production rules, a simple formalism previously used in work to develop human-understandable computational theory in psychology and education [17, 18].
This approach allows findings to be represented in a fashion that human researchers and practitioners can easily understand, but which can be parametrically adapted to different contexts, where slightly different variations of the same findings may hold.
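As a rough illustration of what a parametrically adaptable rule might look like, a finding such as "posts more frequently than average" can be written as a rule whose cutoff is itself a parameter. The sketch below is in Python and is not MORF's implementation; the class name, field names, and sample records are all hypothetical.

```python
from dataclasses import dataclass
from statistics import mean, median
from typing import Callable, Dict, List

# Hypothetical per-participant record: feature name -> value.
Participant = Dict[str, float]


@dataclass
class ProductionRule:
    """An if-then finding whose 'if' part compares a feature to a course-level cutoff."""
    name: str
    feature: str                                       # e.g. "forum_posts"
    threshold: Callable[[List[float]], float] = mean   # the adjustable parameter
    outcome: str = "likely to complete"

    def matches(self, participants: List[Participant]) -> List[bool]:
        """Return, per participant, whether the 'if' part of the rule holds."""
        values = [p[self.feature] for p in participants]
        cutoff = self.threshold(values)
        return [v > cutoff for v in values]


# Made-up data for one course.
course = [{"forum_posts": 0}, {"forum_posts": 1}, {"forum_posts": 2}, {"forum_posts": 9}]

rule = ProductionRule("Posts more frequently than average", "forum_posts")
print(rule.matches(course))

# The same finding, adapted to use the course median as the cutoff.
variant = ProductionRule("Posts more frequently than median", "forum_posts", threshold=median)
print(variant.matches(course))
```

Keeping the cutoff as a parameter means the wording of the finding stays fixed while the comparison point can vary from one course or context to another.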

The production rule system used in MORF was built using Jess, an expert system programming language [19]. All findings were converted into if-then production rules following the format, "If a student who is [attribute] does [operator], then [outcome]." Attributes are pieces of information about a student, such as whether a student reports a certain goal on a pre-course questionnaire. Operators are actions a student does within the MOOC. Outcomes can represent a number of indicators of student success or failure, including watching a majority of videos [e.g., 11, 20] or publishing a scientific paper after participating in the MOOC [e.g., 21]. In the current study, we focus on the most commonly studied research question: whether or not the student in question completed the MOOC. Not all production rules need to have both attributes and operators. For example, production rules that look at time spent in specific course pages may have only operators (e.g., spending more time in the forums than the average student) and outcomes (i.e., whether or not the participant completed the MOOC) [e.g., 22].

Each production rule returns two counts: 1) the confidence [23], or the number of participants who fit the rule, i.e., meet both the if and the then statements, and 2) the conviction [24], the production rule's counterfactual, i.e., the number of participants who match the rule's then statement but not the rule's if statement. For example, in the production rule, "If a student posts more frequently to the discussion forum than the average student, then they are more likely to complete the MOOC," the two counts returned are the number of participants who posted more than the average student and completed the MOOC, and the number of participants who posted less than the average, but still completed the MOOC. As a result, for each MOOC, a confidence and a conviction for each production rule can be generated.

A chi-square test of independence can then be calculated comparing each confidence to each conviction. The chi-square test can determine whether the two values are significantly different from each other, and in doing so, determine whether the production rule or its counterfactual significantly generalized to the data set. Odds ratio effect sizes per production rule are also calculated. In this study, we tested MORF on 29 data sets obtained from the University of Edinburgh's large MOOC program. In integrating across MOOCs, we chose the conservative and straightforward method of using Stouffer's [25] Z-score method to combine the results per finding across the multiple MOOC data sets, to obtain a single statistical significance result across all MOOCs. We also report mean and median odds ratios across data sets.

Table 2. Previously Published Findings on MOOC Completion Included in the Study

| # | If | Then | Source |
|---|---|---|---|
| 1 | Participant spends more time in forums than average | Likely to complete | [22] |
| 2 | Participant spends more time on assignments than average | Likely to complete | [22] |
| 3 | Participant's average length of posts is longer than the course average | Likely to complete | [5, 26] |
| 4 | Participant posts on the forums more frequently than average | Likely to complete | [7, 26] |
| 5 | Participant responds more frequently to other participants' posts than average | Likely to complete | [5] |
| 6 | Participant starts a thread | Likely to complete | [5] |
| 7 | Participant starts threads more frequently than average | Not likely to complete | [8] |
| 8 | Participant has respondents on threads they started | Likely to complete | [27] |
| 9 | Participant has respondents on threads they started greater than average | Likely to complete | [27] |
| 10 | Participant uses more concrete words than average | Likely to complete | [26] |
| 11 | Participant uses more bigrams than average | Likely to complete | [26] |
| 12 | Participant uses more trigrams than average | Likely to complete | [26] |
| 13 | Participant uses less meaningful words than average | Likely to complete | [26] |
| 14 | Participant uses more sophisticated words than average | Likely to complete | [26] |
| 15 | Participant uses a wider variety of words than average | Likely to complete | [26] |

Notes. Previous findings are presented as production rules. The articles from which the findings were drawn are also reported.

3 SCOPE OF ANALYSIS
In a first report on MORF's infrastructure, we attempted to replicate a set of 21 previously published findings in a single MOOC on Big Data in Education [9]. Six findings analyzed in this first report required questionnaire data that was not available for the broader set of MOOCs investigated in the current study. As such, the current study analyzes the remaining 15 of these findings on MOOC completion across 29 iterations of 17 different MOOCs offered through Coursera by the University of Edinburgh. There were a total of 514,656 registrants and 86,535,662 user events across these 29 MOOC data sets.

Within the context of these MOOCs, we investigate previously published findings from five papers demonstrating that discussion forum behaviors were associated with successful course completion. This category of findings was studied for two reasons. First, it has importance to the design of effective MOOCs. Understanding the role that discussion forum participation plays in course completion is important to designing discussion forums that create a positive social environment that enhances learner success [28]. Second, it represents a type of finding that has been difficult to investigate at scale with existing data sets, since there has been limited sharing of the type of discussion forum data necessary for this

type of research, due to the difficulty of deidentifying this type of data. Prominent findings on MOOC completion involving time spent within the forums, as compared to other activities, were also considered.

These five past papers found that writing longer posts [5, 26], writing posts more often [8, 26], starting a thread, receiving replies on one's thread, and replying to others' threads [5, 8, 27], and just generally spending more time in the forums and on quizzes [22] were significantly associated with course completion. The original papers on these findings involved one edX MOOC on Electronics [22], and Coursera MOOCs on Surviving Disruptive Technology [27], Algebra [5, 7], Microeconomics [5, 7], and Big Data in Education [26]. The full list of findings investigated is given in Table 2.

One area of particular interest for many MOOC researchers is learners' failure to complete MOOC courses, due to the problem's importance and potential actionability. Completion is important even beyond the context of a single MOOC. Though not all MOOC learners have the goal of completion [29], completion is one of the best predictors of eventual participation in the community of practice associated with the MOOC [21]. As such, understanding why learners fail to complete MOOCs may enable the design of interventions that increase the proportion of students who succeed in MOOCs. The studies included in this paper's set of analyses sought to understand which student behaviors were significantly related to course completion, as a step towards designing interventions.

In the first of these five articles, De Boer and colleagues [22] explored the impact of resource use on achievement within edX's first MOOC, Circuits and Electronics, offered in Spring 2012. The class reportedly drew students from nearly every country in the world.
The study correlated course completion to the amount of time spent on different online course resources, and found that time spent on the forums and time spent on assignments were predictive of higher overall final scores (required for course completion with a certificate), even when controlling for prior ability and country of origin. These results show that time allocation is an important predictor of student success in MOOCs.

Two studies by Yang and her colleagues [5, 7] explored dropout rates, confusion, and forum posting behaviors within two Coursera MOOCs, one on Algebra and the other on Microeconomics. Their first study developed a survival model that measured the influence of student behavior and social positioning within the discussion forum on student dropout rates on a week-to-week basis. The second study attempted to quantify the effect of behaviors indicative of confusion on participation through the development of another survival model. They found that the more a participant engaged in behaviors they believed indicative of confusion (i.e., starting threads more frequently than the average student), the lower their probability of retention in the course. The findings of these two studies on the relationship of posting behavior (i.e., starting threads, writing frequent and lengthy posts, and responding to others' posts) to course completion are crucial to the design of MOOCs because they suggest that social factors are associated with a student's propensity to drop out during their progression through a MOOC.

Crossley and colleagues [26] conducted a similar investigation on the relationship between discussion forum posting behaviors and MOOC completion in a MOOC on Big Data in Education. In their study, they also found that a range of linguistic features, computed through natural language processing, were associated with successful MOOC completion, including the use of concrete, meaningful, and sophisticated words, and the use of bigrams and trigrams.
Concreteness is assessed based on how closely a word is connected to specific objects. "If one can describe a word by simply pointing to the object it signifies, such as the word apple, a word can be said to be concrete, while if a word can be explained only using other words, such as infinity or impossible, it can be considered more abstract [30, p. 762]." Meaningfulness is assessed based on how related a word is to other words. According to the definition in [30], words like "animal," for example, are likely to be more meaningful than field-specific terms like "equine". Lexical sophistication involves the "depth and breadth of lexical knowledge [30]." It is usually assessed using word frequency indices, which look at the frequency by which words from multiple large-scale corpora appear in a body of text [30]. More concrete or more sophisticated words were found to be associated with a greater probability of course completion, while more meaningful words were found to be associated with a lower probability of course completion. The findings of their study have important implications for how individual differences among students that go beyond observed behaviors (e.g., language skills and usage choices) can predict success.

As mentioned, the current study attempts to replicate 15 previously published findings relating to participant behaviors and MOOC completion. These findings are presented in Table 2 as if-then production rules; the previous articles the findings were drawn from are also included. The findings are divided into three categories: findings involving data drawn from clickstream logs concerning time spent on specific activities within the MOOC (Rules 1-2), findings involving data drawn from the discussion forum that look at the participants' posting behavior (Rules 3-9), and findings involving data from the forum posts that look at linguistic features of the participants' contributions (Rules 10-15).
The Tool for the Automatic Analysis of Lexical Sophistication 1.4, or TAALES [30], and the Tool for the Automatic Analysis of Cohesion 1.0, or TAACO [31], were used to generate the linguistic variables used in the analyses.

In TAALES, sophistication is derived from word occurrence across multiple large-scale corpora and is computed using five frequency indices: the Thorndike-Lorge index based on Lorge's 4.5 million-word corpus of magazine articles [32], the Brown index [33] based on the 1 million-word London-Lund Corpus of English Conversation [34], the Kucera-Francis index based on the Brown corpus, which consists of about 1 million words published in the US [35], the British National Corpus (BNC) index based on about 100 million words of written and spoken English in Great Britain [36], and the SUBTLEXus index based on a corpus of subtitles from about 8000 films and television series in the US [37]. TAALES returns a sophistication score per corpus. The more words from these five corpora are used, the higher the respective sophistication score is. For more information on these corpora, see [30]. Bigram and trigram frequency are two other metrics of lexical sophistication [30], i.e., the more bigrams and trigrams used, the more sophisticated a body of text is.

One production rule studied in this paper is a re-parameterized version of an original finding that was carried over into the current study from the first use of MORF in a single MOOC [9]. Rule 8 was the original finding, i.e., participants having respondents on their threads in the discussion forum. Within [9],

we created a variant of this rule, Rule 9 (participants having more respondents on their threads than average), due to the relatively low numbers of threads with zero respondents in some MOOCs.

4 USING MORF
The production rule analysis of MORF makes use of two different kinds of data: 1) clickstream events used to analyze the rules relating to the amount of time spent in the forums and on the assignments, and 2) relational database forum data used to analyze the rules relating to forum behavior and linguistic features. MORF utilizes Amazon Web Services (AWS) for data storage of the clickstream events, which are stored in Amazon S3 buckets, and database access for the forum-related data via Amazon's Relational Database Service (RDS).

When MORF is run, it connects securely and remotely to AWS to access all necessary data. The user simply needs to state which courses and course iterations they intend to run the production rule analysis on, and once the analysis is complete, the user is presented with the results of the analysis. This consists of the list of MOOCs currently in MORF's data storage, whether or not each production rule replicated significantly within each course iteration, and the significance level and effect size for each analysis, as well as the overall analysis.

Utilizing such an architecture protects data ownership by enabling users to run analyses without getting direct access to any of the raw data, a crucial feature in conducting research with data privacy limitations. Users are also able to either contribute their own data sets to MORF, or conduct their own analyses against MORF's data set, which currently comprises 131 iterations of 61 MOOCs.

5 RESULTS
The results of the 15 analyses across MOOCs can be found in Tables 3 and 4. In Table 3, each row represents the result of testing each previously published finding across the full set of MOOCs. The table reports each finding, again presented as an if-then production rule, the respective Z-scores and p-values for the analysis across MOOCs, as well as the number of MOOCs in which the finding significantly replicated (+), the number of MOOCs that had the counterfactual replicate (-), and the number of MOOCs where the finding failed to replicate in either direction (null). Counterfactuals that are statistically significant overall, across MOOCs, are marked by shaded bands. Findings that failed to replicate in either direction are italicized. Table 4 reports the mean and median odds ratio effect sizes of each production rule across the 29 data sets.

Table 3. Meta-analysis Results per Production Rule

| Production Rule | Z | p | + | - | null |
|---|---|---|---|---|---|
| More time in forums | 26.93 | <0.001 | 29 | 0 | 0 |
| More time on assignments | 26.93 | <0.001 | 29 | 0 | 0 |
| Longer posts than average | 11.76 | <0.001 | 15 | 1 | 13 |
| Posts more frequently than average | 26.04 | <0.001 | 27 | 0 | 2 |
| Responds more frequently than average | 23.84 | <0.001 | 25 | 0 | 4 |
| Starts a thread | 12.34 | <0.001 | 15 | 0 | 14 |
| Starts threads more frequently than average * (a) | 26.39 | <0.001 | 0 | 27 | 2 |
| Has respondents | 22.29 | <0.001 | 26 | 0 | 3 |
| Has respondents greater than average | 22.72 | <0.001 | 24 | 0 | 5 |
| *Uses more concrete words* (b) | 1.51 | 0.131 | 3 | 5 | 21 |
| Uses more bigrams | 12.68 | <0.001 | 15 | 1 | 13 |
| Uses more trigrams | 12.84 | <0.001 | 16 | 1 | 12 |
| Uses less meaningful words | 10.18 | <0.001 | 16 | 0 | 13 |
| Uses more sophisticated words | 17.54 | <0.001 | 20 | 0 | 9 |
| Uses wider variety of words (a) | -4.11 | <0.001 | 2 | 13 | 14 |

(a) Shaded bands indicate that our replication found the reverse of the published finding.
(b) Italics represent null results.
* All outcomes are "likely to complete," except for the rule suffixed by an asterisk, where the outcome is "not likely to complete."

As shown in Table 3, two of the 15 previous findings had their counterfactuals come out statistically significant, i.e., they had the opposite result from the result previously reported. Whereas Yang and colleagues [7] found that students who start threads on the forums more frequently than the average student are less likely to complete, we found in 27 cases out of 29 (with 0 positive replications and 2 null effects) that students who start threads less frequently are less likely to complete. Also, whereas Crossley and colleagues [26] found that students who used a wider variety of words in their forum posts than the average student were more likely to complete, we found in 13 cases out of 29 (with 2 positive replications, and 14 null effects) that students who used a narrower variety of words were more likely to complete. Finally, one finding, which originally stated that students who used more concrete words in their forum posts than the average student were more likely to complete, failed to replicate overall in either direction (with 3 positive replications, and 5 negative replications). The remaining 12 of the 15 previous findings replicated significantly across the 29 data sets.

6 IMPLICATIONS
Twelve of the fifteen production rules investigated significantly replicated across the data sets. The previously published findings related to time spent in the forums and on assignments, which state that more time spent on these activities is associated with completion, replicated significantly across all 29 data sets. These findings indicate that spending more time with the course content, either through engaging in or observing the discussions in the forums or through engaging with the course assignments, is associated with completion.

This is likely for multiple reasons. More motivated participants are likely to spend more time within the MOOC and are also more likely to complete. Spending more time with the material may also increase the chance of successful performance and completion. In an environment such as MOOCs, where students have the freedom to disengage at any point in the course, knowing that time spent in the discussion forums is associated with remaining engaged until completion indicates that attention should be given to designing engaging and positive discussion forum experiences that encourage participation.

Table 4. Mean and Median Odds Ratio Effect Sizes per Production Rule

| Production Rule | Odds Ratio Mean | Odds Ratio Median |
|---|---|---|
| More time in forums | 27.235 | 12.060 |
| More time on assignments | 251.979 | 121.349 |
| Longer posts than average | 1.362 | 1.238 |
| Posts more frequently than average | 4.667 | 3.406 |
| Responds more frequently than average | 2.959 | 2.569 |
| Starts a thread | 1.874 | 1.676 |
| Starts threads more frequently than average * (a) | 4.601 | 3.571 |
| Has respondents | 2.321 | 1.997 |
| Has respondents greater than average | 2.544 | 2.250 |
| Uses more concrete words (b) | 1.036 | 1.076 |
| Uses more bigrams | 1.376 | 1.292 |
| Uses more trigrams | 1.390 | 1.281 |
| Uses less meaningful words | 0.799 | 0.782 |
| Uses more sophisticated words | 1.623 | 1.472 |
| Uses wider variety of words (a) | 0.987 | 0.875 |

(a) Shaded bands indicate that our replicati
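The per-course statistics behind Tables 3 and 4 (a chi-square test and an odds ratio for each course, then Stouffer's Z-score method across courses) can be sketched from a 2x2 table of counts per course. This is a minimal sketch under stated assumptions, not MORF's code. The counts are made up; the 2x2 layout (if-condition met or not, completed or not), the uncorrected Pearson chi-square, and the conversion of each chi-square into a signed z before an unweighted Stouffer combination are one plausible reading of the procedure described in this paper.

```python
import math
from statistics import NormalDist, mean, median

# Assumed 2x2 layout per course (hypothetical):
#                    completed   did not complete
#   if-part met          a              b
#   if-part not met      c              d


def chi_square_2x2(a, b, c, d):
    """Pearson chi-square statistic (1 df, no continuity correction)."""
    n = a + b + c + d
    denom = (a + b) * (c + d) * (a + c) * (b + d)
    if denom == 0:
        return 0.0  # a degenerate table carries no evidence either way
    return n * (a * d - b * c) ** 2 / denom


def odds_ratio(a, b, c, d):
    """Odds of completing when the if-part is met vs. when it is not."""
    if b * c == 0:
        return math.inf  # undefined; reported as infinity in this sketch
    return (a * d) / (b * c)


def signed_z(a, b, c, d):
    """z = +/- sqrt(chi-square); the sign follows the direction of the association."""
    sign = 1.0 if a * d >= b * c else -1.0
    return sign * math.sqrt(chi_square_2x2(a, b, c, d))


def stouffer(z_scores):
    """Unweighted Stouffer combination; returns (Z, two-sided p)."""
    z = sum(z_scores) / math.sqrt(len(z_scores))
    p = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p


# Made-up counts for three course iterations.
courses = [(120, 380, 300, 4200), (45, 155, 90, 1710), (30, 70, 60, 840)]

z_scores = [signed_z(*t) for t in courses]
ratios = [odds_ratio(*t) for t in courses]
combined_z, combined_p = stouffer(z_scores)

print("per-course z:", [round(z, 2) for z in z_scores])
print("combined Z:", round(combined_z, 2), "p:", combined_p)
print("odds ratio mean:", round(mean(ratios), 3), "median:", round(median(ratios), 3))
```

Carrying a sign on each per-course z is what lets a combined result come out negative when the counterfactual holds in most courses, as with the last row of Table 3.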
