Transcription

CassandraStructured Storage System over a P2P NetworkAvinash Lakshman, Prashant Malik

Why Cassandra? Lots of data– Copies of messages, reverse indices ofmessages, per user data. Many incoming requests resulting in a lotof random reads and random writes. No existing production ready solutions inthe market meet these requirements.

Design Goals High availability Eventual consistency– trade-off strong consistency in favor of highavailability Incremental scalability Optimistic Replication “Knobs” to tune tradeoffs between consistency,durability and latency Low total cost of ownership Minimal administration

Data ModelName : tid2Value : Binary Value : Binary Value : Binary Value : Binary TimeStamp : t1TimeStamp : t2TimeStamp : t3TimeStamp : t4ColumnFamily1 Name : MailListKEYColumn Familiesare declaredupfrontSuperColumnsare added andmodifiedColumnsaredynamicallyadded andmodifieddynamicallyName : tid1Columns areadded andType : SimpleSort : NamemodifiedName : tid3 dynamicallyName : tid4ColumnFamily2Name : WordListType : SuperName : alohaSort : TimeName : ly3 Name : SystemType : SuperSort : NameName : hint1Name : hint2Name : hint3Name : hint4 Column List Column List Column List Column List

Write Operations A client issues a write request to a randomnode in the Cassandra cluster. The “Partitioner” determines the nodesresponsible for the data. Locally, write operations are logged andthen applied to an in-memory version. Commit log is stored on a dedicated disklocal to the machine.

Write cont’dKey (CF1 , CF2 , CF3) Data sizeMemtable ( CF1)Commit Log Number of Objects LifetimeMemtable ( CF2)Binary serializedKey ( CF1 , CF2 , CF3 )Memtable ( CF2)Data file on diskK128 OffsetDedicated Disk Key name Size of key Data Index of columns/supercolumns Serialized column family ---K256 Offset---K384 Offset---Bloom Filter Key name Size of key Data Index of columns/supercolumns Serialized column family (Index in memory)BLOCK Index Key Name Offset, Key Name Offset---

CompactionsK1 Serialized data K2 Serialized data K3 Serialized data -Sorted---K2 Serialized data K4 Serialized data K10 Serialized data K5 Serialized data K30 Serialized data K10 Serialized data DELETED--Sorted---MERGE SORTIndex FileK1 Serialized data Loaded in memoryK2 Serialized data K3 Serialized data K1 OffsetK5 OffsetK30 OffsetBloom FilterSortedK4 Serialized data K5 Serialized data K10 Serialized data K30 Serialized data Data FileSorted----

Write Properties No locks in the critical pathSequential disk accessBehaves like a write back CacheAppend support without read aheadAtomicity guarantee for a key “Always Writable”– accept writes during failure scenarios

ReadClientQueryResultCassandra ClusterClosest replicaRead repair ifdigests differResultReplica ADigest ResponseReplica BDigest QueryDigest ResponseReplica C

Partitioning And Replicationh(key1)1 0EAN 3CFh(key2)BD1/210

Cluster Membership and FailureDetection Gossip protocol is used for cluster membership.Super lightweight with mathematically provable properties.State disseminated in O(logN) rounds where N is the number ofnodes in the cluster.Every T seconds each member increments its heartbeat counter andselects one other member to send its list to.A member merges the list with its own list .

Accrual Failure Detector Valuable for system management, replication, load balancing etc.Defined as a failure detector that outputs a value, PHI, associatedwith each process.Also known as Adaptive Failure detectors - designed to adapt tochanging network conditions.The value output, PHI, represents a suspicion level.Applications set an appropriate threshold, trigger suspicions andperform appropriate actions.In Cassandra the average time taken to detect a failure is 10-15seconds with the PHI threshold set at 5.

Properties of the Failure Detector If a process p is faulty, the suspicion levelΦ(t) Æ as t Æ .If a process p is faulty, there is a time after which Φ(t) is monotonicincreasing.A process p is correct Ù Φ(t) has an ub over an infinite execution.If process p is correct, then for any time T,Φ(t) 0 for t T.

Implementation PHI estimation is done in three phases– Inter arrival times for each member are stored in a samplingwindow.– Estimate the distribution of the above inter arrival times.– Gossip follows an exponential distribution.– The value of PHI is now computed as follows: Φ(t) -log10( P(tnow – tlast) )where P(t) is the CDF of an exponential distribution. P(t) denotes theprobability that a heartbeat will arrive more than t units after the previousone. P(t) ( 1 – e-tλ )The overall mechanism is described in the figure below.

Information Flow in theImplementation

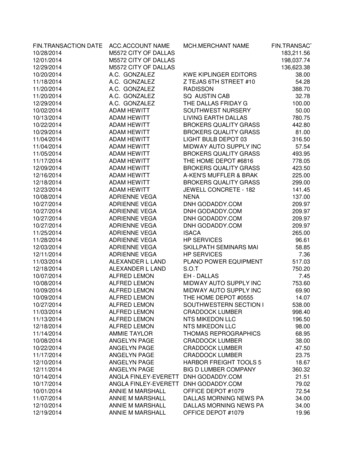

Performance Benchmark Loading of data - limited by networkbandwidth. Read performance for Inbox Search inproduction:Search Interactions Term SearchMin7.69 ms7.78 msMedian15.69 ms18.27 msAverage26.13 ms44.41 ms

MySQL Comparison MySQL 50 GB DataWrites Average : 300 msReads Average : 350 ms Cassandra 50 GB DataWrites Average : 0.12 msReads Average : 15 ms

Lessons Learnt Add fancy features only when absolutelyrequired. Many types of failures are possible. Big systems need proper systems-levelmonitoring. Value simple designs

Future work Atomicity guarantees across multiple keysAnalysis support via Map/ReduceDistributed transactionsCompression supportGranular security via ACL’s

Questions?

In Cassandra the average time taken to detect a failure is 10-15 . Performance Benchmark Loading of data - limited by network . Median 15.69 ms 18.27 ms Average 26.13 ms 44.41 ms. MySQL Comparison MySQL 50 GB Data Writes Average : 300 ms Reads Average : 350 ms Cassandra 50 GB Data Writes Average : 0.12 ms Reads Average .