Transcription

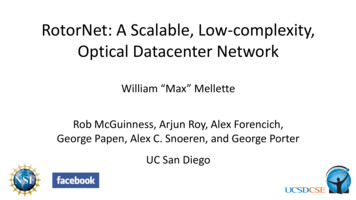

RotorNet: A Scalable, Low-complexity, Optical Datacenter Network
William “Max” Mellette, Rob McGuinness, Arjun Roy, Alex Forencich, George Papen, Alex C. Snoeren, and George Porter
UC San Diego

Toward 100 Petabit/second datacenters
- Challenge: deliver (very) low-cost bandwidth at scale
- Co-design: protocol, topology, hardware
- New protocols: load balancing, congestion control, ...
- New topologies: Jellyfish, Longhop, Slimfly, ...
- New hardware: optical circuit switching, RF/optical wireless, ...
- Same switching model
- New “Rotor” switching model: RotorNet, with “future-proof” bandwidth (2× today) and simple control

Don’t packet switches work fine?
- Figure labels: electronic packet switch (ASIC); Fat Tree (SIGCOMM ’08)
- Packet switch capacity growth: 2× / 2 years
- Network capacity growth: 2× / year (A. Singh et al., SIGCOMM 2015)

Optical switching – benefits & barriers
- Electronic packet switch: copper, 25 Gb/s; ASIC I/O limits bandwidth
- Optical circuit switch: fiber, 1 Tb/s; cheap, future-proof bandwidth

Optical switching – benefits & barriers
- Data plane doesn’t scale to entire datacenter!
- Figure labels: sending racks/hosts, queues, queue occupancy, outputs
- Electronic packet switch: copper, 25 Gb/s; ASIC I/O limits bandwidth
- Optical: fiber, 1 Tb/s bandwidth

Rotor switching model simplifies control
- Crossbar model: N input ports, N output ports; queue occupancy feeds scheduling, which produces a real-time schedule for the crossbar
- Rotor switch model: N input ports, N output ports; N – 1 matchings on a fixed schedule (figure example: 1 → 2, 1 → 3, 1 → 4)
- No (central) control
- Bounded reduction in throughput
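The fixed schedule of N – 1 matchings can be illustrated with a short sketch. It assumes the matchings are cyclic shifts (port i connects to port i + k in slot k), which agrees with the 4-node example later in the talk but is not confirmed as the hardware's actual wiring. The function names are hypothetical.

```python
def rotor_matchings(n):
    """Return n - 1 matchings for n ports (0-indexed).

    Assumption: matching k is the cyclic shift src -> (src + k) mod n.
    """
    return [[(src + shift) % n for src in range(n)] for shift in range(1, n)]


def is_permutation(matching):
    """A valid matching connects every input to a distinct output."""
    return sorted(matching) == list(range(len(matching)))


matchings = rotor_matchings(4)
for slot, matching in enumerate(matchings, start=1):
    # Print with 1-based node numbers, as on the slides.
    pairs = ", ".join(f"{s + 1}->{d + 1}" for s, d in enumerate(matching))
    print(f"slot {slot}: {pairs}")
```

Cycling through all the matchings connects every source to every other destination exactly once, with no scheduler involved.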

Rotor switches have a simpler implementation
- Optical crossbar: N input ports, N output ports, N mirrors on each side. Cost and complexity scale with ports. Ex. 2,048 ports: 4,096 mirrors, 2,048 directions
- Optical Rotor switch: hard-wired matchings, one mirror on each side. Cost and complexity scale with matchings (≪ ports): 2 mirrors, 16 directions

RotorNet architecture overview
- Forwarding?
- Topology?
- Optical Rotor switch: more scalable
- Rotor switching model: simpler control

1-hop forwarding over Rotor switch
- Wait for direct path
- Matching cycle (repeats over time: cycle 1, cycle 2, ...): Node 1 → 2, 3, 4; Node 2 → 3, 4, 1; Node 3 → 4, 1, 2; Node 4 → 1, 2, 3
- Uniform traffic: 100% throughput
- But datacenter traffic can be sparse

1-hop forwarding & sparse traffic: low throughput
- Wait for direct path
- Single flow across matching cycles 1 and 2: Node 1 → 4
- Problem: single flow gets 33% throughput
- Hint at improvement: network is underutilized
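The 100% and 33% figures for 1-hop forwarding can be reproduced with a small count over one matching cycle. This is a minimal sketch under two assumptions: the matchings are cyclic shifts, and every active sender always has traffic queued for each destination in its demand set. `one_hop_throughput` is a hypothetical helper, not part of RotorNet.

```python
from fractions import Fraction


def one_hop_throughput(n, demand):
    """Fraction of link slots used by active senders over one cycle.

    demand is a set of (src, dst) pairs that always have traffic queued.
    """
    senders = {src for src, _ in demand}
    used = 0
    for shift in range(1, n):              # one slot per matching
        for src in senders:
            dst = (src + shift) % n        # assumed cyclic-shift matching
            if (src, dst) in demand:       # send only on the direct path
                used += 1
    return Fraction(used, (n - 1) * len(senders))


n = 4
uniform = {(s, d) for s in range(n) for d in range(n) if s != d}
single_flow = {(0, 3)}                     # Node 1 -> Node 4 on the slide

print(one_hop_throughput(n, uniform))      # 1
print(one_hop_throughput(n, single_flow))  # 1/3
```

With a single flow the sender is matched to its destination in only one of the N – 1 slots, which is where the 33% comes from for N = 4.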

2-hop forwarding better for sparse traffic
- Not new: Valiant (’82) & Chang et al. (’02)
- Matching cycle (repeats over time: cycle 1, cycle 2, ...): Node 1 → 4, 3, 2; Node 2 → 3, 4, 1; Node 3 → 4, 1, 2; Node 4 → 1, 2, 3
- Throughput, single flow: 33% (1-hop) → 100% (2-hop)
- Throughput, uniform traffic: 100% (1-hop) → 50% (2-hop)
- Optimization: can we adapt between 1-hop and 2-hop forwarding?
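A slot-by-slot simulation shows why a single flow recovers full throughput with 2-hop forwarding. The sketch below assumes cyclic-shift matchings, one unit of traffic per link per slot, and an always-backlogged source. It models only the single-flow case, and `two_hop_single_flow` is a hypothetical name.

```python
def two_hop_single_flow(n, src, dst, cycles):
    """Delivered units per slot for one src -> dst flow using 2-hop forwarding."""
    queues = [0] * n          # units parked at each intermediate, all bound for dst
    delivered = 0
    slots = 0
    for _ in range(cycles):
        for shift in range(1, n):
            slots += 1
            # Second hop: the one node currently matched to dst forwards a parked unit.
            for node in range(n):
                if node != src and (node + shift) % n == dst and queues[node] > 0:
                    queues[node] -= 1
                    delivered += 1
            # First hop: the source sends one unit to whichever node it is matched to.
            peer = (src + shift) % n
            if peer == dst:
                delivered += 1        # direct path
            else:
                queues[peer] += 1     # parked at an intermediate
    return delivered / slots


print(two_hop_single_flow(4, 0, 3, cycles=1000))
```

The result approaches 1.0 as the number of cycles grows, because the source uses every slot and each intermediate drains its parked unit within a cycle. Under uniform traffic every relayed unit consumes two link slots, which is the source of the 50% figure on the slide.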

RotorLB: adapting between 1 & 2-hop forwarding
RotorLB (Load Balancing) overview:
- Default to 1-hop forwarding
- Send traffic over 2 hops only when there is extra capacity
- Discover capacity using in-band pairwise protocol. Between Endpoint 1 and Endpoint 2, over time: new matching, offer, accept, send traffic, new matching
- RotorLB is fully distributed
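The offer/accept exchange can be sketched as a per-matching decision at one endpoint. This is a loose illustration of the idea on the slide, not the RotorLB algorithm as specified by the authors. The function name, the arguments, and the greedy allocation order are all assumptions made for the example.

```python
def rotorlb_slot(direct_backlog, other_backlog, slot_capacity, peer_spare):
    """Decide what one endpoint sends to its current peer during one matching.

    direct_backlog: units queued for the matched peer itself (1-hop traffic)
    other_backlog:  dict dst -> units queued for other destinations (the "offer")
    slot_capacity:  units the link can carry during this matching
    peer_spare:     dict dst -> units the peer can relay to dst (the "accept")
    All of these names are hypothetical.
    """
    # Default to 1-hop forwarding: direct traffic goes first.
    send_direct = min(direct_backlog, slot_capacity)
    remaining = slot_capacity - send_direct

    # Use 2 hops only with leftover capacity, and only as much as the peer accepts.
    send_indirect = {}
    for dst, amount in other_backlog.items():
        granted = min(amount, peer_spare.get(dst, 0), remaining)
        if granted > 0:
            send_indirect[dst] = granted
            remaining -= granted
    return send_direct, send_indirect


# Busy direct queue: no capacity is left for 2-hop traffic.
print(rotorlb_slot(10, {3: 5}, 8, {3: 5}))                # (8, {})
# Light direct queue: leftover capacity is offered and partly accepted.
print(rotorlb_slot(2, {3: 5, 2: 4}, 8, {3: 3, 2: 10}))    # (2, {3: 3, 2: 3})
```

Each decision uses only the two endpoints' local state, which is the sense in which the slide calls the scheme fully distributed.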

Throughput of forwarding approaches
[Figure, 256 ports. Curves: ideal packet switch, RotorLB, 2-hop forwarding, 3:1 packet switch, 1-hop forwarding. Axis labels: one connection, uniform traffic. Marked workloads: MSFT [1], FB (web) [2], FB (Hadoop) [2].]
[1] Ghobadi et al., SIGCOMM ’16
[2] Roy et al., SIGCOMM ’15

Throughput of forwarding approaches
[Figure, 256 ports. Curves: ideal packet switch, 3:1 packet switch. Annotations: price of simple control; 2× bandwidth (similar cost). Marked workloads: MSFT [1], FB (web) [2], FB (Hadoop) [2].]
[1] Ghobadi et al., SIGCOMM ’16
[2] Roy et al., SIGCOMM ’15

RotorNet architecture overview
- RotorLB: distributed, high throughput
- Topology?
- Optical Rotor switch: more scalable
- Rotor switching model: simpler control

How should we build a network from Rotor switches?
[Figure: racks and ToRs attached to a single Rotor switch that steps through matchings M1 to M7 at times t1 to t7.]
At large scale:
- High latency: sequentially step through many matchings
- Fabrication challenge: monolithic Rotor switch with many matchings
- Single point of failure

Distributing Rotor matchings: lower latency
[Figure: racks and ToRs attached to three Rotor switches, each stepping through times t1 to t3. The switches hold matchings M1 M2 M3, M4 M5 -, and M6 M7 -.]
- Reduced latency: access matchings in parallel
- Simplifies Rotor switches: matchings ≪ ports, so more scalable and less expensive
- Fault tolerant
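The latency benefit of spreading matchings over several switches can be sketched by partitioning the matching set. The example reproduces the figure's split of 7 matchings across 3 switches. The balanced, in-order assignment is an assumption that happens to match the figure, and `distribute_matchings` is a hypothetical helper.

```python
def distribute_matchings(num_matchings, num_switches):
    """Split matchings 1..num_matchings across switches as evenly as possible."""
    base, extra = divmod(num_matchings, num_switches)
    groups = []
    start = 1
    for i in range(num_switches):
        size = base + (1 if i < extra else 0)   # first `extra` switches get one more
        groups.append(list(range(start, start + size)))
        start += size
    return groups


groups = distribute_matchings(7, 3)
# All switches step in parallel, so a cycle lasts as long as the largest group.
cycle_slots = max(len(group) for group in groups)

print(groups)        # [[1, 2, 3], [4, 5], [6, 7]]
print(cycle_slots)   # 3
```

A single switch would need 7 slots to cycle through every matching. With three switches running in parallel the cycle shrinks to 3 slots, and each switch only has to implement 2 or 3 matchings.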

Rotor switching is feasible today
- Prototype Rotor switch (8 endpoints): 100× faster switching than crossbar
- Validated feasibility of entire architecture: RotorLB, RotorNet topology, optical Rotor switch, Rotor switch model
- Photo labels: inputs/outputs, matchings, optics

RotorNet scales to 1,000s of racks
- Rotor switch design point: 2,048 ports, 1,000× faster switching than crossbar. Details in: W. Mellette et al., Journal of Lightwave Technology ’16; W. Mellette et al., OFC ’16
- 2,048-rack data center: 128 Rotor switches
- Latency (cycle time): 3.2 ms, faster than the 10 ms crossbar reconfiguration time
- Hybrid network (packet switches alongside the Rotor switches, both attached to the rack ToRs) for low-latency applications
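The design-point numbers can be cross-checked with a little arithmetic. The port count, switch count, and cycle time come from the slide. The per-matching slot time is derived here under the assumption that a cycle consists of equal-length slots, one per matching on a switch; the slide does not state it.

```python
import math

ports = 2048             # racks, from the slide
rotor_switches = 128     # from the slide
cycle_time_us = 3200     # 3.2 ms cycle time, from the slide

total_matchings = ports - 1                               # N - 1 matchings overall
per_switch = math.ceil(total_matchings / rotor_switches)  # matchings per Rotor switch
slot_time_us = cycle_time_us / per_switch                 # assumes equal-length slots

print(per_switch)      # 16
print(slot_time_us)    # 200.0
```

The 16 matchings per switch agree with the “16 directions” quoted earlier for the Rotor switch.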

RotorNet component comparison

| Network | # Packet switches | # Transceivers | # Rotor switches | Bandwidth |
| --- | --- | --- | --- | --- |
| 3:1 Fat Tree | 2.6 k | 103 k | 0 | 33 % |
| RotorNet, 10% packet | 2.3 k | 84 k | 128 | 70 % |
| RotorNet, 20% packet | 2.5 k | 96 k | 128 | 70 % |

RotorNet delivers:
- Today: bandwidth 2× less expensive
- Future: cost advantage grows with bandwidth
- Benefits of optical switching without control complexity

A scalable, low-complexity optical datacenter network
RotorNet architecture:
- RotorLB: distributed, high throughput
- RotorNet topology: fast cycle time
- Optical Rotor switch: more scalable
- Rotor switching model (N – 1 matchings): simpler control

This work was supported by the NSF and a gift from Facebook.
