Transcription

An Evaluation of Constituency-based HyponymyExtraction from Privacy PoliciesMorgan C. Evans1, Jaspreet Bhatia2, Sudarshan Wadkar2 and Travis D. Breaux2Bard College, Annandale-on-Hudson, New York, United States1Carnegie Mellon University, Pittsburgh, Pennsylvania, United States2me4582@bard.edu, jbhatia@cs.cmu.edu, swadkar@cs.cmu.edu, breaux@cs.cmu.eduAbstract—Requirements analysts can model regulateddata practices to identify and reason about risks of noncompliance. If terminology is inconsistent or ambiguous,however, these models and their conclusions will beunreliable. To study this problem, we investigated anapproach to automatically construct an information typeontology by identifying information type hyponymy inprivacy policies using Tregex patterns. Tregex is a utilityto match regular expressions against constituency parsetrees, which are hierarchical expressions of naturallanguage clauses, including noun and verb phrases. Wediscovered the Tregex patterns by applying contentanalysis to 30 privacy policies from six employment, health, and news.) From this dataset, threesemantic and four lexical categories of hyponymyemerged based on category completeness and wordorder. Among these, we identified and empiricallyevaluated 72 Tregex patterns to automate the extractionof hyponyms from privacy policies. The patterns matchinformation type hyponyms with an average precision of0.72 and recall of 0.74.Index Terms—Hyponym, hypernym, natural languageprocessing, ontology, privacy policy, compliance.I. INTRODUCTIONPersonal privacy concerns how personal information iscollected, used, and shared within information systems. Toreduce the risk of privacy violations, regulators requirecompanies to rationalize their data practices and comply withprivacy laws, such as the E.U. Data Protection Directive95/46/EC and the Health Insurance Portability andAccountability Act (HIPAA) Privacy Rule. To helprequirements analysts design legally compliant, privacypreserving systems, new methods have been proposed. Forexample, Ghanavati’s compliance framework for businessprocess specification as applied to Canada’s privacy healthlaw [11], Maxwell and Anton’s production rule system forextracting legal requirements from privacy law [22], Breauxet al.’s Eddy language for asserting privacy principles [4, 6],and Paja et al.’s STS-ml tool for analyzing privacy andsecurity requirements in socio-technical systems [24]. Thesemethods and the problems that they address present achallenge to requirements analysts: what does the category ofpersonal information formally consist of, in order to infer theconsequences of collecting and sharing such information in aformalization of data practices?In this paper, we report results from developing andevaluating an automated method for extracting informationontology from privacy policies. Privacy policies are posted atmost websites, and frequently required by best practice orprivacy law, e.g., HIPAA and the Gramm-Leach Bliley Act.These policies describe the data practices of online services,and frequently include data practice descriptions for physicallocations where services are rendered. In general, a privacypolicy describes what information is collected, how it isused, and with whom it is shared. These descriptionsfrequently include examples that illustrate relevant kinds ofinformation, called sub-ordinate terminology or hyponyms.When interpreting these policies and law, statements thatregulate an information type could logically regulate anyhyponyms, for example, any restrictions on “contactinformation” could also be applied to “email address.”The contributions of this paper are four-fold: first, weidentify a taxonomy of hyponymy patterns that describes thecomplete set of hyponyms manually identified among 30privacy policies; second, we formalize these patterns usingTregex, a tree regular expression language for matchingconstituency parse trees [18]; third, we report the number ofinformation types covered by these patterns, called coverage,when compared to a lexicon of information types extractedfrom the same policies using the method by Bhatia et al. [1];and fourth, we analyze the variation of information typesacross domains.The remainder of the paper is organized as follows: inSection II, we review background concepts and related work;in Section III, we present our approach to identifyinghyponymy automatically; in Section IV, we present resultsand the evaluation of our approach; and in Section V, wediscuss our results and future work.II. BACKGROUND AND RELATED WORKWe now review hyponymy in natural language, Tregexand related work.Hyponyms are specific phrases that are sub-ordinate toanother, more general phrase, which is called the hypernym[15]. Speakers and readers of natural language typically usethe linking verb phrase is a kind of to express the relationshipbetween a hyponym and hypernym, e.g., a GPS location is akind of real-time location. Other semantic relationships ofinterest include meronyms, which describe a part-whole

relationship, homonyms, which describe a word that has twounrelated meanings, and polysemes, which describe a wordwith two related meanings [15]. A popular online lexicaldatabase that contains hyponyms is called WordNet [23].Hearst first proposed a set of six lexico-syntactic patternsto identify hyponyms in natural language texts using nounphrases and regular expressions [12]. The patterns aredomain independent and include the indicative keywords“such as,” “including,” and “especially,” among others. TheHearst approach applies grammar rules to a unification-basedconstituent analyzer over part-of-speech (POS) tags to findnoun phrases that match the pattern, which are then checkedagainst an early version of WordNet for verification [12].The approach was unable to work for meronymy in text.Snow et al. applied WordNet and machine learning to anewswire corpus to identify lexico-syntactic patterns andhyponyms [29]. Their approach includes the six Hearstpatterns and resulted in a 54% increase in the number ofwords over WordNet. Unlike Hearst and Snow et al.,information types are rarely found in WordNet: among the1300 information types used in our approach describedherein, only 17% of these phrases appear in WordNet, andonly 19% of the phrases matched by our hyponymy patternsappear in WordNet. This means that requirements analystswho want to find the category of an information type, or findthe members of an information category, will be unlikely tofind these answers in WordNet. Our work aims to identifythese hyponyms for reuse by requirements analysts in futureprojects.The identification of hyponyms and hypernyms can beconsidered as a case of categorization phenomena which isstudied extensively in cognitive sciences. Of particularinterest to our work is Rosch’s category theory [25] andTversky’s formal approach to category resemblance [31].Rosch introduced category theory to define terminology forunderstanding how abstractions relate to one another [25].This includes the construction of taxonomies, which relatecategories through class inclusion: the more inclusive acategory is, the higher that category appears in thetaxonomy. Higher-level categories are hypernyms, whichcontain lower-level categories or hyponyms. In addition,Rosch characterizes categories by the features they share andshe uses this designation to introduce the concept of cuevalidity, which is the probability that a cue x is the predictorof a category y. Categories with high cue validity are whatRosch calls basic-level categories. In our analysis,hyponyms are frequently linked to a higher-level categorythat can also be considered as a basic-level category;however, the features that define these categories are nottypically found in policy texts, and instead they are tacitknowledge.An important assumption in Rosch’s definition oftaxonomy is that each category can at most be a member ofone other category. Information type names violate thisassumption, because an “e-mail address” can be classified asboth “login information” and “contact information,”depending on how the e-mail address is used in aninformation system. Thus, information types may be moreamenable to mathematical comparison using Tversky’scategory resemblance, which is a measure in which disjointcategories combine when their shared features outweigh theirunshared features [31]. Category resemblance also accountsfor asymmetry in similarity [31], which may account fordifferences arising from confusion among hyponymy,meronymy, homonymy and polysemy. Our approach toextract hyponyms from text does not account for thesemeasured interpretations by Rosch and Tversky, but insteadrelies on the policy author’s authority to control meaning.In our approach, we use Tregex, which is a utilitydeveloped by Levy and Andrew to match constituency parsetrees [18]. Constituency parse trees are constructedautomatically from POS-tagged sentences in which eachword is tagged with a POS tag, such as a noun, verb,adjective, or preposition tag, among others using StanfordCoreNLP [21]. Tregex has been used to generate questionsfrom declarative sentences [13], to evaluate textsummarization [30], to characterize temporal requirements[19], and to generate an interpretative regulatory policy [16].While natural language processing (NLP) of requirementstexts can scale analysis to large corpora, the role of NLPshould not be overstated, since it does not account for humaninterpretation [26]. Jackson and Zave argue that requirementsengineering is principally concerned with writing accuratesoftware specifications, which require explicit statementsabout domain phenomena [14]. While significant work hasbeen done to improve specification, a continuing weakness isthat problems are frequently formalized using low-levelprogramming concepts (classes, data, and operations) asopposed to using richer, problem-oriented ontologies [17]. Inthis paper, we investigate an approach for extracting aninformation type ontology from higher-order descriptions ofinformation systems embodied in privacy policies. Webelieve these ontologies can improve how we reason aboutand analyze privacy requirements for web-based and mobileinformation systems.III. AUTOMATED HYPONYMY EXTRACTIONWe now introduce our research questions, followed byour research method based on content analysis and Tregex.RQ1. What are the different ways to express hyponymy inprivacy policies, and what categories emerge tocharacterize the linguistic mechanisms for expressinghyponymy?RQ2. What are the Tregex patterns that can be used toautomatically identify hypernymy and how accurateare these patterns?RQ3. What percentage of information type coverage can beextracted by applying the hyponymy patterns toprivacy policies?RQ4. How does hypernymy vary across policies within asingle domain, and across multiple domains?

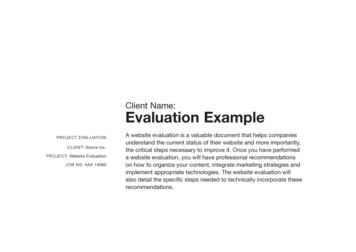

Collect policiesfrom websitesItemizeparagraphsAnnotatehyponymyIdentify Tregexpatterns235471Legend:AutomatedPerformed OnceCrowdsourcedOutputOutput used asinput to next taskParseconstituencies6Identify types ofinformationInformation typehyponymy8Match Tregexpatternsnetworking websites in the US. Table I presents the 30policies in our development set and 12 policies in our test setby category and date last updated.TABLE I. PRIVACY POLICY DATASETS FOR HYPONYMY STUDYDevelopment DatasetFigure 1 presents an overview of our approach to answerthe research questions. During Steps 1 and 2, the analystprepares the input text to the NLP tools used in Steps 3 and4, and to the crowdworker platform in Step 6, which is basedon Amazon Mechanical Turk (AMT).Steps 1-2 are performed manually by an analyst, once foreach policy, which requires 30-90 minutes per policy. InStep 1, the input text begins as a text file, which can beextracted from HTML or a PDF document. In Step 2, theanalyst itemizes the text into paragraphs consisting of 50-120words, while ensuring that each paragraph’s context remainsundivided. This invariant can lead to paragraphs that exceed120 words, which are balanced by smaller 50-60 wordparagraphs. The 120-word limit is based on the average timerequired by one crowdworker to identify information typesin Step 6, which averages 60 seconds [5].Filter falsepositivesIn Step 3, two or more analysts perform content analysison the same 120-word paragraphs from Step 2 to manuallyidentify and categorize hyponymy in privacy policy text. Theanalysts meet periodically to agree on heuristics andguidelines for annotating hyponymy, before combining thehyponymy annotations with corresponding constituencyparses from Step 4 to infer matching Tregex patterns in Step5. The Tregex patterns are identified in a manual,interpretive process performed once: the patterns are thenused in an automated Step 7 to find hyponymy relationships.The Tregex patterns are generic and do produce falsepositives. To filter out false positives, we use crowdworkerannotations produced in Step 6. If a Tregex pattern matches aphrase that has not been annotated by at least twocrowdworkers as an information type, that match isdiscarded as a false positive. We describe each of these stepsin detail in the following sub-sections.A. Annotating HyponymyResearch question RQ1 asks how hyponymy appears inprivacy policies in the wild. To answer RQ1, we selected 30privacy policies across six domains: shopping, telecom,social networking, employment, health, and news (see TableI). These policies are part of a US-centric conveniencesample, although, we include a mix of shopping companieswho maintain both online and brick-and-mortar stores, andwe chose the top telecom websites and five top socialTest DatasetFig. 1. Hyponymy Extraction FrameworkCompany’sPrivacy ed23andmeHealthVaultMayo ClinicMyFitnessPalWebMDABC NewsAccuweatherBloombergReutersWashPostBarnes and NobleCostcoLowesOver TCharter Comm.ComcastTime WarnerVerizonDiceUSJobsCVSFitbitCNNFox pingSocial NetworkingSocial NetworkingSocial NetworkingSocial NetworkingSocial gSocial NetworkingSocial /153/29/1712/31/16The policies are first prepared by removing sectionheaders and boilerplate language that does not describerelevant data practices, before saving the prepared data to aninput file for an AMT task, as described by Steps 1 and 2 inFigure 1. The task employs an annotation tool developed byBreaux and Schaub [5], which allows analysts to selectrelevant phrases matching a category. The analysts are askedto annotate three types of phrases for each hyponymyrelationship identified: a hypernym phrase, which describesthe general category phrase; one or more hyponym phrases,which describe members of the category; and any keywords,

which signal the hyponymy relationship. For example, inFigure 2, the phrase “personal information” is the hypernym,which is followed by the keywords “for example,” whichindicate the start of a clause that contains the hyponyms“name,” “address” and “phone number.”HypernymRefinementkeywordWe may collect personal information from you, for example,your name, address and phone number.HyponymsFig. 2. Hyponymy AnnotationsThe annotation process employs two-cycle coding [27].In the first cycle, the policies are annotated to identify theprospective hyponym patterns, after which the second-cycleis applied to group these patterns into emergent categories.The two-cycle coding process begins with an initial set offive policies, during which guidelines and examples aredeveloped by the analysts to improve consistency and tosupport replication. Next, the analysts meet to discuss theirinitial results, to reconcile differences and to refine theguidelines. After agreeing on the guidelines and initialcategories, the analysts annotate the remaining policies,before meeting again to reconcile disagreements andmeasure the kappa.The itemized policy paragraphs are also used as input forcrowdsourced annotations, as described in Step 6. Thepurpose of the crowd's annotations is to identify all relevantinformation types as “information.” This task is similar to theanalysts' annotation task; however, the annotation“information” is not as specific as the annotations forhypernym, hyponymy, and refinement keyword. Thecrowdworkers would annotate the sentence in Figure 2 with“personal information,” “name,” “address,” and “phonenumber” as information types. Each paragraph that theanalysts annotate is also annotated by five crowdworkers onAMT. If at least two of the crowdworkers annotate a phraseas “information,” it is considered a valid annotation. Eachvalid annotation from the crowd can be used as a type ofvalidation for matching Tregex patterns.During annotation, the analyst may encounter nestedhypernymy, which occurs when a hypernym-keywordhyponym triple has a second triple embedded within thephrase, often within the hyponym phrase. For example, thesentence in Figure 3: “Self-reported information includesinformation you provide to us, including but not limited to,personal traits (e.g., eye color, height)” contains three nestedhyponymy relations. The phrase “information you provide tous” is the hyponym of “self-reported information,” and it isalso the hypernym of “personal traits.” Similarly, “personaltraits” is the hyponym of “information you provide to us”and it is the hypernym of “eye color, height.” To correctlyextract the hyponym-hypernym pairs, we first coded theannotated phrases in numerical order, then we representedhypernym-keyword-hyponym triples using a three-characteralphanumeric sequence that corresponds to the order ofphrases in the sentence; repeated numbers represent the samephrase in one or more relations. For example, the sentence inFigure 3 would have the code “123; 345; 567” wherein the“3” represents “information you provide to us” as thehyponym in the first relation and the hypernym in the secondrelation, and “5” represents “personal eported information includes information you provide to us,including but not limited to, personal traits (e.g., eye color, Fig. 3. Nested HyponymyB. Identifying Tregex PatternsTregex is a language for matching subtrees in aconstituency parse tree [18]. The Tregex patterns are createdby examining the parse tree for each annotated sentence in ahyponymy category. Figure 4 presents the constituency parsetree for the sentence from Figure 2. The colors in the figureshow which part of the parse tree matches which part of theTregex pattern. In the parse tree, the root tree node labeledROOT appears in the upper, left-hand corner with a single,immediate child labeled S; each child is indented slightly tothe right under the parent, and siblings are indentedequidistant from the left-hand side of the figure. Thematching Tregex pattern below the parse tree has three parts:a noun phrase (NP) that is assigned to a variable named“hypernym” via the equal sign (in blue), followed by a dollarsign that indicates a sibling pattern, which is the keywordphrase (in green), followed by a less-than sign that indicatesan immediate child node, which is another NP assigned tothe variable “hyponym” (in red). Tregex provides a means toanswer RQ2 by expressing patterns that match the annotatedhypernyms and hyponyms and their lexical coordination bythe keywords.We developed a method to write Tregex patterns tomatch hyponymy. Given a constituency parse tree, the firststep is to traverse the tree upwards from each hypernym andhyponym until you find a shared ancestor node that bridgesthe two constituents. In Figure 4, the verb (VB) “collect” isan immediate child of the reference node verb phrase (VP).The VP is not present in the Tregex pattern, though it is thereason the NP and the prepositional phrase (PP) are definedas sister nodes ( ) in the matching Tregex pattern also inFigure 4. The reference node is omitted in the Tregex patternto keep the pattern in its most general form. Once it isestablished that we can relate two parts of the tree in onepattern, we traverse the two subtrees back down until we areable to isolate the constituents, in this case, a NP containingthe hypernym and hyponym. When extracting the desired NPrepresenting either the hypernym or hyponym we mustmaintain a level of generalizability. To do this we referencethe NP using relationships between itself and ancestor nodes,such as a parenthetical phrase (PRN) as a sister of the NP itmodifies or a NP as the immediate, right sister of the VB to

which it is the object. By encoding these relationships intothe pattern versus directly copying the word order, we avoidover specification.Constituency Parse Tree(ROOT(S(NP (PRP We))(VP (MD may)(VP (VB collect)(NP (JJ personal) (NN information))(PP (IN from)(NP (PRP you)))This nounphrase (NP) isassigned to thevariable“hypernym”(, ,)(PP (IN for)(NP(NP (NN example))This prepositional phrase describesthe keywords that indicate thehyponymy relation(, ,)(NP (PRP your) (NN name) (, ,) (NN address)(CC and)(NN phone) (NN number))))))(. .)))Matching Tregex Pattern*This nounphrase (NP)is assignedto thevariable“hyponym”NP hypernym (PP ((IN for) (NP (NN example))) NP hyponym)*The A B means “both node A and B have the same parent node,”the A B means “node B is an immediate child of node A,” andthe A B means “node B is some child of node A"Fig. 4. Tregex Pattern MatcherThe development of Tregex patterns is a balance betweengenerally characterizing the lexical relationships amongwords in a pattern, and specializing the pattern to avoid falsepositives. The pattern shown in Figure 4, which matchesinformation type hyponyms after the “for example”keywords, can also match a data purpose hyponym: e.g., “weshare your personal information for marketing, for example,product and service notifications.” To automatically filter outsuch hyponyms that are not information type hyponyms, weuse a lexicon constructed from crowd sourced informationtype tasks, described by Breaux and Schaub [5]. In theinformation type tasks, the crowdworkers are asked toannotate all information types in a given paragraph. Forinstance, in the privacy statement: “We collect your personalinformation such as your name, and address ”, thecrowdworkers would have annotated the phrases “personalinformation”, “name” and “address” as information types.We evaluated the Tregex patterns by comparing thenumber of hypernym-hyponym pairs identified across all 30policies and comparing that to the pairs identified by trainedanalysts. While a pattern may produce a false-negative in onepolicy, it could find that pair in another policy. Thisevaluation strategy prioritizes our goal to extract a generalontology from multiple policies, over a separate goal toattribute hypernym-hyponym pairs to specific policies.In addition, we developed a test dataset (see Table I)which we used to test the accuracy of our approach. The testset consists of 12 policies that were annotated by trainedanalysts. The test dataset was not used during thedevelopment of the Tregex patterns, and were annotated afterwe produced the Tregex patterns from the developmentdataset.C. Ontological CompletenessThe question RQ3 asks what percentage of informationtype coverage can be extracted by applying the Tregexpatterns to privacy statements. To answer RQ3, wedeveloped three types of lexicons – crowdworker lexicon,analyst lexicon and Tregex lexicon. The crowdworkerlexicon consists of all the information types in our dataset of30 privacy policies (see Table I). For the construction of thislexicon, we use the entity extractor developed by Bhatia andBreaux [1], which takes as input the crowd sourced tasks foreach policy, where the crowdworkers have annotated all theinformation types in the policies [5]. The analyst lexicon isconstructed by using the entity extractor on the analysts’hyponymy annotations described in Section III.A. TheTregex lexicon is constructed using the entity extractor onthe information type hyponymy identified by the Tregexpatterns, as described in Section III.B. We compare thecrowdworker lexicon, the analyst lexicon and the Tregexlexicon to understand what percentage of information typesare covered by the hyponymy patterns.IV. EVALUATION AND RESULTSWe now describe our results from the content analysis,Tregex pattern development and lexicon comparisons.A. Hyponymy Taxonomy from Content AnalysisThe first and second authors annotated the 30 policydevelopment dataset shown in Table I. This processconsumed 10 and 11 hours for each annotator, respectively,and yielded 304 annotated instances of hyponymy after finalreconciliation. The guidelines that were developed can besummarized as follows: only annotate information type nounphrases; annotations should not span more than a singlesentence and should include any modifying prepositional orverb phrases that qualify the information type; and if thenoun phrase is an enumeration, annotate all noun phrasestogether.The second-cycle coding to categorize the hyponymyrelationships was based first on the refinement keywordsemantics, and next based on the relative order of thehypernym (H), keyword (K) and hyponym (O) in the privacypolicy text. We answer RQ1 by defining the followingresulting categories: Incomplete Refinement (Inc.): The keywords suggest thatthe hyponymy consists of an incomplete subset of thephrases that can be used as hyponyms for the givenhypernym. For instance, the keywords “such as” and“including” indicate that the given hyponyms are part ofan incomplete list. Complete Refinement (Com.): The keywords indicate thatthe hyponyms are the complete list that belong to thehypernym. For instance, the keywords, “consists of” and“i.e.” indicate that the given list of hyponyms are completefor the respective hypernym.

Implied Refinement (Imp.): The refinement keyword is apunctuation such as a colon (:) or dash (-) and indicatesthat there is an implied hyponymy.The resulting syntactic categories are defined as follows: HKO – The hypernym occurs first, followed by thekeyword, followed by the hyponym. This pattern ispredominantly used to illustrate examples (hyponyms) ofleading technical words (the hypernym). OKH – The hyponym occurs first, followed by thekeyword, followed by the hypernym. This categorydescribes lists in which the last term generalizes thepreceding terms. HO – The hypernym occurs first followed by thehyponym, and there is no keyword. This category is foundwhen the hypernym is the section header, followed by asubsection of implied hyponyms; there are no keywordsthat explicitly indicate the hyponymy. KHO – The keyword occurs first, followed by thehypernym, followed by the hyponym. This category is rareand uses a colon to separate the hypernym from a list ofhyponyms.We measured the degree of agreement above chanceusing Fleiss’ Kappa [10] for the hyponym categories fromthe second-cycle coding. Each hyponymy instance isassigned a semantic category and a syntactic category. TheKappa was computed using the composition of categories.For example, a hyponymy relationship that belongs to theincomplete semantic category and HKO syntactic category isassigned to the category combination of {Inc.-HKO}. TheFleiss Kappa for all mappings from annotations to hyponymcategories and the two analysts was 0.99, which is a veryhigh probability of agreement above chance alone.Table II and III presents the keyword taxonomies for thesemantic and syntactic categories, respectively: including theCategory, the Refinement Keywords that help detect thehyponymy, and the proportion of annotations in the categoryacross all 30 policies (Freq.). The most frequent categoryamong the semantic categories was incomplete refinement.TABLE II. KEYWORD TAXONOMY FOR SEMANTIC nementImpliedRefinementRefinement Keywordssuch as, such, include, including,includes, for example, e.g., like,contain, (and or any as well as any certain) other, concerning, relatingto,, is known as, classifies asconsists of, is, i.e., either, constitute,of your, following types of, in(), :, -, . (section header)Freq.96.05%2.96%0.98%TABLE III. KEYWORD TAXONOMY FOR SYNTACTIC CATEGORIESCategoryHKOOKHHOKHORefinement Keywordssuch as, such, including, for example,include, includes, concerning, is, e.g., like,i.e., of your, contain, relating to, thatrelates to, generally not including, consistsof, concerning, either, ( ), :, (and or any as well as any certain) other,constitute, as, other, is known as, classifiesas, is consideredNonefollowing types ofH: Hypernym, O: Hyponym, K: KeywordFreq.88.48%10.52%0.66%0.33%TABLE IV. FREQUENCY OF HYPONYMY CATEGORIESSyntacticCategoriesSemantic 022KHO100129293304TotalH: Hypernym, O: Hyponym, K: Keyword; Inc.: Incomplete Refinement,Com.: Complete Refinement, Imp.: Implied RefinementB. Tregex Pattern EvaluationWe identified a total of 72 Tregex patterns to answerRQ2, which can be used to automatically identify hyponymyin privacy policies. Due to space limitations, we only presentan example subset of Tregex patterns in Table V. The HOsyntactic category, which has no keywords, cannot bereliably characterized by a high precision pattern, i.e., lowfalse positives.TABLE V.

constituent analyzer over part-of-speech (POS) tags to find noun phrases that match the pattern, which are then checked against an early version of WordNet for verification [12]. The approach was unable to work for meronymy in text. Snow et al. applied WordNet and machine learning to a newswire corpus to identify lexico-syntactic patterns and