Transcription

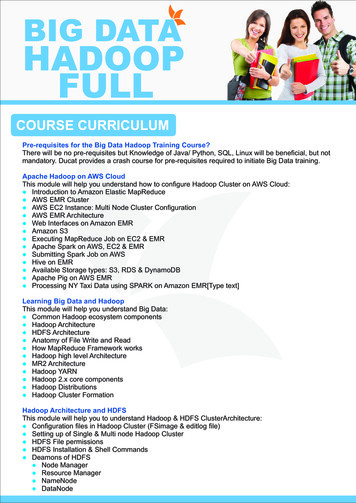

BIG DATA HADOOP FULL COURSE CURRICULUM

Pre-requisites for the Big Data Hadoop Training Course?

There are no pre-requisites. Knowledge of Java/Python, SQL, and Linux is beneficial but not mandatory. Ducat provides a crash course for pre-requisites required to initiate Big Data training.

Apache Hadoop on AWS Cloud

This module will help you understand how to configure Hadoop Cluster on AWS Cloud:

- Introduction to Amazon Elastic MapReduce
- AWS EMR Cluster
- AWS EC2 Instance: Multi Node Cluster Configuration
- AWS EMR Architecture
- Web Interfaces on Amazon EMR
- Amazon S3
- Executing MapReduce Job on EC2 & EMR
- Apache Spark on AWS, EC2 & EMR
- Submitting Spark Job on AWS
- Hive on EMR
- Available Storage types: S3, RDS & DynamoDB
- Apache Pig on AWS EMR
- Processing NY Taxi Data using SPARK on Amazon EMR

Learning Big Data and Hadoop

This module will help you understand Big Data:

- Common Hadoop ecosystem components
- Hadoop Architecture
- HDFS Architecture
- Anatomy of File Write and Read
- How MapReduce Framework works
- Hadoop high level Architecture
- MR2 Architecture
- Hadoop YARN
- Hadoop 2.x core components
- Hadoop Distributions
- Hadoop Cluster Formation

Hadoop Architecture and HDFS

This module will help you to understand Hadoop & HDFS Cluster Architecture:

- Configuration files in Hadoop Cluster (FSimage & editlog file)
- Setting up of Single & Multi node Hadoop Cluster
- HDFS File permissions
- HDFS Installation & Shell Commands
- Daemons of HDFS
- Node Manager
- Resource Manager
- NameNode
- DataNode

- Secondary NameNode
- YARN Daemons
- HDFS Read & Write Commands
- NameNode & DataNode Architecture
- HDFS Operations
- Hadoop MapReduce Job
- Executing MapReduce Job

Hadoop MapReduce Framework

This module will help you to understand Hadoop MapReduce framework:

- How MapReduce works on HDFS data sets
- MapReduce Algorithm
- MapReduce Hadoop Implementation
- Hadoop 2.x MapReduce Architecture
- MapReduce Components
- YARN Workflow
- MapReduce Combiners
- MapReduce Partitioners
- MapReduce Hadoop Administration
- MapReduce APIs
- Input Split & String Tokenizer in MapReduce
- MapReduce Use Cases on Data sets

Advanced MapReduce Concepts

This module will help you to learn:

- Job Submission & Monitoring
- Counters
- Distributed Cache
- Map & Reduce Join
- Data Compressors
- Job Configuration
- Record Reader

Pig

This module will help you to understand Pig Concepts:

- Pig Architecture
- Pig Installation
- Pig Grunt shell
- Pig Running Modes
- Pig Latin Basics
- Pig LOAD & STORE Operators
- Diagnostic Operators
  - DESCRIBE Operator
  - EXPLAIN Operator
  - ILLUSTRATE Operator
  - DUMP Operator
- Grouping & Joining
  - GROUP Operator
  - COGROUP Operator
  - JOIN Operator
  - CROSS Operator
- Combining & Splitting
  - UNION Operator
  - SPLIT Operator
- Filtering
  - FILTER Operator
  - DISTINCT Operator
  - FOREACH Operator

- Sorting
  - ORDER BY FIRST
  - LIMIT Operator
- Built-in Functions
  - EVAL Functions
  - LOAD & STORE Functions
  - Bag & Tuple Functions
  - String Functions
  - Date-Time Functions
  - MATH Functions
- Pig UDFs (User Defined Functions)
- Pig Scripts in Local Mode
- Pig Scripts in MapReduce Mode
- Analysing XML Data using Pig
- Pig Use Cases (Data Analysis on Social Media sites, Banking, Stock Market & Others)
- Analysing JSON data using Pig
- Testing Pig Scripts

Hive

This module will build your concepts in learning:

- Hive Installation
- Hive Data types
- Hive Architecture & Components
- Hive Meta Store
- Hive Tables (Managed Tables and External Tables)
- Hive Partitioning & Bucketing
- Hive Joins & Sub Query
- Running Hive Scripts
- Hive Indexing & View
- Hive Queries (HQL): Order By, Group By, Distribute By, Cluster By, Examples
- Hive Functions: Built-in & UDF (User Defined Functions)
- Hive ETL: Loading JSON, XML, Text Data Examples
- Hive Querying Data
- Hive Tables (Managed & External Tables)
- Hive Use Cases
- Hive Optimization Techniques
- Partitioning (Static & Dynamic Partition) & Bucketing
- Hive Joins: Map, BucketMap, SMB (SortedBucketMap), Skew
- Hive File Formats (ORC, SEQUENCE, TEXT, AVRO, PARQUET)
- CBO
- Vectorization
- Indexing (Compact, BitMap)
- Integration with TEZ & Spark
- Hive SerDe (Custom, InBuilt)
- Hive integration with NoSQL (HBase, MongoDB, Cassandra)
- Thrift API (Thrift Server)
- UDF, UDTF & UDAF
- Hive Multiple Delimiters
- XML & JSON Data Loading in Hive
- Aggregation & Windowing Functions in Hive
- Hive Connect with Tableau

Sqoop

- Sqoop Installation
- Loading Data from RDBMS using Sqoop
- Sqoop Import & Import-All-Table
- Fundamentals & Architecture of Apache Sqoop
- Sqoop Job
- Sqoop Codegen
- Sqoop Incremental Import & Incremental Export

- Sqoop Merge
- Import Data from MySQL to Hive using Sqoop
- Sqoop: Hive Import
- Sqoop Metastore
- Sqoop Use Cases
- Sqoop-HCatalog Integration
- Sqoop Script
- Sqoop Connectors

Flume

This module will help you to learn Flume Concepts:

- Flume Introduction
- Flume Architecture
- Flume Data Flow
- Flume Configuration
- Flume Agent Component Types
- Flume Setup
- Flume Interceptors
- Multiplexing (Fan-Out), Fan-In Flow
- Flume Channel Selectors
- Flume Sink Processors
- Fetching of Streaming Data using Flume (Social Media Sites: YouTube, LinkedIn, Twitter)
- Flume Kafka Integration
- Flume Use Cases

KAFKA

This module will help you to learn Kafka concepts:

- Kafka Fundamentals
- Kafka Cluster Architecture
- Kafka Workflow
- Kafka Producer, Consumer Architecture
- Integration with SPARK
- Kafka Topic Architecture
- Zookeeper & Kafka
- Kafka Partitions
- Kafka Consumer Groups
- KSQL (SQL Engine for Kafka)
- Kafka Connectors
- Kafka REST Proxy
- Kafka Offsets

Oozie

This module will help you to understand Oozie concepts:

- Oozie Introduction
- Oozie Workflow Specification
- Oozie Coordinator Functional Specification
- Oozie HCatalog Integration
- Oozie Bundle Jobs
- Oozie CLI Extensions
- Automate MapReduce, Pig, Hive, Sqoop Jobs using Oozie
- Packaging & Deploying an Oozie Workflow Application

HBase

This module will help you to learn HBase Architecture:

- HBase Architecture, Data Flow & Use Cases
- Apache HBase Configuration
- HBase Shell & general commands
- HBase Schema Design
- HBase Data Model
- HBase Region & Master Server
- HBase & MapReduce

- Bulk Loading in HBase
- Create, Insert, Read Tables in HBase
- HBase Admin APIs
- HBase Security
- HBase vs Hive
- Backup & Restore in HBase
- Apache HBase External APIs (REST, Thrift, Scala)
- HBase & SPARK
- Apache HBase Coprocessors
- HBase Case Studies
- HBase Troubleshooting

Data Processing with Apache Spark

This module covers how Spark executes in-memory data processing and how a Spark job runs faster than a Hadoop MapReduce job. The course will also help you understand the Spark ecosystem and its related APIs, such as Spark SQL, Spark Streaming, Spark MLlib and Spark GraphX, as well as Spark Core concepts. It will help you understand Data Analytics & Machine Learning algorithms applied to various datasets to process and analyze large amounts of data.

- Spark RDDs
- Spark RDDs Actions & Transformations
- Spark SQL: Connectivity with various relational sources and converting the data into a DataFrame using Spark SQL
- Spark Streaming
- Understanding role of RDD
- Spark Core concepts: Creation of RDDs: Parallel RDDs, MappedRDD, HadoopRDD, JdbcRDD
- Spark Architecture & Components

BIG DATA PROJECTS

Project #1: Working with MapReduce, Pig, Hive & Flume

Problem Statement: Fetch structured & unstructured data sets from various sources like Social Media Sites, Web Server & structured sources like MySQL, Oracle & others, dump them into HDFS, and then analyze the same datasets using Pig, HQL queries & MapReduce technologies to gain proficiency in the Hadoop related stack & its ecosystem tools.

Data Analysis Steps:

- Dump XML & JSON datasets into HDFS.
- Convert semi-structured data formats (JSON & XML) into structured format using Pig, Hive & MapReduce.
- Push the data set into Pig & Hive environment for further analysis.
- Write Hive queries to push the output into a relational database (RDBMS) using Sqoop.
- Render the result in Box Plot, Bar Graph & others using R & Python integration with Hadoop.

Project #2: Analyze Stock Market Data

Industry: Finance

Data: Data set contains stock information such as daily quotes, stock highest price, and stock opening price on the New York Stock Exchange.

Problem Statement: Calculate covariance for stock data to solve storage & processing problems related to huge volume of data.

- Positive covariance: if investment instruments or stocks tend to be up or down during the same time periods, they have positive covariance.
- Negative covariance: if returns move inversely, i.e. one investment tends to be up while the other is down, this shows negative covariance.

Project #3: Hive, Pig & MapReduce with New York City Uber Trips

- Problem Statement: What was the busiest dispatch base by trips for a particular day in the entire month?
- What day had the most active vehicles?
- What day had the most trips, sorted from most to fewest?
- Dispatching Base Number is the NYC Taxi & Limousine company code of the base that dispatched the Uber.
- Active vehicles shows the number of active Uber vehicles for a particular date & company (base). Trips is the number of trips for a particular base & date.
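The three questions in Project #3 can be sketched in plain Python before moving to Hive, Pig or MapReduce. This is only a minimal illustration: the row layout (base, date, active vehicles, trips) follows the column descriptions above, but the sample rows, the date format and the function names are assumptions made up for this example, not part of the course material or the real data set.

```python
from collections import defaultdict

# Hypothetical sample rows: (dispatching_base_number, date, active_vehicles, trips).
# All values are invented for illustration only.
ROWS = [
    ("B02512", "2015-01-01", 190, 1132),
    ("B02765", "2015-01-01", 225, 1765),
    ("B02512", "2015-01-02", 175, 1005),
    ("B02765", "2015-01-02", 196, 1001),
    ("B02764", "2015-01-02", 300, 2900),
]


def busiest_base_on(rows, day):
    """Return the base with the most trips on `day`, or None if the day has no rows."""
    trips_by_base = defaultdict(int)
    for base, date, _vehicles, trips in rows:
        if date == day:
            trips_by_base[base] += trips
    if not trips_by_base:
        return None
    return max(trips_by_base, key=trips_by_base.get)


def day_with_most_active_vehicles(rows):
    """Return the date whose active-vehicle count, summed over all bases, is largest."""
    vehicles_by_day = defaultdict(int)
    for _base, date, vehicles, _trips in rows:
        vehicles_by_day[date] += vehicles
    return max(vehicles_by_day, key=vehicles_by_day.get)


def days_by_trips(rows):
    """Return (date, total_trips) pairs sorted from most trips to fewest."""
    trips_by_day = defaultdict(int)
    for _base, date, _vehicles, trips in rows:
        trips_by_day[date] += trips
    return sorted(trips_by_day.items(), key=lambda item: item[1], reverse=True)


if __name__ == "__main__":
    print(busiest_base_on(ROWS, "2015-01-01"))
    print(day_with_most_active_vehicles(ROWS))
    print(days_by_trips(ROWS))
```

Each function is a group-by followed by a sum and a max or sort, which is the same shape a Hive `GROUP BY` query or a Pig `GROUP` plus `ORDER` script would take on the full data set.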

Project #4: Analyze Tourism Data

Data: Tourism data comprises: city pair, seniors travelling, children travelling, adults travelling, car booking price & air booking price.

Problem Statement: Analyze tourism data to find out:

- Top 20 destinations tourists frequently travel to: based on the given data we can find the most popular destinations where people travel frequently, based on the specific initial number of trips booked for a particular destination.
- Top 20 high air-revenue destinations, i.e. the 20 cities that generate high airline revenues for travel, so that discount offers can be given to attract more bookings for these destinations.
- Top 20 locations from where most of the trips start, based on booked trip count.

Project #5: Airport Flight Data Analysis

We will analyze Airport Information System data that gives information regarding flight delays, source & destination details, diverted routes & others.

Industry: Aviation

Problem Statement: Analyze flight data to:

- List delayed flights.
- Find flights with zero stops.
- List active airlines in all countries.
- Get source & destination details of flights.
- Find the reason why flights get delayed.
- Show time in different formats.

Project #6: Analyze Movie Ratings

Industry: Media

Data: Movie data from sites like Rotten Tomatoes, IMDB, etc.

Problem Statement: Analyze the movie ratings by different users to:

- Get the user who has rated the most movies
- Get the user who has rated the fewest movies
- Get the total number of movies rated by users belonging to a specific occupation
- Get the number of underage users

Project #7: Analyze Social Media Channels:

- Facebook
- Twitter
- Instagram
- YouTube
- Industry: Social Media
- Data: DataSet Columns: VideoId, Uploader, Interval between the day of establishment of YouTube & the date of uploading of the video, Category, Length, Rating, Number of comments.
- Problem Statement: Top 5 categories with maximum number of videos uploaded.
- Problem Statement: Identify the top 5 categories in which the most videos are uploaded, the top 10 rated videos, and the top 10 most viewed videos.
- Apart from these there are some twenty more use-cases to choose from:
  - Twitter Data Analysis
  - Market Data Analysis

Partners: Java

NOIDA
A-43 & A-52, Sector-16, Noida - 201301, (U.P.) INDIA
70-70-90-50-90, 91 99-9999-3213

GHAZIABAD
1, Anand Industrial Estate, Near ITS College, Mohan Nagar, Ghaziabad (U.P.)
70-70-90-50-90

PITAMPURA (DELHI)
Plot No. 366, 2nd Floor, Kohat Enclave, Pitampura, (Near Kohat Metro Station) Above Allahabad Bank, New Delhi - 110034
70-70-90-50-90

GURGAON
1808/2, 2nd floor old DLF, Near Honda Showroom, Sec.-14, Gurgaon

SOUTH EXTENSION (DELHI)
D-27, South Extension-1, New Delhi - 110049
70-70-90-50-90, 91 98-1161-2707
