Transcription

Squirrel: Testing Database Management Systemswith Language Validity and Coverage FeedbackRui Zhong† , Yongheng Chen§ , Hong Hu§ , Hangfan Zhang† , Wenke Lee§ and Dinghao Wu†† PennState University§ GeorgiaConsidering the infinite input space and rare bug-triggering queries,this bruteforce-like enumeration is ineffective in finding memoryerror bugs from DBMSs.In recent years, fuzzing has been widely adopted as a softwaretesting technique to detect memory error vulnerabilities [33, 43, 46,66]. Different from generation-based testing, fuzzing relies on therandom mutation to create new test cases, and utilizes feedback,like code coverage, to guide the input space exploration. Startingfrom the seed corpus, a fuzzer randomly mutates existing inputs,like flipping several bits, to generate slightly different variants. Itruns the target program with the new input and detects abnormalbehaviors such as execution crashes and assertion failures. Duringthe execution, the fuzzer also records the code path information,like executed blocks or branches. The input that triggers new codepaths has a higher priority to be selected for another round of mutation. With a large amount of effort spent on improving the fuzzingefficiency [34, 49, 63] and efficacy [26, 31, 42, 51], fuzzers have successfully found thousands of bugs from popular applications [55].However, it is challenging to apply fuzzing techniques to testDBMSs, as DBMSs perform two correctness checks, the syntacticcheck and the semantic check before executing an SQL query. Specifically, the DBMS first parses each SQL query to get its syntacticmeaning. If the query has any grammar errors, the DBMS will stopthe execution and immediately bail out with an error message. Otherwise, the DBMS further checks the query for semantic errors, likeusing a non-existent table, and will bail out in any case of semanticerrors. After these two checks, the DBMS creates several executionplans and picks the most efficient one to execute the query. Therefore, to reach the deep logic of a DBMS, the query should be correctsyntactically and semantically.Random byte mutation used by current fuzzing techniques cannot easily generate syntax-correct or semantics-correct inputs. Forexample, AFL, the representative mutation-based fuzzer [66], cangenerate 20 million queries for SQLite [4] within 24 hours, butonly 30% of them can pass the syntax check, and 4% have correctsemantic meaning. However, most of the DBMS code is responsiblefor query plan construction, optimization, and execution, and onlya small portion is used for syntax-check and semantics-check. Forexample, 20,000 semantics-correct queries generated by AFL trigger19,291 code branches in SQLite, while the same number of syntaxincorrect queries only cover 9,809 branches – only 50.8% of theformer. Therefore, current fuzzing techniques cannot trigger thedeep logic of DBMSs nor comprehensively explore program states.In this paper, we propose a system, Sqirrel, to address thesechallenges so that we can effectively fuzz DBMSs. Our systemembeds two key techniques, the syntax-preserving mutation andthe semantics-guided instantiation. To generate syntax-correct SQLAbstractFuzzing is an increasingly popular technique for verifying software functionalities and finding security vulnerabilities. However,current mutation-based fuzzers cannot effectively test databasemanagement systems (DBMSs), which strictly check inputs forvalid syntax and semantics. Generation-based testing can guarantee the syntax correctness of the inputs, but it does not utilize anyfeedback, like code coverage, to guide the path exploration.In this paper, we develop Sqirrel, a novel fuzzing frameworkthat considers both language validity and coverage feedback to testDBMSs. We design an intermediate representation (IR) to maintainSQL queries in a structural and informative manner. To generatesyntactically correct queries, we perform type-based mutationson IR, including statement insertion, deletion and replacement.To mitigate semantic errors, we analyze each IR to identify thelogical dependencies between arguments, and generate queries thatsatisfy these dependencies. We evaluated Sqirrel on four popularDBMSs: SQLite, MySQL, PostgreSQL and MariaDB. Sqirrel found 51bugs in SQLite, 7 in MySQL and 5 in MariaDB. 52 of the bugs are fixedwith 12 CVEs assigned. In our experiment, Sqirrel achieves 2.4 243.9 higher semantic correctness than state-of-the-art fuzzers,and explores 2.0 -10.9 more new edges than mutation-based tools.These results show that Sqirrel is effective in finding memoryerrors in database management systems.1Institute of TechnologyIntroductionDatabase Management Systems (DBMSs) are the integral components of modern data-intensive systems [9, 10, 13, 29, 39, 58]. Likeall other complicated systems, DBMSs contain many bugs that notonly affect their functionalities but also enable malicious attacks.Among all the threats, the infamous memory error bugs enableattackers to leak and even corrupt memory of running DBMS processes, which may finally lead to remote code execution [28, 38],database breach [7, 30] or denial-of-service (DoS) [16, 24]. For example, the recent “Collection #1” data breach reveals 773 millionemail addresses and 21 billion passwords [36].Generation-based testing techniques are the de facto bug-findingtools for DBMSs [5]. These techniques require developers to createa formal model that precisely captures the definition of SQL (Structured Query Language). Based on the model, the tools enumerateall possible SQL queries to verify the functionalities of DBMSs orfind bugs. However, generation-based testing tools have limitedeffectiveness as they distribute the effort evenly to every SQL query. The two lead authors contributed equally to this work.ACM CCS 2020, Orlando, USA2020. ACM ISBN 978-x-xxxx-xxxx-x/YY/MM. . . 15.00https://doi.org/10.1145/nnnnnnn.nnnnnnn1

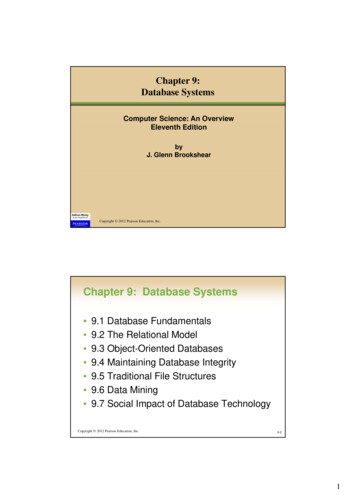

ACM CCS 2020, Orlando, USARui Zhong† , Yongheng Chen§ , Hong Hu§ , Hangfan Zhang† , Wenke Lee§ and Dinghao Wu†bitflip ‘SELECT’After mutationOriginal queryqueries, we design an intermediate representation (IR) to maintainqueries in a structural and informative manner. Each IR statementcontains at most two operands, and each operand is also another IRstatement. Each IR has a structure type that indicates the syntacticstructure (e.g., SELECT a FROM b), and data types (e.g., table name).Before the mutation, our system strips concrete data from the IRand only keeps a skeleton of operations. Then, we perform threerandom mutations, including inserting type-matched operands,deleting optional operands or replacing operands with other typematched ones. The type-based mutation guarantees the generatedquery has the correct syntax. Next, we perform the query analysisto figure out the expected dependencies between different IR data.For example, the data in a SELECT clause should be a column ofthe table in the FROM clause. We fill each stripped IR with concretedata, and make sure they satisfy all expected dependencies. Atlast, we translate the IR back into SQL and feed it to the DBMSfor testing. Sqirrel combines the benefits of the coverage-basedfuzzing (i.e., guided exploration) and the model-based generation(i.e., high language correctness), and thus can trigger the deep logicof DBMSs and find severe bugs.We implement Sqirrel with 43,783 lines of C code, whichmainly focuses on the syntax-preserving mutation and thesemantics-guided instantiation. We reuse AFL’s code for coverage collection and input prioritization. Our design of Sqirrelis generic and it should work with other fuzzers after some engineering effort.To understand the effectiveness of our system, we use Sqirrelto test four popular DBMSs: SQLite, MySQL, PostgreSQL and MariaDB.Sqirrel successfully found 63 memory error issues within 40 days,including 51 bugs in SQLite, 7 bugs in MySQL and 5 bugs in MariaDB.As a comparison, Google OSS-Fuzz detected 19 bugs from SQLitein 40 months and 15 bugs from MySQL in 5 months [34]. We haveresponsibly reported all of these bugs to the DBMS developers andreceived positive feedback. At the time of paper writing, 52 bugshave been fixed. We even get CVE numbers for 12 bugs due to theirsevere security consequences, like stealing database contents.We inspect various aspects of fuzzing, and compare Sqirrelwith other state-of-the-art tools, including the mutation-basedfuzzer AFL and Angora, the generation-based tool SQLsmith, thestructural fuzzer GRIMOIRE and the hybrid fuzzer QSYM. During the24-hour testing, Sqirrel successfully finds nine unique bugs whileothers detect one or zero bugs. Sqirrel discovers 2.0 -10.9 morenew edges than mutation-based tools, and achieves a comparableresult to the generation-based tester SQLsmith. It also gets 2.4 243.9 higher semantic correctness than other tools.We make the following contributions in this paper:SELECT c2, c6FROM t1, t2WHERE t1.c1 t2.c5RELECT c2, c6FROM t1, t2WHERE t1.c1 t2.c5After generationSELECT c2, c6FROM t1, t2WHERE t1.c1 t3.c5change table nameDatabase Management Systemsyntax errorparsevalidationsemantic erroroptimization & executionc1 c2c5 c6alice read1 alice1 readt1 2 bobt2 3 writeFigure 1: Challenges of testing DBMSs. A DBMS takes four steps toprocess one SQL query. Among them, parse checks syntactic correctness,and validation examines semantic validity. Random mutation unlikely guarantees the syntactic correctness, while grammar-based generation may failto enforce semantic correctness.2Problem DefinitionIn this section, we first briefly describe how a DBMS handles SQLqueries. Then, we introduce existing DBMS testing techniques andillustrate their limitations in finding bugs hidden in the deep logic.Finally, we present our insight to solve this problem.2.1Query Processing in DBMSModern DBMSs usually process an SQL query in four phases: parse,validation, optimization and execution [6], as shown in Figure 1.After receiving an SQL query, the DBMS first parses the query toget its syntactic meaning. The parser breaks the query into individual tokens, and checks them against the grammar rules. If anysyntactic error is detected, the DBMS will immediately terminatethe execution and return an error message to the client. Second, theDBMS checks the semantic correctness of the query in the validation phase, like whether tables exist in the database or columns areunambiguous. Most semantic errors can be detected in this phase.Next, in the optimization phase, the query optimizer constructsseveral possible query plans and attempts to determine the mostefficient one for executing the given query. Finally, the DBMS executes the chosen plan on the database and sends the result backto the client. Therefore, the execution will reach the second phaseonly if the query is syntactically correct, and will proceed to thelast two phrases if the query is semantically correct.Motivating Example. The "Original query" in Figure 1 first joinstwo tables t1 and t2, and searches for the rows where the c1 columnof t1 is the same as the c5 column of t2. For each matched row,the query returns the value of c2 and c6. The DBMS finds thatthis query passes the syntactic check and the semantic check. Itsearches in the database and finally returns "alice read". We propose syntax-preserving mutation and semantics-guidedinstantiation to address the challenges of fuzzing DBMSs. We implement Sqirrel, an end-to-end system that combinesmutation and generation to detect DBMS bugs. We evaluated Sqirrel on real-world DBMSs and identified 63memory error issues. The result shows that Sqirrel outperforms existing tools on finding bugs from DBMSs.2.2Challenges of DBMS TestingThere are mainly two ways to generate SQL queries for testingDBMSs: model-based generation and random mutation. The modelbased generation follows a precise grammar-model and thus canconstruct syntactically correct inputs. For example, SQLsmith [5],a popular DBMS testing tool, generates syntax-correct test casesfrom the abstract syntax tree (AST) [60] directly. However, withoutany guidance, the model-based generation sequentially scans theWe plan to release the code of Sqirrel to help DBMS developerstest their products and to boost future research in securing DBMSs.2

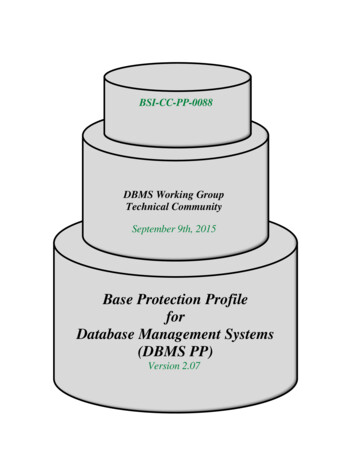

Squirrel: Testing Database Management Systems with Language Validity and Coverage FeedbackSquirrelwhole input space. Considering that many queries are handled inthe same way by DBMSs, this method cannot efficiently explorethe program’s state space. Further, the generation-based methodcan hardly guarantee the semantic correctness [35], and querieswith incorrect semantics will be filtered out by the DBMS duringthe validation. Figure 1 shows a query constructed by a generator(After generation). Although this query is syntactically correct, itcannot be executed because the table t3 in the WHERE clause doesnot exist in the current database.Random mutation updates existing inputs to generate new ones.To improve the performance, fuzzers utilize the feedback from previous executions to evaluate the priority of the generated inputs. Ifthe feedback indicates the previous input is interesting, like triggering a new execution path, fuzzers will put it in a queue for furthermutation. In this way, fuzzers will collect more and more interestingtest cases and thus can explore the program’s state space efficiently.Statistics show that random mutation with feedback-driven workswell in many software. For example, Google found over 5,000 vulnerabilities with their feedback-driven mutation-based fuzzer [43, 55].However, grammar-blind mutation strategies have low effectiveness in handling structured inputs, like SQL and JavaScript [60].For example, random flipping the bits of a SQL keyword hardlyproduces another valid keyword, and the whole query will becomesyntactically incorrect. Figure 1 shows such a case (After mutation),where flipping the last bit of S in SELECT leads to an invalid keywordRELECT. The DBMS will reject the new query in the parse phase.We design evaluation to understand the quality of AFL-generatedSQL queries, and the importance of syntax-correctness andsemantics-correctness. Specifically, we use AFL to test SQLite for24 hours, which generates 20 million queries. However, only about30% of them are syntactically correct, and merely 4% for them canpass semantic checks. We randomly pick 20,000 semantics-correctqueries, and find that they trigger 19,291 distinct code branchesin SQLite. The same number of syntax-incorrect and semanticsincorrect queries only reach 9,809 and 12,811 branches, respectively.These results show the low validation rate of AFL-generated queries,and the importance of semantics-correctness for exploring the program state space.2.3ACM CCS 2020, Orlando, USAIRTranslationSeed tiationInteresting queriesFuzzingCrashesFigure 2: Overview of Sqirrel. Sqirrel aims to find queries that crashthe DBMS. Sqirrel first lifts queries from SQL to IR; then, it mutates IR togenerate new skeletons; next, it fills the skeleton with concrete operands;finally, it runs the new query and detects bugs.queries from the AST. However, due to the strict type-constraintand complicated operations, mutating AST is as challenging asmodifying SQL queries.Improving Semantic Correctness. Since ensuring the semanticcorrectness of generated SQL queries is proved to be NP-hard [44],we will try practical solutions to improve the semantic correctnessas much as possible. Existing generation-based tools define a setof query templates. Each template represents a complete queryand contains specific, static constraints between operands [20].However, due to the limited human effort, these frameworks cannotguarantee the expressiveness of their SQL templates. We tackle thisproblem through dynamic query instantiation. Given the skeletonof a syntax-correct SQL query (i.e., without concrete operands),our method first builds its data dependency graph according topredefined basic rules. For example, the operand of SELECT can bea column name of the table used in FROM. Then, we try to fill theskeleton with concrete operands whose relations satisfy the datadependency graph. With the instantiation, the semantic correctnessrate is high enough for testing DBMSs.3Overview of SqirrelFigure 2 shows an overview of our DBMS testing framework,Sqirrel. Given a set of normal SQL queries, Sqirrel aims to findqueries that render the execution of DBMSs crash. A query meansa test case and may contain multiple SQL statements. Sqirrelstarts with an empty database and requires the query to createthe content. Sqirrel achieves its goal with four key components:Translator, Mutator, Instantiator, and SQL Fuzzer. First, Sqirrelselects one query 𝐼 from a queue that consists of both initial queriesand saved interesting queries. Second, the Translator translates 𝐼into a vector of IRs 𝑉 . Meanwhile, the Translator strips the concretevalues from 𝑉 to make it a query skeleton. Our Mutator modifies𝑉 through insertion, deletion and replacement to produce a newIR vector 𝑉 ′ — 𝑉 ′ is syntactically correct. Next, our Instantiatorperforms data dependency analysis of 𝑉 ′ and builds a data dependency graph. Then, the Instantiator selects new concrete values thatsatisfy the data dependency and fills 𝑉 ′ with these values. Since thedata dependency is satisfied, 𝑉 ′ is likely to be semantically correct.Finally, we convert 𝑉 ′ back to a SQL query 𝐼 ′ and run the DBMSwith 𝐼 ′ . If the execution crashes, we find an input that triggers abug. Otherwise, if 𝐼 ′ triggers a new execution path of the program,we save it into the queue for further mutation.Our ApproachOur idea in this paper is to introduce syntax-correct and semanticsaware mutation into fuzzing, so we can take advantage of mutationbased techniques and generation-based mechanisms to maximizeour ability to test DBMSs.Generating Syntax-Correct Queries. We design a new intermediate representation (IR) to maintain SQL queries in a structuraland informative way and adopt type-based mutations to guaranteethe syntactic correctness. Each IR statement simply contains atmost two operands, and therefore our mutation just has to handletwo values. Each statement has an associated grammar type, likeSelectStmt for SELECT statement, while each data has a semantictype, like table name. Our mutation performs type-based operations, including inserting type-matched operands, deleting optionaloperands and replacing operands with type-matched ones. We stripthe concrete data from each IR, like table names, to focus on mutating the skeleton. The IR-based mutation effectively preserves thesyntactic correctness. Some generation-based tools generate SQL4Intermediate RepresentationWe design an intermediate representation (IR) of SQL to supportthe syntax-correct query mutation. We translate each query fromSQL to our IR, mutate the IR and translate the new IR back to SQL3

ACM CCS 2020, Orlando, USA12345678910111213141516Rui Zhong† , Yongheng Chen§ , Hong Hu§ , Hangfan Zhang† , Wenke Lee§ and Dinghao Wu†// l: left child, r: right child, d: data, t: data typeV1 (Column,l 0, r 0, op 0, d "c2", t ColumnName);V2 (ColumnRef, l V1, r 0, op 0, d 0);V3 (Expr,l V2, r 0, op 0, d 0);V4 (Column,l 0, r 0, op 0, d "c6", t ColumnName);V5 (ColumnRef, l V4, r 0, op 0, d 0);V6 (Expr,l V5, r 0, op 0, d 0);V7 (SelectList, l V3, r V6, op 0, d 0);// the optional left child can be DINSTRICTV8 (SelectClause, l 0, r V6, op.prefix "SELECT", d 0);.//Unknown type for intermediate IRsVa (Unknown,l V8, r V14, op 0, d 0);Vb (Unknown,l Va, r V25, op 0, d 0);// the optional right child can be an ORDER clauseV26 (SelectStmt, l Vb, r 0, op 0, d olumnc12119exprcolumnreftablet220columnc5Figure 4: AST of the running example. Sqirrel parses the SQL queryand represents it in AST, and finally translate AST to IR.Figure 3: IR of the running example SQL query. The correspondingAST tree is shown in Figure 4.helps developers to adopt unified and simple mutation strategies.We can perform statement insertion, deletion and replacementwhile keeping the syntactic correctness. We present the algorithmsabout translation between SQL queries and IRs in Appendix B.query for execution. Our design of the IR aims to achieve threegoals: the IRs can represent any SQL statements (expressive); theformat and operation of IRs are uniform (general); the translationbetween IR and SQL is efficient (simple).The IR is in the static single assignment (SSA) form. A query, or atest case, contains one or more IR statements. Each statement is anassignment, where the left-hand side is the destination variable andthe right-hand side is either a literal or an operator with operands.We add the following fields in IR to store the necessary information.5Syntax-Preserving MutationWe classify tokens in an SQL query into two groups based on theirfunctionalities. SQL keywords and mathematical operators definewhat operations to be performed, and we call these tokens structure.Other tokens specify the targets of defined operations, and we callthem data. Data can be literal that makes basic sense, like a constantvalue 1, or can express semantic meaning, like table names.We observe that changing structure tokens has more impacton the DBMS execution than that of changing data tokens. Thedifference comes from two reasons. First, altering structure willchange the operations of the query, and thus trigger differentfunctions, while a DBMS may use the same logic to handle different literal data. For example, SQLite takes almost the samepath to process query A:"SELECT c FROM t WHERE c 1" and queryB:"SELECT c FROM t WHERE c 10", but uses significantly different code to handle C:"SELECT c FROM t WHERE c 1". Second, randomly modifying semantic-related data is likely to generate a semantically incorrect query, which a DBMS will refuse to execute.For example, replacing c in query A with the column in anothertable leads to an invalid query. In either case, random data mutationis less productive than random structure mutation.Therefore, we strip data from the query IR and apply mutationmainly on structures. We leave the data modification in §6. ir type: the type of one IR statement. This type is based onthe corresponding node in the AST, like column type for columnnames or expr type for expressions. We also define a specialtype Unknown to represent intermediate statements that have nocorresponding node in the AST. operator: consisting of SQL keywords [11] and mathematicaloperators [12]. It indicates the operation the IR performs andincludes three parts: the prefix op prefix, the interfix op midand the suffix op suffix. For example, the IR of "CREATE triggerBEGIN list END" has prefix CREATE, interfix BEGIN and suffix END. left operand, right operand: the operands of the IR operator.The operand is either another IR statement, or can be NULL if theoperand is optional or not required. data value: the concrete data the IR carries, like table name t1. data type: data type, like ColumnName for column names.We provide the formal definition of the IR grammar in Appendix A.Figure 3 shows the IRs of our motivating example in Figure 1(Original query). The corresponding AST is given in Figure 4. V1and V4 represent the column names c2 and c6, which are corresponding to nodes 1 and 4 in Figure 4. They do not contain anyoperator or operand but have the ColumnName data type and properdata values. V2 and V5 define references to columns (V1 and V4),and V3 and V6 create two expressions. Each of them only has oneoperand. V7 describes the parameter list of SELECT, including c2 andc6. V8 represents the SELECT clause, which could have DISTINCT asits left operand (NULL here). SELECT appears before the left operand,so it is the operator prefix. Since our IR only allows at most twooperands, we have to use two intermediate nodes, Va and Vb, to connect three nodes 8 , 14 and 25 to construct the IR of SelectStmt.Their ir types are set to Unknown. Finally, V26 defines the SELECTstatement, which is node 26 in Figure 4.Our IR is just a sequence of assignment statements. This linearrepresentation, different from tree or graph structures (like AST),5.1Structure-Data SeparationWe walk through the IRs to replace each data with a predefinedvalue based on its type data type. Specifically, we replace semanticdata with string “x”, change constant numbers to 1 or 1.0 and updateall strings to “a”. Therefore, after the separation, the running example “SELECT c2,c6 FROM t1,t2 WHERE t1.c1 t2.c5” becomes“SELECT x,x FROM x,x, WHERE x.x x.x”. Both query A and B become "SELECT x FROM x WHERE x 1", while query C is changed to"SELECT x FROM x WHERE x 1".Storing IR in Library. We use a dictionary called IR library tostore various IRs. The key of the dictionary is the IR type, while thevalue is a list of IRs. IRs in one list have the same type, and theirstructures are exclusively different. For example, after separation,queries A, B, and C have the same SelectStmt type, and they should4

Squirrel: Testing Database Management Systems with Language Validity and Coverage FeedbackCREATECREATECREATESELECTSELECT x,x FROM x,x WHERE x.x x.x;V8 (SelectClause, l 0, r V6, op.prefix "SELECT” );Va (Unknown, l V8, r V14, op 0, d 0);Vb (Unknown, l Va, r V25, op 0, d 0);V26 (SelectStatement, l Vb, r 0, op 0, d 0);InsertionSELECT x,x FROM x,x WHERE x.x x.x ORDER BY x;Vc (OrderbyClause, );V26 (SelectStatement, l Vb, r Vc, op 0, d 0);ReplacementDeletionACM CCS 2020, Orlando, USASELECT count(x,x) FROM x,x WHERE x.x x.x;Vc (CountClause, );V8 (SelectClause, l 0, r Vc, op.prefix "SELECT” );SELECT x,x FROM x,x;Vb (Unknown, l Va, r 0, op 0, d 0);TABLE x1TABLE x4TABLE x7x10, x11(x2 INT, x3 INT)(x5 INT, x6 INT)(x8 INT, x9 INT)FROM x12, x13 WHERE x14.x15 x16.x17DataTypex1, x4, x7x2, x3x5, x6x8, x9x10, x11x12, x13x14, 11x8,x9isAnElementx7isAx15x14x13x16x17Figure 5: Mutation strategies on IR programs, including type-basedinsertion and replacement, and deletion of optional operands.Figure 6: Data dependency example. This example consists of three newCREATE statements and our running example. In "Relation", we show twotypes of relations: "isAnElement" (dashed line) and "isA" (solid line).be stored in the same list. We remove query B from the list asit shares the same structure as A. Whenever we need an IR of acertain type, we find the corresponding list from the dictionary andrandomly return one element. As we show in Figure 2, Sqirrelaccepts seed queries to initialize the IR library. Whenever Sqirrelfinds that the generated IR has a new structure, we add it to thecorresponding list in the library. We set a limit on the maximumnumber of IRs in the library to avoid excessive memory usage.of the original results; we delete the right child of Vb to effectivelyremove the WHERE clause. All three new IRs are syntactically correct.Unknown Type. As we mention in §4, some IRs have type Unknownas they do not have corresponding nodes in the AST. We useUnknown type to perform fuzzy type-matching without searchingfor concrete types, which may accelerate our query generation.The syntax validation, which always needs one-time parsing, isunaffected. However, without accurate type-matching, Sqirrelmay create some invalid queries.5.2Type-Based MutationWe define a set of type-based mutations to update the left andright operands of an IR or the IR itself. Our mutation focus onoperands as other members of the IR cannot be easily changed: theoperator is closely related to the IR type, like the SELECT operatorin SelectClause IR, while data type is decided by its position inthe query, like a variable after "CREATE TABLE" must be a tablename. Therefore, our mutations either operate on the whole IR ormodify its operands. Specifically, for each IR 𝑣 in the IR programwe perform the following mutations with certain probability:6Semantics-Guided InstantiationSemantics-correct queries enable fuzzers to dig deeply into DBMSs’execution logic and discover bugs effectively. However, generatingsemantics-correct test cases is an unsolved challenge for fuzzingprograms that take structured, semantics-binding inputs [44]. Previous research shows that 90% of the test cases generated by jsfunfuzz [48], a state-of-the-art JavaScript fuzzer, are semanticallyinvalid [35]. Similar problems also exist in DBMS testing.We propose a data instantiation algorithm to improve the semantic correctness of generated SQL queries. As mentioned in §5,after mutation the IR program is a syntax-correct skeleton withdata stripped. Our instantiator first analyzes the dependency between different data, and fill the skeleton with concrete values thatsatisfy all dependencies. After the instantiation, the query has ahigh chance to be semantically correct. Insertion adds an IR into an appropriate position of 𝑣. If the leftchild of 𝑣 is empty, we randomly pick an IR 𝑤 from the IR librarythat shares the same type as 𝑣. If the left child of 𝑤 is not empty,we use it as the left child of 𝑣. The same operation applies to theright child. Replacement changes 𝑣 or its operands. We first randomly pickone IR 𝑤 of the same type as 𝑣 from the IR library. Then we copythe children of 𝑤 to 𝑣, or we can replace 𝑣 with 𝑤 and update all𝑣’s references to 𝑤. Deletion removes a 𝑣 as a whole by simply replacing it with anempty IR. The same operation can be applied to its children.6.1Data Dependency InferenceData dependency describes the relationship between semanticbinding data. Any unsatisfied dependency will make the queryfail semantic checks. Figure

current mutation-based fuzzers cannot effectively test database management systems (DBMSs), which strictly check inputs for valid syntax and semantics. Generation-based testing can guaran-tee the syntax correctness of the inputs, but it does not utilize any feedback, like code coverage, to guide the path exploration.