Transcription

I.J. Information Engineering and Electronic Business, 2017, 6, 1-9Published Online November 2017 in MECS (http://www.mecs-press.org/)DOI: 10.5815/ijieeb.2017.06.01ASR for Tajweed Rules: Integrated with SelfLearning EnvironmentsAhmed AbdulQader Al-BakeriFaculty of Computing and Information Technology, King Abdulaziz University, Jeddah, Saudi ArabiaEmail: ahmedalbakiri@gmail.comAbdullah Ahmad BasuhailFaculty of Computing and Information Technology, King Abdulaziz University, Jeddah, Saudi ArabiaEmail: abasuhail@kau.edu.saReceived: 02 June 2017; Accepted: 01 August 2017; Published: 08 November 2017Abstract—Due to the recent progress in technology, thetraditional learning setting in several fields has beenrenewed by different environments of learning, most ofwhich involve the use of computers and networking toachieve a type of e-learning. With great interestsurrounding the Holy Quran related research, only a fewscientific research has been conducted on the rules ofTajweed (intonation) based on automatic speechrecognition (ASR). In this research, the use of ASR andMVC design is proposed. This system enhances thelearners’ basic knowledge of Tajweed and facilitates selflearning. The learning process that is based on ASRensures that the students have the proper pronunciation ofthe verses of the Holy Quran. However, the traditionalmethod requires that both students and teacher meet faceto-face. This requirement is a limitation to enhancingindividuals’ learning. The purpose of this research is touse speech recognition techniques to correct students’recitation automatically, bearing in mind the rules ofTajweed. In the final steps, the system is integrated withself-learning environments which depend on MVCarchitectures.Index Terms—Automatic Speech Recognition (ASR),Acoustic model, Phonetic dictionary, Language model,Hidden Markov Model, Model View Controller (MVC).I. INTRODUCTIONThe Quran is the Holy book for the Muslims. TheQuran contains guidance for life, which has to be appliedby the Muslim people. In order to achieve this goal, it isimportant for the Muslims to understand the Quranclearly so they can be capable of applying it. Recitation isone of the Holy Quran related sciences. Previously, it wasnecessary to have a teacher of Quran and a student tomeet face-to-face for the student to learn the recitationorally. It is the only certified way to guarantee that acertain verse of the Holy Quran is recited correctly.Nowadays, because of the continuous increase andhuge demand of people to learn the Quran, severalorganizations have started serving the learners byCopyright 2017 MECSproviding an online instructor in order to help them learnhow to recite the verses of the Holy Quran correctlyaccording to the rules of intonation (Tajweed science).Prophet Muhammad (peace be upon him) is the founderof the rules of this science, and certain Sahabah(companions of the prophet; may Allah be pleased withthem) have learned from him the rules of pronunciation,and then those Sahabah have taught the secondgeneration [1]. The process has continued up in the samemanner till now.The model proposed in this research facilitatesteaching the recitation of the Holy Quran online so thatstudents can practice Quran rules through using automaticspeech recognition (ASR).This approach of teaching has several benefits such asthe delivery to individuals who cannot attend the Halaqat(sessions) held at the masjids (mosques), and it facilitatesQuran teaching styles to receive more than the longestablished model. The extreme importance of recitationand memorization of the Quran is due to the numerousbenefits for readers and learners, as stated in Quran andSunnah [2]. The learning of Quran is achieved by aqualified reciter (called sheikh qari) who has elderlicensed linked to the transmission chain until it reachesthe Messenger of Allah, Prophet Muhammad (peace beupon him). Detailed information about the Holy Quranand its sciences can be found in many resources; forexample, see [3].Due to the extensive use of the Internet and itsavailability, there is a strong need to develop a systemthat emulates the traditional way of the Quran teaching.There is some research that focuses on these issues, suchas Miqra’ah, which is a server that uses virtually over theInternet.The Holy Quran is written in the Arabic language,which is considered as a complex morphologicallanguage. From the perspective of ASR, the combinationof letters is pronounced in the same way or different,depending on the Harakat used in upper and lower-casecharacter [4]. Intrinsic motivation to develop ASR asparticipation to serve the Holy Quran sciences and itsproposed approaches is needed to implement a system toI.J. Information Engineering and Electronic Business, 2017, 6, 1-9

2ASR for Tajweed Rules: Integrated with Self-Learning Environmentscorrect the pronunciation mistakes and integrate it withina self-learning environment. Therefore, we suggest theuse of the Model View Controller (MVC) as a basestructure that helps in massive development such as thisresearch. Phonetic Quran is a special case of Arabicphonemes where there is a guttural letter followed by anyother letter. This case is called guttural manifestation.Gutturalness, in Quran, relates to the quality of beingguttural (i.e., producing a particular sound that comesfrom the back of the throat). The articulation of Quranemphatics affects adjacent vowels.There are many commercial packages that are available,such as audio applications to recite (Tarteel) the HolyQuran. One among these packages is the Quran AutoReciter (QAR) [5]; however, this application does notsupport the rules of Tajweed to verify and validate theQuranic recitation.The field of speech recognition in Quranic voicerecognition is a significant field, where the processingand acoustic model has a relation with Arabic phonemesand articulation of each word; thus, the research in therecitation of Quran could be taken from a different aspect.In general, and especially in computer science, thereare substantial research achieves meant to produceworthy results in the correction of the pronunciation ofthe Quran words according to the rules of Tajweed.Hassan Tabbal has done research on the topic ofautomated delimiters, which extracts ayah (verse) froman audio file and then converts verses of Quran into anaudio file using the technology of speech recognitiontools. The developed system depends on the frameworkof Sphinx IV [6].Putra, Atmaja, and Prananto developed a learningsystem that used speech recognition for the recitation ofthe Quranic verses to reduce obstacles in learning theQuran and to facilitate the learning process. Theirimplementation depended on the Gaussian MixtureModel (GMM) and the Mel Frequency Cepstral (MFCC)features. The system produces good results for aneffective and flexible learning process. The method oftemplate referencing was used in that research [7]. NoorJamaliah, in her master thesis in the field of speechrecognition, used Mel Frequency Cepstral Coefficients(MFCC) for extracting feature from the input sound, andshe used the Hidden Markov Model (HMM) forrecognition and training purposes. The engine showedrecognition rates that exceeded 86.41% (phonemes), and91.95% (ayates) [8].Arabic sound is among the first of the world’slanguages that have been analyzed and described. Thearticulation manner and place of each sound in Arabicwere documented and identified in the eighth century ADby a famous book written by Sibawayah called Al-Kitaab.Since then, not much work has been added to the treatiseof Sibawayah. Recently, King Abdulaziz City for Scienceand Technology (KACST) has been doing research on theArabic Phonetics using many tools that treated signalsand captured images from glottis, which also include theair pressure, side and front facial images, airflow, lingualpalatal contact, perception and nasality. The raw data ofCopyright 2017 MECSKAPD are available on 3 CDs for researchers [9]. Aresearch on e-Halagat is demonstrated in [10].Noureddine Aloui with other researchers used DiscreteWalsh Hadamard Transform (DWHT), where the originalspeech is converted into stationary frames, and thenapplied the DWHT to the output signal. The performanceis evaluated by using some objective criteria such asNRMSE, SNR, CR, and PSNR [11].Nijhawan and Soni used the MFCC for featureextraction to build Speaker Recognition System (SRS)[12]. The training phase was done by calculating MFCC,executing VQ, finding the nearest neighbor usingEuclidean distance, and then computing centroid andcreating codebook for each speaker. After the completionof the training process, the testing phase is achievedthrough calculating MFCC, finding the nearest neighbor,finding minimum distance and then decision making.Reference [13] presented the use of DTW algorithm tocompare between the MFCC features extraction of thelearner and the MFCC features of the teacher, which wasstored previously in the server. DTW is a technique usedfor measuring the distance between the student's speechsignal and the exemplar’s (teacher) speech signal. Theresults of DTW comparison is given the closest numberto zero when the two words are similar and greater thanzero if the two words are differentiated.Carnegie Mellon University has developed a group ofspeech recognition systems called Sphinx. Sphinx 3 isamong these systems [14]; speech recognition waswritten in C for a decoder; a modified speech recognitionwas written in Java, and the Pocketsphinx is a lightweightspeech recognition library written in C as well [15].Sphinx-II speech recognition can be used to constructmedium, small, or large lexicon applications. Sphinx is aspeaker-independent recognition system and continuousspeech using statistical language n-gram and hiddenMarkov acoustic models (HMMs).II. THE RESEARCH PROBLEMThrough progress in technology, the traditionallearning environments in several fields have beenrenewed and now primarily use computer systems andnetworks to achieve a type of e-learning. With the greatinterest in the Holy Quran research, there is littlescientific research that has been conducted in regard tothe rules of Tajweed based on ASR and using a helpfularchitecture that could help to enhance the e-learningenvironment. Depending on speech recognitiontechnology, open-source speech recognition tools are ofinterest in the research, not just in the Holy Quransciences but also to build learning environments fordifferent languages. It is important to use automaticspeech recognition to train the system to recognize theQuranic Ayat (verses) recited by different reciters. Whensuch a system is built, it will improve the level oflearning one can achieve through reading the Holy Quran.At the same time, there are no time limits imposed on thestudent to learn. The learning will be dependent on hisavailable time, because he can use the system wheneverI.J. Information Engineering and Electronic Business, 2017, 6, 1-9

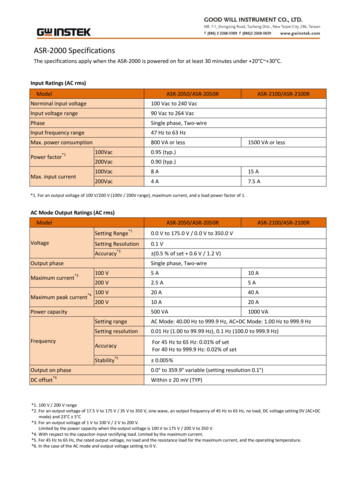

ASR for Tajweed Rules: Integrated with Self-Learning Environmentshe is free. This method establishes a great newenvironment for the learner to practice the recitation ofthe Quran based on the Tajweed rules.There is no database for the Holy Quran, which can beengaged directly to the training process, so our goal is toimplement this database and make it available to the otherresearchers.III. RESEARCH METHODOLOGYThe recitation sound is unique, recognizable andreproducible according to specific pronunciation rules ofTajweed. The system’s input is transcription phonetic of aspeech utterance and a speech signal. Thus, this researchrequires having a reciter to take samples out of inputspeech, extraction of features, training features, patternclassification and matching. These stages are essentialcomponents of a verse recitation formulation for speechrecognition architecture. Automated speech recognitionfor checking Tajweed rules is illustrated in Fig. 1. Itdemonstrates the correction of the learner’s verserecitation. The training phase and matching phase areincluded in this system. The algorithm of Hidden MarkovModel (HMM) was selected for feature training, featureextraction, and pattern recognition.3(1) Features: when the number of parameters is large,we attempt to enhance it. By splitting speech on frames,we can calculate the numbers from speech. The length ofeach frame is typically ten milliseconds.(2) Model: here, the mathematical object describes themodel and the commonly spoken word attributes aregathered using a mathematical object. Hidden MarkovModel is the speech model. The process in this model ispresented at sequential states which change in certainprobability with one another. The speech is describedthrough this sequential model.(3) The process of matching itself, which compares allmodels with all feature vectors. At each stage, we get thebest matching variants that we maintain and extend toproduce the best results of matching in the next frame.Speech recognition requires the combination of threeentities in order to produce a speech recognition engine.These three entities are the acoustic model, phoneticdictionary, and language model.The properties of the acoustic model for the atomicacoustic unit are also known as the senone. The phoneticdictionary includes a mapping from phones to words. Torestrict word search in Hidden Markov Models, we usethe language model. This model expresses the wordwhich could follow previously recognized words. Thematching is achieved in a sequential process and helps torestrict the process of matching by disrobing words thatcould not be probable. N-gram language models are themost popular language models where the finite stateautomation defines the speech sequences.A. Transcription fileThe link between the Quranic Ayat and their audiofiles is achieved through the transcription file. Thedelimiters s and /s are used for the transcription ofthe audio file’s contents which consist of Quranic Ayah,so each audio recorded and used in the ASR engineshould be uniquely identified as it is written in thetranscription file. Table 1 illustrates an example of thetranscription file for one Surah (chapter) of the HolyQuran.Currently, in the audio file, we have recorded one ofthe authors’ voice as a reciter and the voices of 10 famousreciters of the Holy Quran.Table 1. Transcription File for Surah Al-lkhlas بِس ِْم ه /s (alhosari112 0) s الر ِح ِيم الرحْ َم ِن ه َّللاِ ه قُ ْل ه َُو ه /s (alhosari112 1) s َّللاُ أَ َحد ه /s (alhosari112 2) s ُ ص َمد َّللاُ ال ه s لَ ْم يَ ِل ْد َولَ ْم يُولَ ْد /s (alhosari112 3) s َولَ ْم يَ ُك ْن لَهُ ُكفُ ًوا أَ َحد /s (alhosari112 4)Fig.1. Automated speech recognition system for checking Tajweed rules.The steps of the approach to speech recognition are toget a waveform, divide it on utterances by silences, andthen attempt to recognize what the speaker said in eachutterance. We try to match all possible combination ofwords with audio by selecting the best results of thematch combinations. The essential components of thematching process include:Copyright 2017 MECSAccording to this template, the audio file is indexed as:first is the reciter, followed by chapter number, followedby an underscore, and finally the number of Ayah.B. CorpusThe corpus consists of the voice of Quran reciter. Thisis a vocal database with a sample rate of 16 kHz and amono wave format, as presented in Table 2. It's importantI.J. Information Engineering and Electronic Business, 2017, 6, 1-9

4ASR for Tajweed Rules: Integrated with Self-Learning Environmentsthat the duration of the silence at the beginning and at theend is no more than 0.2 seconds.Table 2. Recording ParametersParametersValuesSampling16khz, 16-bitwav formatMono wavCorpusAl-Ikhlas, AlrahmanSpeakers10 recitersThe high recognition rate is based on the corpuspreparation, where the chosen word should be selectedcarefully to be representative of the language and savedas high quality. The words should be exchanged with theselected language, but for our case, we have chosen twochapters of the Holy Quran, which can be extendable totrain more chapters in the corpus.C. Acoustic Model TrainingThe grammar used in the system is getting throughprocessing the Quranic text in certain statistical steps thatgenerate the Quran language model. The toolkit ofcmuclmtk tools [16] is used here to get the uni-grams, bigrams, and tri-grams. Fig. 3 illustrates the languagemodel process. The steps to create the Quran languagemodel is to first count the uni-gram words. The secondstep is to convert it to task vocabulary, the input as aword unigram file, which is the output of text2wfreq. Theoutput is a vocabulary file, where the file contains theword corresponding to its number of occurrences. Thethird step is to produce the tri-grams and bi-grams basedon the previous vocabulary. At the end, the output isconverted to a binary format or to the ARPA (AdvancedResearch Projects Agency) format language model.The language model format should be delimitedbetween s and /s tags. The text consists of diversesentences, so each utterance is indicated by two signs; thefirst is s , which is bounded as the start of sentences,and /s to mark the end of sentences.The Hidden Markov Model is provided through thecomponents of the acoustic models and uses the Quranictri-phones to recognize an Ayah of the Holy Quran. Thestructure of HMM is presented in Fig. 2. The figureshows the basic structure of HMM. There are five stateswith three emitting states used to present the acousticmodel of tri-phone. The Gaussian mixture density is usedto train the state emission. In this representation, a letterfollowed by two numbers, such as a12, is the probabilitytransition, and the b1, b2, and b3 are the emissionprobabilities. The Gaussian Mixture probabilities withHidden Markov Model are called Continuous HiddenMarkov Model (CHMM). The term P (xt j) is theprobability of xt observation with given transition state j;q0, q1, q3.qt is a state sequence; Nj,k is a k-th Gaussiandistribution, and Wj,k is the mixture weights. Its equationis:b j xt P xt qt j x 2 W j ,k N j ,k xt M(1)Fig.3. Language Model Creation.The effective technique to build speech recognitionwith a large vocabulary is through the CHMM method.The fundamental difference between n-gram modelsdepends mainly on the N chosen. It's difficult to get theentire word history probability in a sentence, so in thisresearch, we use the method of N-1 words such as thetrigram model n 3, which takes the two previous wordsinto account while the n 2 takes the two preceding wordsonly, and the n 1 takes one word at a time.E. Phonetic DictionaryFig.2. Bakis Model structure.D. Quranic Language ModelCopyright 2017 MECSThe phonetic dictionary involves all phonemes that areused in the transcription file, where the phonemes are thesymbolic representation of spoken words in the audiofiles. The dictionary now is dynamically created throughcoding in Python language. The diacritic marks such as ُ,and ِ, are considered in the process. Table 3 shows themapping between a word and its phone. We havegenerated these marks using the syllable. The results ofthese two different schemes are shown in the resultssection.I.J. Information Engineering and Electronic Business, 2017, 6, 1-9

ASR for Tajweed Rules: Integrated with Self-Learning EnvironmentsThe long vowel length is generally equivalent to twoshort vowels; /AW/ is the diphthong of Fatha and Damma,and the /AY/ is the diphthong of Fatha and Kasra. Itcomes when Fatha appears before undiacritized ' ي ,' whilethe /AW/ acts when an undiacritized ' 'و appears and Fathacomes before that. The /T/ and /K/ correspond to ' 'ت and' 'ك respectively. They are counted as voiceless stopsletters—closely to their English counterparts. The Dhadletter ' 'ض , corresponds to /DD/ in its English counterpart.Table 3. Phonetic Transcription for Surah Al-lkhlasE AE: L AE: E IHE AE: N IH NE AE HH AE D UH NE AE F N AE: N IH NE AE L L AE:E AE Q TT AH: R IXِ َآَلء آن أ َ َحد أ َ ْفنَان أ َ هَل َ أ َ ْق ار ِ ط E AE N أ َ ْن E AE Y Y UH H AEَ أَيُّه إِ ْست َب َْرق E IH S T AE B R AA Q IX N .Table 4. Phoneme List for Arabic meArabicLetter/B//T//TH//JH/ ب /AE/ ض /AE://AA//AA:/َ ـ ـَا َ ـ َ ـ /DD/ ت ث ج /TT//DH//Al/ ط ظ ع /HH//KH//D/ ح خ د /AH//AH://UH/َ ق َقا ُ ـ /GH//F//Q/ غ ف ق /DH//R/ ذ ر /UW//UX/ ـُو ُ غ /K//AY//Z//S//SH/ ز س ش /IH//IY//IX/ ب ِ ـ ِي ِ غ /M//N//H/ ك ـَي م ن هـ /SS//E/ ص ء /AW//L/ ـَو ل /W//Y/ و ي Several languages are not supported by CMUdic toproduce the dictionary file, so we can do this in severalways. The Arabic language is one of those languages thatare not supported by CMUdic, so we have created thedictionary of Arabic language, considering the rules onthe lookup dictionary. Some languages provide a list ofphonemes which the programmer can use toautomatically generate the phoneme. In our case, wechose the dictionary building algorithm using threefamous techniques used to produce the pronunciationdictionary. These techniques are:1- Rule based2- Recurrent neural network (RNN)3- Lookup dictionaryThere is difficulty in producing the pronunciation filedue to some issues, such as irregular pronunciation. Thereis open-source software that can be used to produce amapping between a word and its phonemes; espeak is asoftware that can be used to create a phonetic dictionary.Many languages have tools that can reduce the timeneeded to build the dictionary file, and then it can be usedthereafter.IV. SET OF ARABIC PHONEMESEach Arabic phoneme corresponds to its Englishrepresentation symbol, as is shown in Table 4. Thechosen phoneme symbol is taken into consideration of theEnglish ASR phoneme, and it’s closely similar to Arabicphoneme. Specifically, the set of phonemes that we haveused depends on the research that has been done byKACST about Text-to-Speech systems [17,18]. The /AE/,/UH/, and /IH/, are symbols of short vowels in the Arabiclanguage, which represent the diacritical marks Fatha,Damma, and Kasra respectively. The pharyngealizedallophone of the /AE/ is /AA/.The pharyngealized allophone of Damma /UH/ is /UX/,and for Kasra /IH/, it’s /IX/. The /UW/ is the long vowelfor Damma, followed by ' و ,' and /AE:/ for Fatha, followedby ' ا ,' and /IY/ is for Kasra, followed by ' ;'ي these /UW/,/AE:/, and /IY/ are considered long vowel allophones ofthe Arabic language.Copyright 2017 MECS5The phone /Q/ is a representation for emphatic Arabicletter ' ;'ق /E/ is a representation for plosive sound ' ء ,' andphone /G/ represents the ' 'ج . The representation phonesfor voiced fricative letters in Arabic are /DH/, /Z/, /GH/,and /AI/, which are ' 'ظ 'ز' 'غ' 'ع . The Arabic phones thatare similar to resonant of the English phones, are /R/for ' ر ,' /L/ for ' 'ل , /W/ for ' 'و , and /Y/ for ' 'ي .V. INTEGRATED ENVIRONMENTSASR redirects learners to its site when they log into thesystem as demonstrated in Fig. 4. There are severalchoices that appear on the page to help learner's followup with their learning progress and allow them tocommunicate with their instructor. In addition, the systemprovides the ASR to allow learners listen to the Quranreciter and then record their sounds so the ASR enginecorrects them in the case of incorrect pronunciation.Moreover, a teacher can revise the progress history ofeach student (the student’s scores will be displayed on hispage), and they can offer advice to their students toimprove their level of learning. The administrator isresponsible for adding, deleting, updating the informationof any staff in the system and managing the entire system.The administrator is also responsible for the assignmentof students to certain teachers and allocating theproportion of students to each instructor. In addition, hecan assign the students to groups according to their ages,such as students under the age of 20 being allocatedthrough the administrator and moved to the proper class.I.J. Information Engineering and Electronic Business, 2017, 6, 1-9

6ASR for Tajweed Rules: Integrated with Self-Learning EnvironmentsThe system can make rulers (parents) monitor theprogress of their children.In the training process, the first scenario we havefollowed is the use of phonemes, and the second scenariois the use of the syllables to train the system. We foundthat using the syllable to train the data is workable forsmall data where we get 100% accuracy of the systemwith a syllable as shown in Table 6. These results wereachieved without any insertion, deletion, or substitutionerrors, but when we increased the amount of data, thisaccuracy decreased. So, we decided to work on phonemesrather than syllables.As shown in Table 5, the total training words is 19, thecorrect words are 18, and there are two error words. Thetotal percentage of correct words is 94.74%, while therate of error is 10.53%, and the accuracy rate is 89.47%.The insertions are one, and the deletions are one, whilethe substitutions are zero.Table 5. Quran Automatic Speech Recognition for Surah Al-lkhlasFig.4. Integrated Environments with ASR.VI. RESULTS AND DISCUSSIONThe word error rate (WER) is metric that assesses theASR performance. In the ASR results, there is percentageof error, which refers to the number of errors in themisrecognized words that occurred in the speech. Thus,the WER is a measurement of the performance of ASR.The situation regarding the continuous speech recognitionhas some differences. In continuous speech recognition,the WER is not efficient enough to measure theperformance of ASR because the sequence of words incontinuous speech recognition has additional errors thatcould occur in the results of ASR. First is wordsubstitution; it occurs when there is a replacement of aword and when an incorrect word is put in place of acorrect word, such as when exactly the speaker speaks aword that the ASR engine recognizes as another word.The second error that could happen in the continuousspeech recognition is the deletion of words. Worddeletion happens when the speaker spells a word, but thisword is not recognized in the results of ASR. At the end,the last error is an insertion—where the actual spokenword is recognized, and there is an extra word not spoken,but the ASR system recognized it as spoken word.We used the phonemes described in the previoussection with Surah (chapter) 112 from the Holy Quran.The number of tried states is static for now, and theGaussian Mixtures dimension has a straight effect on thespeech recognition performance. The training for databrings two choices. The first training is contextdependent, which is used for large data, and the second iscontext-independent, which is used to train the systemthat has a short data. In other words, when we have asmall amount of data, we can use the context-independenttraining more effectively.Copyright 2017 MECSAyahWordsSubsDelsIns%Accuracy الرحِ ِيم الرحْ َم ِن ه َّللا ه ِ بِس ِْم ه قُلْ ه َُو ه َّللاُ أ َ َحد 4000100401075 ه ُ ص َمد َّللاُ ال ه َل ْم يَ ِل ْد َو َل ْم يُولَ ْد 2001504000100 َولَ ْم َي ُك ْن لَهُ ُكفُ ًوا أ َ َحد 5000100Building the ASR based on the syllable is analternative method where there are two approaches thatcan be followed to segment the speech into units. Usingsyllables to segment speech brings greater results thanusing phonemes. The testing word for phonemes andsyllable is 19 words. The accuracy of the syllable in ASRis 100%, and 89.47% for using phonemes. Usingsyllables is a better choice for ASR in some cases, suchas when the training data is not large. If there are manyutterances needed to be converted to their correspondingsyllable, then the conversion process is going to be morecomplex due to the absence of rules for the creation ofsyllable units. The limitation on the number of syllablesdecreases the accuracy of the system. The determinationof the syllable’s boundary is a difficult process as well.Table 6. Automatic Speech Recognition for Surah Al-lkhlas usingSyllableAyahWordsSubsDelsIns%Accuracy الرحِ ِيم الرحْ َم ِن ه َّللا ه ِ بِس ِْم ه قُلْ ه َُو ه َّللاُ أ َ َحد 44401004440100 ه ُ ص َمد َّللاُ ال ه َ لَ ْم يَ ِل ْد َولَ ْم يُول ْد 22201004440100 َولَ ْم َي ُك ْن لَهُ ُكفُ ًوا أ َ َحد 5550100Table 7 shows comparisons between the syllables andthe results of the phonemes. The process of training isbased on the Hidden Markov Model for syllable andphoneme. The changing is done on the pronunciationdictionary to test the system based on two differentapproaches that can be used to build the dictionary.Tables 8 and 9 help to take the proper combinations ofdifferent reciters, where we can exclude the inapplicableI.J. Information Engineering and Electronic Business, 2017, 6, 1-9

ASR for Tajweed Rules: Integrated with Self-Learning Environmentsresults of recognition to increase the accuracy of thesystem. The chosen word and its right pronunciation is areflective job, where the results we have is affected by theway of building the pronunciation file for each word. Thesubstitution is seen as complex work due to its need formore states than insertion and deletion. The PercentageCorrect and Word Accuracy are calculated using thefollowing equations: Words Correct Percentage Correct 100 * Correct length (2) Correct Length ( Subs Dels Ins) Word Accuracy 100 * Correct length (3)Table 7. Comparison between Syllable and Phonemes1 Surah2 383Correct1819288258Errors20109151% Correct94.7410075.2067.36% Error10.53028.4639.43% s105148Substitution004477Table 8. Quran ASR Using Phonemes (Second Five Reciters)Reciter12345Total 1109219130195% Correct% 0221049369.8734.6765.331745

surrounding the Holy Quran related research, only a few scientific research has been conducted on the rules of Tajweed (intonation) based on automatic speech recognition (ASR). In this research, the use of ASR and MVC design is proposed. This system enhances the learners' basic knowledge of Tajweed and facilitates self-learning.