
Laboratory Informatics Guide 2014
www.scientific-computing.com/lig2014
From the publishers of Scientific Computing World


Contents
Laboratory informatics guide 2014

Integrated laboratories, page 4
Peter Boogaard reviews efforts to make the laboratory an integrated operation

Data interchange, page 10
Standardisation is crucial in a world where sharing is increasingly commonplace, argues John Trigg

Informatics in action, page 14
Sophia Ktori canvasses the views of some key users of informatics systems – from laboratories to multinationals

Product round-up, page 20
A selection of informatics products currently on the market, rounded up by Robert Roe

Analysis on the go, page 26
Robert Roe discovers how mobile phones and associated software are becoming increasingly important in laboratories

Suppliers, page 30
A comprehensive listing of suppliers, consultants and integrators

Welcome

There is a paradox at the heart of this year's Laboratory Informatics Guide. On the one hand, we have an article showing how technology developed for the consumer market can help drive down costs and improve efficiencies in the work of the analytical laboratory. But we also have an article lamenting how the sort of information sharing that consumers take for granted – think Flickr or Facebook – is currently impossible in laboratory informatics.

For very good reasons, change tends to be slow in this discipline. No one can play with electronic systems to see how to make them more efficient or cheaper, not if those systems have to conform to regulations issued by the US Food and Drug Administration and counterpart bodies in other countries. Change has to be carefully orchestrated.

However, the pressure for change never goes away. Analytical laboratories, whether in discovery or quality control, have to justify their cost and demonstrate to higher management that costs are being driven down.

As Robert Roe's article on page 26 demonstrates, sometimes the change comes from technologies developed in entirely different spheres for entirely different purposes.
Mobile phones and tablet computers, developed for the consumer market, are forcing informatics vendors to modify their systems to allow for the input, processing and analysis of data through these devices.

Peter Boogaard reports on the progress being made towards the integrated and paperless laboratory on page 4. However, the lack of common standards for interchanging laboratory data is an obstacle to the further development of informatics, and progress has been frustratingly slow, as John Trigg discusses on page 10.

So this year, the glass is half-full. Let us hope that by the time next year's Laboratory Informatics Guide is published, we can report that the cup is brimming over!

Tom Wilkie
Editor-in-chief

EDITORIAL AND ADMINISTRATIVE TEAM
Editor: Beth Harlen (editor.scw@europascience.com)
Feature writers: Robert Roe, Peter Boogaard, John Trigg, Sophia Ktori
Production editor: Tim Gillett
Circulation/readership enquiries: Pete Vine (subs@europascience.com)

ADVERTISING TEAM
Advertising Sales Manager: Darren Ebbs (darren.ebbs@europascience.com) Tel: +44 (0)1223 275465 Fax: +44 (0)1223 213385
Business Development Manager: Sarah Ellis-Miller (sarah.ellis.miller@europascience.com) Tel: +44 (0)1223 275466 Fax: +44 (0)1223 213385
Advertising Production: David Houghton (david.houghton@europascience.com) Tel: +44 (0)1223 275474 Fax: +44 (0)1223 213385

CORPORATE TEAM
Publishing Director: Warren Clark
Chairman and Publisher: Dr Tom Wilkie
Web: www.scientific-computing.com

SUBSCRIPTIONS: The Laboratory Informatics Guide 2014 is published by Europa Science Ltd, which also publishes Scientific Computing World. Free registration is available to qualifying individuals (register online at www.scientific-computing.com). Subscriptions 100 a year for six issues to readers outside registration requirements. Single issue 20. Orders to ESL, SCW Circulation, 9 Clifton Court, Cambridge CB1 7BN, UK. Tel: +44 (0)1223 211170. Fax: +44 (0)1223 213385. © 2013 Europa Science Ltd.
Whilst every care has been taken in the compilation of this magazine, errors or omissions are not the responsibility of the publishers or of the editorial staff. Opinions expressed are not necessarily those of the publishers or editorial staff. All rights reserved. Unless specifically stated, goods or services mentioned are not formally endorsed by Europa Science Ltd, which does not guarantee or endorse or accept any liability for any goods and/or services featured in this publication.

US copies: Scientific Computing World (ISSN 1356-7853/USPS No 018-753) is published bi-monthly for 100 per year by Europa Science Ltd, and distributed in the USA by DSW, 75 Aberdeen Rd, Emigsville PA 17318-0437. Periodicals postage paid at Emigsville PA. Postmaster: Send address corrections to Scientific Computing World, PO Box 437, Emigsville, PA 17318-0437.

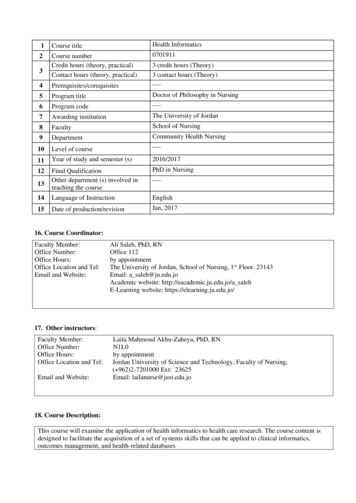

Integrated laboratories
Image: Nixx Photography/Shutterstock

Joining up the laboratory
Peter Boogaard reviews efforts to make the laboratory an integrated operation

It is easier to get data into scientific databases than to get valuable information out of them. For years, we have been spending time and money to integrate systems and processes in the laboratory's knowledge value chain. Many laboratory integration projects are under pressure to deliver on the expectations defined at the project kick-off. So why is laboratory integration so difficult? What are the obstacles to creating value for the consumers of laboratory data? Do we know what these users need and how they would like to consume this information?

Imagine that, in the music world, each label had its own proprietary music file format. How would you be able to share music? By their nature, standards make it easier to create, share and integrate data. Do we know the requirements of such a data standard? What about managing metadata and controlled vocabularies? Data standards are the rules by which data are described and recorded. In order to share, exchange and understand data, we must standardise the format (data container) as well as the meaning (metadata/context).
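To make the distinction between format and meaning concrete, here is a minimal Python sketch. It assumes a hypothetical record layout and a tiny, made-up controlled vocabulary; neither comes from any real laboratory standard. The dataclass fixes the container, while the vocabulary check enforces agreed meaning for the metadata.

```python
from dataclasses import dataclass, field

# Hypothetical controlled vocabulary (illustration only): each metadata key
# may take only one of the agreed terms. Real vocabularies are far larger.
CONTROLLED_VOCABULARY = {
    "technique": {"LC-UV", "LC-MS", "NMR", "Titration"},
    "unit": {"mg/mL", "AU", "ppm", "%"},
}


@dataclass
class Measurement:
    # The "container": a fixed, agreed structure for the data itself.
    sample_id: str
    values: list[float]
    # The "meaning": context describing how to interpret the values.
    metadata: dict[str, str] = field(default_factory=dict)

    def vocabulary_errors(self) -> list[str]:
        """Return a message for every metadata entry outside the vocabulary."""
        errors = []
        for key, value in self.metadata.items():
            allowed = CONTROLLED_VOCABULARY.get(key)
            if allowed is None:
                errors.append(f"unknown metadata key: {key}")
            elif value not in allowed:
                errors.append(f"{key}={value!r} is not an agreed term")
        return errors


good = Measurement("S-001", [0.12, 0.15], {"technique": "LC-UV", "unit": "AU"})
bad = Measurement("S-002", [0.12], {"technique": "lc uv", "colour": "blue"})
print(good.vocabulary_errors())  # []
print(bad.vocabulary_errors())
```

Both records share the same container, but only the first one can be interpreted unambiguously by another system; the second uses a free-text technique name and an unrecognised key.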
As of today, there is no unified scientific data standard in place to support heterogeneous and multi-discipline analytical technologies. There have been several attempts, but they are limited in scope, not extensible or incomplete, resulting in recurring, cumbersome and expensive software customisations.

Pay attention to the consumer of the data

Integrating laboratory instruments started when instrument vendors, such as PerkinElmer and Beckman Instruments, created the first laboratory information management system (LIMS) software in the early 1980s. The initial objective was to give the laboratory manager tools for simple reporting, such as creating certificate of analysis (CoA) reports. These systems were initially designed to support a single consumer, namely the scientists and lab managers. In today's world, consumers of laboratory data can be found across the entire product lifecycle, and may include external organisations such as contract research organisations (CROs) and contract manufacturing organisations (CMOs) (Table 1). A different mindset is required to adapt to this expanded view of the world. It is critical first to analyse who these new lab-data consumers are, and to understand what their objectives are. Often forgotten, but just as important, is to investigate their perspective on usability: the newcomers may be a non-technical audience! Stephen Covey phrased it very nicely: 'Seek first to understand. And then to be understood.' [1] It may sound obvious, but it remains a valuable principle before starting any automation project.

Table 1: Selected consumers of laboratory information data

| Consumer | Objective | Impact / benefit |
|---|---|---|
| Patient | Assure secure, instant access to medical data for doctors | Better healthcare at lower cost |
| Fellow scientist | Re-use experimental data and leverage learning; consistent meta and context data | Higher efficiency and quality |
| Legal | Protect company IP | Consistent externalisation processes (CROs) |
| Finance | Understand overall life-cycle cost of operation | Holistic overall view |
| Customer care | Product complaints and product investigations | Secure the company's brand image |
| Regulation | Faster responses to compliance inquiries | Simpler mechanism to audit heterogeneous scientific data |
| Management | Identify areas for continuous improvement in process; reduce costs | Risk-based information across heterogeneous data systems |
| Stability labs | Simpler mechanism to create e-submissions; ability to submit standardised e-stability data packages | Faster responses during studies; increased efficiency |
| CRO/CMO | Lower cost per analysis by decreasing IT complexity and overhead | Accelerated move from paper to 'paper-on-glass' |
| IT | Reduce bespoke/custom systems; consolidate systems; reduce costs | Unified systems; simpler IT processes |

Figure: Example of a dashboard (image: PerkinElmer)

• For the scientific researcher, the ability to record data, make observations, describe procedures, include images, drawings and diagrams, and collaborate with others to find new chemical compounds and biological structures without any limitation requires a flexible user interface.
• For the QA/QC analyst or operator, the requirements for an integrated laboratory are quite different. A simple, natural-language-based platform that ensures proper procedures are followed will be welcomed.
• To investigate a client's complaint professionally, the customer care employee requires a quick and complete dashboard report showing metrics for all cases, assignments and progress in real time, by task, severity, event cause and root cause. The devil is in the detail, and that's where the laboratory data may give significant insights.
• Legal: instead of saying 'we saw that a couple of years ago, but we don't remember much about it', sensitive information can be searched and retrieved, including from archives.
• During regulatory inspections: 'Show me all the data during this time frame, which raw material batches were involved, and show me all the details.'

Heterogeneous scientific challenges

The lack of data standards is a serious concern in the scientific community. It may seem a boring topic these days, but the need for standardisation in our industry has never been higher. Without such standards, automating data capture from instruments or data systems can be challenging and is expensive.
Initiatives such as the Allotrope Foundation [2] are working hard to develop these badly needed common standards.

The Allotrope Foundation is an international not-for-profit association of biotech and pharmaceutical companies building a common laboratory information framework for an interoperable means of generating, storing, retrieving, transmitting, analysing and archiving laboratory data, and higher-level business objects such as study reports and regulatory submission files. The deliverables from the foundation, sponsored by industry leaders such as Pfizer, Abbott, Amgen, Baxter, BI, BMS, Merck, GSK, Genentech, Roche and others, are an extensible framework that defines a common standard for data representation to facilitate data processing, data exchange and verification. One of the ultimate goals is to eliminate widespread inefficiencies in laboratory data management, archiving, transmittal and retrieval, and to support a start-to-finish product quality lifecycle, enabling cross-functional collaboration between research, development, quality assurance and manufacturing.

The framework will include metadata dictionaries, data standards, and class libraries for managing analytical data throughout its lifespan. Existing or emerging standards will be evaluated and used as appropriate, to avoid 'reinventing the wheel'. It is a wake-up call for the industry, but one that may be muted by our risk-averse, sceptical mindset. It is reminiscent of how database technologies emerged in the 1970s.
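The database story alluded to here turned on the relational model, in which the logical schema is separated from physical storage and questions are posed declaratively. A minimal sketch using Python's built-in sqlite3 module illustrates the idea; the table, column names and values are invented for illustration and do not represent any laboratory system. The question is stated in SQL, and changing the physical organisation by adding an index changes neither the query nor its answer.

```python
import sqlite3

# In-memory database; table and column names are hypothetical.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE result (sample_id TEXT, technique TEXT, analyte TEXT, value REAL)"
)
conn.executemany(
    "INSERT INTO result VALUES (?, ?, ?, ?)",
    [
        ("S-001", "LC-UV", "impurity A", 0.12),
        ("S-001", "LC-UV", "impurity B", 0.05),
        ("S-002", "LC-UV", "impurity A", 0.31),
        ("S-002", "Titration", "assay", 99.2),
    ],
)

# An ad hoc question, phrased declaratively: which samples exceed 0.2 for
# impurity A? Nothing here says how rows are stored or scanned.
QUERY = (
    "SELECT sample_id, value FROM result "
    "WHERE analyte = ? AND value > ? ORDER BY sample_id"
)
before = conn.execute(QUERY, ("impurity A", 0.2)).fetchall()

# Changing the physical organisation (adding an index) leaves the logical
# query, and its answer, untouched.
conn.execute("CREATE INDEX idx_result_analyte ON result (analyte)")
after = conn.execute(QUERY, ("impurity A", 0.2)).fetchall()

print(before)  # [('S-002', 0.31)]
assert before == after
```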
The reader is challenged to identify the similarities between the development of SQL and the initiative to create an intelligent and automated analytical laboratory.

Glass half full or half empty?

Table 2: SQL pros and cons

| Why traditional hierarchical databases were initially abandoned | SQL: a success story |
|---|---|
| Complex architecture | Extensible open architecture |
| Slow responses | Separation of physical data and metadata |
| Vendor-bound | Product-independent |
| Inflexible, fixed data schemas | User-definable, flexible ad hoc query capabilities |
| Required mindset change | Availability of faster computers and networks |
| Invasive technology | Single database language |

The deployment of computerised database systems started in the 1960s, when the use of corporate computers became mainstream. There were two popular database models in this decade: a network model called CODASYL, and a hierarchical model called IMS. In 1970, Ted Codd (IBM) published an important paper proposing a relational database model. His ideas changed the way people thought about databases. In his model, the database's schema, or logical organisation, is disconnected from physical information storage, and this became the standard principle for database systems. Several query languages were developed, but the structured query language, or SQL, became the standard query language in the 1980s and was embraced by the entire industry. Vendor-specific proprietary extensions (e.g. PL/SQL [3]) were allowed in the concept, enabling individual vendors to extend its capabilities.

Figure: The benefits of open data standards (source: Allotrope Foundation). Panels: an innovation ecosystem based on open, vendor-agnostic data standards; scientific data collection; document preparation; information to consumers; partnerships (CRO, CMO, regulatory submission).

Now back to the laboratory. The current situation is that there is no framework for scientific data standards. Formats are vendor-bound, product-dependent, in many cases based on a closed architecture, and complex in nature. There are plausible reasons why, at this moment, our industry has no generally accepted raw-data and metadata standards, but should we not learn from other industries and adopt best practices?

The Analytical Information Markup Language (AnIML) is the emerging ASTM XML standard for analytical chemistry data. The project is a collaborative effort between many groups and individuals and is sanctioned by ASTM subcommittee E13.15 [4]. AnIML is a standardised data format that allows experimental data to be stored and shared. It is suitable for a wide range of analytical measurement techniques. AnIML documents can capture laboratory workflows and results, no matter which instruments or measurement techniques were used.

E-Workbook Suite (IDBS) allows spectra files to be dropped in from the experiment, whereupon they are automatically converted to AnIML and rendered. The rendering application then allows the scientist to annotate the spectra with searchable chemical structures, text, and hyperlinks to other systems and records. The AnIML data is also indexed alongside everything else, allowing specific searching of metadata and properties. These processes are non-invasive, meaning that the original raw data files are also kept.

An application programming interface (API) specifies how software components should interact with each other. Here, it allows customers and third parties to extend the types of spectra that are supported, by writing new raw-data-to-AnIML converters or plug-ins in third-party components.

Other examples of changes in the way laboratories may operate in the future relate to how balance and titration instrument vendors are increasing the value of their instruments by implementing approved and pre-validated methods in them. This may sound a small step, but it may have a significant impact on validation efforts in the laboratory and in manufacturing operations, such as fewer points of failure during operation, less customisation of software and better documentation.

The desire to convert manufacturing processes from traditional batch-oriented processes to continuous operation has accelerated the adoption of process analytical technology (PAT) as a way to create sustainable and flexible approaches for manufacturing operations. PAT is expected to grow significantly in the next decade. Over time, in-line, at-line and on-line analysis will complement, and potentially replace, off-line (batch-oriented) laboratory testing in manufacturing processes. Regulatory authorities and international bodies such as the FDA, ICH and ISPE are evaluating these new processes intensively and developing new workflows. These processes will have a high impact on how QA/QC laboratories operate in the next decade. International industry standards such as ANSI/ISA-88 (covering batch process control) and ANSI/ISA-95 (covering automated interfaces between enterprise and control systems) are commonly used in manufacturing. By incorporating these standards, scientists will be able to mine information from development and manufacturing for improved process and product design. In addition, information is more readily transferable between systems. For example, a recipe delivered in early development can be rapidly transferred to a lab execution system for active pharmaceutical ingredient (API) manufacture and then to a method execution system for mainstream manufacturing. ERP and MES applications are using these standards, and it is very likely that integrated laboratory data management capabilities will be included in their software.

Table 3: Applying standards requires a different mindset

| Glass half empty | Glass half full |
|---|---|
| The market is too dispersed | Technologies are emerging rapidly |
| Technology not available | XML and AnIML are accepted as standards |
| Vendor protection | Empowered customers |
| Poor performance | Consistent, unified long-term archive process |

Table 4: Potential integrated laboratory killer apps

| Laboratory integration killer app | Description |
|---|---|
| Archiving | Data archiving is often perceived as merely storing data. Having metadata standards as part of the archive procedure will enable data to be re-used for collaboration between different instruments, in-house or externally with CROs |
| Data finder and data viewer | The ability to do full-context searching across heterogeneous data sources, across in-house and external data systems and archives, and to display the results in a unified viewer |
| Regulatory reviews | The ability to build and transmit to regulatory agencies a standard data package for inspection without altering the underlying information (e.g. regulatory submissions, stability studies) |
| Reduction in (re)qualification processes | In a GxP environment, the ability to automatically update USP methods across individual instruments will significantly reduce the requalification process |

References
1. Stephen Covey, The 7 Habits of Highly Effective People, 1990.
2. Allotrope Foundation, www.allotrope.org
3. PL/SQL (Procedural Language/Structured Query Language) is Oracle Corporation's procedural language extension for SQL and the Oracle relational database.
4. ASTM Subcommittee E13.15 on Analytical Data, www.astm.org/COMMIT/SUBCOMMIT/E1315.htm
5. Forrester Research, Technology Management in the Age of the Customer, 2013.

Conclusion

Empowered customers are disrupting every industry. Technology managers must broaden their agenda to consider not just infrastructure and traditional internal IT processes, but also activities to ensure they deliver value for their 'client'. [5] The power of an integrated laboratory environment is its ability to find detailed answers to support the overall business process.
It is pure waste to perform labour-intensive hunting for information across multi-vendor, multi-technique databases, to check manual transcriptions and to create reports by hand. Having a common industry-standard framework will decrease process variability, resulting in better quality and overall consistency. Non-invasive processes have proven successful in other industries. It is now up to the industry, regulatory bodies and vendors of scientific instrumentation and software platforms to make it happen. Integrating laboratory information really means integrating scientific data collected in the laboratory and beyond. Time will tell if this industry has been able to adopt such a strategy.

Peter Boogaard is an independent laboratory informatics consultant

Data interchange standards: what, why and when?
Standardisation is crucial in a world where sharing is increasingly commonplace, argues John Trigg
Image: Ramcreations/Shutterstock

Why can ordinary consumers share their data when the laboratory informatics community cannot?

Sharing and collaboration are becoming second nature in the consumer world, where the ability to communicate and transfer data over the web has become a routine part of everyday life, to the point where the terms 'upload' and 'download' are part of everyday vocabulary. A simple example is the ability to take a photograph on a camera phone and immediately upload it to a photo-sharing site, or email it, 'message' it or tweet it. The ability to do this is totally dependent on standards: the internet provides the infrastructure; wi-fi or telecoms networks provide the messaging; and the device (camera/phone) generates the data (photo) in a format that can be used by other applications. It's a process everyone takes for granted, without having to worry about the data format of the photo and whether the recipient will be able to open it. It may be thought a simplistic example, particularly when compared with the complexity of laboratory systems that serve an extensive range of measurement and other services, but the underlying principle paints a vivid picture of how laboratory systems ought to be able to work together.

Yet the laboratory informatics community has for decades been debating standards for interchanging data or, more accurately, the lack of such standards. On one side of the debate is a compelling argument that laboratories will be able to work more efficiently and effectively if there are common standards for exchanging data. On the other side, the vendors argue that standards would constrain innovation in the development of tools for capturing and processing data.
In the background is a more political viewpoint: proprietary data formats facilitate a commercial 'lock-in' for the vendors, and the adoption of open data standards would disrupt the marketplace, not only for the vendors but also for third-party systems integrators.

Few people could argue against the benefits of data standards. Laboratories would welcome the ability to acquire data and then process it, view it, store it, share it, re-analyse it and preserve it without the constraints of proprietary data-capture software and integration tools. The advantages include not only ease of use, but also a reduction in the costs associated with third-party and custom solutions for interfacing laboratory devices and systems. A less obvious benefit is the ability to archive data in a human-readable format over the long term. One of the consequences of the transition from paper to digital technologies is that we are going into the unknown, and we will become totally dependent on technology in order to access electronic records. Basically, we will no longer have any physical artefacts that represent our accumulated records of laboratory experimentation and their outcomes. It will all be digital, and the IT industry has a poor track record when it comes to digital preservation.

The long-term preservation of electronic records does, however, present one example of where a standard (PDF or PDF/A) has been adopted in the laboratory world. But electronic document standards such as PDF and PDF/A have a very different purpose from that of data interchange standards. With regard to the write-up of an experiment, PDF or PDF/A can preserve a rendition of the data generated in the experiment, but does not preserve the data itself.
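The difference between preserving a rendition and preserving the data can be shown in a few lines. This Python sketch is illustrative only: the sample values and unit are invented, and JSON merely stands in for a laboratory data interchange standard; it is not what PDF/A, AnIML or any other standard actually specifies.

```python
import json
import statistics

# Hypothetical raw results from an experiment (sample -> measured value).
data = {"S-001": 0.1234, "S-002": 0.3051, "S-003": 0.2287}

# A "rendition": what a report page shows. Values are rounded for display,
# and the structure survives only as laid-out text.
rendition = "\n".join(f"{sample}: {value:.2f}" for sample, value in data.items())

# An interchange form: the values themselves, in a structure any application
# can parse. JSON is only a stand-in for a real laboratory data standard.
interchange = json.dumps({"unit": "mg/mL", "results": data})

# Re-analysis from the interchange form uses the full-precision values...
restored = json.loads(interchange)["results"]
mean_from_data = statistics.mean(restored.values())

# ...whereas recovering numbers from the rendition means scraping text and
# inheriting the display rounding.
scraped = [float(line.split(": ")[1]) for line in rendition.splitlines()]
mean_from_rendition = statistics.mean(scraped)

print(rendition)
print(round(mean_from_data, 4), round(mean_from_rendition, 4))
```

The rendition is perfectly readable, but any later re-analysis based on it silently works with rounded numbers and depends on fragile text parsing.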
In order to preserve the data and maintain the capability to use and re-use it over the long term, a data interchange standard is necessary.

The transition from paper to electronic lab notebooks created the need to preserve, for several decades, the integrity, authenticity and readability of experimental records to support business and scientific requirements. PDF and PDF/A are both registered with ISO as open standards, and are typically used to provide the electronic rendition of the experimental 'document'. The primary purpose is the preservation of a flat document, including the text, fonts, graphics and other information needed to display it. PDF/A is a version of the portable document format (PDF) developed specifically for archiving electronic documents. It differs from PDF by omitting features that are unsuitable for long-term archiving, such as font linking (as opposed to font embedding). It identifies a 'profile' for electronic documents to ensure that they can be reproduced in exactly the same way for years to come. Key to this reproducibility is the requirement for PDF/A documents to be 100 per cent self-contained: all the information necessary for displaying the document in the same manner every time is embedded in the file.

Image: Marie Nimrichterova/S.John/Shutterstock

The business case for data interchange standards is quite clear, as companies focus on productivity (cost reduction), outsourcing or externalisation, and innovation:
• Improved productivity is increasingly dependent on automation and the elimination of inefficient steps and manipulations in data handling. Standards for data interchange would simplify instrument interfaces, cut interfacing costs, reduce errors and simplify validation;
• Outsourcing, or externalisation, involves communicating and sharing data across wide geographic areas and disparate technologies. Standards for data interchange can facilitate these communications by removing the dependence of the data on the application that created them. In other words, the data can be stored, viewed and manipulated in applications other than the one in which they were created; and
• Innovation arises from correlating, mining, visualising and making sense of data from multiple sources. Again, these processes can be easier and faster if data can be stored and accessed in standard formats.

A few standards for interchanging laboratory data do exist, but they have not been adopted on an industry-wide scale.

If the business case is so strong, why is it taking so long to make any progress? From a technology perspective, there has never been a better time to exploit the potential of standards: the development of a global infrastructure in the form of the internet has provided a platform that other consumer and business domains are taking full advantage of, as the example of photo sharing illustrates. However, digitising the laboratory has been a slow journey, stretching over four decades. As laboratories become progressively 'paperless', progress towards a fully integrated environment will depend on overcoming the limitations posed by the current lack of data standards. Organisations such as the Pistoia Alliance, the Allotrope Foundation and the Consortium for Standardisation in Lab Automation (SiLA) have recognised the issue and taken action to address it from the standpoint of 'industry associations'.

As Peter Boogaard explains in the previous article, the Allotrope Foundation (www.allotrope.org) is an international association of biotech and pharmaceutical companies that are collaborating to build a common laboratory information framework for an interoperable means of generating, storing, retrieving, transmitting, analysing and archiving laboratory data, as well as higher-level busine
