Transcription

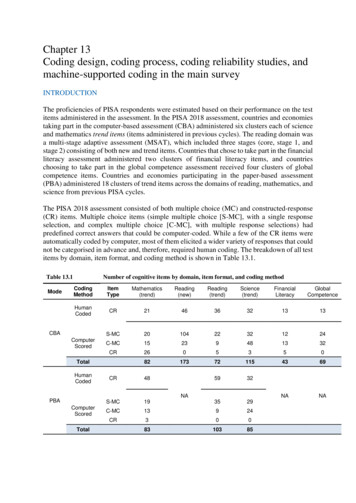

CODING, CRYPTOGRAPHY and CRYPTOGRAPHIC PROTOCOLSprof. RNDr. Jozef Gruska, DrSc.Faculty of InformaticsMasaryk UniversityOctober 6, 2016

YGNEQOGVQ ETASVQITCSJA NGEVWTG

Technické řešenı́ této výukové pomůcky je spolufinancováno Evropským sociálnı́m fondem a státnı́m rozpočtemČeské republiky.

CONTENTS1Basics of coding theory2Linear codes3Cyclic, convolution and Turbo codes - list decoding4Secret-key cryptosystems5Public-key cryptosystems, I. Key exchange, knapsack, RSA6Public-key cryptosystems, II. Other cryptosystems, security, PRG, hashfunctions7Digital signatures8Elliptic curves cryptography and factorization9Identification, authentication, privacy, secret sharing and e-commerce10Protocols to do seemingly impossible and zero-knowledge protocols11Steganography and Watermarking12From theory to practice in cryptography13Quantum cryptography14History and machines of cryptography

BASIC INFORMATION - I.Lectures will start (hopefully) always in time.Materials/slides of the lecture will be onhttp://www.fi.muni.cz/usr/gruska/crypto16For first 10 lectures there will be home exercises - (4-8)each time. They will be posted at the above web pagealways on Tuesday, before the lecture, at 18.00.At the lecture web page you find instructions how tosubmitt solutions of exercises and how they will beevaluated.More points you get for exercises more easy will beyour exam - rules are on the above web page.For lectures I will use: computer slides, overheadprojector slides and, sometimes, also the blackboard.prof. Jozef GruskaIV0540.5/66

BASIC INFORMATION - II.There will be also unobligatory exercise-tutorial sessionsfor this course. They will disscuss subjects dealt with inlecture in more detais or mathemtics behind.One session will be in Czech-Slovak with Bc. MichalAjdarów, Wednesday, B204, 18.00-20.00.One session will be in English with RNDr. LuděkMatyska, Wednesday, B204, 16.00-18.00.Likely, the most efficient use of the lectures is to printmaterials of each lecture before the lecture and tomake your comments into them during the lecture.prof. Jozef GruskaIV0540.6/66

BASIC INFORMATION - II.Lecture’s web page contains also Appendix important very basic facts from the number theory andalgebra that you should, but may not, know and youwill need - read and learn them carefully.Whenever you find an error or misprint in lecture notes,let me know - extra points.prof. Jozef GruskaIV0540.7/66

BASIC INFORMATION - II.To your dispoosal there are also lecture notes ”ExercisesBook” that you can upload from IS system for the lectureIV054, through links ”Ucebni materialy –¿ Exercise Book”Lecture notes contain selected exercises from thehomeworks for the past lectures on Coding, Cryptographyand Cryptographic Protocols” with solutions.prof. Jozef GruskaIV0540.8/66

LITERATURER. Hill: A first course in coding theory, Claredon Press, 1985V. Pless: Introduction to the theory of error-correcting codes, John Willey, 1998J. Gruska: Foundations of computing, Thomson International Computer Press, 1997J. Gruska: Quantum computing, McGraw-Hill, 1999A. Salomaa: Public-key cryptography, Springer, 1990D. R. Stinson: Cryptography: theory and practice, CRC Press, 1995W. Trappe, L. Washington: Introduction to cryptography with coding theoryB. Schneier: Applied cryptography, John Willey and Sons, 1996J. Gruska: Quantum computing, McGraw-Hill, 1999 (For additions and updatings:http://www.mcgraw-hill.co.uk/gruska)S. Singh: The code book, Anchor Books, 1999D. Kahn: The codebreakers. Two story of secret writing. Macmillan, 1996 (Anentertaining and informative history of cryptography.)S. Vaudenay: A classical introduction to cryptography, Springer, 2006J. Gruska: Coding, Cryptography and Cryptographic Protocols, lecture notes,http://www.fi.muni.cz/usr/gruska/crypto15

INTRODUCTION - THREE KEY POINSTransmission of classical information in time and space is nowadays very easy(especially through noiseless channels).It took centuries, and many ingenious developments and discoveries (writing, bookprinting, photography, movies, telegraph, telephone, radio transmissions,TV, -soundsrecording – records, tapes, discs) and, especially, the idea of the digitalisation of all formsof information, to discover fully this property of information.Coding theory develops methods to protect information when transmitted through noisychannels.Information is becoming an increasingly valuable commodity for bothindividuals and society.Cryptography develops methods how to ensure secrecy of information and identity,privacy as well as anonymity of users.A very important property of information is that it is often very easy to makeunlimited number of copies of information.Steganography Watermarking develop methods to hide important information ininnocently looking information (what can be used also to protect intellectual properties).

Part IBasics of coding theory

PROLOGUEPROLOGUEprof. Jozef GruskaIV0541. Basics of coding theory12/66

ROSETTA SPACECRAFTIn 1993 in Europe Rosetta spacecraft project started.In 2004 Rosetta spacecraft was launched.In August 2015 Rosetta spacecraft got on the orbit of the comet 67P (one of 4000known comets of the solar systems) and sent to earth a lot of photos of 67P.In spite of the fact that the comet 67P is 720 millions of kilometers from the earthand there is a lot of noise for signals on the way encoding of photos arrived in such aform that they could be decoded to get excellent photos of the comet.All that was, to the large extent, due to the enormously high level codingtheory already had in 1993.Since that time coding theory has made another enormous progress that hasallowed, among other things, almost perfect mobile communication andtransmission of music in time and space.prof. Jozef GruskaIV0541. Basics of coding theory13/66

ROSETTA spacecraftprof. Jozef GruskaIV0541. Basics of coding theory14/66

ROSETTA LANDING - VIEW from 21 km -29.9.2016prof. Jozef GruskaIV0541. Basics of coding theory15/66

ROSETTA LANDING - VIEW from 51 m -29.9.2016prof. Jozef GruskaIV0541. Basics of coding theory16/66

CHAPTER 1: BASICS of CODING THEORYABSTRACTCoding theory - theory of error correcting codes - is one of the most interesting andapplied part of informatics.Goals of coding theory are to develop systems and methods that allow to detect/correcterrors caused when information is transmitted through noisy channels.All real communication systems that work with digitally represented data, as CD players,TV, fax machines, internet, satellites, mobiles, require to use error correcting codesbecause all real channels are, to some extent, noisy – due to variousinterference/destruction caused by the environmentCoding theory problems are therefore among the very basic and most frequentproblems of storage and transmission of information.Coding theory results allow to create reliable systems out of unreliable systems tostore and/or to transmit information.Coding theory methods are often elegant applications of very basic concepts andmethods of (abstract) algebra.This first chapter presents and illustrates the very basic problems, concepts, methods andresults of coding theory.prof. Jozef GruskaIV0541. Basics of coding theory17/66

CODING - BASIC CONCEPTSError-correcting codes are used to correct messages when they are (erroneously)transmitted through noisy dingDecodingWuserYESuserC(W) noise C'(W)Error correcting frameworkExamplemessageYES or NOYESEncoding 0000001001YES 00000NO 11111Decoding0100100000A code C over an alphabet Σ is a nonempty subset of Σ (C Σ ).A q-nary code is a code over an alphabet of q-symbols.A binary code is a code over the alphabet {0, 1}.Examples of codesC 1 {00, 01, 10, 11} C 2 {000, 010, 101, 100}C 3 {00000, 01101, 10111, 11011}prof. Jozef GruskaIV0541. Basics of coding theory18/66

CHANNELis any physical medium in which information is stored or through which information istransmitted.(Telephone lines, optical fibres and also the atmosphere are examples of channels.)NOISEmay be caused by sunspots, lighting, meteor showers, random radio disturbances, poortyping, poor hearing, . . . .TRANSMISSION GOALS1Encoding of information should be very fast.2Very similar messages should be encoded very differently.3Transmission of encoded messages should be very easy.4Decoding of received messages should be very easy.5Correction of errors introduced in the channel should be reasonably easy.6Maximal amount of information should be transfered per a time unit.BASIC METHOD OF FIGHTING ERRORS: REDUNDANCY!!!Example: 0 is encoded as 00000 and 1 is encoded as 11111.prof. Jozef GruskaIV0541. Basics of coding theory19/66

CHANNELS - MAIN TYPESDiscrete channels and continuous channels are maintypes of channels.With an example of continuous channels we will deal inchapter 3. Two main models of noise in discretechannels are:Shannon stochastic (probabilistic) noise model:Pr(y x) (probability of the output y if the input is x) isknown and the probability of too many errors is low.Hamming adversarial (worst-case) noise model:Channel acts as an adversary that can arbitrarilycorrupt the input codeword subject to a bound on thenumber of errors.prof. Jozef GruskaIV0541. Basics of coding theory20/66

DISCRETE CHANNELS - MATHEMATICAL VIEWSFormally, a discrete Shannon stochastic channel is described by a triple C (Σ, Ω, p),whereΣ is an input alphabetΩ is an output alphabetPr is a probability distribution on Σ Ω and for each i Σ, o Ω, Pr (i, o) is theprobability that the output of the channel is o if the input is i.IMPORTANT CHANNELSBinary symmetric channel maps, with fixed probability p0 , each binary input intoopposite one. Hence, Pr(0, 1) Pr(1, 0) p0 and Pr(0, 0) Pr(1, 1) 1 p0 .Binary erasure channel maps, with fixed probability p0 , binary inputs into {0, 1, e},where e is so called the erasure symbol, and Pr(0, 0) Pr(1, 1) p0 ,Pr(0, e) Pr(1, e) 1 p0 .prof. Jozef GruskaIV0541. Basics of coding theory21/66

BASIC CHANNEL CODING PROBLEMSSummary: The task of a communication channel codingis to encode the information sent over the channel in sucha way that even in the presence of some channel noise,several errors can be detected and/or corrected.prof. Jozef GruskaIV0541. Basics of coding theory22/66

BASIC IDEA of ERROR CORRECTIONDetails of the techniques used to protect information against noise in practice aresometimes rather complicated, but basic principles are mostly easily understood.The key idea is that in order to protect a messageagainst a noise, we should encode the message byadding some redundant information to the message.In such a case, even if the message is corrupted by a noise, there will be enoughredundancy in the encoded message to recover – to decode the messagecompletely.prof. Jozef GruskaIV0541. Basics of coding theory23/66

MAJORITY VOTING DECODING - BASIC IDEAThe basic idea of so called majority votingdecoding/principle or of maximal likelihooddecoding/principle, when a code C is used, isto decode a received message w 0by a codeword w that is the closest one to w 0in the whole set of the potential codewords of agiven code C .prof. Jozef GruskaIV0541. Basics of coding theory24/66

EXAMPLEIn case: (a) the encoding0 0001 111,is used,(b) the probability of the bit error is p 12and,(c) the following majority voting decoding000, 001, 010, 100 000and111, 110, 101, 011 111is used,then the probability of an erroneous decoding (for the case of 2 or 3 errors) is3p 2 (1 p) p 3 3p 2 2p 3 pprof. Jozef GruskaIV0541. Basics of coding theory25/66

EXAMPLE: Coding of a path avoiding an enemy territoryStory Alice and Bob share an identical map (Fig. 1) gridded as shown in Fig.1. OnlyAlice knows the route through which Bob can reach her avoiding the enemy territory.Alice wants to send Bob the following information about the safe route he should take.NNWNNWWSSWWNNNNWWNAliceHQThree ways to encode the safe route from Bob toAlice are:1NC 1 {N 00, W 01, S 11, E 10}In such a case any error in the code word000001000001011111010100000000010100would be a disaster.2x BobFig. 1C 2 {000, 011, 101, 110}A single error in encoding each of symbols N, W, S, E can be detected.3C 3 {00000, 01101, 10110, 11011}A single error in decoding each of symbols N, W, S, E can be corrected.prof. Jozef GruskaIV0541. Basics of coding theory26/66

BASIC TERMINOLOGYDatawords - words of a messageCodewords - words of some code.Block code - a code with all codewords of the same length.Basic assumptions about channels1Code length preservation. Each output word of a channel has the same length asthe input codeword.2Independence of errors. The probability of any one symbol being affected by anerror in transmissions is the same.Basic strategy for decodingFor decoding we use the so-called maximal likelihood principle, or nearest neighbordecoding strategy, or majority voting decoding strategy which says thatthe receiver should decode a received word w’asthe codeword w that is the closest one to w’.prof. Jozef GruskaIV0541. Basics of coding theory27/66

HAMMING DISTANCEThe intuitive concept of “closeness“ of two words is well formalized through Hammingdistance h(x, y ) of words x, y . For two words x, yh(x, y) the number of symbols in which the words x and y differ.Example:h(10101, 01100) 3,h(fourth, eighth) 4Properties of Hamming distanceh(x, y ) 0 x yh(x, y ) h(y , x)3 h(x, z) h(x, y ) h(y , z) triangle inequalityAn important parameter of codes C is their minimal distance.12h(C ) min{h(x, y ) x, y C , x 6 y },Therefore, h(C ) is the smallest number of errors that can change one codeword intoanother.Basic error correcting theorem1 A code C can detect up to s errors if h(C ) s 1.2 A code C can correct up to t errors if h(C ) 2t 1.Proof (1) Trivial. (2) Suppose h(C ) 2t 1. Let a codeword x is transmitted and aword y is received with h(x, y ) t. If x 0 6 x is any codeword, then h(y , x 0 ) t 1because otherwise h(y , x 0 ) t 1 and therefore h(x, x 0 ) h(x, y ) h(y , x 0 ) 2t 1what contradicts the assumption h(C ) 2t 1.prof. Jozef GruskaIV0541. Basics of coding theory28/66

BINARY SYMMETRIC CHANNELConsider a transition of binary symbols such that each symbol has probability of errorp 12 .01-p0pBinary symmetric channelp111-pIf n symbols are transmitted, then the probability of t errors is p t (1 p)n t ntIn the case of binary symmetric channels, the ”nearest neighbour decoding strategy” isalso ”maximum likelihood decoding strategy”.prof. Jozef GruskaIV0541. Basics of coding theory29/66

POWER of PARITY BITSExample Let all 211 of binary words of length 11 be codewordsand let the probability of a bit error be p 10 8 .Let bits be transmitted at the rate 107 bits per second.The probability that a word is transmitted incorrectly is approximately11p(1 p)10 11.10871110Therefore 108 · 11 0.1 of words per second are transmitted incorrectly.Therefore, one wrong word is transmitted every 10 seconds, 360 erroneous words everyhour and 8640 words every day without being detected!Let now one parity bit be added.Any single error can be detected!!!The probability of at least two errors is: 1 (1 p)12 12(1 p)11 p 12(1 p)10 p 2 10661627Therefore, approximately 106616 · 10 5.5 · 10 9 words per second are transmitted with an12undetectable error.109Corollary One undetected error occurs only once every 2000 days! (2000 5.5 86400).prof. Jozef GruskaIV0541. Basics of coding theory30/66

TWO-DIMENSIONAL PARITY CODEThe two-dimensional parity code arranges the data into a two-dimensional array andthen to each row (column) parity bit is attached.Example Binary string10001011000100101111is represented and encoded as follows10000111010100011100 010110111101010000111011100000Question How much better is two-dimensional encoding than one-dimensional encoding?prof. Jozef GruskaIV0541. Basics of coding theory31/66

NOTATIONS and EXAMPLESNotation: An (n, M, d)-code C is a code such thatn - is the length of codewords.M - is the number of codewords.d - is the minimum distance in C .Example:C 1 {00, 01, 10, 11} is a (2,4,1)-code.C 2 {000, 011, 101, 110} is a (3,4,2)-code.C 3 {00000, 01101, 10110, 11011} is a (5,4,3)-code.Comment: A good (n, M, d)-code has small n, large M and also large d.prof. Jozef GruskaIV0541. Basics of coding theory32/66

EXAMPLES from DEEP SPACE TRAVELSExamples (Transmission of photographs from the deep space)In 1965-69 Mariner 4-5 probes took the first photographs of another planet - 22photos. Each photo was divided into 200 200 elementary squares - pixels. Eachpixel was assigned 6 bits representing 64 levels of brightness. and so calledHadamard code was used.Transmission rate was 8.3 bits per second.In 1970-72 Mariners 6-8 took such photographs that each picture was broken into700 832 squares. So called Reed-Muller (32,64,16) code was used.Transmission rate was 16200 bits per second. (Much better quality pictures could bereceived)prof. Jozef GruskaIV0541. Basics of coding theory33/66

HADAMARD CODEIn Mariner 5, 6-bit pixels were encoded using 32-bit long Hadamard code that couldcorrect up to 7 errors.Hadamard code has 64 codewords. 32 of them are represented by the 32 32 matrixH {hIJ }, where 0 i, j 31 andhij ( 1)a0 b0 a1 b1 . a4 b4where i and j have binary representationsi a4 a3 a2 a1 a0 , j b4 b3 b2 b1 b0The remaining 32 codewords are represented by the matrix H.Decoding was quite simple.prof. Jozef GruskaIV0541. Basics of coding theory34/66

CODES RATESFor q-nary (n, M, d)-code C we define the code rate, or information rate, RC , byRC lgq M.nThe code rate represents the ratio of the number of needed input data symbols to thenumber of transmitted code symbols.If a q-nary code has code rate R, then we say that it transmits R q-symbols per a channeluse - or R is a number of bits per a channel use (bpc) - in the case of binary alphabet.Code rate (6/32 for Hadamard code), is an important parameter for realimplementations, because it shows what fraction of the communication bandwidth isbeing used to transmit actual data.prof. Jozef GruskaIV0541. Basics of coding theory35/66

The ISBN-code IEach book till 1.1.2007 had International Standard Book Number which was a 10-digitcodeword produced by the publisher with the following structure:lpmw x1 . . . x10languagepublishernumberweighted check sum0077095030such thatP10i 1 (11 i)xi 0 (mod11)The publisher has to put x10 X if x10 is to be 10.The ISBN code was designed to detect: (a) any single error (b) any double error createdby a transpositionSingle error detectionLet X x1 . . . x10 be a correct code and letY x1 . . . xj 1 yj xj 1 . . . x10 with yj xj a, a 6 0In such a case:P10i 1 (11prof. Jozef Gruska i)yi P10i 1 (11IV054 i)xi (11 j)a 6 0 (mod11)1. Basics of coding theory36/66

The ISBN-code IITransposition detectionLet xj and xk be exchanged.P10i 1 (11 i)yi P10i 1 (11 i)xi (k j)xj (j k)xk (k j)(xj xk ) 6 0 (mod11)if k 6 j and xj 6 xk .prof. Jozef GruskaIV0541. Basics of coding theory37/66

New ISBN codeStarting 1.1.2007 instead of 10-digit ISBN code a 13-digitISBN code is being used.New ISBN number can be obtained from the old one by precedingthe old code with three digits 978.For details about 13-digit ISBN seehttp://www.en.wikipedia.org/Wiki/International Standard Book Numberprof. Jozef GruskaIV0541. Basics of coding theory38/66

EQUIVALENCE of CODESDefinition Two q-ary codes are called equivalent if one can be obtained from the other bya combination of operations of the following type:(a) a permutation of the positions of the code.(b) a permutation of symbols appearing in a fixed position.Question: Let a code be displayed as an M n matrix. To what correspond operations(a) and (b)?Claim: Distances between codewords are unchanged by operations (a), (b).Consequently, equivalent codes have the same parameters (n,M,d) (and correct the samenumber of errors). 0 01 10101Examples of equivalent codes 0 0 1 0 0 0 0 0 1 1(1)1 1 1 1 1 1 1 0 0 0 0 0 0 0 0 0 0 1 0 11(2) 1 1 11 1 0 2 2 22 0 1 1120 2 0 1Lemma Any q-ary (n, M, d)-code over an alphabet {0, 1, . . . , q 1} is equivalent to an(n, M, d)-code which contains the all-zero codeword 00 . . . 0.prof. JozefGruskaIV054 1. Basics of coding theory39/66ProofTrivial.

THE MAIN CODING THEORY PROBLEMA good (n, M, d)-code should have a small n, large M and large d.The main coding theory problem is to optimize one of the parameters n, M, d for givenvalues of the other two.Notation: Aq (n, d) is the largest M such that there is an q-nary (n, M, d)-code.Theorem(a) Aq (n, 1) q n ;(b) Aq (n, n) q.Proof(a) First claim is obvious;(b) Let C be an q-nary (n, M, n)-code. Any two distinct codewords of C have to differ inall n positions. Hence symbols in any fixed position of M codewords have to bedifferent. Therefore Aq (n, n) q. Since the q-nary repetition code is(n, q, n)-code, we get Aq (n, n) q.prof. Jozef GruskaIV0541. Basics of coding theory40/66

EXAMPLEExample Proof that A2 (5, 3) 4.(a) Code C3 , page (?), is a (5, 4, 3)-code, hence A2 (5, 3) 4.(b) Let C be a (5, M, 3)-code with M 5.By previous lemma we can assume that 00000 C .C has to contain at most one codeword with at least four 1’s. (otherwised(x, y ) 2 for two such codewords x, y )Since 00000 C , there can be no codeword in C with at most one or two 1.Since d 3, C cannot contain three codewords with three 1’s.Since M 4, there have to be in C two codewords with three 1’s. (say 11100,00111), the only possible codeword with four or five 1’s is then 11011.prof. Jozef GruskaIV0541. Basics of coding theory41/66

DESIGN of ONE CODE from ANOTHER ONETheorem Suppose d is odd. Then a binary (n, M, d)-code exists iff a binary(n 1, M, d 1)-code exists.Proof Only if case: Let C be a binary (n, M, d) code. Let PnC 0 x1 . . . xn xn 1 x1 . . . xn C , xn 1 i 1 xi mod 2Since parity of all codewords in C 0 is even, d(x 0 , y 0 ) is even for allx 0, y 0 C 0.Hence d(C 0 ) is even. Since d d(C 0 ) d 1 and d is odd,d(C 0 ) d 1.Hence C 0 is an (n 1, M, d 1)-code.If case: Let D be an (n 1, M, d 1)-code. Choose code words x, y of D such thatd(x, y ) d 1.Find a position in which x, y differ and delete this position from all codewords of D.Resulting code is an (n, M, d)-code.prof. Jozef GruskaIV0541. Basics of coding theory42/66

A COROLLARYCorollary:If d is odd, then A2 (n, d) A2 (n 1, d 1).If d is even, then A2 (n, d) A2 (n 1, d 1).ExampleA2 (5, 3) 4 A2 (6, 4) 4(5, 4, 3)-code (6, 4, 4)-code0011prof. Jozef Gruska01010110IV05400110101by adding check.1. Basics of coding theory43/66

A SPHERE and its VOLUMENotation Fqn - is a set of all words of length n over the alphabet {0, 1, 2, . . . , q 1}Definition For any codeword u Fqn and any integer r 0 the sphere of radius r andcentre u is denoted byS(u, r ) {v Fqn h(u, v ) r }.Theorem A sphere of radius r in Fqn , 0 r n contains n n1 (q 1) n2 (q 1)2 . . . 0nr (q 1)rwords.Proof Let u be a fixed word in Fqn . The number of words that differ from u in mpositions is n(q 1)m .mprof. Jozef GruskaIV0541. Basics of coding theory44/66

GENERAL UPPER BOUNDS on CODE PARAMETERSTheorem (The sphere-packing (or Hamming) bound)If C is a q-nary (n, M, 2t 1)-code, thenM n 0 n1 (q 1) . . . nt (q 1)t q n(1)Proof Since minimal distance of the code C is 2t 1, any two spheres of radius t centredon distinct codewords have no codeword in common. Hence the total number of words inM spheres of radius t centred on M codewords is given by the left side in (1). Thisnumber has to be less or equal to q n .A code which achieves the sphere-packing bound from (1), i.e. such a code that equalityholds in (1), is called a perfect code.Singleton bound: If C is an q-ary (n, M, d) code, thenM q n d 1prof. Jozef GruskaIV0541. Basics of coding theory45/66

A GENERAL UPPER BOUND on Aq (n, d)Example An (7, M, 3)-code is perfect ifM70 71 27i.e. M 16An example of such a code:C 4 {0000000, 1111111, 1000101, 1100010, 0110001, 1011000, 0101100,0010110, 0001011, 0111010, 0011101, 1001110, 0100111, 1010011, 1101001, 1110100}Table of A2 (n, d) from 1981n5678910111213141516d 34816204072-79144-158256512102420482560-3276d 52224612243264128256256-340d 72222448163236-37For current best results see http://www.codetables.deprof. Jozef GruskaIV0541. Basics of coding theory46/66

LOWER BOUND for Aq (n, d)The following lower bound for Aq (n, d) is known as Gilbert-Varshamov bound:Theorem Given d n, there exists a q-ary (n, M, d)-code withM qnPd 1 n(q 1)j(j 0j)and thereforeAq (n, d) prof. Jozef GruskaIV054qnPd 1 n(q 1)jj 0 ( j )1. Basics of coding theory47/66

ERROR DETECTIONError detection is much more modest aim than error correction.Error detection is suitable in the cases that channel is so good that probability of an erroris small and if an error is detected, the receiver can ask the sender to renew thetransmission.For example, two main requirements for many telegraphy codes used to be:Any two codewords had to have distance at least 2;No codeword could be obtained from another codeword by transposition of twoadjacent letters.prof. Jozef GruskaIV0541. Basics of coding theory48/66

PICTURES of SATURN TAKEN by VOYAGERPictures of Saturn taken by Voyager, in 1980, had 800 800 pixels with 8 levels of brightness.Since pictures were in color, each picture was transmittedthree times; each time through different color filter. Thefull color picture was represented by3 800 800 8 13360000 bits.To transmit pictures Voyager used the so called Golaycode G24 .prof. Jozef GruskaIV0541. Basics of coding theory49/66

GENERAL CODING PROBLEMImportant problems of information theory are how to define formally such concepts asinformation and how to store or transmit information efficiently.Let X be a random variable (source) which takes any value x with probability p(x). Theentropy of X is defined byPS(X ) x p(x)lg p(x)and it is considered to be the information content of X.In a special case, of a binary variable X which takes on the value 1 with probability p andthe value 0 with probability 1 p, then the information content of X is:S(X ) H(p) p lg p (1 p)lg (1 p)1Problem: What is the minimal number of bits needed to transmit n values of X ?Basic idea: Encode more (less) probable outputs of X by shorter (longer) binary words.Example (Moorse code - 1838)a .b -. c -.-. d -.e.f .-. g –.h .i .j .— k -.- l .-. m –n -.o — p .–. q –.r .-.s .tu .v .- w .– x -.- y -.– z –.1Notation lg (ln) [log] will be used for binary, natural and decimal logarithms.prof. Jozef GruskaIV0541. Basics of coding theory50/66

Samuel Moorseprof. Jozef GruskaIV0541. Basics of coding theory51/66

SHANNON’s NOISELESS CODING THEOREMShannon’s noiseless coding theorem says that in order to transmit n values of X, we need,and it is sufficient, to use nS(X ) bits.More exactly, we cannot do better than the bound nS(X ) says, and we can reach thebound nS(X ) as close as desirable.Example: Let a source X produce the value 1 with probability p and the value 0 with probability 1 p 3414Assume we want to encode blocks of the outputs of X of length 4.By Shannon’s theorem we need 4H( 14 ) 3.245 bits per blocks (in average)A simple and practical method knownper a 4-bit message.mess. codemess. 01111000 01111111000as Huffman code requires in this case 3.273 erve that this is a prefix code - no codeword is a prefix of another codeword.prof. Jozef GruskaIV0541. Basics of coding theory52/66

DESIGN of HUFFMAN CODE IIGiven a sequence of n objects, x1 , . . . , xn with probabilities p1 . . . pn .Stage 1 - shrinking of the sequence.Replace xn 1 , xn with a new object yn 1 with probability pn 1 pn and rearrangesequence so one has again non-increasing probabilities.Keep doing the above step till the sequence shrinks to two .10.03.04.02Stage 2 - extending the code - Apply again and again the following method.If C {c1 , . . . , cr } is a prefix optimal code for a source Sr , then C 0 {c10 , . . . , cr0 1 } isan optimal code for Sr 1 , whereci0 ci1 i r 1cr0 cr 1cr0 1 cr 0.prof. Jozef GruskaIV0541. Basics of coding theory53/66

DESIGN of HUFFMAN CODE IIStage 2 Apply again and again the following method:If C {c1 , . . . , cr } is a prefix optimal code for a source Sr , then C 0 {c10 , . . . , cr0 1 } isan optimal code for Sr 1 , whereci0 ci1 i r 1cr0 cr 1cr0 1 cr 0.0.15 - 0110.5 - 10.28 - 010.13 - 0100.22 - 000.12 - 0010.5 - 00.04 - 010100.08 - 01010.04 - 010110.05 - 01000.03 - 010010.02 - 010000.1 - 0001 .50.50.50.50.50011 .15.15.15.15.22001 .12.12.12.13.15000 .10.10.10.12 1 .1301011 .0401010 .04.05.04.08 1 .10 0.050101001 .03.04 0101000 .020prof. Jozef GruskaIV054.50101. Basics of coding theory.50 1.28 1 .50 0.22 054/66

A BIT OF HISTORY IThe subject of error-correcting codes arose originally as a response to practical problemsin the reliable communication of digitally encoded information.The discipline was initiated in the paperClaude Shannon: A mathematical theory of communication, Bell Syst.Tech. JournalV27, 1948, 379-423, 623-656Shannon’s paper started the scientific discipline information theory and error-correctingcodes are its part.Originally, information theory was a part of electrical engineering. Nowadays, it is animportant part of mathematics and a

CONTENTS 1 Basics of coding theory 2 Linear codes 3 Cyclic, convolution and Turbo codes - list decoding 4 Secret-key cryptosystems 5 Public-key cryptosystems, I. Key exchange, knapsack, RSA 6 Public-key cryptosystems, II. Other cryptosystems, security, PRG, hash functions 7 Digital signatures 8 Elliptic curves cryptography and factorization 9 Identi cation, authentication, privacy, secret .