Transcription

CODING THEORYa first courseHenk C.A. van Tilborg

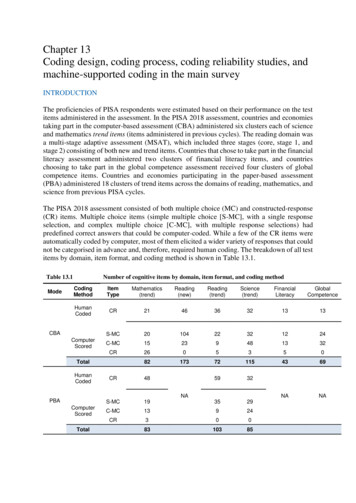

ContentsContentsiPrefaceiv1A communication system11.11.21.31.4IntroductionThe channelShannon theory and codesProblems11372Linear block codes92.12.22.32.42.5Block codesLinear codesThe MacWilliams relationsLinear unequal error protection codesProblems9121922253Some code constructions313.13.23.33.4Making codes from other codesThe Hamming codesReed-Muller codesProblems31343642ii

4Cyclic codes and Goppa codes454.14.24.34.44.54.64.7Introduction; equivalent descriptionsBCH codes and Reed-Solomon codesThe Golay codesGoppa codesA decoding algorithmGeometric Goppa codesProblems455256576066785Burst correcting codes835.15.25.35.45.55.6Introduction; two boundsTwo standard techniquesFire codesArray codeOptimum, cyclic, b-burst-correcting codesProblems8385879094976Convolutional codes996.16.26.36.46.56.6An example(n, k)-convolutional codesThe Viterbi decoding algorithmThe fundamental path enumeratorCombined coding and modulationProblems99100105107111114AFinite fields117BTables of GF (2m ), m 3, 4, 5, 6143iii

CSolutions to the 8154161167171176ivtototototototoChapter 1Chapter 2Chapter 3Chapter 4Chapter 5Chapter 6Appendix ABibliography183Index186

PrefaceAs the title of this book already suggests, this manuscript is intended to be a textbooksuitable for a first course in coding theory. It is based on a course that is taught forseveral years at the Eindhoven University of Technology. The students that follow thiscourse are mostly in the third or fourth year of their undergraduate program. Typically,half of them are computer science students, a third of them study mathematics and theremainder are students in electrical engineering or information technology.All these students are familiar with linear algebra and have at least a rudimentaryknowledge of probability theory. More importantly, it is assumed here that they arefamiliar with the theory of finite fields and with elementary number theory. Clearly thelatter is not the case at many universities. It is for this reason that a large appendixhas been added (Appendix A), containing all the necessary prerequisites with regard tofinite field theory and elementary number theory.All chapters contain exercises that we urge the students to solve. Working at theseproblems seems to be the only way to master this field. As a service to the student thatis stranded with a problem or to give a student a chance to look at a (possibly different)solution, all problems are completely worked out in Appendix C.The main part of this manuscript was written at the University of Pretoria in the summerof 1991. I gladly acknowledge the hospitality that Gideon Kühn and Walter Penzhornextended to me during my stay there.I would like to express my gratitude to Patrick Bours, Martin van Dijk, Tor Helleseth,Christoph Kirfel, Dirk Kleima, Jack van Lint, Paul van der Moolen, Karel Post, HansSterk, René Struik, Hans van Tilburg, Rob Versseput, Evert van de Vrie, Øyvind Ytrehusand many of the students for all the corrections and suggestions that they have givenme.A special word of thanks I owe to Iwan Duursma who made it possible to include at avery late stage a section on algebraic-geometry codes (he presented me a first draft forSection 4.6). Finally, I am indebted to Anneliese Vermeulen-Adolfs for her instrumentalhelp in making this manuscript publisher-ready.v

Eindhoventhe NetherlandsApril 3, 1993viHenk van Tilborg

Chapter 1A communication system1.1IntroductionCommunicating information from one person to another is of course an activity that isas old as mankind. The (mathematical) theory of the underlying principles is not so old.It started in 1948, when C.E. Shannon gave a formal description of a communicationsystem and, at the same time, also introduced a beautiful theory about the concept ofinformation, including a good measure for the amount of information in a message.In the context of this book, there will always be two parties involved in the transmissionof information. The sender of the message(s) (also called the source) and the receiver(also called the sink). In some applications the sender will write information on a medium(like a floppy disc) and the receiver will read it out later. In other applications, the senderwill actually transmit the information (for instance by satellite or telephone line) to thereceiver. Either way, the receiver will not always receive the same information as wassent originally, simply because the medium is not always perfect. This medium will bediscussed in the next section. In Section 1.3 we will discuss Shannon’s answer to theproblem of transmission errors.1.2The channelThe medium over which information is sent, together with its characteristics, is calledthe channel. These characteristics consist of an input alphabet X, an output alphabetY, and a transition probability function P .Unless explicitly stated otherwise, it will always be assumed that successive transmissionshave the same transition probability function and are independent of each other. Inparticular, the channel is assumed to be memoryless.1

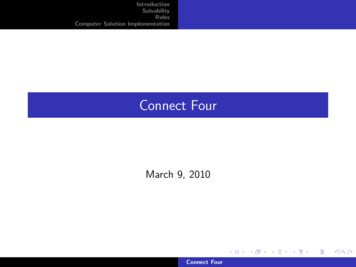

CHAPTER 1. A COMMUNICATION SYSTEM1 p 11 PPPP p PP p PPPP PP 0 0 1 pFigure 1.1: The binary symmetric channelDefinition 1.2.1 A channel (X, Y ; P ) consists of an alphabet X of input symbols, analphabet Y of output symbols, and for each x in X and y in Y the conditional probability p(y x) that symbol y is received, when symbol x was transmitted (this probability isindependent of previous and later transmissions).Of coursePy Yp(y x) 1 for all x in X.The channel that we shall be studying the most will be the Binary Symmetric Channel,shortened to BSC. It is depicted in Figure 1.1.Definition 1.2.2 The Binary Symmetric Channel is the channel (X, Y ; P ) with bothX and Y equal to {0, 1} and P given by p(1 0) p(0 1) p and p(0 0) p(1 1) 1 pfor some 0 p 1.So, with probability 1 p a transmitted symbol will be received correctly and withprobability p it will be received incorrectly. In the latter case, one says that an error hasoccurred.Of course, if p 21 the receiver gets a more reliable channel by inverting the receivedsymbols. For this reason we shall always assume that 0 p 12 .The BSC gives a fair description of the channel in many applications. A straightforwardgeneralization is the q-ary symmetric channel. It is defined by X Y, both of cardinalityq, and the transition probabilities p(y x) 1 p, if x y, and p(y x) p/(q 1), ifx 6 y.Another type of channel is the Gaussian channel, given by X { 1, 1}, Y IR and theprobability density function p(y x) which is the Gaussian distribution with x as meanand with some given variance, depending on the reliability of the channel, i.e.p(y x) 122e (y x) /2σ .2πσIf the actual channel is Gaussian, one can reduce it to the BSC, by replacing every y 0by 1 and every y 0 by 0, and by writing 0 instead of the the input symbol 1. Bydoing this, one loses information about the reliability of a received symbol. Indeed, thereceived symbol y 1.2 is much more likely to come from the transmitted symbol 1,than the received symbol y 0.01. By reducing the Gaussian channel to the BSC, onethrows away information about the transmitted symbol.2

1.3. SHANNON THEORY AND CODESIn some applications the channel is inherently of the BSC-type and not a reduction ofthe Gaussian channel.Similarly, by dividing IR into three appropriate regions, ( , a], ( a, a) and[a, ), a 0, one obtains the following channel.Definition 1.2.3 The binary symmetric error and erasure channel is a channel(X, Y ; P ) with X {0, 1}, Y {0, , 1}, and P given by p(0 0) p(1 1) 1 p0 p00 ,p(1 0) p(0 1) p0 and p( 0) p( 1) p00 for some non-negative p0 and p00 with0 p0 p00 1.The symbol means that there is too much ambiguity about the transmitted symbol.One speaks of an erasure.In the above channels, the errors and erasures in transmitted symbols occur independently of each other. There are however applications where this is not the case. If theerrors tend to occur in clusters we speak of a burst-channel. Such a cluster of errors iscalled a burst. A more formal definition will be given in Chapter 5. Even a mixture ofthe above error types can occur: random errors and bursts.Unless explicitly stated otherwise, we will always assume the channel to be the BSC.1.3Shannon theory and codesIf one wants to transmit a 1 over the BSC that has error probability p, one can increase thereliability of the transmission by repeating the transmission a few times, say altogetherfive times. Similarly one can send five 0’s if a single 0 needs to be transmitted. Thereceiver can use a simple majority vote on the received sequence y1 , y2 , y3 , y4 , y5 to decidewhat the most likely transmitted symbol is. For instance, if 1,1,0,0,1 is received, the mostlikely transmitted sequence is of course 1,1,1,1,1.With this system, it is still possible that the receiver makes an error, namely if threeor more errors have occurred in a transmitted 5-tuple. If at most two errors occurredduring the transmission, the receiver will make the correct estimate of the transmittedinformation. The probability of correct transmission of the information is given by theprobability that no error occurred, plus the probability that exactly one of the fivecoordinates is in error, plus the probability that exactly two of the five coordinates arein error, so it is given by!!5 15 2(1 p) p (1 p)4 p (1 p)3 .125For p 0.01, this probablity is 0.999986 as opposed to 0.99 when only one symbolwas transmitted. Of course, the price that has been paid for this dramatic increase inreliability is the transmission of five bits instead of just one!3

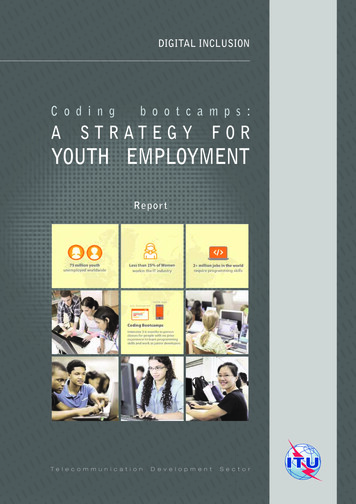

CHAPTER 1. A COMMUNICATION SYSTEMsendera encoderx channely decodera0 receiverFigure 1.2: A communication systemTransmitting more symbols than is strictly necessary to convey the message is calledadding redundancy to the message. Regular languages know the same phenomenon. Thefact that one immediately sees the obvious misprints in a word, like ‘lacomotivf’, meansthat the word contains more letters than are strictly necessary to convey the message.The redundant letters enable us to correct the misspelling of the received word.Definition 1.3.1 An encoder is a mapping (or algorithm) that transforms each sequenceof symbols from the message alphabet A to a sequence of symbols from the input alphabetX of the channel, in such a way that redundancy is added (to protect it better againstchannel errors).A complete decoder is an algorithm that transforms the received sequence of symbolsof the output alphabet Y of the channel into a message stream over A. If a decodersometimes fails to do this (because it is not able to do so or to avoid messages that aretoo unreliable) one speaks of an incomplete decoder.The channel, encoder, and decoder, together with the sender and receiver, form a socalled communication system. See Figure 1.2, where a denotes the message stream, x asequence of letters in X, y a sequence of letters in Y and a0 a message stream.A decoder that always finds the most likely (in terms of the channel probabilities) transmitted message stream a0 , given the received sequence y, is called a maximum likelihooddecoder. The decoder that was described above for the encoder that repeated eachinformation bit five times is an example of a maximum likelihood decoder.In Section 1.2 we have seen how the reduction of the Gaussian channel to the BSC throwsaway (valuable) information. A decoder that uses the output of this (reduced) BSC asits input is called a hard decision decoding algorithm, while it is called a soft decisiondecoding algorithm otherwise.A final distinction is the one between encoders (and decoders) with and without memory.Definition 1.3.2 If the encoder maps k-tuples of symbols from the message alphabet Ain a one-to-one way to n-tuples of symbols from the input alphabet X of the channel(independent of the other input k-tuples), the resulting set of A k output n-tuples iscalled a block code. For the elements of a block code one uses the name codeword.Definition 1.3.3 If the encoder maps k-tuples of symbols from the message alphabetA to n-tuples of symbols from the input alphabet X of the channel in a way that alsodepends on the last m input k-tuples, where m is some fixed parameter, the resulting4

1.3. SHANNON THEORY AND CODESsequence of output n-tuples is called a convolutional code. These output sequences arealso named codewords.For a long stream of message symbols, one of course has to break it up into segments oflength k each before they can be handled by the encoder.Convolutional codes will be discussed in Chapter 6. Block codes form the main topicof this book. They will be extensively discussed in Chapters 2–5. Note that the codediscussed before, in which each message symbol is repeated four times, is an example ofa block code with k 1 and n 5.Let M denote the size of A and let q denote the size of X. If all k-tuples of M -arysymbols are equally likely, one needs dk log2 M e bits to denote one of them. This maynot be so obvious in general, but when M is some power of 2, this is a straightforwardobservation. Similarly for each of q n equally likely n-tuples of q-ary symbols one needsn log2 q bits. Let the information rate R denote the amount of information that an inputsymbol of the channel contains. It follows thatR k log2 M.n log2 qIf q 2, this reduces to R (1.1)k log2 M.nIf q M, equation (1.1) simplifies to R k/n. The interpretation of the information ratein this case corresponds with the intuitive interpretation: if k information symbols aremapped into n channel input symbols from the same alphabet, the information densityin these channel input symbols is k/n.By using block and convolutional codes, the sender wants to get information to thereceiver in a more reliable way than without using the codes. By repeating each information symbol sufficiently many times, one can achieve this and obtain a reliabilityarbitrarily close to 1. However, the price that one pays is the inefficient use of thechannel: the rate of this sequence of codes tends to zero!What Shannon was able to prove in 1948 (see: Shannon, C.E., A mathematical theory ofcommunication, Bell Syst. Tech. J., 27, pp. 379-423, 623-656, 1948) is that, as long asthe rate R is smaller than some quantity C, one can (for sufficiently long block lengthsn) find encodings at rate R, such that the probability of incorrect decoding (when usingmaximum likelihood decoding) can be made arbitrarily small, while this is not possiblefor rates above that quantity! This result forms the foundation of the whole theory oferror-correcting codes.Definition 1.3.4 The entropy function h(p) is defined for 0 p 1 by(h(p) p log2 p (1 p) log2 (1 p),0,if 0 p 1,if p 0 or 1.(1.2)5

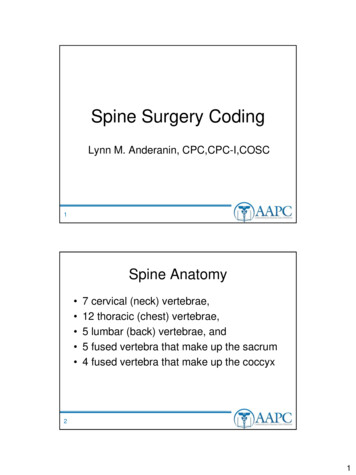

CHAPTER 1. A COMMUNICATION SYSTEMp ppppppppppppppppppppppppppppp p p ppppppppp pp pppppppp ppppppppp ppppppppp pppppp ppppppppppppppppppppppp ppppppppppppppppppppppppppppp0p1 pppppp ppppppppppppppp pppppppppppppp pppppppppppp pppppppp pppppppppppppppppppppppp pppppppp pppp p00.5(b):p1C 1 h(p)Figure 1.3: The entropy function h(p) and the capacity C of the BSCAlthough it will not be further discussed here, Shannon’s information theory makesit possible to interpret h(p) as the uncertainty that the receiver of a specific binarysymbol (transmitted over the BSC with error probability p) still has about the actuallytransmitted symbol. In other words, 1 h(p) is the amount of information that thereceived symbol carries about the transmitted symbol.Theorem 1.3.5 (Shannon) Consider the BSC with error probability p and let C 1 h(p). Then, for each rate R with R C, an infinite sequence of encodings El exists,where El maps binary kl -tuples to binary nl -tuples with kl dRnl e (so El has rate R),such that the corresponding maximum-likelihood decoding algorithms have a probabilityof incorrect decoding that goes exponentially fast to 0 as a function of nl , for l .For rates R greater than C, no encodings can be made with error probabilities tending tozero.One should realize that a decoding algorithm for an infinite class of codes that does notalways yield the most likely transmitted sequence may still have a negligible probablityof incorrect decoding, when the length of these codes tends to infinity.The quantity C in Theorem 1.3.5 is called the capacity of the channel. The entropyfunction and the capacity function are depicted in Figure 1.3.As one can see in Figure 1.3 (b), the BSC with p 1/2 cannot be used to transmit anyinformation. The receiver may as well write down his own sequence of symbols instead oflistening to the channel. At the other extreme, p 0 and p 1 imply that informationcan be sent at rate 1.It is the ultimate goal of coding theory to find (families of) codes that approach thecapacity of the BSC and that have efficient decoding algorithms.6

1.4. PROBLEMS1.4Problems1.4.1 Suppose that four messages are encoded into 000000, 001111, 110011 and 111100.These four messages are transmitted with equal probablity over a BSC with errorprobability p. If one receives a sequence different from the four sequences above,one knows that errors have been made during the transmission.What is the probability that errors have been made during the transmission andthat the receiver will not find out?1.4.2 Suppose that either ( 1, 1, 1) or ( 1, 1, 1) is transmitted (each with probability 1/2) over the Gaussian channel defined by the density function12p(y x) e (y x) /2 .2πWhat is the most likely transmitted sequence when the word ( 1, 0.01, 0.01)is received?What is the answer to this question if a maximum likelihood, hard decision decoding algorithm has been applied?7

CHAPTER 1. A COMMUNICATION SYSTEM8

Chapter 2Linear block codes2.1Block codesIn this chapter, block codes for the q-ary symmetric channel will be introduced. Let nbe fixed and let the input and output symbols of the channel belong to an alphabet Qof cardinality q. The set of Q-ary n-tuples is denoted by Qn .A distance metric on Qn that reflects the properties of the q-ary symmetric channel verywell, is the following.Definition 2.1.1 The Hamming distance d(x, y) between x (x1 , x2 , . . . , xn ) and y (y1 , y2 , . . . , yn ) in Qn is given byd(x, y) {1 i n xi 6 yi } .(2.1)In words: d(x, y) is the number of coordinates, where x and y differ. It follows fromthe properties of the q-ary symmetric channel that the more x and y differ, the moreunlikely one will be received if the other was transmitted.It is very simple to verify that d(x, x) 0, d(x, y) d(y, x) and that the triangleinequality holds: d(x, z) d(x, y) d(y, z) for any x, y and z in Qn . So the Hammingdistance is indeed a distance function.A q-ary block code C of length n is any nonempty subset of Qn . The elements of C arecalled codewords. If C 1, the code is called trivial. Quite often one simply speaks ofa “code” instead of a “block code”.To maximize the error-protection, one needs codewords to have sufficient mutual distance.Definition 2.1.2 The minimum distance d of a non-trivial code C is given byd min{d(x, y) x C, y C, x 6 y}.9(2.2)

CHAPTER 2. LINEAR BLOCK CODESThe error-correcting capability e is defined by %d 1e .2(2.3)The reason for the name error-correcting capability is quite obvious. If d is the minimumdistance of a code C and if during the transmission of the codeword c over the channelat most e errors have been made, the received word r will still be closer to c than to anyother codeword. So a maximum likelihood decoding algorithm applied to r will result inc.However, codes can also be used for error-detection. Let t be some integer, t e. Thenit follows from the definition of d that no word in Qn can be at distance at most t fromone codeword, while at the same time being at distance up to d t 1 from some othercodeword. This means that one can correct up to t errors, but also detect if more thant have occurred, as long as not more than d t 1 errors have occurred.Similarly, a code C with minimum distance d can also be used for the simultaneouscorrection of errors and erasures. Let e and f be fixed such that 2e f d. A receivedword r with at most f erasures cannot have distance e from two different codewordsc1 and c2 at the other n f coordinates, because that would imply that d(c1 , c2 ) 2e f d. It follows that C is e-error, f -erasure-correcting.A different interpretation of Definition 2.1.2 is that spheres of radius e around the codewords are disjoint. Let Br (x) denote the sphere of radius r around x, defined by{y Qn d(y, x) r}. To determine the cardinality of Br (x) we first want to findthe number of words at distance i to x. To find these words, one needs to choose exactlyi of the n coordinatesof x and replace each by one of the other q 1 alphabet symbols. So there are ni (q 1)i words at distance i from x and thus Br (x) rXni 0i!(q 1)i .(2.4)Since all spheres with radius e around the C codewords are disjoint and there are onlyq n distinct words in Qn , we have proved the following theorem.Theorem 2.1.3 (Hamming bound) Let C be an e-error-correcting code. Then C eXni 0i!(q 1)i q n .(2.5)Let d(x, C) denote the distance from x to the code C, so d(x, C) min{d(x, c) c C}.The next notion tells us how far a received word can possibly be removed from a code.Definition 2.1.4 The covering radius ρ of a code C is given byρ max{d(x, C) x Qn }.10(2.6)

2.1. BLOCK CODESSince every word x in Qn is at distance at most ρ to some codeword, say c, it is alsoinside at least one of the spheres of radius ρ around the codewords (namely Bρ (c)). Sothese spheres together cover Qn . This proves the following theorem.Theorem 2.1.5 Let C be a code with covering radius ρ then C ρXnii 0!(q 1)i q n .(2.7)It follows from (2.5) and (2.7) that e ρ for every code. Equality turns out to bepossible!Definition 2.1.6 An e-error-correcting code C with covering radius ρ is called perfectife ρ.A different way of saying that an e-error-correcting code C is perfect is “the spheres withradius e around the codewords cover Qn ” or “every element in Qn is at distance at moste from a unique codeword”.Equations (2.5) and (2.7) imply the following theorem.Theorem 2.1.7 (Sphere packing bound) Let C be an e-error-correcting code. ThenC is perfect if and only if C eXnii 0!(q 1)i q n .(2.8)We have already seen a code that is perfect: the binary code of length 5 with just thetwo codewords (0, 0, 0, 0, 0) and (1, 1, 1, 1, 1). It is a special example of what is called theq-ary repetition code of length n:nC {(c, c, . . . , c) c Q}.z} {This code has minimum distance d n. So, for odd n the code has e (n 1)/2. In thebinary case one can take Q {0, 1} and gets C {0, 1}, where 0 (0, 0, . . . , 0) and1 (1, 1, . . . , 1). It follows that a word with at most (n 1)/2 coordinates equal to 1, isin B(n 1)/2 (0), but not in B(n 1)/2 (1), while a word with at least (n 1)/2 coordinatesequal to 1, is in B(n 1)/2 (1), but not in B(n 1)/2 (0). This means that ρ (n 1)/2 eand that the binary repetition code of odd length is perfect.Instead of stating that C is a code of length n, minimum distance d and cardinality M ,we shall just say that C is a (n, M, d) code.Clearly if one applies the same coordinate permutation to all the codewords of C oneobtains a new code C 0 that has the same parameters as C. The same is true if for eachcoordinate one allows a permutation of the symbols in Q. Codes that can be obtained11

CHAPTER 2. LINEAR BLOCK CODESfrom each other in this way are called equivalent. From a coding theoretic point of viewthey are the same.The rate of a q-ary code C of length n is defined byR logq C .n(2.9)This definition coincides with (1.1), if one really maps q-ary k-tuples into q-ary n-tuples(then R k/n), but it also coincides with (1.1) in general. Indeed, if the encoder in(1.3.1) has an input alphabet of size C and maps each input symbol onto a uniquecodeword (so k 1 and M C ), equation (1.1) reduces to (2.9).Before we end this section, two more bounds will be given.Theorem 2.1.8 (Singleton bound) Let C be a q-ary (n, M, d) code. ThenM q n d 1 .(2.10)Proof: Erase in every codeword the last d 1 coordinates. Because all codewordsoriginally have distance at least d, the new words will still all be distinct. Their lengthis n d 1. However, there are only q n d 1 distinct words of length n d 1 over analphabet of size q.2Codes with parameters (n, q n d 1 , d) (i.e. for which the Singleton bound holds withequality) are called maximum-distance-separable codes or simply MDS codes.Now that we have seen several bounds that give an upper bound on the size of a codein terms of n and d, it is good to know that also lower bounds exist.Theorem 2.1.9 (Gilbert-Varshamov bound) There exist q-ary (n, M, d) codes satisfyingqn.(2.11)M Pd 1 n i(q 1)i 0 iProof: As long as a q-ary (n, M, d) code C satisfies M Pd 1qnthe spheres with(ni)(q 1)iradius d 1 around the words in C will not cover the set of all q-ary n-tuples, so onecan add a word to C that has distance at least d to all elements of C.i 022.2Linear codesIf one assumes the alphabet Q and the code C to have some internal structure, one canconstruct and analyze codes much more easily than in the absence of such structures.12

2.2. LINEAR CODESFrom now on Q will always have the structure of the Galois field GF (q), the finite fieldwith q elements (for the reader who is not familiar with finite fields Appendix A isincluded). As a consequence q pm for some prime p. Very often q will simply be 2.Now that Q GF (q), we can associate the set of words Qn with an n-dimensional vectorspace over GF (q). It will be denoted by Vn (q). For Vn (2) we shall simply write Vn . Theelements in Vn (q) are of course vectors, but will occasionally still be called words. Thesymbols denoting vectors will be underlined.If a codeword c has been transmitted but the vector r is received, the error pattern,caused by the channel, is the vector e withr c e.(2.12)The addition in (2.12) denotes the vector addition in Vn (q). The number of errors thatoccurred during the transmission is the number of non-zero entries in e.Definition 2.2.1 The Hamming weight w(x) of a vector x is the number of non-zerocoordinates in x. Sow(x) d(x, 0).Now that Qn has the structure of the vector space Vn (q), we can define the most important general class of codes.Definition 2.2.2 A linear code C of length n is any linear subspace of Vn (q).If C has dimension k and minimum distance d, one says that C is an [n, k, d] code.Note that a q-ary (n, M, d) code C has cardinality M, while a q-ary [n, k, d] code C islinear and has cardinality q k . The parameter d in the notations (n, M, d) and [n, k, d] issometimes omitted.To determine the minimum distance of an unstructured (n, M, d) code C one has toMcompute the distance between all 2 pairs of codewords. For linear codes withoutfurther structure, the next theorem will prove that this complexity is only M 1. Thisis the first bonus of the special structure of linear codes.Theorem 2.2.3 The minimum distance of a linear code C is equal to the minimumnon-zero weight in C.Proof: Since C is linear, with x and y in C also x y is in C. The theorem now followsfrom the two observations:d(x, y) d(x y, 0) w(x y),w(x) d(x, 0),which state that the distance between any two distinct codewords is equal to the weightof some non-zero codeword and vice versa.13

CHAPTER 2. LINEAR BLOCK CODES2So, to determine the minimum distance of a linear code, one never needs to do morethan to find the lowest weight of all M 1 non-zero codewords.There are two standard ways of describing a k-dimensional linear subspace: one by meansof k independent basis vectors, the other uses n k linearly independent equations. Bothtechniques turn out to be quite powerful in this context.Definition 2.2.4 A generator matrix G of an [n, k, d] code C is a k n matrix, of whichthe k rows form a basis of C. One says “the rows of G generate C”.It follows thatC {aG a Vk (q)}(2.13)The codeword c aG in (2.13) is the result of the encoding algorithm “multiplicationby G” applied to the so-called information vector or message vector a.Example 2.2.5 A generator matrix of the q-ary [n, 1, n] repetition code is given byG (1 1 · · · · · · 1).Example 2.2.6 The binary even weight code is defined as the set of all words of evenweight. It is a linear code with parameters [n, n 1, 2]. A generator matrix of the evenweight code is given by G 100.0010.0001.0··· ··· 0··· ··· 0··· ··· 0. . . .··· ··· 1111.1 . Examples 2.2.5 and 2.2.6 are also examples of what is called a generator matrix instandard form, i.e. a generator matrix of the form (Ik P ), where Ik is the k k identitymatrix. If G is in standard form, the first k coordinates of a codeword aG producethe information vector a itself! For this reason these coordinates are called informationsymbols. The last n k coordinates are added to the k information symbols to makeerror-correction possible.Since the generator matrix of a linear code has full row rank, it is quite obvious that anylinear code is equivalent to a linear code that does have a generator matrix in standardform. The quantity r n k is called the redundancy of the code.Non-linear codes of cardinality q k sometimes also have the property that on the firstk coordinates all q k possible (information) sequences occur. These codes are calledsystematic on the first k coordinates. It follows from the proof of the Singleton

5.5 Optimum, cyclic, b-burst-correcting codes 94 5.6 Problems 97 6 Convolutional codes 99 6.1 An example 99 6.2 (n,k)-convolutional codes 100 6.3 The Viterbi decoding algorithm 105 . 1.2 The channel The medium over which information is sent, together with its characteristics, is called