
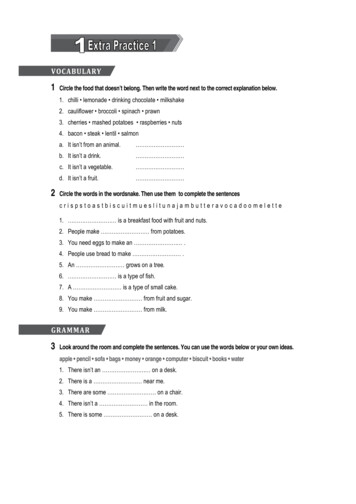

Is There No There There? Video Conferencing Software as a Performance Medium

Scot Gresham-Lancaster
Cogswell Polytechnical College
1175 Bordeaux Drive
Sunnyvale, CA
01 (408) 541-0100
slancaster@cogswell.edu

ABSTRACT
This paper surveys past performances in which the author collaborated with several other dancers, musicians, and media artists to present synchronized co-located performances at two or more sites. This work grew out of the author's participation in the landmark computer music ensemble, “The HUB”. Each of the various performances was made possible by an evolving array of video conferencing hardware and software. These will be discussed. The problems and interesting side effects presented by latency and dropouts are a unique part of this performance practice. Leveraging the concepts of shared space, video and audio feedback generate evolving forms created by the combinations of the space, the sounds and the movements of the participants. The ubiquity of broadband Internet connections and the integration and constant improvement of video conferencing software in modern operating systems make this unique mode of performance an essential area of research and development in new media performance.

Keywords: videoconference, improvisation, live performance, video audio codec, network latency

1. Introduction
What happens to the concept of performance when it becomes instantaneous and distributed? There is no “now” in this new context. There is a shared delay. The degradation of signals between spaces and the dimensional flattening created by video and audio compression do not deter the imagination of the participants. The mind-opening spectacle of real time being distorted in time and space is an especially rich new contribution to the types of performance possible.

Antiphony is defined as: “Music exploiting directional and canonic opposition of widely spaced choirs or groups of instruments to create perspectives in sound.” [1] It has always been a part of the vocabulary of musical performance. Perhaps the iconic St. Mark’s in Venice in the early sixteenth century is emblematic of the introduction of these factors into the western musical canon. The center of Venetian music was St. Mark's cathedral itself. Built in the manner of eastern basilicas, it had 2 choir lofts with 2 organs that came to define Venetian music.

Through the mass media we have experienced a new “antiphony”. Broadcast television has given us a familiarity with the idea of instantaneous communication from multiple locations. The crackling delayed lunar voice of Neil Armstrong’s “That's one small step for [a] man, one giant leap for mankind” [2] comes to mind as an extreme example of this. The crackling distortion of this message enhanced our belief in its reality.

2. Computer Network Music
From the mid 1970’s until the present there has been an ongoing area of music research and performance that involves the use of the network and software programs to create a dynamic new music based on a web of messages passed between networks of various types [3]. The author’s participation in the computer network performance ensemble the HUB was a factor in thinking through the future of the use of the network as a component in new formal organization for music performance [4]. Relevant to the focus of this paper is the realization that the most interesting work done in this context was in the local network pieces. The geographically separated pieces done near the end of the 1990’s seemed not to contribute to the richness of the ideas and seemed more like a gimmick that didn’t add to the advancement of the art.

3. Videoconferencing breakthroughs enable a new style of working
In the time frame of the last five years the technology has finally offered the ability to “broadcast” bidirectional video and audio using the Internet as the transport layer. What before was only available to major networks or over local cable access became possible for anyone with Internet access of sufficient bandwidth and the means to sufficiently compress the video and analog signals for transmission as data packets. These capabilities were associated with videoconferencing and intended to let users treat the net as a video telephone, primarily for distance meetings in the enterprise sector.

In the last 5 years there have been major advances in both the bandwidth of the network infrastructure and the signal compression capabilities of desktop computers. The initial work could only be done in the context of university networks and using very expensive hardware video and audio compression codecs. Today we are running rehearsals from home with our own personal computers and software codecs that are integrated into the operating system. We are often connected over WIFI and can certainly use the available bandwidth of residential DSL or Cable for the Internet transport layer.

From the outset of our initial work there were specific technical questions regarding the relationship of the remote dancers to the impenetrable wall of the video projection and the effect of delay on the rhythmic structure of the music in each space. In the construction of all these pieces we have had to deal with the inherent latency of long-distance Internet use. As normal users of the Internet, we have never had a guarantee of “QOS”, or quality of service, and were therefore constantly faced with latencies and dropouts that became characteristic of this sort of performance. It has been gratifying to witness a steady improvement in signal quality and a shortening of the delays that can be very problematic in performance.

Anyone interested in pursuing this form of performance may think that streaming server technology would be a good alternative; however, there are some unforeseen problems. “Live” streaming provided by Windows Media, Icecast, Real Networks, Darwin Streaming Server, and QuickTime Streaming Server all substantially buffer the signal before they stream it. Often these buffers are a minute or longer. The very least I have been able to achieve is a seven-second delay. While it is interesting to shift what is perceived as “now” between both sites by 14 seconds, in the performance of music it becomes practically unusable in a multi-site interactive context. The technology is evolving rapidly and this problem could become a part of the legacy, but as of this writing it remains a factor and makes this streaming server technology unusable.

The most interesting aspect of this work is that it points to a new form of performance that was not present in the purely electronic work of the computer music network. The use of audio and the close integration of movement and music at remote sites create a sense of shared place that was never part of the earlier computer music network work.

4. A short historical record of performances using video conferencing technology

4.1 "incubator:how2gather" at HyperWerk in Basel, Switzerland and CSUHayward, USA with William Thibault, Scot Gresham-Lancaster, Iren Schwatz, Kathryn Gresham-Lancaster, Sam Ashley (June 2000) [5]

This piece used a unique combination of hardware and software to optimize for the latency still present in the network at that time. We used Linux-based machines running VIC and RAT [6] for the videoconferencing channels, but during the performance only the audio from California was sent to Switzerland. We used the OSC [7] protocol and the Max otudp external developed by Matt Wright at CNMAT in Berkeley to communicate MIDI from William Thibault’s video motion detection software “Grabbo” [8]. This software is based on pattern matching of a histogram of the polar extraction of vectors created by a given video frame. Features include:

1.) MIDI output to internal synth or MIDI port
2.) Image Matching: displays the closest match, and outputs its index.
3.) Interpolation: keyframe images are associated with 3D positions, and incoming video images compared to keyframes to compute interpolated 3D positions.
4.) Real-time computer vision

The advantage of using Thibault’s software was that, unlike most video-to-MIDI conversion software, which was “zone” based, this software mapped MIDI to the specific image in the frame, which could be matched to the dancer’s position. This gave the dancer a motion vocabulary that could drive the musical structures at a level of abstraction that was directly tied to the dancer’s gesture vocabulary. We also introduced text fragments into the performance that were modified by a combination of the accumulation of the dancer’s repetitions and the average “ping” delays we were experiencing over the network.

4.2 "Calpurnia's Dream Obscured by Movement", part of Cultivating Communities: Dance in the Digital Age, Internet2's Fall Member Meeting, at USC's Bing Theatre and CSUHayward (Oct. 2002) [9]

Scot Gresham-Lancaster, Composer, Performer, and Instrument Design
William Thibault, Multimedia Program Designer
Thomas Hird, Stage Setting and Direction
Kristen Peralta, Dancer (remote, Hayward)
Wilson Engel, Dancer (on-stage, Bing)

Calpurnia's dream from Shakespeare's Julius Caesar was the inspiration for this dance, video, and MIDI performance. The metrics generated by a 3D analysis of the video frames were translated to MIDI parameters. These MIDI control streams were then used as control elements for the audio that was streamed back to both performance sites. The audio elements included the use of “Pure Data” [10] spectral-resynthesis vocoding of a reading of Calpurnia's dream, modulated in the frequency and magnitude domains by the motions of the dancers. The control streams were also used to drive melodic contours.

To analyze the video, each frame was first processed to extract edge information. The frequency of occurrence of each edge orientation was then collected into a histogram. Each bin of the histogram was then interpreted as a component of a high-dimensional feature vector. A set of frames was selected as keyframes, and each keyframe was associated with a point in 3D. For each new video frame, the Euclidean distances between the feature vector of the current frame and the feature vectors of the keyframes were computed. A 3D position was then interpolated between the 3D positions associated with the keyframes, by weighting each keyframe by its feature vector's distance from the current frame's feature vector.

The metrics generated by this 3D analysis of the video frames were translated to MIDI parameters. These MIDI control streams were then used as control elements for the audio that was streamed back to both performance sites. The audio elements included spectral-resynthesis vocoding of a reading of Calpurnia's dream, modulated in the frequency and magnitude domains by the motions of the dancers. The control streams were also used to drive melodic contours.

The technology used was a 10 Mbps MPEG-2 video stream using a VBrick 6200 series MPEG-2 Encoder/Decoder. Pattern recognition of a vector-based histogram from real-time video keyframes was used for direct control of sound synthesis on a Capybara 320 running a KYMA Smalltalk implementation.

Another interesting aspect of this performance was the use of a shadow puppet screen at UCSC, which acted as a metaphor harking back to some of the historical theatrical roots implicit in this new form of performance, which is so dependent on impenetrable screens that are projections of faraway locations.

The technical problem with this performance had to do with the interaction with the much larger technical administration of the multi-site dance event that this was a part of. When planning this performance we had made the decision not to send a technical representative to the USC site. This was not only for budgetary reasons, but also for aesthetic reasons. If it is necessary to send someone from each remote site to each other site, the advantage and unique strength of this performance practice is to a certain extent lost. Other participants thought this was a bad notion and sent teams of technical people to the remote site. There is a good chance that they would have been there anyway for the conference, but nevertheless it led to the unfortunate outcome of our being largely ignored, particularly by the audio engineer at the USC event. For this reason, at the Bing Theatre during the performance only one channel of the 2-channel feed was played in the hall. From our return feed we knew this was the case, but because we did not have an advocate representative there, the problem was never addressed despite repeated attempts to do so. This was an example of how truly virtual the other end of this sort of arrangement actually is.

This points up the need to create a relationship, early in the process, that includes an open and proactive dialog between the network administrators at all the sites and the individuals running the video and audio codecs for the performance. Meetings are fine, but what actually makes this work out is to establish a personal link between the main players in the networking of a given venue. This committed involvement is crucial to making certain that the performance will actually be possible.

4.3 "New Journey for Four," at CSUH and the iEAR Studios at Rensselaer Polytechnic Institute in Troy, with Pauline Oliveros, June Watanabe, Scot Gresham-Lancaster, Jay Rizzetto (Oct. 2002) [11]

This was a much more straightforward use of videoconferencing software, video cameras and large format projection. In this case Pauline Oliveros primarily soloed on accordion to June Watanabe’s choreography. They were accompanied by live trumpet and electronics. The simplicity and grace of this performance made clear that this was not just a gimmick, but a very viable new form of complex antiphony. The advantage of being able to continue collaborations over great distances became most clearly apparent with this performance.

The most interesting technical problem to arise in the context of this piece was that the “last mile” problem made it so we almost couldn’t do the performance. The “last mile” problem is so named because you can have the fastest connection available, and if there is one 10 Mbps router or switch in the last ten feet of the signal chain, your data throughput will be 10 Mbps. In this case, an anonymous system administrator at RPI, upstream from our onsite network expert, had set a switch to throttle traffic coming from the OC3 connection that was linked to the Internet2 backbone all the way to the studio at CSUHayward. This had the effect of completely throttling all our interactivity.

Using iperf and traceroute on a Linux machine we were able to create logs that showed the exact IP address of the router causing the problem. Only after we presented these logs to the uninvolved system operator were we able to proceed. This cost us an entire rehearsal cycle. I would advise anyone attempting to do a piece of this nature to run these sorts of checks with the Information Technology specialists at all ends of the performance venues before going into rehearsal. Otherwise non-technical participants have nothing to work with.

4.4 "Peerings" Rensselaer Polytechnic Institute in Troy, NY and Mills College in Oakland, CA (April 2003) [12]

A collaborative Internet2 performance between vocalists, musicians, dancers, designers, and audience members exploring the mediated space of live Internet performance. Performers from Rensselaer Polytechnic Institute in Troy, NY and Mills College in Oakland, CA will perform in a common visual and acoustic space by virtue of the high-speed Internet, the Synthetic Space Environment (a research project of Rensselaer Polytechnic Institute), and the interdisciplinary Center for Immersive Technology (iCIM) at California State University, Hayward.

There was a great variety of technology used in this performance. Since it was a collaboration between architectural design students, dancers, and musicians, each piece focused on a different aspect of technology. The first piece was simply two singers in shrouds singing with each other, with the image of the remote singer’s shroud projected onto the other’s shroud. This created a recursion between the two video images that was augmented by the latency of the signal. The singers themselves also had to deal with a latency that was sometimes in excess of 1 second. The next piece was a computer network music style piece, like “the HUB”, where two performers shared parameters between a common SuperCollider 3 patch [13]. The usual obscurity that accompanies the inscrutable nature of watching individuals play music on a laptop computer remained, with the added baffling factor that they were a continent apart. Finally two dance troupes interacted in a shared virtual space defined with 3D software.

4.5 "AB TIME I" Montevideo Center in Marseilles and the iCIM at CSUHayward (Oct. 2003)

Marseilles: Jean Marc Montera, Musician; Michele Ricozzi, Dancer, Choreographer; Inés Hernandez, Dancer; Patrick Laffont, Video artist
Hayward: Scot Gresham-Lancaster, Musician and Video; Kristen Peralta, Dancer

This was the first time that we used the iChat AV software that is now integrated into the Mac OS X operating system. We had tried NetMeeting on Windows and RAT and VIC on Linux, but these were not satisfactory solutions for a reliable 2-way connection. We were surprised to find that the performance of the iChat software was far superior to the multi-thousand-dollar encoding boxes we had used previously. Additionally, it was much easier to configure. This is a factor that made it possible to collaborate directly with artists who might not necessarily have the networking knowledge needed to function in this environment.

This was also the first time that we dealt with the scale of the dancers in the respective sites when the video images were mapped onto each other. This problem of scale is an interesting one. It is a complex relationship between the distance of the camera from the screen, the focal length and zoom of the camera’s lens, and the size and zoom of the projection from the other space. Additionally, the scale of each respective participant changes relative to the distance from the screen and/or the camera lens. Since these performances were made in spaces that were not dedicated to the work, it was often difficult to set up the respective focal lengths to accommodate these scaling problems. As a result, there was always an amusing moment at the end of the performance, when the bows were being taken, in which the performers from one site were proportionally larger than those from the other site. This is a real attribute of this style of work that many choreographers have taken advantage of during this performance and since.

In this piece dancers were dealing directly with the phenomenon of the video projector and its relationship with the video camera at the other site. By shooting the projected screen of the remote site and sending it back, a feedback loop is created that is made up of the elements of the other screen and the legacy delay of the previous screen as it is sent back from the remote video camera. What happens is a phenomenon that is like video feedback but has the interesting attribute of each layer being first the remote space and then the delayed image of the current space, and so on. The delay is created by the inherent latency of the network. This creates an impenetrable membrane that is a record of the reaction of the dancers on the remote site, as well as a record of the local site. Since the software automatically flips the horizontal axis of the video image, movements left to right are translated to right to left on their return to the site. Also, the faulty regeneration of the image via the projector-to-video-camera link created a layer-by-layer ghosting of the past. Add to that the network artifacts and visual flaws of the process and you get a new performance environment that reflects the interaction of the movement, while augmenting it in a way that is a direct artifact of the process used. Video artist Patrick Laffont deserves credit for having this insight. Like most elegant ideas, it is obvious and straightforward once you know about it.

Similarly the musicians used the delay between sites as an element in the performance. This has been a “problem” in other performances. The existence of this delay will always be a factor. At the very least there will be the light-speed delay between two perfect network connections plus whatever time it takes the various routers and switches to transfer the data packets from site to site. Another interesting factor is that this delay is not like a typical digital delay that musicians are used to. For example, let us say there is a delay of 800 ms. That means that at 75 bpm the note will arrive at the remote site a quarter note later. This means that, even without allowing for a normal reaction time, the soonest a return note will come back to the original site is 1600 ms, or 2 beats, later at the given tempo. Also, the sonic material that accompanies that beat will be one beat late. These factors must be accounted for when trying to coordinate live playing in rhythm in this context.

One way to look at it is in terms of the speed of sound over distance. Just for convenience, let’s say that the network delay is 1000 ms, or 1 sec; then the effective distance between the players is 340.29 meters, at least at sea level. This is approximately like playing 3 professional-size soccer fields away from each other. There is a constant shifting of this network lag, and so these timings and the perceived distances implied will shift as well. Given this, one can understand why engineers have put these tremendous buffers on the playback of “live” streams. However, in this performance, we decided to look at this as a “feature” not a “bug” and organized the structure of the music around the shifting delay. This enabled interesting shifts of rhythmic texture as the separated “now” of the two spaces was being reconciled throughout the performance. Hence the name AB TIME, ironically commenting on the lack of a real “absolute” time in this context, and sounding just a little like an exercise program.

Another interesting aspect of this performance was the use of the built-in echo canceling as an acoustic artifact. In the same way that we necessarily used the various “glitches” of the imperfect video, the constant injection of the reversed-phase signal at the delay time works imperfectly to squelch the acoustic feedback introduced by the latency. Those imperfections were used at times in the piece in an exaggerated manner, to add a residual “timbre” that was irreproducible outside of this specific context.

4.6 "AB TIME II" SKALEN collective in Marseilles, iEAR studio RPI and Mills College Dance Dept. (Jan. 2005)

Marseilles: Jean Marc Montera, Musician; Michele Ricozzi, Dancer, Choreographer; Inés Hernandez, Dancer; Fabien Almakiewicz, Dancer; José Maria Alves, Dancer, Choreographer; Patrick Laffont, Video artist
Rensselaer Polytechnic Institute, Troy, NY: Pauline Oliveros, Musician; Tomi Hahn, dance and music; and associates
Mills College in Oakland, CA: Scot Gresham-Lancaster (Music & Video); Holly Furgason (Dance)

This most recent performance was possibly the most ambitious yet. In this case, opposing screens were set up and each of the remote sites had a view of the third remote site, but shot through the intermediary site. Therefore, a given location saw a projected shot of remote space #1, with a projection on the wall of remote space #2. The trick was that the shot of remote space #2 would have a projection of the host space in the background, with the network-delayed image of remote spaces #1 and #2 in it, and so on. We also began doing more with zooming and changing the framing of the video shot, so the scaling issues became very pronounced throughout the piece.

In both AB TIME I and II there was a focus in the form on singling out combinations of performers from the various sites for duos and trios between sites. This enabled musicians to play for remote dance groupings and to interact with the other musicians, at the other sites, in varying combinations.

One of the interesting discoveries of this performance regarded the need to improve the schema for audio monitoring between sites. As in any live performance, monitoring of the other musicians is critical. Also, as in many other situations, there is a cultural bias towards the visual, and therefore the audio aspect of all the videoconferencing software we have tried is insufficient. In the next performance we are planning to try in-ear monitors to avoid the feedback problems that we have been struggling with throughout. Much more work needs to be done to improve the problems of audio quality associated with using conventional videoconferencing software.

5. Future Research
The principal line of research now is to work towards creating a better codec for real-time streaming. The audio quality of streaming servers such as icecast, QSS, and Darwin Streaming Server is more than sufficient for our needs. However, since these programs have such large buffers, even in “real” time streaming they are unusable for actual interactive live performance between shared sites. The plan is to take a closer look at the Darwin Streaming Server source code and see if something can be done to shorten this latency. This will necessarily introduce problems of feedback, since the time-delay phase-reversal integration that is in many of the audio channel codecs for video conferencing will not be present. To that end the prototype is being built in Max/MSP [14] using the oggcast [15] external. This prototyping environment will allow for spectral remapping of the incoming audio to compensate for the delay artifacts. Also, the Ogg Vorbis open source standard that oggcast uses has capabilities to support up to 128 channels. This makes it very attractive for the creation of the much-needed monitor and technical-infrastructure back-channel audio needed to coordinate the various performing groups in a live context.

This is an ongoing process that is tracking the new resources as they become available. It is clear in working in this context that much more research and many more types of work need to be explored. These pieces have always been done in the context of an ad-hoc assembly of rehearsal and planning that very rarely had complete institutional support at all sites. In a more stable context between cooperating institutions, many of the questions about the use of scaling and delay, as well as technical issues regarding the multicasting of the various streams involved in such a performance, could be more thoroughly addressed.

Also accompanying this work is some ongoing work in collaboration with Obscura Digital LLC [16] to integrate surround video in the context of live multi-site performance. Obscura Digital is doing pioneering work in “developing high-impact immersive content and providing the systems to display it.” The integration of a surround audio and video presence of multiple sites being shared across the Internet is a vision of a future performance that is a rich and expandable way for global performances to take place.

Finally, there is the need to integrate the use of software instruments that are shared between the spaces. With the proliferation of OSC in many music applications, it has become much easier for composers and instrument designers to create whole orchestras of software instruments that have shared and reciprocal controls between performance sites. The HUB’s reunion concert at the Dutch Electronic Arts Festival 2004 included the performance of several pieces in which remote participants contributed over video/audio channels as well as with OSC commands that played local instruments. This portends the integration of these two performance modes into a seamless whole.

Conclusion
From the author’s experience, one thing is certain: this is not just a single performance piece, but also a meta-configuration that could even become a future traditional form of performance practice. It is rich in artistic potential as well as technical challenge. It offers the participants and users a unique opportunity to explore the concept of virtual shared space and leverages the difference between the physical body and the projected image in a unique way. Musically it offers a new challenge to musicians to conceive of a music that is relativistic in nature, where the absolute now is not shared by the musicians and where the “one” of any bar of music is not something that exists at the same time in any of the shared spaces. As the tools for creating new transformations of video and audio become available, the potential for collaborators to work in an extended way across space and, in a limited sense, across time is very promising.

6. ACKNOWLEDGMENTS
Thanks to Pauline Oliveros [17] and her crew at RPI, most especially Information Technologist Igor Broos; the Mills College Center for Contemporary Music [18] and Dance Department, particularly Les Stuck and Kathleen McClintock; Dr. William Thibault and his ongoing research extending the range of the possible; and finally the SKALEN artistic group [19], with particular thanks to both Jean [...] Laffont for making much of this recent work possible. Without someone on the other end there is no way to do this.

7. REFERENCES
[1] utchinson/m0026610.html
[2] One Giant Leap: Neil Armstrong's Stellar American Journey – Leon Wagener; Forge Books (April 24, 2004) pg 181-185
[3] Indigenous to the Web - Chris Brown and John ff/
[4] The Aesthetic and History of the Hub: The Effects of Changing Technology on Network Computer Music – Leonardo Music Journal, Vol. 8, pp 39-44, 1998
[5] http://gallery.hyperwerk.ch/hypix/album24?&page 3
[6] http://www.open4all.info/wiki/drazen/Open Source Streaming Platform
[7] http://www.cnmat.berkeley.edu/OpenSoundControl/
[8] http://www.grabbo.com/
[9] http://arts.internet2.edu/calpurnia.html
[10] http://puredata.org
[11] http://www.mcs.csuhayward.edu/ tebo/icim/i2performance/journey.jpg
[12] http://www.o-art.org/peerings/
[13] http://supercollider.sourceforge.net/
[14] http://cycling74.com
[15] http://www.nullmedium.de/dev/oggpro/
[16] http://www.obscuradigital.com/
[17] http://www.deeplistening.org/pauline/
[18] www.mills.edu/LIFE/CCM/CCM.homepage.htm
[19] http://skalen.org/
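
As a supplementary illustration of the video analysis described for "Calpurnia's Dream Obscured by Movement" (orientation histogram, Euclidean distance to keyframes, interpolated 3D position), the following is a minimal Python sketch and not the Grabbo implementation. Several points are assumptions made only for illustration: the edge orientations are taken as an already-extracted list of angles, the histogram has 8 bins, and keyframes are weighted by inverse distance, which is one plausible reading of "weighting each keyframe by its feature vector's distance". All function names are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]
Keyframe = Tuple[Sequence[float], Vec3]  # (feature vector, associated 3D point)


def orientation_histogram(angles_deg: Sequence[float], bins: int = 8) -> List[float]:
    """Normalized histogram of edge orientations, folded into [0, 180) degrees.

    Assumption: edge extraction has already happened; only angles are given.
    """
    counts = [0.0] * bins
    width = 180.0 / bins
    for angle in angles_deg:
        index = int((angle % 180.0) / width)
        counts[min(index, bins - 1)] += 1.0  # guard against float edge cases
    total = sum(counts)
    return [c / total for c in counts] if total else counts


def euclidean(a: Sequence[float], b: Sequence[float]) -> float:
    """Euclidean distance between two feature vectors of equal length."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def closest_keyframe(feature: Sequence[float], keyframes: Sequence[Keyframe]) -> int:
    """Index of the best-matching keyframe (the 'Image Matching' feature)."""
    return min(range(len(keyframes)), key=lambda i: euclidean(feature, keyframes[i][0]))


def interpolate_position(feature: Sequence[float], keyframes: Sequence[Keyframe],
                         eps: float = 1e-9) -> Vec3:
    """Blend keyframe 3D points, weighting each by inverse feature distance.

    Assumption: inverse-distance weighting; the paper does not give the formula.
    """
    weights = []
    for kf_feature, position in keyframes:
        distance = euclidean(feature, kf_feature)
        if distance < eps:  # exact match: return that keyframe's point
            return position
        weights.append(1.0 / distance)
    total = sum(weights)
    return tuple(
        sum(w * kf[1][axis] for w, kf in zip(weights, keyframes)) / total
        for axis in range(3)
    )
```

In a performance setting each coordinate of the interpolated position would then be scaled into a MIDI controller range; that mapping is not specified in the text and is left out here.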

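
The timing arithmetic used in the discussion of "AB TIME I" (an 800 ms delay at 75 bpm, and a 1000 ms delay expressed as acoustic distance) can be checked with a short Python sketch. The speed of sound is the sea-level figure quoted in the text; the 105 m soccer field length is an assumption introduced here for the comparison, and the function names are hypothetical.

```python
SPEED_OF_SOUND_M_PER_S = 340.29  # sea-level value quoted in the text
SOCCER_FIELD_LENGTH_M = 105.0    # assumption: a typical professional pitch length


def beats_of_delay(latency_ms: float, bpm: float) -> float:
    """How many beats a one-way network delay spans at a given tempo."""
    return latency_ms * bpm / 60000.0


def round_trip_beats(latency_ms: float, bpm: float, reaction_ms: float = 0.0) -> float:
    """Beats elapsed before a reply can be heard back at the originating site."""
    return beats_of_delay(2.0 * latency_ms + reaction_ms, bpm)


def acoustic_distance_m(latency_ms: float) -> float:
    """Distance sound would travel in air during the network delay."""
    return SPEED_OF_SOUND_M_PER_S * latency_ms / 1000.0


def soccer_fields(latency_ms: float) -> float:
    """The same distance expressed in soccer field lengths."""
    return acoustic_distance_m(latency_ms) / SOCCER_FIELD_LENGTH_M
```

With these definitions, `beats_of_delay(800, 75)` gives one beat and `round_trip_beats(800, 75)` gives two, matching the figures in the text, while `soccer_fields(1000)` comes out a little over three under the assumed field length. Because the network lag shifts constantly, these values would need to be recomputed from measured delays during a performance.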