Transcription

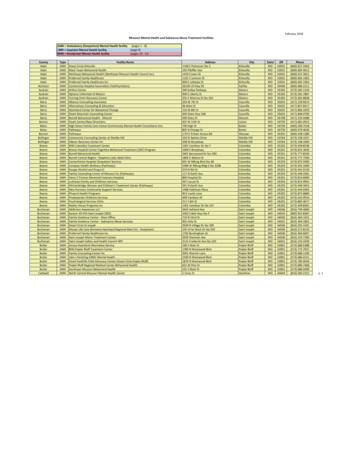

CSEP 573Chapters 3-5Problem Solving using Search“First, they do an on-line search” CSE AI FacultyExample: The 8-puzzle1 2 3487 6 51 2 34 5 67 821

startExample: Route Planningend3Example: N Queens4 Queens42

Example: N Queens4 Queens5State-Space Search ProblemsGeneral problem:Given a start state, find a path to a goal state Can test if a state is a goal Given a state, can generate its successor statesVariants: Find any path vs. a least-cost path Goal is completely specified, task is just to find the path– Route planning Path doesn’t matter, only finding the goal state– 8 puzzle, N queens63

Tree Representation of 8-Puzzle Problem Space7fringe ( frontier in the textbook) is the set of all leaf nodes available for expansion84

9action Rightchildren105

11126

13147

15168

17189

19 O(bd ), i.e., exponential in d2010

O(bd ), i.e., exponential in dO(bd )21 O(bd ), i.e., exponential in dO(bd )Space is the big problem for BFS.Example: b 10, 10,000 nodes/sec, 1KB/noded 3 Î 1000 nodes, 0.1 sec, 1MBd 5 Î 100,000 nodes, 10 secs, 100 MBd 9 Î 109 nodes, 31 hours, 1 TB2211

(used when step costs are unequal)(use priority queue)(small positive constant; 0 cost may cause infinite loop)232412

252613

272814

293015

313216

333417

353618

(“GRAPH-SEARCH” in textbook)37(“GRAPH-SEARCH” in textbook)(m maximum depth)3819

39(may find a solution but least cost solutionmay be on a different branch)4020

414221

434422

454623

474824

495025

Increasing path-cost limits instead of depth limitsThis is called Iterative lengthening search (exercise 3.17)515226

startForwards vs. BackwardsendProblem: Find the shortest route53Bidirectional SearchMotivation: bd/2 bd/2 bdCan use breadth-first search or uniform-cost searchHard for implicit goals e.g., goal “checkmate” in chess5427

Repeated StatesFailure to detect repeated states can turn a linear problem into anexponential one! (e.g., repeated states in 8 puzzle)Graph search algorithm: Store expanded nodes in a set calledclosed (or explored) and only add new nodes to the fringe55Graph Search5628

All these methods are slow (blind)Can we do better?57Informed SearchUse problem-specific knowledge to guide search (use “heuristicfunction”)5829

Best-first SearchGeneralization of breadth first searchPriority queue of nodes to be exploredEvaluation function f(n) used for each nodeInsert initial state into priority queueWhile queue not emptyNode head(queue)If goal(node) then return nodeInsert children of node into pr. queue59Who’s on (best) first?Breadth first search is special case of best first with f(n) depth(n)Dijkstra’s Algorithm is best first with f(n) g(n)where g(n) sum of edge costs from startto n6030

Greedy best-first searchEvaluation function f(n) h(n) (heuristic) estimate of costfrom n to goale.g., Route finding problems: hSLD(n) straight-line distancefrom n to destinationGreedy best-first search expands the node that appears to beclosest to goal61Example: Lost inRomaniaNeed: Shortest path from Arad to Bucharest6231

Example: Greedily Searching for BucharesthSLD(Arad)63Example: Greedily Searching for Bucharest6432

Example: Greedily Searching for Bucharest65Example: Greedily Searching for BucharestGreeddoesn’tpay!Not optimal!Arad, Sibiu, Rimnicu Vilcea, Pitesti, Bucharest shorter6633

Properties of Greedy Best-First SearchComplete? No – can get stuck in loops (unless closed list is used)Time? O(bm), but a good heuristic can give dramaticimprovementSpace? O(bm) -- keeps all nodes in memory a la breadth firstsearchOptimal? No, as our example illustrated67A* Search(Hart, Nilsson & Rafael 1968) Best first search with f(n) g(n) h(n)g(n) sum of edge costs from start to nh(n) heuristic function estimate of lowest cost pathfrom n to goal If h(n) is “admissible” then search will be optimalUnderestimates costof any solution whichcan be reached from node{6834

Back in RomaniaAgainstartAici noienergieiar!end69A* Example for Romaniaf(n) g(n) h(n) whereg(n) sum of edge costs from start to nh(n) hSLD(n) straight-line distance from n to destination7035

A* Example71A* Example7236

A* Example73A* Example7437

A* Example75Admissible heuristicsA heuristic h(n) is admissible iffor every node n,h(n) h*(n)where h*(n) is the true cost to reach the goal state from n.An admissible heuristic never overestimates the cost to reach thegoal, i.e., it is optimistic7638

Admissible HeuristicsIs the Straight Line Distance heuristic hSLD(n)admissible?77Admissible HeuristicsIs the Straight Line Distance heuristic hSLD(n)admissible?Yes, it never overestimates the actual road distanceTheorem: If h(n) is admissible, A* using TREESEARCH is optimal.7839

Optimality of A* (proof)Suppose some suboptimal goal G2 has been generated and is in thefringe. Let n be an unexpanded node in the fringe such that nis on a shortest path to an optimal goal G.f(G2) g(G2) g(G)f(G) g(G)f(G2) f(G)sincesincesincefromh(G2) 0G2 is suboptimalh(G) 0above79Optimality of A* (cont.)Suppose some suboptimal goal G2 has been generated and is in thefringe. Let n be an unexpanded node in the fringe such that nis on a shortest path to an optimal goal G.f(G2) f(G)h(n) h*(n)from prev slidesince h is admissibleg(n) h(n) g(n) h*(n)f(n) f(G) f(G2)Hence f(n) f(G2) A* will never select G2 for expansion.8040

Optimality of A*A* expands nodes in order of increasing f valueGradually adds "f-contours" of nodes81Okay, proof is done!Time to wake up AIrocks!8241

Properties of A*Complete? Yes (unless there are infinitely many nodeswith f f(G) )Time? Exponential (for most heuristic functions inpractice)Space? Keeps all generated nodes in memory(exponential number of nodes)Optimal? Yes83Admissible heuristicsE.g., for the 8-puzzle, what are some admissibleheuristic functions? (for # steps to goal state)h1(n) ?h2(n) ?8442

Admissible heuristicsE.g., for the 8-puzzle:h1(n) number of misplaced tilesh2(n) total Manhattan distance (no. of squares fromdesired location of each tile)h1(S) ?h2(S) ?S85Admissible heuristicsE.g., for the 8-puzzle:h1(n) number of misplaced tilesh2(n) total Manhattan distance (no. of squares fromdesired location of each tile)h1(S) ? 8h2(S) ? 3 1 2 2 2 3 3 2 188643

DominanceIf h2(n) h1(n) for all n (both admissible) then h2dominates h1h2 is better for search87DominanceE.g., for 8-puzzle heuristics h1 and h2, typicalsearch costs (average number of nodes expandedfor solution depth d):d 12 IDS 3,644,035 nodesA*(h1) 227 nodesA*(h2) 73 nodesd 24 IDS too many nodesA*(h1) 39,135 nodesA*(h2) 1,641 nodes8844

In general, A* not practical for large scaleproblems due to memory requirements(all generated nodes in memory)Idea: Use iterative deepeningIterative-Deepening A*Like iterative-deepening search, butcutoff is f cost ( g h) rather than depthAt each iteration, cutoff is smallest f cost amongnodes that exceeded cutoff on prev iterationef L 21f L 15afdbc9045

Back to Admissable Heuristicsf(x) g(x) h(x)g: cost so farh: underestimate of remaining costse.g., hSLDWhere do heuristics come from?91Relaxed ProblemsDerive admissible heuristic from exact cost of a solutionto a relaxed version of problem For route planning, what is a relaxed problem?Relax requirement that car stay on road ÆStraight Line Distance becomes optimal costCost of optimal solution to relaxed problem cost of optimal solution for real problem9246

Heuristics for eight puzzle7 2 35 1 68 4Æstart1 2 34 5 67 8goalWhat can we relax?Original Problem: Tile can move from location A to B ifA is horizontally or vertically next to B and B is blank93Heuristics for eight puzzle7 2 35 1 68 4Æ1 2 34 5 67 8Relaxed 1: Tile can move from any location A to any location BCost h1 number of misplaced tilesRelaxed 2: Tile can move from A to B if A is horizontally orvertically next to B (note: B does not have to be blank)Cost h2 total Manhattan distanceYou can try other possible heuristics in your HW #19447

Need for Better HeuristicsPerformance of h2 (Manhattan Distance Heuristic) 8 Puzzle 1 second 15 Puzzle1 minute 24 Puzzle65000 yearsCan we do better?Adapted from Richard Korf presentation95Creating New HeuristicsGiven admissible heuristics h1, h2, , hm, none of themdominating any other, how to choose the best?Answer: No need to choose only one! Use:h(n) max {h1(n), h2(n), , hn(n)}h is admissible (why?)h dominates all hi (by construction)Can we do better with:h’(n) h1(n) h2(n) hn(n)?9648

Pattern DatabasesIdea: Use solution cost of a subproblem as heuristic. For8-puzzle: pick any subset of tilesE.g., 3, 7, 11, 12Precompute a table Compute optimal cost of solving just these tiles– This is a lower bound on actual cost with all tiles For all possible configurations of these tiles– Could be several million Use breadth first search back from goal state– State position of just these tiles (& blank) Admissible heuristic hDB for complete state costof corresponding sub-problem state in databaseAdapted from Richard Korf presentation97Combining Multiple DatabasesCan choose another set of tiles Precompute multiple tablesHow to combine table values? Use the max trick!E.g. Optimal solutions to Rubik’s cube First found w/ IDA* using pattern DBheuristics Multiple DBs were used (diff subsets ofcubies) Most problems solved optimally in 1 day Compare with 574,000 years for IDSAdapted from Richard Korf presentation9849

Drawbacks of Standard Pattern DBsSince we can only take max Diminishing returns on additional DBsWould like to be able to add values– But not exceed the actual solution cost (toensure admissible heuristic)– How?99Adapted from Richard Korf presentationDisjoint Pattern DBsPartition tiles into disjoint sets For each set, precompute table Don’t count moves of tiles not in set––––1 2 3 45 6 7 89 10 11 12This makes sure costs are disjoint13 14 15Can be added without overestimating!E.g. For 15 puzzle shown, 8 tile DB has 519 million entriesAnd 7 tile DB has 58 millionDuring search Look up costs for each set in DB Add values to get heuristic function value Manhattan distance is a special case of this ideawhere each set is a single tileAdapted from Richard Korf presentation10050

Performance15 Puzzle: 2000x speedup vs Manhattan dist IDA* with the two DBs solves 15 Puzzleoptimally in 30 milliseconds24 Puzzle: 12 millionx speedup vs Manhattan IDA* can solve random instances in 2days. Requires 4 DBs as shown– Each DB has 128 million entries Without PDBs: 65000 yearsAdapted from Richard Korf presentation101Next: Local SearchHow to climb hillsHow to reach the top by annealingHow to simulate and profit from evolution10251

Local search algorithmsIn many optimization problems, the path to the goalis irrelevant; the goal state itself is the solutionFind configuration satisfying constraints,e.g., n-queensIn such cases, we can use local search algorithmsKeep a single "current" state, try to improve it103Example: n-queensPut n queens on an n n board with no twoqueens on the same row, column, or diagonal10452

Hill-climbing search"Like climbing Everest in thick fog with amnesia"105Hill-climbing searchProblem: depending on initial state, can getstuck in local maxima10653

Example: 8-queens problemHeuristic?(Value function)h number of pairs of queens that are attacking eachother, either directly or indirectlyh 17 for the above state (would like to minimize this)107Example: 8-queens problemA local minimum with h 1. Need h 0How to find global minimum (or maximum)?10854

Simulated AnnealingIdea: escape local maxima by allowing some "bad"moves but gradually decrease their frequency109Properties of simulated annealingOne can prove: If T decreases slowly enough,then simulated annealing search will find a globaloptimum with probability approaching 1Widely used in VLSI layout, airline scheduling, etc11055

Local Beam SearchKeep track of k states rather than just oneStart with k randomly generated statesAt each iteration, all the successors of all k statesare generatedIf any one is a goal state, stop; else select the kbest successors from the complete list andrepeat.111Hey, perhaps sexcan improvesearch?11256

Sure – check out yebook.113Genetic AlgorithmsA successor state is generated by combining two parent statesStart with k randomly generated states (population)A state is represented as a string over a finite alphabet (often astring of 0s and 1s)Evaluation function (fitness function). Higher values for betterstates.Produce the next generation of states by selection, crossover,and mutation11457

Example: 8-queens problem87654321StringRepresentation:16257483Can we evolve a solution through genetic algorithms?115Example: Evolving 8 Queens?Sorry, wrong queens11658

Example: Evolving 8 QueensFitness function: number of non-attacking pairs of queens(min 0, max 8 7/2 28)24/(24 23 20 11) 31% probability of selection forreproduction23/(24 23 20 11) 29% etc117Queens crossing over11859

Let’s move on toadversarial gamesAdversarial GamesPrograms that can play competitive boardgamesMinimax SearchAlpha-Beta Pruning12060

Games formationchess, checkers,go, othellochancebackgammon,monopolypoker,bridge, scrabble121Games & Game TheoryWhen there is more than one agent, the future is noteasily predictable anymore for the agentIn competitive environments (conflicting goals),adversarial search becomes necessaryIn AI, we usually consider special type of games: board games, which can be characterized asdeterministic, turn-taking, two-player, zero-sumgames with perfect information12261

Games as SearchComponents: States: Initial state: Successor function: Terminal test: Utility function:123Games as SearchComponents: States: board configurations Initial state: the board position and which playerwill move Successor function: returns list of (move, state)pairs, each indicating a legal move and the resultingstate Terminal test: determines when the game is over Utility function: gives a numeric value in terminalstates (e.g., -1, 0, 1 in chess for loss, tie, win)12462

Games as SearchConvention: first player is called MAX,2nd player is called MINMAX moves first and they take turns until game isoverWinner gets reward, loser gets penaltyUtility values stated from MAX’s perspectiveInitial state and legal moves define the game treeMAX uses game tree to determine next move125Tic-Tac-Toe Example12663

Optimal Strategy: Minimax SearchFind the contingent strategy for MAX assuming aninfallible MIN opponentAssumption: Both players play optimally!Given a game tree, the optimal strategy can bedetermined by using the minimax value of each node(defined recursively):MINIMAX-VALUE(n) UTILITY(n)maxs succ(n) MINIMAX-VALUE(s)mins succ(n) MINIMAX-VALUE(s)If n is a terminalIf n is a MAX nodeIf n is a MIN node127Two-Ply Game Tree“Ply” move by 1 player12864

Two-Ply Game Tree129Two-Ply Game Tree13065

Two-Ply Game TreeMinimax decision A1Minimax maximizes the worst-case outcome for max131What if MIN does not play optimally?Definition of optimal play for MAX assumes MINplays optimally Maximizes worst-case outcome for MAXIf MIN does not play optimally, MAX will do evenbetter (i.e. at least as much or more utility obtainedthan if MIN was optimal) [Exercise 5.7 in textbook]13266

Another example( 4 ply)13313467

13513668

13713869

Choose thismove139Minimax Algorithm14070

Properties of minimaxComplete? Yes (if tree is finite)Optimal? Yes (against an optimal opponent)Time complexity? O(bm)Space complexity? O(bm) (depth-firstexploration)141Good enough?Chess: branching factor b 35 game length m 100 search space bm 35100 10154The Universe: number of atoms 1078 age 1021 millisecondsCan we search more efficiently?14271

Next Class:Wrap up of searchLogic and ReasoningTo do:Homework #1Sign up for class mailing list72

3 5 Example: N Queens 4 Queens 6 State-Space Search Problems General problem: Given a start state, find a path to a goal state Can test if a state is a goal Given a state, can generate its successor states Variants: Find any path vs. a least-cost path Goal is completely specified, task is just to find the path – Route planning