Transcription

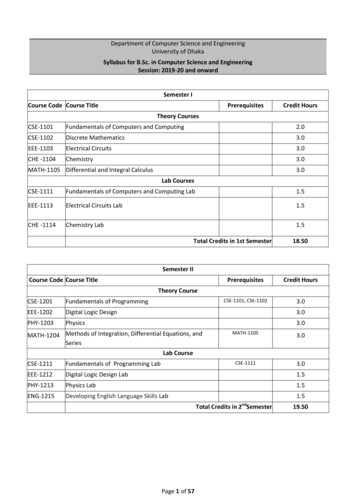

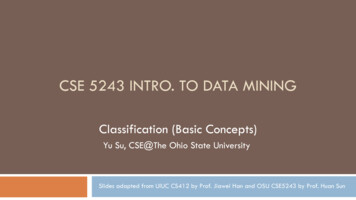

CSE 5243 INTRO. TO DATA MININGAdvanced Frequent Pattern Mining&Locality Sensitivity HashingHuan Sun, CSE@The Ohio State University11/07/2017Slides adapted from Prof. Jiawei Han @UIUC, Prof. Srinivasan Parthasarathy @OSU

SPADE 2Problems in the GSP Algorithm Multiple database scans Complex hash structures with poor locality Scale up linearly as the size of dataset increasesSPADE: Sequential PAttern Discovery using Equivalence classes Use a vertical id-list database Prefix-based equivalence classes Frequent sequences enumerated through simple temporal joins Lattice-theoretic approach to decompose search spaceAdvantages of SPADE 3 scans over the database Potential for in-memory computation and parallelizationPaper ?doi 10.1.1.113.6042&rep rep1&type pdf

SPADEId lists of each item3Paper ?doi 10.1.1.113.6042&rep rep1&type pdf

SPADEId lists of each item4Paper ?doi 10.1.1.113.6042&rep rep1&type pdf

5

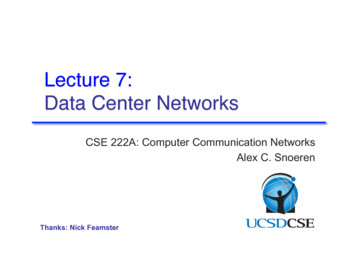

MMDS Secs. 3.2-3.4.Slides adapted from: J. Leskovec, A. Rajaraman,J. Ullman: Mining of Massive Datasets,http://www.mmds.orgFINDING SIMILAR ITEMSSlides also adapted from Prof. Srinivasan Parthasarathy @OSU

Task: Finding Similar Documents Goal: Given a large number (𝑵𝑵 in the millions or billions) ofdocuments, find “near duplicate” pairsApplications: Mirrorwebsites, or approximate mirrors remove duplicates Similar news articles at many news sites cluster7

Task: Finding Similar Documents Goal: Given a large number (𝑵𝑵 in the millions or billions) ofdocuments, find “near duplicate” pairsApplications: Mirrorwebsites, or approximate mirrors remove duplicates Similar news articles at many news sites clusterWhat are the challenges, compared with detecting exact duplicates?8

Task: Finding Similar Documents Goal: Given a large number (𝑵𝑵 in the millions or billions) of documents,find “near duplicate” pairsApplications:Mirror websites, or approximate mirrors remove duplicates Similar news articles at many news sites cluster Problems: Manysmall pieces of one document can appear out of order in another Too many documents to compare all pairs Documents are so large or so many (scale issues)9J. Leskovec, A. Rajaraman, J. Ullman: Mining of Massive Datasets, http://www.mmds.org

Two Essential Steps for Similar Docs1.2.Shingling: Convert documents to setsMin-Hashing: Convert large sets to short signatures, whilepreserving similarityHost of follow up applicationse.g. Similarity SearchData PlacementClustering etc.10J. Leskovec, A. Rajaraman, J. Ullman: Mining of Massive Datasets, http://www.mmds.org

The Big PictureSimilarity SearchData PlacementClustering etc.DocumentThe setof stringsof length kthat appearin the document11Signatures:short integervectors thatrepresent thesets, andreflect theirsimilarityJ. Leskovec, A. Rajaraman, J. Ullman: Mining of Massive Datasets, http://www.mmds.org

DocumentThe setof stringsof length kthat appearin the documentSHINGLINGStep 1: Shingling: Convert documents to sets

Documents as High-Dim Data Step 1: Shingling: Convert documents to sets Simple approaches: Document set of words appearing in document Document set of “important” words Don’t work well for this application. Why? 13Need to account for ordering of words!A different way: Shingles!J. Leskovec, A. Rajaraman, J. Ullman: Mining of Massive Datasets, http://www.mmds.org

Define: Shingles A k-shingle (or k-gram) for a document is a sequence of k tokensthat appears in the doc Tokenscan be characters, words or something else, depending on theapplication Assume tokens characters for examples14J. Leskovec, A. Rajaraman, J. Ullman: Mining of Massive Datasets, http://www.mmds.org

Define: Shingles A k-shingle (or k-gram) for a document is a sequence of k tokensthat appears in the doc Tokenscan be characters, words or something else, depending on theapplication Assume tokens characters for examples 15Example: k 2; document D1 abcabSet of 2-shingles: S(D1) {ab, bc, ca}J. Leskovec, A. Rajaraman, J. Ullman: Mining of Massive Datasets, http://www.mmds.org

Define: Shingles A k-shingle (or k-gram) for a document is a sequence of k tokensthat appears in the doc Tokenscan be characters, words or something else, depending on theapplication Assume tokens characters for examples Example: k 2; document D1 abcabSet of 2-shingles: S(D1) {ab, bc, ca} Anotheroption: Shingles as a bag (multiset), count ab twice: S’(D1) {ab, bc, ca, ab}16J. Leskovec, A. Rajaraman, J. Ullman: Mining of Massive Datasets, http://www.mmds.org

Shingles: How to treat white-space chars?It makes sense to replace any sequence of one or more white-space characters (blank, tab,newline, etc.) by a single blank.This way distinguishes shingles that cover two or more words from those that do not.17J. Leskovec, A. Rajaraman, J. Ullman: Mining of Massive Datasets, http://www.mmds.org

How to choose K? Documents that have lots of shingles in common have similar text,even if the text appears in different orderCaveat: You must pick k large enough, or most documents will havemost shingles k 5 is OK for short documents k 10 is better for long documents18J. Leskovec, A. Rajaraman, J. Ullman: Mining of Massive Datasets, http://www.mmds.org

Compressing Shingles To compress long shingles, we can hash them to (say) 4 bytes Likea Code Book If #shingles manageable Simple dictionary sufficese.g., 9-shingle bucket number [0, 2 32 - 1](using 4 bytes instead of 9)19J. Leskovec, A. Rajaraman, J. Ullman: Mining of Massive Datasets, http://www.mmds.org

Compressing Shingles To compress long shingles, we can hash them to (say) 4 bytes Likea Code Book If #shingles manageable Simple dictionary suffices Doc represented by the set of hash/dict. values of its k-shingles Idea:Two documents could (rarely) appear to have shingles in common,when in fact only the hash-values were shared20J. Leskovec, A. Rajaraman, J. Ullman: Mining of Massive Datasets, http://www.mmds.org

Compressing Shingles To compress long shingles, we can hash them to (say) 4 bytes Likea Code Book If #shingles manageable Simple dictionary suffices Doc represented by the set of hash/dict. values of its k-shingles Example: k 2; document D1 abcab Set of 2-shingles: S(D1) {ab, bc, ca}Hash the singles: h(D1) {1, 5, 7}21J. Leskovec, A. Rajaraman, J. Ullman: Mining of Massive Datasets, http://www.mmds.org

Similarity Metric for Shingles Document D1 is a set of its k-shingles C1 S(D1) Equivalently, each document is a 0/1 vector in the space of k-shingles 22Each unique shingle is a dimensionVectors are very sparseJ. Leskovec, A. Rajaraman, J. Ullman: Mining of Massive Datasets, http://www.mmds.org

Similarity Metric for Shingles Document D1 is a set of its k-shingles C1 S(D1) Equivalently, each document is a 0/1 vector in the space of k-shingles 23Each unique shingle is a dimensionVectors are very sparseA natural similarity measure is the Jaccard similarity:sim(D1, D2) C1 C2 / C1 C2 J. Leskovec, A. Rajaraman, J. Ullman: Mining of Massive Datasets, http://www.mmds.org

Motivation for Minhash/LSH Suppose we need to find similar documents among 𝑵𝑵 𝟏𝟏 milliondocumentsNaïvely, we would have to compute pairwise Jaccard similarities forevery pair of docs𝑵𝑵(𝑵𝑵 𝟏𝟏)/𝟐𝟐 5*1011 comparisons At 105 secs/day and 106 comparisons/sec,it would take 5 days 24For 𝑵𝑵 𝟏𝟏𝟏𝟏 million, it takes more than a year J. Leskovec, A. Rajaraman, J. Ullman: Mining of Massive Datasets, http://www.mmds.org

DocumentThe setof stringsof length kthat appearin the documentSignatures:short integervectors thatrepresent thesets, and reflecttheir similarityMINHASHINGStep 2: Minhashing: Convert large variable length sets toshort fixed-length signatures, while preserving similarity

Encoding Sets as Bit Vectors 26Many similarity problems can be formalized as finding subsets thathave significant intersectionJ. Leskovec, A. Rajaraman, J. Ullman: Mining of Massive Datasets, http://www.mmds.org

Encoding Sets as Bit Vectors Many similarity problems can be formalized as finding subsets thathave significant intersectionEncode sets using 0/1 (bit, boolean) vectors 27One dimension per element in the universal setInterpret set intersection as bitwise AND, andset union as bitwise ORJ. Leskovec, A. Rajaraman, J. Ullman: Mining of Massive Datasets, http://www.mmds.org

Encoding Sets as Bit Vectors Many similarity problems can be formalized as finding subsets thathave significant intersectionEncode sets using 0/1 (bit, boolean) vectors Interpret set intersection as bitwise AND, andset union as bitwise ORExample: C1 10111; C2 10011 28One dimension per element in the universal setSize of intersection 3; size of union 4,Jaccard similarity (not distance) 3/4Distance: d(C1,C2) 1 – (Jaccard similarity) 1/4J. Leskovec, A. Rajaraman, J. Ullman: Mining of Massive Datasets, http://www.mmds.org

From Sets to Boolean Matrices Rows elements (shingles)DocumentsColumns sets (documents) 29Note: Transposed Document Matrix1 in row e and column s if and only if e is a valid shingle ofdocument represented by sColumn similarity is the Jaccard similarity of the correspondingsets (rows with value 1)Typical matrix is sparse!Shingles 1110101100110001100111101010J. Leskovec, A. Rajaraman, J. Ullman: Mining of Massive Datasets, http://www.mmds.org

Outline: Finding Similar Columns So far:Documentsdocuments a set of shingles Represent a set as a boolean vector in a matrixShingles A301110101100110001100111101010J. Leskovec, A. Rajaraman, J. Ullman: Mining of Massive Datasets, http://www.mmds.org

Outline: Finding Similar Columns So far:Documentsdocuments a set of shingles Represent a set as a boolean vector in a matrix Next goal: Find similar columns while computingsmall signatures Similarity31of columns similarity of signaturesShingles A1110101100110001100111101010J. Leskovec, A. Rajaraman, J. Ullman: Mining of Massive Datasets, http://www.mmds.org

Outline: Finding Similar Columns Next Goal: Find similar columns, Small signatures Naïve approach: 321) Signatures of columns: small summaries of columnsJ. Leskovec, A. Rajaraman, J. Ullman: Mining of Massive Datasets, http://www.mmds.org

Outline: Finding Similar Columns Next Goal: Find similar columns, Small signatures Naïve approach:1) Signatures of columns: small summaries of columns 2) Examine pairs of signatures to find similar columns 33Essential: Similarities of signatures and columns are related3) Optional: Check that columns with similar signatures are really similarJ. Leskovec, A. Rajaraman, J. Ullman: Mining of Massive Datasets, http://www.mmds.org

Outline: Finding Similar Columns Next Goal: Find similar columns, Small signatures Naïve approach:1) Signatures of columns: small summaries of columns 2) Examine pairs of signatures to find similar columns 3) Optional: Check that columns with similar signatures are really similarWarnings: Comparing all pairs may take too much time: Job for LSH 34Essential: Similarities of signatures and columns are relatedThese methods can produce false negatives, and even false positives (if the optional check isnot made)J. Leskovec, A. Rajaraman, J. Ullman: Mining of Massive Datasets, http://www.mmds.org

Hashing Columns (Signatures) : LSH principle Key idea: “hash” each column C to a small signature h(C), such that:(1) h(C) is small enough that the signature fits in RAM (2) sim(C1, C2) is the same as the “similarity” of signatures h(C1) and h(C2) 35J. Leskovec, A. Rajaraman, J. Ullman: Mining of Massive Datasets, http://www.mmds.org

Hashing Columns (Signatures) : LSH principle Key idea: “hash” each column C to a small signature h(C), such that:(1) h(C) is small enough that the signature fits in RAM (2) sim(C1, C2) is the same as the “similarity” of signatures h(C1) and h(C2) Goal: Find a hash function h(·) such that:If sim(C1,C2) is high, then with high prob. h(C1) h(C2) If sim(C1,C2) is low, then with high prob. h(C1) h(C2) 36J. Leskovec, A. Rajaraman, J. Ullman: Mining of Massive Datasets, http://www.mmds.org

Hashing Columns (Signatures) : LSH principle Key idea: “hash” each column C to a small signature h(C), such that:(1) h(C) is small enough that the signature fits in RAM (2) sim(C1, C2) is the same as the “similarity” of signatures h(C1) and h(C2) Goal: Find a hash function h(·) such that:If sim(C1,C2) is high, then with high prob. h(C1) h(C2) If sim(C1,C2) is low, then with high prob. h(C1) h(C2) 37Hash docs into buckets. Expect that “most” pairs of near duplicate docshash into the same bucket!J. Leskovec, A. Rajaraman, J. Ullman: Mining of Massive Datasets, http://www.mmds.org

Min-Hashing Goal: Find a hash function h(·) such that: ifsim(C1,C2) is high, then with high prob. h(C1) h(C2) if sim(C1,C2) is low, then with high prob. h(C1) h(C2) Clearly, the hash function depends on the similarity metric: Not38all similarity metrics have a suitable hash functionJ. Leskovec, A. Rajaraman, J. Ullman: Mining of Massive Datasets, http://www.mmds.org

Min-Hashing Goal: Find a hash function h(·) such that: ifsim(C1,C2) is high, then with high prob. h(C1) h(C2) if sim(C1,C2) is low, then with high prob. h(C1) h(C2) Clearly, the hash function depends on the similarity metric: Not 39all similarity metrics have a suitable hash functionThere is a suitable hash function for the Jaccard similarity: It is calledMin-HashingJ. Leskovec, A. Rajaraman, J. Ullman: Mining of Massive Datasets, http://www.mmds.org

Min-Hashing 40Imagine the rows of the boolean matrix permuted under randompermutation πJ. Leskovec, A. Rajaraman, J. Ullman: Mining of Massive Datasets, http://www.mmds.org

Min-Hashing 41Imagine the rows of the boolean matrix permuted under randompermutation πDefine a “hash” function hπ(C) the index of the first (in thepermuted order π) row in which column C has value 1:hπ (C) minπ π(C)J. Leskovec, A. Rajaraman, J. Ullman: Mining of Massive Datasets, http://www.mmds.org

Min-Hashing 42Imagine the rows of the boolean matrix permuted under randompermutation πDefine a “hash” function hπ(C) the index of the first (in thepermuted order π) row in which column C has value 1:hπ (C) minπ π(C)Use several (e.g., 100) independent hash functions (that is,permutations) to create a signature of a columnJ. Leskovec, A. Rajaraman, J. Ullman: Mining of Massive Datasets, http://www.mmds.org

Min-Hashing 43Imagine the rows of the boolean matrix permuted under randompermutation πDefine a “hash” function hπ(C) the index of the first (in thepermuted order π) row in which column C has value 1:hπ (C) minπ π(C)Use several (e.g., 100) independent hash functions (that is,permutations) to create a signature of a columnJ. Leskovec, A. Rajaraman, J. Ullman: Mining of Massive Datasets, http://www.mmds.org

Zoo example (shingle size k 1)Universe{ dog, cat, lion, tiger, mouse}[ cat, mouse, lion, dog, tiger][ lion, cat, mouse, dog, tiger]A { mouse, lion }44J. Leskovec, A. Rajaraman, J. Ullman: Mining of Massive Datasets, http://www.mmds.org

Zoo example (shingle size k 1)Universe{ dog, cat, lion, tiger, mouse}[ cat, mouse, lion, dog, tiger][ lion, cat, mouse, dog, tiger]A { mouse, lion }45mh1(A) min ({mouse, lion } ) mousemh2(A) min ({ mouse, lion } ) lionJ. Leskovec, A. Rajaraman, J. Ullman: Mining of Massive Datasets, http://www.mmds.org

Key FactFor two sets A, B, and a min-hash function mhi():Unbiased estimator for Sim using K hashes (notation policy – thisis a different K from size of shingle)46J. Leskovec, A. Rajaraman, J. Ullman: Mining of Massive Datasets, http://www.mmds.org

Min-Hashing ExamplePermutation π47Input matrix (Shingles x ture matrix M2121J. Leskovec, A. Rajaraman, J. Ullman:Mining of Massive Datasets, http://www.mmds.org

Min-Hashing Example2nd element of the permutationis the first to map to a 1Permutation π48Input matrix (Shingles x ture matrix M2121J. Leskovec, A. Rajaraman, J. Ullman:Mining of Massive Datasets, http://www.mmds.org

Min-Hashing ExamplePermutation π49Input matrix (Shingles x Documents)Signature matrix M2 4101021213 210017 1010121416 301011 601015 710104 51010J. Leskovec, A. Rajaraman, J. Ullman:Mining of Massive Datasets, http://www.mmds.org

Min-Hashing Example2nd element of the permutationis the first to map to a 1Permutation π50Input matrix (Shingles x Documents)Signature matrix M2 4101021213 210017 1010121416 301011 601015 710104 510104th element of the permutationis the first to map to a 1J. Leskovec, A. Rajaraman, J. Ullman:Mining of Massive Datasets, http://www.mmds.org

Min-Hashing ExamplePermutation π51Input matrix (Shingles x Documents)Signature matrix M2 4 3101021213 2 410017 1 7010121416 3 2010112121 6 601015 7 110104 5 51010J. Leskovec, A. Rajaraman, J. Ullman:Mining of Massive Datasets, http://www.mmds.org

Note: Another (equivalent) way is tostore row indexesor raw shingles(e.g. mouse, lion):Min-Hashing Example1265341165342nd element of the permutationis the first to map to a 1Permutation π52Input matrix (Shingles x Documents)Signature matrix M2 4 3101021213 2 410017 1 7010121416 3 2010112121 6 601015 7 110104 5 510104th element of the permutationis the first to map to a 1J. Leskovec, A. Rajaraman, J. Ullman:Mining of Massive Datasets, http://www.mmds.org

The Min-Hash Property0000Choose a random permutation πClaim: Pr[hπ(C1) hπ(C2)] sim(C1, C2)Why?11000110 One of the two cols had to have 1 atposition y53J. Leskovec, A. Rajaraman, J. Ullman: Mining of Massive Datasets, http://www.mmds.org

The Min-Hash Property0000Choose a random permutation πClaim: Pr[hπ(C1) hπ(C2)] sim(C1, C2)Why?11000110 Let X be a doc (set of shingles), y X is a shingleOne of the two cols had to have 1 atposition y54J. Leskovec, A. Rajaraman, J. Ullman: Mining of Massive Datasets, http://www.mmds.org

The Min-Hash Property0000Choose a random permutation πClaim: Pr[hπ(C1) hπ(C2)] sim(C1, C2)Why?11000110 Let X be a doc (set of shingles), y X is a shingleThen: Pr[π(y) min(π(X))] 1/ X It is equally likely that any y X is mapped to the min elementOne of the two cols had to have 1 atposition y55J. Leskovec, A. Rajaraman, J. Ullman: Mining of Massive Datasets, http://www.mmds.org

The Min-Hash Property0000Choose a random permutation πClaim: Pr[hπ(C1) hπ(C2)] sim(C1, C2)Why?11000110 Let X be a doc (set of shingles), y X is a shingleThen: Pr[π(y) min(π(X))] 1/ X 56It is equally likely that any y X is mapped to the min elementLet y be s.t. π(y) min(π(C1 C2))Then either:π(y) min(π(C1)) if y C1 , orπ(y) min(π(C2)) if y C2One of the two cols had to have 1 atposition yJ. Leskovec, A. Rajaraman, J. Ullman: Mining of Massive Datasets, http://www.mmds.org

The Min-Hash Property0000Choose a random permutation πClaim: Pr[hπ(C1) hπ(C2)] sim(C1, C2)Why?11000110 Let X be a doc (set of shingles), y X is a shingleThen: Pr[π(y) min(π(X))] 1/ X 57It is equally likely that any y X is mapped to the min elementLet y be s.t. π(y) min(π(C1 C2))Then either:π(y) min(π(C1)) if y C1 , orπ(y) min(π(C2)) if y C2One of the two cols had to have 1 atposition ySo the prob. that both are true is the prob. y C1 C2Pr[min(π(C1)) min(π(C2))] C1 C2 / C1 C2 sim(C1, C2)J. Leskovec, A. Rajaraman, J. Ullman: Mining of Massive Datasets, http://www.mmds.org

The Min-Hash Property (Take 2: simpler proof) Choose a random permutation πClaim: Pr[hπ(C1) hπ(C2)] sim(C1, C2)Why? Given a set X, the probability that any one element is the minhash under π is 1/ X (0) It is equally likely that any y X is mapped to the min elementGiven a set X, the probability that one of any k elements is themin-hash under π is k/ X (1) For C1 C2, the probability that any element is the min-hashunder π is 1/ C1 C2 (from 0) (2) For any C1 and C2, the probability of choosing the same min-hashunder π is C1 C2 / C1 C2 from (1) and (2) 58

Similarity for Signatures 59We know: Pr[hπ(C1) hπ(C2)] sim(C1, C2)Now generalize to multiple hash functionsThe similarity of two signatures is the fraction of the hash functions inwhich they agreeNote: Because of the Min-Hash property, the similarity of columns isthe same as the expected similarity of their signaturesJ. Leskovec, A. Rajaraman, J. Ullman: Mining of Massive Datasets, http://www.mmds.org

Min-Hashing ExamplePermutation π60Input matrix (Shingles x Documents)Signature matrix M2 4 3101021213 2 410017 1 7010121416 3 2010112121 6 601015 7 110104 5 51010Similarities:1-32-4 1-2Col/Col 0.75 0.75 0Sig/Sig 0.67 1.00 03-400J. Leskovec, A. Rajaraman, J. Ullman: Mining of Massive Datasets, http://www.mmds.org

Min-Hash Signatures Pick K 100 random permutations of the rowsThink of sig(C) as a column vectorsig(C)[i] according to the i-th permutation, the index of the firstrow that has a 1 in column Csig(C)[i] min (πi(C)) 61Note: The sketch (signature) of document C is small 𝟏𝟏𝟏𝟏𝟏𝟏 bytes!We achieved our goal! We “compressed” long bit vectors intoshort signaturesJ. Leskovec, A. Rajaraman, J. Ullman: Mining of Massive Datasets, http://www.mmds.org

Implementation Trick Permuting rows even once is prohibitiveApproximate Linear Permutation HashingPick K independent hash functions (use a, b below) Apply the idea on each column (document) for each hash function and get minhash signatureHow to pick a randomhash function h(x)?Universal hashing:ha,b(x) ((a·x b) mod p) mod Nwhere:a,b random integersp prime number (p N)62J. Leskovec, A. Rajaraman, J. Ullman: Mining of Massive Datasets, http://www.mmds.org

Summary: 3 Steps Shingling: Convert documents to sets We used hashing to assign each shingle an IDMin-Hashing: Convert large sets to short signatures, whilepreserving similarity Weused similarity preserving hashing to generate signatures withproperty Pr[hπ(C1) hπ(C2)] sim(C1, C2) We63used hashing to get around generating random permutationsJ. Leskovec, A. Rajaraman, J. Ullman: Mining of Massive Datasets, http://www.mmds.org

64Backup slides

Sequential Pattern Mining in Vertical Data Format:The SPADE Algorithm A sequence database is mapped to: SID, EID Grow the subsequences (patterns) one item at a time by Apriori candidate generationSIDSequence1 a(abc)(ac)d(cf) 2 (ad)c(bc)(ae) 3 (ef)(ab)(df)cb 4 eg(af)cbc min sup 2Ref: SPADE (SequentialPAttern Discovery usingEquivalent Class) [M. Zaki2001]65

PrefixSpan: A Pattern-Growth ApproachSID 66Sequence10 a(abc)(ac)d(cf) 20 (ad)c(bc)(ae) 30 (ef)(ab)(df)cb 40 eg(af)cbc min sup 2PrefixSuffix (Projection) a (abc)(ac)d(cf) aa ( bc)(ac)d(cf) ab ( c)(ac)d(cf) Prefix and suffix Given a(abc)(ac)d(cf) Prefixes: a , aa , a(ab) , a(abc) , Suffix: Prefixes-basedprojection PrefixSpan Mining: Prefix Projections Step 1: Find length-1 sequential patterns a , b , c , d , e , f Step 2: Divide search space and mine each projected DB a -projected DB,PrefixSpan (Prefix-projected b -projected DB,Sequential pattern mining) Pei, et al. @TKDE’04 f -projected DB,

PrefixSpan: Mining Prefix-Projected DBsSIDSequence10 a(abc)(ac)d(cf) 20 (ad)c(bc)(ae) 30 (ef)(ab)(df)cb 40 eg(af)cbc prefix a Length-2 sequentialpatterns aa , ab , (ab) , ac , ad , af (abc)(ac)d(cf) ( d)c(bc)(ae) ( b)(df)cb ( f)cbc prefix aa 67Length-1 sequential patterns a , b , c , d , e , f prefix c , , f prefix b a -projected DB aa -projected DBmin sup 2prefix af af -projected DB b -projected DB Major strength of PrefixSpan: No candidate subseqs. to be generated Projected DBs keep shrinking

Consideration:Pseudo-Projection vs. Physical PrImplementation ojection Major cost of PrefixSpan: Constructing projected DBs When DB can be held in main memory, use pseudo projection No physically copying suffixes Pointer to the sequence Offset of the suffixs a(abc)(ac)d(cf) a s a : ( , 2) Physical projectionSuggested approach: (abc)(ac)d(cf) ab But if it does not fit in memory 68Suffixes largely repeating in recursive projected DBss ab : ( , 5) Integration of physical and pseudo-projection Swapping to pseudo-projection when the data fits in memory ( c)(ac)d(cf)

CloSpan: Mining Closed Sequential Patterns 69A closed sequential pattern s: There exists no superpattern s’ such that s’ כ s, and s’ ands have the same supportWhich ones are closed? abc : 20, abcd :20, abcde : 15Why directly mine closed sequential patterns? Reduce # of (redundant) patterns Attain the same expressive powerProperty P1: If s כ s1, s is closed iff two project DBs have the samesizeExplore Backward Subpattern and Backward Superpatternpruning to prune redundant search spaceGreatly enhances efficiency (Yan, et al., SDM’03)

CloSpan: When Two Projected DBs Have the Same Size If s כ s1, s is closed iff two project DBs have the same size When two projected sequence DBs have the same size? Here is one example:IDSequence1 aefbcg 2 afegb(ac) 3 (af)ea a efbcg fegb(ac) af Backward subpattern pruning bcg egb(ac) ea 70 ( f)ea Only need to keepsize 12 (includingparentheses)Backward superpattern pruning b min sup 2 f e cg fbcg bcg (ac) gb(ac) egb(ac) a ea size 6) b cg (ac)

Chapter 7 : Advanced Frequent Pattern Mining Mining Diverse Patterns Sequential Pattern Mining Constraint-Based Frequent Pattern Mining Graph Pattern Mining Pattern Mining Application: Mining Software Copy-and-Paste Bugs Summary71

Constraint-Based Pattern Mining72 Why Constraint-Based Mining? Different Kinds of Constraints: Different Pruning Strategies Constrained Mining with Pattern Anti-Monotonicity Constrained Mining with Pattern Monotonicity Constrained Mining with Data Anti-Monotonicity Constrained Mining with Succinct Constraints Constrained Mining with Convertible Constraints Handling Multiple Constraints Constraint-Based Sequential-Pattern Mining

Why Constraint-Based Mining? Finding all the patterns in a dataset autonomously?—unrealistic! Pattern mining in practice: Often a user-guided, interactive process User directs what to be mined using a data mining query language (or a graphical userinterface), specifying various kinds of constraintsWhat is constraint-based mining? Too many patterns but not necessarily user-interested!Mine together with user-provided constraintsWhy constraint-based mining? User flexibility: User provides constraints on what to be mined Optimization: System explores such constraints for mining efficiency 73E.g., Push constraints deeply into the mining process

Various Kinds of User-Specified Constraints in Data MiningKnowledge type constraint—Specifying what kinds of knowledge to mine Data constraint—using SQL-like queries Ex.: Find products sold together in NY stores this yearDimension/level constraint—similar to projection in relational database Ex.: In relevance to region, price, brand, customer categoryInterestingness constraint—various kinds of thresholds Ex.: Strong rules: min sup 0.02, min conf 0.6, min correlation 0.7Rule (or pattern) constraint 74Ex.: Classification, association, clustering, outlier finding, The focus of this studyEx.: Small sales (price 10) triggers big sales (sum 200)

TIDPattern Space Pruning with Pattern Anti-MonotonicityTransaction10a, b, c, d, f, h20b, c, d, f, g, h 30b, c, d, f, gIf an itemset S violates constraint c, so does any of its superset40a, c, e, f, g That is, mining on itemset S can be terminatedmin sup 275 A constraint c is anti-monotone Ex. 1: c1: sum(S.price) v is anti-monotone Ex. 2: c2: range(S.profit) 15 is anti-monotoneItemPriceProfita10040 Itemset ab violates c2 (range(ab) 40)b400 So does every superset of abc150 20 Ex. 3. c3: sum(S.Price) v is not anti-monotoned35 15 Ex. 4. Is c4: support(S) σ anti-monotone?e55 30f45 10g8020h105 Yes! Apriori pruning is essentially pruning with an anti-monotonic constraint!Note: item.price 0Profit can be negative

Pattern Monotonicity and Its Roles TIDTransaction10a, b, c, d, f, h20b, c, d, f, g, h30b, c, d, f, g40a, c, e, f, gmin sup 276ItemPriceProfita10040b400c150 20d35 15e55 30f45 10g8020h105A constraint c is monotone: If an itemset S satisfies theconstraint c, so does any of its superset That is, we do not need to check c in subsequent mining Ex. 1: c1: sum(S.Price) v is monotone Ex. 2: c2: min(S.Price) v is monotone Ex. 3: c3: range(S.profit) 15 is monotone Itemset ab satisfies c3 So does every superset of abNote: item.price 0Profit can be negative

Data Space Pruning with Data Anti-MonotonicityTIDTransaction10a, b, c, d, f, h20b, c, d, f, g, h30b, c, d, f, g40a, c, e, f, g min sup 277ItemPriceProfita10040b400c150 20d35 15e55 30f45 10g8020h105 A constraint c is data anti-monotone: In the mining process, if a data entry tcannot satisfy a pattern p under c, t cannot satisfy p’s superset either Data space pruning: Data entry t can be prunedEx. 1: c1: sum(S.Profit) v is data anti-monotone Let constraint c1 be: sum(S.Profit) 25 T30: {b, c, d, f, g} can be removed since none of their combinations canmake an S whose sum of the profit is 25Ex. 2: c2: min(S.Price) v is data anti-monotone Consider v 5 but every item in a transaction, say T50 , has a price higherthan 10Ex. 3: c3: range(S.Profit) 25 is data anti-monotoneNote: item.price 0Profit can be negative

Expressing Patterns in Compressed Form: Closed Patterns How to handle such a challenge?Solution 1: Closed patterns: A pattern (itemset) X is closed if X is frequent, andthere exists no super-pattern Y

20 Compressing Shingles To compress long shingles, we can hash them to (say) 4 bytes Like a Code Book If #shingles manageable Simple dictionary suffices Doc represented by the set of hash/dict. values of its k-shingles Idea: Two documents could (rarely) appear to have shingles in common, when in fact only the hash- values were shared J. Leskovec, A. Rajaraman, J. Ullman: Mining of Massive .