Transcription

Neural tuning and representational geometry

Nikolaus Kriegeskorte (1, 2, 3) *† and Xue-Xin Wei (4, 5, 6, 7) *†

1) Zuckerman Mind Brain Behavior Institute, Columbia University, New York, NY, USA
2) Department of Psychology, Columbia University, New York, NY, USA
3) Department of Neuroscience, Columbia University, New York, NY, USA
4) Department of Neuroscience, University of Texas at Austin, Austin, TX, USA
5) Department of Psychology, University of Texas at Austin, TX, USA
6) Center for Perceptual Systems, University of Texas at Austin, TX, USA
7) Institute for Neuroscience, University of Texas at Austin, TX, USA
* co-first authors; † co-senior authors. Listed alphabetically.
Correspondence: nk2765@columbia.edu; weixx@utexas.edu

arXiv:2104.09743v1 [q-bio.NC] 20 Apr 2021

Abstract

A central goal of neuroscience is to understand the representations formed by brain activity patterns and their connection to behavior. The classical approach is to investigate how individual neurons encode the stimuli and how their tuning determines the fidelity of the neural representation. Tuning analyses often use the Fisher information to characterize the sensitivity of neural responses to small changes of the stimulus. In recent decades, measurements of large populations of neurons have motivated a complementary approach, which focuses on the information available to linear decoders. The decodable information is captured by the geometry of the representational patterns in the multivariate response space. Here we review neural tuning and representational geometry with the goal of clarifying the relationship between them. The tuning induces the geometry, but different sets of tuned neurons can induce the same geometry. The geometry determines the Fisher information, the mutual information, and the behavioral performance of an ideal observer in a range of psychophysical tasks.
We argue that future studies can benefit from considering both tuning and geometry to understand neural codes and reveal the connections between stimulus, brain activity, and behavior.

1 Introduction

Brain computation can be understood as the transformation of representations across stages of processing [1]. Information about the world enters through the senses, is encoded and successively re-coded so as to extract behaviorally relevant information and transform its representational format to enable the inferences and decisions needed for successful action. Neuroscientists often focus on the code in one particular neural population at a time (let’s call it area X), summarily referring to the processes producing the code in X as the encoder, and the processes exploiting the code in X as the decoder [2].

The neural code in our area of interest X is classically characterized in terms of the tuning of individual neurons. A tuning curve describes the dependence of a neuron’s firing on a particular variable thought to be represented by the brain. Like a radio tuned to a particular frequency to select a station, a "tuned" neuron [3] may selectively respond to stimuli within a particular band of some stimulus variable, which could be a spatial or temporal frequency, or some other property of the stimulus such as its orientation, position, or depth [3, 4, 5, 6]. As a classic example, the work by Hubel and Wiesel [7, 8, 9] demonstrated that the firing rate of many V1 neurons is systematically modulated by the retinal position and orientation of an edge visually presented to a cat. The dependence was well described by a bell-shaped tuning curve [4, 5, 10, 6, 11]. Tuning provides a metaphor and the tuning curve a quantitative description of a neuron’s response as a function of a particular stimulus property. A set of tuning curves can be used to define a neural population code [12, 13, 14, 15, 16]. Consider the problem of encoding a circular variable, such as the orientation of an edge segment, with a population of neurons.
A canonical model assumes that each neuron has a (circular) Gaussian tuning curve with a different preferred orientation. The preferred orientations are distributed over the entire cycle, so the neural population can collectively encode any orientation.

Over the past several decades, systems neuroscience has characterized neural tuning in different brain regions and species for a variety of stimulus variables and modalities (e.g., [8, 17, 18, 19, 20, 21, 22, 12, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34]). We have also accumulated much knowledge on how tuning changes with spatial context [35, 36, 37], temporal context [38, 39, 40, 41, 42, 43, 44, 45, 46], internal states of the animal such as attention [47, 48, 49], and learning [50, 51, 52]. The tuning curve is a descriptive tool, ideal for characterizing strong dependencies, as found in sensory cortical areas, of individual neurons’ responses on environmental variables of behavioral importance. However, when the tuning curve is reified as a computational mechanism, it can detract from non-stimulus-related influences on neuronal activity, such as the spatial and temporal environmental context and the internal network context, where information is encoded in the population activity, and computations are performed in a nonlinear dynamical system [53, 54] whose trial-to-trial variability may reflect stochastic computations [55]. Despite their limitations, tuning functions continue to provide a useful first-order description of how individual neurons encode behaviorally relevant information in sensory, cognitive, and motor processes.

A complementary perspective is that of a downstream neuron that receives input from a neural population and must read out particular information toward behaviorally relevant inferences and decisions. This decoding perspective addresses the question of what information can be read out from a population of neurons using a simple biologically plausible decoder, such as a linear decoder. Decoding analyses have gained momentum more recently, as our ability to record from a large number of channels (e.g., neurons or voxels) has grown with advances in cell recording [56, 57, 58] and functional imaging [59, 60]. A linear decoding analysis reveals how well a particular variable can be read out by projecting the response pattern onto a particular axis in the population response space. A more general characterization of a neural code is provided by the representational geometry [61, 62, 63, 64, 65, 66, 67, 68, 69, 70, 71, 72, 73, 74]. The representational geometry captures all possible projections of the population response patterns and characterizes all the information available to linear and nonlinear decoders [75]. The representational geometry is defined by all pairwise distances in the representational space among the response patterns corresponding to a set of stimuli. The distances can be assembled in a representational dissimilarity matrix (RDM), indexed horizontally and vertically by the stimuli, where we use “dissimilarity” as a more general term that includes estimators that are not distances in the mathematical sense [75]. The RDM abstracts from the individual neurons, providing a sufficient statistic invariant to permutations of the neurons and, more generally, to rotations of the ensemble of response patterns. These invariances enable us to compare representations among regions, individuals, and species, and between brains and computational models [76, 77, 70, 78].

The neural tuning determines the representational geometry, so the two approaches are deeply related. Nevertheless, they were originally developed in disconnected literatures.
Tuning analysis has been prevalent in neuronal recording studies, often using simple parametric stimuli and targeting lower-level representations. The analysis of representational geometry has been prevalent in the context of large-scale brain-activity measurements, especially in functional MRI (fMRI) [79], often using natural stimuli and targeting higher-level representations. In recent years, there has been growing cross-pollination between these literatures. Notably, encoding models for simple parametric [80] and natural stimuli [81, 82] have been used to explain single-voxel fMRI responses, and a number of studies have characterized neural recordings in terms of representational geometry [67, 83, 84, 70, 71].

Tuning and geometry are closely related, and the respective communities using these methods have drawn from each other. Here we clarify the mathematical relationship of tuning and geometry and attempt to provide a unifying perspective. We review the literature and explain how tuning and geometry relate to the quantification of information-theoretical quantities such as the Fisher information (FI) [85] and the mutual information (MI) [86]. Examples from the literature illustrate how the dual perspective of tuning and geometry illuminates the content, format, and computational roles of neural codes.
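The claim that the RDM is invariant to permutations of the neurons and to rotations of the ensemble of response patterns can be checked numerically. The following is a minimal Python/NumPy sketch, assuming Euclidean distances and random Gaussian patterns standing in for real data; the helper `euclidean_rdm` and all sizes are hypothetical choices for illustration only.

```python
import numpy as np

def euclidean_rdm(patterns):
    """Pairwise Euclidean distances between the rows (stimuli) of a stimuli x neurons array."""
    diff = patterns[:, None, :] - patterns[None, :, :]
    return np.sqrt((diff ** 2).sum(axis=-1))

rng = np.random.default_rng(0)
n_stimuli, n_neurons = 8, 20
# Hypothetical response patterns: one row per stimulus, one column per neuron.
patterns = rng.normal(size=(n_stimuli, n_neurons))

# Relabel the neurons by permuting the columns.
permuted = patterns[:, rng.permutation(n_neurons)]

# Rotate the ensemble of patterns with a random orthogonal matrix (QR of a Gaussian matrix).
q, _ = np.linalg.qr(rng.normal(size=(n_neurons, n_neurons)))
rotated = patterns @ q

rdm_original = euclidean_rdm(patterns)
print(np.allclose(rdm_original, euclidean_rdm(permuted)))  # True: RDM unchanged
print(np.allclose(rdm_original, euclidean_rdm(rotated)))   # True: RDM unchanged
print(np.allclose(patterns, rotated))                      # False: individual neurons' responses changed
```

The last line illustrates the other side of the invariance: the rotation changes what each neuron does, while the geometry stays the same.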

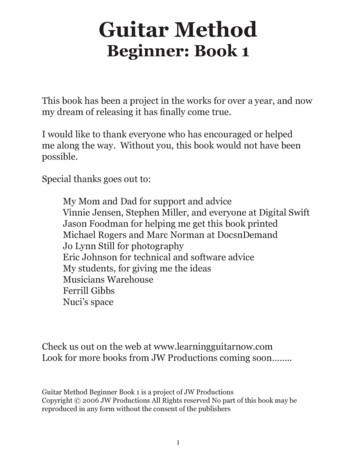

[Figure 1 panels. Rows: (a) homogeneous, narrow tuning; (b) homogeneous, wide tuning; (c) non-uniform stimulus gain; (d) rigid transformation of the representation in (b). Columns: tuning curves (activity vs. orientation [rad]); population response (neurons vs. orientation); Fisher information [a.u.]; RDM; MDS; stimulus vs. representational distance (orientation disparity); variance explained vs. # of PCs.]

Figure 1: Neuronal tuning determines representational geometry. Each row shows a specific configuration of the neural code for encoding a circular variable, such as orientation. Each column shows a particular way to characterize the neural code. The first two columns show traditional tuning-based characterizations. The small inset on top of the population response matrix plots the summed population activity as a function of the stimulus orientation. The third column plots the Fisher information of the neural population as a function of the stimulus (arbitrary units), which provides a local measure of the coding fidelity. Specifically, the inverse square root of the Fisher information gives a lower bound on the discrimination threshold in fine discrimination tasks. The last four columns show the geometry of the neural code, using a representational dissimilarity matrix (RDM), 3D multidimensional scaling (MDS), and the relation between distance in the stimulus space (horizontal axis) and representational distance (vertical axis). The last column plots the proportion of variance explained by individual principal components. This reflects the dimensionality of the representation. Several general insights emerge from this framework: (1) Tuning width can modulate the representational geometry without changing the allocation of Fisher information (compare rows a and b). (2) Fisher information only provides a local measure of the geometry.
It does not fully determine the geometry (compare a, b, d). (3) Non-uniform stimulus gain (c) can change the representational precision (as reflected in the Fisher information) for particular parts of the stimulus space. (4) The same representation can manifest itself in different neural response patterns (compare b and d). The configuration in (d) is obtained by a rigid transformation (shift and rotation) of the codes in (b).
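The contrast between rows a and b of Fig. 1 can be reproduced qualitatively with a short simulation. This sketch assumes noise-free von Mises tuning on orientation (period π), 60 neurons with uniformly spaced preferred orientations, and concentration parameters κ = 20 (narrow) and κ = 1 (wide); these values are illustrative assumptions, not the settings used for the figure. It reports the ratio of representational distances for 30° and 90° orientation differences and the fraction of variance captured by the first two principal components.

```python
import numpy as np

def von_mises_population(thetas, n_neurons, kappa, gain=1.0):
    """Noise-free responses (stimuli x neurons) of von Mises orientation tuning curves.

    Preferred orientations are spread uniformly over [0, pi); larger kappa = narrower tuning.
    """
    prefs = np.linspace(0, np.pi, n_neurons, endpoint=False)
    # Doubling the angle maps the orientation cycle [0, pi) onto a full circle.
    return gain * np.exp(kappa * (np.cos(2 * (thetas[:, None] - prefs[None, :])) - 1))

def euclidean_rdm(patterns):
    diff = patterns[:, None, :] - patterns[None, :, :]
    return np.sqrt((diff ** 2).sum(axis=-1))

def pc_variance_fractions(patterns):
    """Fraction of variance explained by each principal component of the pattern set."""
    centered = patterns - patterns.mean(axis=0)
    s = np.linalg.svd(centered, compute_uv=False)
    return s ** 2 / np.sum(s ** 2)

thetas = np.linspace(0, np.pi, 120, endpoint=False)  # 1.5 degree steps
i30, i90 = 20, 60                                    # indices of 30 and 90 degrees

results = {}
for label, kappa in [("narrow", 20.0), ("wide", 1.0)]:
    R = von_mises_population(thetas, n_neurons=60, kappa=kappa)
    rdm = euclidean_rdm(R)
    ratio = rdm[0, i30] / rdm[0, i90]                # d(0, 30 deg) / d(0, 90 deg)
    top2 = pc_variance_fractions(R)[:2].sum()
    results[label] = (ratio, top2)
    print(f"{label}: d(30deg)/d(90deg) = {ratio:.2f}, variance in first 2 PCs = {top2:.2f}")
```

With these settings, the narrow code should give a distance ratio close to 1 (a "splitter" code) and spread its variance over many components, whereas the wide code should give a clearly smaller ratio and concentrate most of the variance in the first two components, approximating a circle.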

BOX 1: Mathematical definitions

Entropy. The entropy of a random variable r with a probability density p(r) is a measure of our uncertainty about the variable and is defined as:

$$H(r) = -\int p(r)\,\log p(r)\,dr \tag{1}$$

The conditional entropy of a random variable r given another random variable θ is defined as:

$$H(r \mid \theta) = -\iint p(r, \theta)\,\log p(r \mid \theta)\,dr\,d\theta \tag{2}$$

Mutual information. The mutual information (MI) I(r; θ) between response r and stimulus θ measures the information that the response conveys about the stimulus (and vice versa). The MI can be thought of as the shared entropy, or entropy overlap, that is, the number of bits by which the entropy H(r, θ) of the joint distribution of both variables is smaller than the sum of their individual entropies H(r) and H(θ):

$$I(r; \theta) = H(r) + H(\theta) - H(r, \theta) \tag{3}$$

Equivalently, the MI is the reduction of the entropy of one of the variables when we learn about the other:

$$I(r; \theta) = H(r) - H(r \mid \theta) = H(\theta) - H(\theta \mid r) \tag{4}$$

Note that MI depends on the distribution of both variables. In more explicit terms, the MI can be expressed as:

$$I(r; \theta) = \iint p(r, \theta)\,\log \frac{p(r, \theta)}{p(r)\,p(\theta)}\,dr\,d\theta. \tag{5}$$

Fisher information. The Fisher information (FI) of a neural population code is a function of the stimulus. For each location in stimulus space, the FI reflects how sensitive the code is to local changes of the stimulus. Assume a population of N neurons encoding stimulus variable θ, with tuning curves f_i. The population response is denoted as r. The FI can be defined as

$$J(\theta) = -\int \left[\frac{d^2}{d\theta^2} \log p(r \mid \theta)\right] p(r \mid \theta)\,dr. \tag{6}$$

If θ is a vector, rather than a scalar, description of the stimulus, J(θ) becomes the FI matrix, characterizing the magnitude of the response pattern change for all directions in stimulus space. The FI of a neuron is determined by the neuron’s tuning curve and noise characteristics.
Assuming Poisson spiking and tuning curve f(θ), where θ is the encoded stimulus property, the Fisher information J(θ) can be computed as

$$J(\theta) = \frac{f'(\theta)^2}{f(\theta)}. \tag{7}$$

Under the assumption of additive stimulus-independent Gaussian noise (with noise variance equal to 1), the Fisher information is simply the squared slope of the tuning curve:

$$J(\theta) = f'(\theta)^2. \tag{8}$$

For a neural population, if neurons have independent noise, the population FI is simply the sum of the FI for individual neurons. With correlated noise, evaluating the FI is more complicated, as it depends on the correlation structure. FI depends on the specific parameterization of the stimulus space. More precisely, when θ is reparameterized as θ̃, the following relation holds:

$$\sqrt{J(\theta)}\,d\theta = \sqrt{J(\tilde\theta)}\,d\tilde\theta. \tag{9}$$

Discrimination threshold. The inverse square root of the Fisher information provides a lower bound on the discrimination threshold δ(θ) in fine discrimination tasks:

$$\delta(\theta) \ge \frac{C}{\sqrt{J(\theta)}}, \tag{10}$$

where C is a constant dependent on the definition of the psychophysical discrimination threshold.

Variance-stabilizing transformation. For independent Poisson noise, we can apply a square-root transformation to an individual neuron’s response r_i [139, 140]. The resulting response will have approximately constant variance, independent of firing rate. In general, assuming the variance of r_i is a smooth function g(·) of its mean µ, one could first stabilize the variance by applying the following transformation to obtain r̃_i, and the statements below would apply to r̃_i:

$$\tilde r_i = \int^{r_i} \frac{1}{\sqrt{g(\mu)}}\,d\mu \tag{11}$$
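Several Box 1 quantities can be evaluated numerically. The sketch below, in Python/NumPy, computes the MI of a small discrete joint distribution via both Eq. (3) and Eq. (5) (with sums in place of integrals), and the population FI of independent Poisson neurons from Eq. (7), summed across neurons, together with the bound of Eq. (10). The binary stimulus/response example, the von Mises tuning, the gain profile, and the choice C = 1 are hypothetical assumptions for illustration.

```python
import numpy as np

# --- Mutual information of a discrete joint distribution (Eqs. 1-5, sums instead of integrals, in bits) ---
def entropy(p):
    p = p[p > 0]                       # convention: 0 * log 0 = 0
    return -np.sum(p * np.log2(p))

def mutual_information(joint):
    """joint[r, theta] = p(r, theta). Returns I(r; theta) computed via Eq. 3 and via Eq. 5."""
    p_r, p_theta = joint.sum(axis=1), joint.sum(axis=0)
    mi_eq3 = entropy(p_r) + entropy(p_theta) - entropy(joint.ravel())
    nz = joint > 0
    independent = np.outer(p_r, p_theta)
    mi_eq5 = np.sum(joint[nz] * np.log2(joint[nz] / independent[nz]))
    return mi_eq3, mi_eq5

# Hypothetical example: two equiprobable stimuli, binary response.
prior = np.array([0.5, 0.5])                  # p(theta)
likelihood = np.array([[0.9, 0.1],            # row r=0: p(r=0 | theta)
                       [0.1, 0.9]])           # row r=1: p(r=1 | theta)
joint = likelihood * prior[None, :]           # p(r, theta) = p(r | theta) p(theta)
mi_a, mi_b = mutual_information(joint)

# --- Fisher information of independent Poisson neurons (Eq. 7 summed over neurons; Eq. 10) ---
def tuning(theta, prefs, kappa, gains):
    """Hypothetical von Mises tuning on orientation (period pi); returns rates, stimuli x neurons."""
    d = 2 * (np.atleast_1d(theta)[:, None] - prefs[None, :])
    return gains * np.exp(kappa * (np.cos(d) - 1))

def tuning_slope(theta, prefs, kappa, gains):
    """Analytic derivative of `tuning` with respect to theta."""
    d = 2 * (np.atleast_1d(theta)[:, None] - prefs[None, :])
    return tuning(theta, prefs, kappa, gains) * (-2 * kappa * np.sin(d))

def poisson_population_fi(theta, prefs, kappa, gains):
    f = tuning(theta, prefs, kappa, gains)
    fp = tuning_slope(theta, prefs, kappa, gains)
    return np.sum(fp ** 2 / f, axis=1)        # independent noise: FI adds across neurons

prefs = np.linspace(0, np.pi, 60, endpoint=False)
thetas = np.linspace(0, np.pi, 90, endpoint=False)
kappa = 1.0
J_uniform = poisson_population_fi(thetas, prefs, kappa, gains=np.ones(60))
J_nonuniform = poisson_population_fi(thetas, prefs, kappa, gains=1 + 0.5 * np.cos(2 * prefs))
threshold_bound = 1.0 / np.sqrt(J_uniform)     # Eq. 10 with C = 1 (arbitrary units)

print(f"MI via Eq. 3: {mi_a:.3f} bits, via Eq. 5: {mi_b:.3f} bits")
print(f"relative FI range, uniform gains: {(J_uniform.max() - J_uniform.min()) / J_uniform.mean():.1e}")
print(f"relative FI range, non-uniform gains: {(J_nonuniform.max() - J_nonuniform.min()) / J_nonuniform.mean():.2f}")
```

For the uniform population, J(θ) is essentially constant across orientations, in line with rows a and b of Fig. 1; letting the gains depend on preferred orientation (the `J_nonuniform` case) makes the FI vary with the stimulus, in the spirit of row c.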

2 A dual perspective

2.1 Tuning determines mutual information and Fisher information

A tuning curve tells us how the mean activity level of an individual neuron varies depending on a stimulus property. The tuning curve, thus, defines how the stimulus property is encoded in the neuron’s activity. Conversely, knowing the activity of the neuron may enable us to decode the stimulus property. How informative the neural response is depends on the shape of the tuning curve. A flat tuning curve, often described as a lack of tuning, would render the response uninformative. If the neuron is tuned, its response contains some information about the stimulus property. However, a single neuron may respond similarly to different stimuli. For example, a neuron tuned to stimulus orientation may respond equally to two stimuli differing from its preferred orientation in either direction by the same angle. The resulting ambiguity may be resolved by decoding from multiple neurons.

For a population of neurons, the tuning function f(θ) can be defined as the expected value of the response pattern r given stimulus parameter θ:

$$f(\theta) = \mathbb{E}_{r \sim p(r \mid \theta)}[r] = \mathbb{E}[r \mid \theta]. \tag{12}$$

In the special case of additive noise with mean zero, the response pattern obtains as r = f(θ) + ε, where f(θ) is the encoding model that defines the tuning function for each neuron and ε is the noise.

We can quantify the information contained in the neural code about the stimulus using the mutual information or the Fisher information, two measures that have been widely used in computational neuroscience. Mutual information (MI) is a key quantity of information theory that measures how much information one random variable contains about another random variable [86]. The MI I(r; θ) measures the information the response r conveys about the stimulus θ (Box 1).
Computing the MI requires assuming a prior p(θ) over the stimuli, because the MI is a function of the joint distribution p(r, θ) = p(r | θ) · p(θ) of stimulus and response.

Over the past few decades, MI has been used extensively in neuroscience [87, 88, 89, 90]. For example, studies have aimed to quantify the amount of information conveyed by neurons about sensory stimuli [91, 87, 90, 89, 92, 93]. MI has also been used as an objective function to be maximized, so as to derive an efficient encoding of a stimulus property in a limited number of neurons [94, 95, 96, 97, 98]. If a neural code optimizes the MI, the code must reflect the statistical structure of the environment. For example, in an MI-maximizing code, frequent stimuli should be encoded with greater precision. Once a prior over the stimuli, a functional form of the encoding, and a noise model have been specified, the joint distribution of stimulus and response is defined, and the MI can be computed. The parameters of the encoding can then be optimized to maximize MI [99, 100, 101]. If the tuning functions that maximize MI match those observed in brains, the function of conveying information about the stimulus may explain why the neurons exhibit their particular tuning [101, 102, 103, 104, 105, 106, 107, 90, 108, 109]. The applications of information theory in neuroscience have been the subject of previous reviews [88, 89, 110].

The MI provides a measure of the overall information the response conveys about the stimulus. It can be thought of as the expected value of the reduction of the uncertainty about the stimulus that an ideal observer experiences when given the response, where the expectation is across the distribution of stimuli. However, the MI does not capture how the fidelity of the encoding depends on the stimulus. Some authors have proposed stimulus-specific variants of MI [111, 112, 16, 113].

Another stimulus-specific measure of information in the neural response is the Fisher information (FI). The FI has deep roots in statistical estimation theory [85, 114] and plays a central role in the framework we propose here. The FI captures the precision with which a stimulus property is encoded in the vicinity of a particular value of the property [115, 116, 117, 95, 109]. An efficient code for a particular stimulus distribution will dedicate more resources to frequent stimuli and encode these with greater precision. The FI is a function of the stimulus and reflects the variation of the precision of the encoding across different values of the stimulus property.

A large body of work has studied how tuning parameters, such as tuning width and firing rate, are related to the FI [118, 119, 115, 120, 121, 122, 123, 124]. The FI is proportional to the squared slope of the tuning curve when the noise is Gaussian and additive (Box 1). When the noise follows a Poisson distribution, the FI is the squared slope of the tuning curve divided by the firing rate. Beyond single neurons and particular noise distributions, the FI provides a general stimulus-specific measure that characterizes the coding fidelity of a neuronal population under various noise distributions. If the neurons have independent noise, the FI sums across neurons to yield the neural-population FI. However, when the noise is correlated between neurons, the Fisher information is more difficult to compute [125, 126, 127, 128, 129, 130].
This is still an active area of research [131, 132, 133, 134, 135, 121, 136, 261], and some progress has been reviewed in [137, 138].

2.2 Tuning determines geometry

A tuning curve describes the encoding of a stimulus property in an individual neuron. When we consider a population of neurons, we can think of the response pattern across the n neurons as a point in an n-dimensional space. Let’s assume the tuning curves are continuous functions of the stimulus property. As we sweep through the values of the stimulus property, the response pattern moves along a 1-dimensional manifold in the neural response space. Now imagine that stimuli vary along d property dimensions that are all encoded in the neural responses with continuous tuning curves. As we move in the d-dimensional stimulus-property space, the response pattern moves along a d-dimensional neural response manifold (Box 2).
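The 1-dimensional response manifold traced by a pair of neurons, as in the two-neuron examples of Box 2, can be computed directly. This sketch assumes noise-free sinusoidal tuning cos(2θ) and cos(2θ − φ) for orientation θ and a phase offset φ between the two neurons (a hypothetical parameterization), samples the resulting closed response curve, and measures its aspect ratio from the singular values of the centered points.

```python
import numpy as np

def response_curve(thetas, phase_offset):
    """Responses of two neurons with sinusoidal orientation tuning (period pi), shape (stimuli, 2)."""
    x = 2 * thetas                      # orientation cycle [0, pi) mapped to [0, 2*pi)
    return np.stack([np.cos(x), np.cos(x - phase_offset)], axis=1)

def aspect_ratio(points):
    """Ratio of the longest to the shortest principal axis of a set of 2-D points."""
    centered = points - points.mean(axis=0)
    s = np.linalg.svd(centered, compute_uv=False)
    return s[0] / s[-1]

thetas = np.linspace(0, np.pi, 360, endpoint=False)
offsets_deg = [90, 120, 150, 170]
ratios = [aspect_ratio(response_curve(thetas, np.deg2rad(o))) for o in offsets_deg]
for o, r in zip(offsets_deg, ratios):
    print(f"phase offset {o:3d} deg: aspect ratio {r:.2f}")
```

Under this parameterization, an offset of 90° gives an aspect ratio of 1 (a circle), and larger offsets give increasingly elongated ellipses whose aspect ratio is sqrt((1 + |cos φ|) / (1 − |cos φ|)).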

BOX 2: Neural response manifold

A neural response manifold is a set of response patterns elicited by a range of stimuli [141, 142, 54, 143]. In neuroscience, we often think of a curved hypersurface of the neural response space within which each stimulus has its unique location. The mathematical notion of manifold implies that the set of neural response patterns is locally homeomorphic to a Euclidean space. This notion is particularly helpful when (1) the tuning curves are continuous, (2) the mapping between d-vectors of stimulus properties and n-vectors of neural responses is bijective, and (3) we neglect the noise. Under these conditions, the set of neural response patterns is homeomorphic to the particular Euclidean space spanned by the stimulus properties, and we can imagine mapping the stimulus space onto a hypersurface (which might be nonlinear, but will not intersect itself) in the multivariate response space.

Consider a pair of neurons encoding orientation with sinusoidal tuning curves. Because both tuning curves are continuous, the response will vary continuously and cyclically as the stimulus rotates. When the phases of the tuning curves are offset by 90°, the response manifold is a perfect circle (a). As the phase offset increases, the manifold gradually morphs into an ellipse with increasing aspect ratio (b, c). The shape of the manifold depends on the exact shape of the tuning curves. Panel (d) shows a crescent-like response manifold, induced by two von Mises tuning curves. For certain tuning functions, the response hypersurface may self-intersect (e). In the neighborhood of the self-intersection, the hypersurface is not homeomorphic to any Euclidean space, and thus not a manifold. However, adding one additional neuron (neuron 3 in f) disentangles the hypersurface and renders it a manifold again.
The width of bell-shaped tuning curves (g and h) substantially modulates the geometry of the manifold, with narrower tuning curves (h) leading to a manifold that is highly curved and requires a higher-dimensional linear subspace to explain most of the variance (Fig. 1, compare rows a and b, rightmost column).

These examples neglect the presence of noise. When the responses are noisy, they may still form a manifold, but not a d-dimensional manifold whose dimensions correspond to the d stimulus properties of interest. Despite these caveats, the notion of manifold appears useful, because it enables us to see the response patterns elicited by a d-dimensional space of stimuli as a d-dimensional sheet in the multivariate response space [141, 142, 53, 54, 143, 144, 84, 145].

[Box 2 figure, panels (a) to (h): response manifolds of two or three model neurons, plotted in the space of the neurons' firing rates (neuron 1, neuron 2, and, in some panels, neuron 3), with the corresponding tuning curves (firing rate vs. stimulus).]

The concept of a neural response manifold [141, 142] helps us relate a unidimensional or multidimensional stimulus space to a high-dimensional neural response space. To understand the code, we must understand the geometry of the neural response manifold. As we saw above, the FI tells us the magnitude of the response pattern change associated with a local move in stimulus space around any given location θ. To define the representational geometry, however, we need a global characterization. We need to know the magnitudes of the response pattern changes associated with arbitrary moves from any stimulus to any other stimulus. The geometry is determined by the distances in multivariate response space that separate the representations of any two stimuli.
The tuning curves define the response patterns, from which we can compute the representational distances. We could simply use the Euclidean distances d(θ_i, θ_j) for all pairs (i, j) of stimuli, which can be computed on the basis of the tuning functions alone, to define the representational geometry:

$$d(\theta_i, \theta_j) = \lVert f(\theta_i) - f(\theta_j) \rVert. \tag{13}$$
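Eq. (13), and the Mahalanobis variant of Eq. (14) used when the noise covariance Σ is known, can both be computed from a matrix of tuning-curve values. In this minimal sketch the Mahalanobis case whitens the patterns with the Cholesky factor L of Σ (Σ = LLᵀ) rather than with Σ^(−1/2); the two give identical distances because they differ only by a rotation. The tuning curves and the covariance matrix are hypothetical, chosen only for illustration.

```python
import numpy as np

def rdm(patterns, noise_cov=None):
    """Representational distance matrix for the rows (stimuli) of a stimuli x neurons array.

    noise_cov=None: Euclidean distances, Eq. (13).
    noise_cov=Sigma: Mahalanobis distances, Eq. (14), computed by whitening the patterns
    with the Cholesky factor L of Sigma (Sigma = L @ L.T); ||L^{-1} x|| equals ||Sigma^{-1/2} x||.
    """
    X = np.asarray(patterns, dtype=float)
    if noise_cov is not None:
        L = np.linalg.cholesky(noise_cov)
        X = np.linalg.solve(L, X.T).T          # each row becomes L^{-1} f(theta)
    diff = X[:, None, :] - X[None, :, :]
    return np.sqrt((diff ** 2).sum(axis=-1))

# Hypothetical example: 3 neurons with von Mises orientation tuning, 6 stimuli.
thetas = np.linspace(0, np.pi, 6, endpoint=False)
prefs = np.array([0.0, np.pi / 3, 2 * np.pi / 3])
F = np.exp(2.0 * (np.cos(2 * (thetas[:, None] - prefs[None, :])) - 1))   # rows are f(theta)

# Hypothetical positive-definite noise covariance with correlated noise between neurons.
sigma = np.array([[1.0, 0.5, 0.0],
                  [0.5, 2.0, 0.3],
                  [0.0, 0.3, 1.5]])

D_euclid = rdm(F)
D_mahal = rdm(F, sigma)
print(np.round(D_euclid, 2))
print(np.round(D_mahal, 2))
```

For independent Poisson noise, one could instead apply the square-root transform of Box 1 to the rates and then call `rdm` without a covariance matrix.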

However, we would like to define the representational geometry in a way that reflects the discriminability of the stimuli. The discriminability could be defined in various ways, for example as the accuracy of a Bayes-optimal decoder, as the accuracy or d′ of a linear decoder, or as the mutual information between stimulus and neural response. The Euclidean distance between stimulus representations is monotonically related to all these measures of the discriminability when the noise distribution is isotropic, Gaussian, and stimulus-independent. When the noise is anisotropic (and still Gaussian and stimulus-independent), we can replace the Euclidean distance with the Mahalanobis distance

$$d_{\mathrm{Mahal}}(\theta_i, \theta_j) = \sqrt{[f(\theta_i) - f(\theta_j)]^\top \Sigma^{-1} [f(\theta_i) - f(\theta_j)]} = \lVert \Sigma^{-1/2} [f(\theta_i) - f(\theta_j)] \rVert, \tag{14}$$

where Σ is the noise covariance matrix. The Mahalanobis distance will be monotonically related to the discriminability [75].

When the noise is Poisson and independent between neurons, a square-root transform can be used to stabilize the variance and render the joint noise density approximately Gaussian and isotropic (Box 1; [146]). How best to deal with non-Gaussian (e.g., Poisson) stimulus-dependent noise is an unresolved issue.

We have seen that the geometry of the representation depends on the tuning and the noise and can be characterized by a representational distance matrix. Changing the tuning will usually change the representational geometry. Consider the encoding of stimulus orientation by a population of neurons that have von Mises tuning with constant width of the tuning curves and a uniform distribution of preferred orientations. Since orientations form a cyclic set and the tuning curves are continuous, the set of representational patterns must form a cycle (Fig. 1a). However, two uniform neural population codes with different tuning widths will have distinct representational geometries.

With narrow tuning width (Fig. 1a), a given stimulus drives a sparse set of neurons.
For intuition on this narrow tuning regime, imagine a labeled-line code, where each neuron responds to a particular stimulus from a finite set (one neuron for each stimulus). As we move through the stimulus set, we drive each neuron in turn. The set of response patterns, thus, is embedded in a linear subspace of the same dimensionality as the number of neurons. Note also that narrow tuning yields a “splitter” code, i.e., a code that is equally sensitive to small and large orientation changes. Orientations differing by 30 degrees (π/6 radians) might elicit non-overlapping response patterns and would, thus, be as distant in the representational space as orientations differing by 90 degrees (π/2 radians; Fig. 1a, second-to-last column).

With wide tuning width (Fig. 1b), the geometry approximates a circle. It would be exactly a circle for two neurons with sinusoidal tuning with a π/4 shift in preferred orientation (where π is the cycle period for stimulus orientation), and in the limit of an infinite number of neurons with sinusoidal tuning (with preferred orientations drawn from a uniform distribution). For von Mises tuning curves and finite numbers of neurons, wide tuning entails a geometry approximating a circle, with most of the variance of the set of response patterns explained by the first two principal components. Wide tuning yields a “lumper” code, i.e., a code that is more sensitive to global orientation changes than to local changes.

Orientations differing by 30 degrees will elicit overlapping response patterns and would be much closer in the representational space than orientations differing by 90 degrees (Fig. 1b, second-to-last column).

If we make the gain or the width of the tuning curves non-uniform across orientations, the geometry is distorted, with stimuli spreading out in representational space in regions of high Fisher information and huddling together in regions of low Fisher information (as illustrated in the RDM and MDS in Fig. 1c).

This section explained how a set of tuning functions induces a representational geometry. We defined the geometry by its sufficient statistic, the representational distance matrix. The distances are measured after a transformation that renders the noise isotropic. We will see below that the distance matrix then captures all information contained in the code. Importantly, it also captures important aspects of the format in which the information is encoded. For example, knowing the geometry and the noise distribution enables us to predict what information can be read out from the code with a linear decoder (or in fact any given nonlinear decoder capable of a linear transform).

2.3 Geometry does not determine tuning

We have seen that the set of tuning curves determines the geometry (given the noise distribution). The converse does not hold: the geometry does not determine the set of tuning curves. To understand this, let us assume that the noise is isotropic (or has been rendered isotropic by a whitening transform). It is easy to see that rotating the ensemble of points corresponding to the representational patterns will leave the geometry unchanged. Such a rotation could simply exchange tuning curves between neurons, while leaving the set of tuning curves the same. In general, however, a rotation of the ensemble of points will substantially and qualitatively change the set of tuning curves (Fig. 1, compare b and d). The ne
