
A NEW ERA FOR VIRTUALIZATION TEAM AND STORAGE TEAM COLLABORATION

Moshe Karabelnik
Global Storage and Backup Manager
Global Telecommunication Corporate
Moshe.Karabelnik@gmail.com

Table of Contents

Introduction
How this document is built
Provisioning Storage for datastores
  Data Mover (DM) and Virtual Data Mover (VDM)
  RAID Group and Storage Pools
  RAID Types
  Fully Automated Storage Tiering for Virtual Pools (FAST VP)
  FAST Cache
  Storage for files such as templates and ISO files on datastores
Connectivity
  Multipathing in Storage Area Network (SAN) environment
  Multipathing in Network Attached Storage (NAS) environment
  Implementing local storage or using FC or FCoE, iSCSI, NFS
  NFS and iSCSI networking security
  iSCSI Authentication
  iSCSI discovery
  Storage Groups (LUN Masking) and FC Zoning
Storage and Virtualization features and technologies
  VMware vStorage APIs for Array Integration (VAAI) for SAN
  VMware vStorage APIs for Array Integration (VAAI) for NAS
  Solid State Drives (SSD) also called Enterprise Flash Drives (EFD)
  Storage Distributed Resources Scheduler (SDRS)
  Compression and Deduplication in NAS environment
  Compression in SAN environment
  FAST Clone
  Full Clone
  Cloning a virtual machine
  Virtual disk provisioning
  Virtual Provisioning of LUNs
  Virtual Provisioning in NAS environment
  vStorage API for Storage Awareness (VASA)
  Storage I/O Control (SIOC)
  Virtual Machine Swap File location
  Host Cache
  Paravirtual SCSI adapters (PVSCSI)
  N-Port ID Virtualization for RDM LUNs (NPIV)
  VMFS3 to VMFS5 upgrade
  RAW Device Mapping (RDM)
  Virtual Disk alignment
  Diagnostics partition on a shared storage
  Virtual SAN
Backup & data protection
  Backup of VMs
Monitoring and management
  Monitoring the VMware vSphere and EMC VNX environment
  Virtual Storage Integrator (VSI) Unified Storage Management (USM)
Additional products:
  PowerPath/VE
  Site Recovery Manager (SRM)
Summary - main points of collaboration
What next?
  Cloud concept and technologies:
  Software Defined Data Center (SDDC):
Appendix - References

Disclaimer: The views, processes or methodologies published in this article are those of the authors. They do not necessarily reflect EMC Corporation’s views, processes or methodologies.

2014 EMC Proven Professional Knowledge Sharing

Introduction

Not so long ago, at the beginning of the server virtualization era, things were simple: there were two teams (or two people in small implementations), the Storage team and the server Virtualization team (*). Usually, the Virtualization team asked for a specific storage capacity for a specific purpose or workload, and that was all. The Storage team created the datastore and handed it to the Virtualization team. Roles were clear for each team.

Today, the situation is much more complex. New technologies and features have emerged on both the storage side and the virtualization side; for example, Storage Distributed Resource Scheduling (SDRS), vStorage API for Array Integration (VAAI), Storage I/O Control (SIOC), Thin Provisioning, Snapshots, and Clones.

These technologies and features call into question the exact roles of the server virtualization team and the storage team, and they require much tighter interaction and collaboration between the two teams.

Examples include:
• Should we thin provision storage at the virtualization level or at the storage level?
• Should we use SIOC?
• Should we implement VAAI?
• Which of the teams should take care of clones and snapshots?

This article discusses the relevant technologies and features, explains how the storage team and the server virtualization team should collaborate on each of them, and suggests appropriate tasks for each team, both when constructing the infrastructure for the first time and on a regular basis.

This article does not go into a deep technology discussion; rather, it demonstrates and emphasizes what the Storage and Virtualization teams should collaborate on.

This article will interest any storage team member or server virtualization team member who would like to fully utilize the systems under their responsibility by enhancing collaboration with the other team.

While this article focuses on technologies and features related to EMC VNX storage systems and the VMware vSphere server virtualization environment, experienced IT professionals could adapt it to any other technology.

This article aims to get Storage and Virtualization team members to think about new and existing technologies and how the teams should collaborate regarding those technologies.

It’s easy to work as in the old days and not communicate between teams. However, to fully utilize the environment, it’s important for both teams to collaborate more than ever.

(*) Virtualization team in this article refers to the people handling the Server Virtualization environment.

How this article is built

The main part of this article is divided into six sections:
1. Provisioning Storage for datastore
2. Connectivity
3. Storage and Virtualization features and technologies
4. Backup & data protection
5. Monitoring and management
6. Additional products

Each section contains topics discussing technologies that can benefit from collaboration between the Storage and Virtualization teams.

Each topic provides a technical explanation and describes what the teams should collaborate on.

After all of the technologies are discussed, there is a summary of the main points of collaboration between the Storage team and the Virtualization team.

The article concludes with a section regarding the next level of collaboration.

Provisioning Storage for DatastoresData Mover and Virtual Data MoverA Data Mover (DM) is a physical device that moves Common Internet File System(CIFS) and Network File System (NFS) file services out of the storage system.A Virtual Data Mover (VDM) is a software feature that enables grouping of CIFS andNFS file systems and servers into virtual containers. Each VDM contains all the datanecessary to support one or more CIFS and/or NFS servers and their file systems.Utilizing NFS datastores in a vSphere and VNX environment requires one or more DMsor VDMs.In cases requiring higher separation between tenants of the vSphere environment onemight think about using VDM to completely segregate the data of different tenants thatuse the same (or other) vSphere environment. For example, due to compliancerequirements, segregate the data of Finance and Human Resources departments into adifferent VDM from other departments in a company.Another example is where two or more companies that use the same vSphereenvironment in a Public Cloud service might require that their data is fully segregatedfrom each other.VDM can move between physical DMs for load balancing to enhance performance. Itcan also move between DMs if one of the DMs is down due to failure or maintenance.Collaboration between Storage and Virtualization teamsWhile implementing NFS datastores in the environment, the Virtualization team should: alert the Storage team if there is a need for high segregation between differenttenants that use different datastores. In such cases, the Storage team might usedifferent DM or VDMs for different datastores emphasize if there is need for high availability. In such cases, the Storage teamshould use N 1 Data Movers to support failure of a Data Mover.RAID Group and Storage PoolsRAID Groups and Storage Pools are disk grouping mechanisms in the VNX storagesystem. RAID Group is a traditional approach to disk grouping and supports up to 16disks in a VNX environment. 
Storage Pools offers more flexible configuration in terms of2014 EMC Proven Professional Knowledge Sharing7

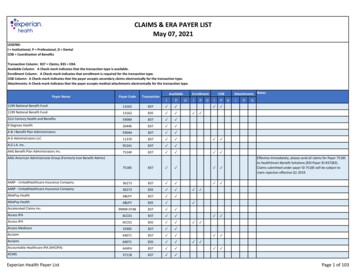

number of disks, space allocation, and additional advanced features. The advantage ofRAID Groups is the enhanced performance it provides compare to Storage Pools.Table 1 summarizes the main differences between RAID Groups and Storage Pools.Pool typeMaximum ragePooldependson VNXmodel7Table 1: Main differences between RAID Groups and Storage Pools - Table based on ( )Collaboration between Storage and Virtualization teamsThese days, the Storage team will usually use Storage Pools because it is more flexibleto use and has an enhanced set of features.If the Virtualization team knows about a new datastore that requires extremeperformance, they should mention it to the Storage team who can then decide if RAIDGroup is needed should the specific requirement arise.RAID TypesWith the VNX storage system, there are many RAID types that can be configured. Not allRAID types are supported by RAID Groups and Storage Pools.2014 EMC Proven Professional Knowledge Sharing8

Table 2 summarizes the RAID options for the VNX.

| RAID Type | How it works | Support by RAID Group | Support by Storage Pool | Main features |
|---|---|---|---|---|
| RAID 0 | Striping across all spindles | Yes | No | No data protection |
| RAID 1 | Mirroring | Yes | No | One mirror disk for each data disk: maximum protection but minimum disk utilization |
| RAID 1/0 | Mirroring and striping across all spindles | Yes | Yes | One mirror disk for each data disk: maximum protection but minimum disk utilization |
| RAID 3 | Striping and dedicated parity disk | Yes | No | Not widely used these days due to single parity disk limitation |
| RAID 5 | Striping with distributed parity across all disks | Yes | Yes | Good protection for most scenarios with efficient disk space usage |
| RAID 6 | Striping with distributed double parity across all disks | Yes | Yes | Additional parity reduces efficiency in disk usage and causes additional latency |

Table 2: RAID options for VNX
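The "disk utilization" trade-off in Table 2 can be made concrete with some simple arithmetic. The following is a minimal sketch using the general RAID rules (mirrored types keep half of the disks for data, single parity costs one disk, double parity costs two). The function names are hypothetical, and the sketch does not model VNX-specific limits such as supported group sizes or hot spares.

```python
# Disks' worth of capacity consumed by parity, per RAID type (general RAID rules).
PARITY_DISKS = {"0": 0, "3": 1, "5": 1, "6": 2}
# Mirrored types keep one mirror disk for each data disk.
MIRRORED = {"1", "1/0"}


def usable_disks(raid_type: str, disks: int) -> int:
    """Return how many disks' worth of capacity remain for data."""
    if disks < 1:
        raise ValueError("at least one disk is required")
    if raid_type in MIRRORED:
        if disks % 2 != 0:
            raise ValueError("mirrored RAID types need an even number of disks")
        return disks // 2
    if raid_type not in PARITY_DISKS:
        raise ValueError(f"unknown RAID type: {raid_type}")
    parity = PARITY_DISKS[raid_type]
    if disks <= parity:
        raise ValueError("not enough disks for this RAID type")
    return disks - parity


def usable_fraction(raid_type: str, disks: int) -> float:
    """Return the share of raw capacity that is usable for data."""
    return usable_disks(raid_type, disks) / disks


if __name__ == "__main__":
    for raid_type, disks in [("1/0", 8), ("5", 5), ("6", 8)]:
        share = usable_fraction(raid_type, disks)
        print(f"RAID {raid_type} with {disks} disks: {share:.0%} usable")
```

A calculation like this gives both teams a common basis when a requested protection level has to be weighed against the raw capacity it consumes.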

Collaboration between Storage and Virtualization teams

Usually, the Storage team sets the RAID type for a specific datastore according to the performance and protection needs requested by the Virtualization team.

The Virtualization team should be involved in that decision in the following situations:
• Extreme performance is needed for a specific application.
• Less performance is needed, e.g. the datastore only holds templates and ISO files.
• High protection is needed.
• No protection is needed for a specific datastore, e.g. the datastore is used for testing.
• A specific application has specific needs, e.g. implementing database logs on a RAID 1/0 Raw Device Mapping (RDM).

Fully Automated Storage Tiering for Virtual Pools (FAST VP)

FAST VP is a feature that can be configured for a Storage Pool when that pool contains two or more of the following disk types:
• Flash or Enterprise Flash Drives (EFD), also called the Extreme Performance tier - provides the lowest capacity with the highest performance
• Serial Attached SCSI (SAS) disks, also called the Performance tier - provides good capacity and good performance
• Near-Line Serial Attached SCSI (NL-SAS) disks, also called the Capacity tier - provides the highest capacity and the lowest performance

Logical Unit Numbers (LUNs) created within Storage Pools are distributed across one or more of the above disk types. For example, a specific LUN can be located partly on Flash disks and partly on NL-SAS disks.

The FAST VP mechanism keeps records of how often each slice of a LUN is used and then relocates its data according to usage. A slice that is used frequently will be stored on higher-performance, higher-cost disks, while a slice that is rarely used will be located on the lowest-performance, lowest-cost disks. Relocation of slices between tiers is not done in real time. Usually, it is performed as an off-hours scheduled task.
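The relocation idea described above can be illustrated with a small sketch. It is a deliberate simplification and not the actual FAST VP algorithm: it assumes that each slice has a single access counter and that each tier holds a fixed number of slices, and it simply fills the tiers from the fastest to the slowest with the busiest slices first. The function name and the input structures are hypothetical.

```python
from typing import Dict

# Tier names as used in this article, ordered from fastest to slowest.
TIERS = ["Extreme Performance", "Performance", "Capacity"]


def plan_relocation(access_counts: Dict[str, int],
                    tier_capacity: Dict[str, int]) -> Dict[str, str]:
    """Assign each slice to a tier, busiest slices first.

    access_counts maps a slice id to how often it was used (assumed input).
    tier_capacity maps a tier name to the number of slices it can hold.
    """
    total_capacity = sum(tier_capacity.get(tier, 0) for tier in TIERS)
    if total_capacity < len(access_counts):
        raise ValueError("the pool is too small for all slices")

    # Busiest slices first; ties are broken by slice id for a stable result.
    ranked = sorted(access_counts, key=lambda s: (-access_counts[s], s))

    placement: Dict[str, str] = {}
    start = 0
    for tier in TIERS:
        capacity = tier_capacity.get(tier, 0)
        for slice_id in ranked[start:start + capacity]:
            placement[slice_id] = tier
        start += capacity
    return placement


if __name__ == "__main__":
    counts = {"slice-a": 100, "slice-b": 5, "slice-c": 50, "slice-d": 0}
    capacity = {"Extreme Performance": 1, "Performance": 1, "Capacity": 2}
    for slice_id, tier in sorted(plan_relocation(counts, capacity).items()):
        print(slice_id, "->", tier)
```

In line with the text above, such a plan would be computed from accumulated statistics and applied in a scheduled window rather than on every I/O.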

Collaboration between Storage and Virtualization teams

It is important for the Virtualization team to know whether the FAST VP feature is on or off for each datastore, since Storage Distributed Resource Scheduling (SDRS) on the vSphere side does not work properly while FAST VP is turned on. Both mechanisms relocate data according to usage: FAST VP moves LUN slices between tiers, while SDRS moves VMs between datastores (see later in this article for recommendations on using FAST VP and SDRS together).

Several tiering policies can be set while FAST VP is active:
1. Auto-Tier: Maximize tier usage for a LUN automatically.
2. Highest Available Tier: Place data on the highest available tier for performance.
3. Lowest Available Tier: Place data on the lowest available tier when performance is not needed.

The simplest scenario is to set all vSphere LUNs to the Auto-Tier policy so that the storage system optimizes data placement between tiers according to usage.

If a specific LUN requires high performance, it might be best for the Virtualization team to ask the Storage team to set that LUN to the Highest Available Tier policy. In such a scenario, it might be wise to migrate Virtual Machine Disks (VMDKs) from LUN to LUN (Storage vMotion) so that each LUN holds data with similar performance characteristics (for example, do not put templates and ISO files in a LUN whose policy is set to Highest Available Tier). A scenario in which the Virtualization team asks for the Lowest Available Tier is a LUN populated with ISO files and templates.

FAST Cache

FAST Cache is an optimization technology that improves the performance of applications connected to the storage system. It uses Flash disks to store the most frequently used data within the system.
FAST Cache in VNX environments operates at a 64 KB extent size. A block within an extent that is accessed multiple times within a specific interval will be promoted to the FAST Cache area (meaning it will be located on Flash disks for access). The storage system ensures that as block use becomes less frequent, the block is demoted from the FAST Cache area.

FAST Cache improves performance especially for applications that use a specific set of data many times. This is in contrast to applications such as backup systems, which read all data sequentially.
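The promotion and demotion behavior described above can be sketched as follows. Only the 64 KB extent size comes from this article; the promotion threshold of three accesses, the 60-second interval, and the use of least-recently-used eviction as the demotion rule are assumptions chosen for illustration, and the class name is hypothetical. This is a toy model, not the VNX implementation.

```python
from collections import OrderedDict, defaultdict, deque

EXTENT_SIZE = 64 * 1024  # 64 KB extent size, as stated in the article


class FastCacheSketch:
    """Toy model of promotion into, and demotion out of, a flash cache."""

    def __init__(self, capacity_extents: int,
                 promote_after: int = 3,        # assumed threshold
                 window_seconds: float = 60.0):  # assumed interval
        self.capacity_extents = capacity_extents
        self.promote_after = promote_after
        self.window_seconds = window_seconds
        self.recent = defaultdict(deque)  # extent -> recent access times
        self.cache = OrderedDict()        # cached extents in LRU order

    def access(self, byte_offset: int, now: float) -> bool:
        """Record one access; return True if it was served from the cache."""
        extent = byte_offset // EXTENT_SIZE
        if extent in self.cache:
            self.cache.move_to_end(extent)  # mark as most recently used
            return True

        hits = self.recent[extent]
        hits.append(now)
        # Forget accesses that fall outside the observation interval.
        while hits and now - hits[0] > self.window_seconds:
            hits.popleft()

        if len(hits) >= self.promote_after:
            # Promote the extent; later accesses will be cache hits.
            self.cache[extent] = None
            del self.recent[extent]
            if len(self.cache) > self.capacity_extents:
                # Demote the least recently used extent (assumed policy).
                self.cache.popitem(last=False)
        return False
```

In this model, a workload that touches the same extent repeatedly is promoted quickly, while a sequential scan that reads each extent once never reaches the threshold, which mirrors the contrast drawn above between frequently reused data and backup-style sequential reads.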

Collaboration between Storage and Virtualization teams

The Virtualization team should be aware of whether FAST Cache is enabled, as it should enhance performance. Beyond this, there is nothing for the teams to collaborate on regarding FAST Cache.

Storage for files such as templates and ISO files on datastores

The Virtualization team usually needs to store ISO files, VM templates, and similar files on datastores. They can do so on any datastore that exists on their system, or they can dedicate a specific datastore or datastores to this purpose.

Collaboration between Storage and Virtualization teams

In cases where the Virtualization team uses any datastore for their ISO files and templates, the Storage team does not need to do anything (but should keep in mind that such usage might waste expensive high-performance disks, especially if a mechanism such as FAST VP is not in use).

If the Virtualization team uses a dedicated datastore or datastores for such files, it should inform the Storage team, and the Storage team should then:
• Use inexpensive disks for this datastore.
• Consider the required protection level for this datastore (RAID type).

Connectivity

Multipathing in Storage Area Network (SAN) environment

Multipathing is a method that enables the use of more than one physical path to transfer data between the vSphere environment and the storage environment.

SAN configurations usually have two or more Host Bus Adapters (HBAs) available per ESXi host. There are also two or more Fibre Channel (FC) connections on the storage system.

VNX storage is an Active-Passive storage system; for each specific LUN there is only one Storage Processor (SP) that owns the LUN. Due to this, the default failover mode in the
