Transcription

Dynamo: Amazon’s Highly Available Key-value StoreGiuseppe DeCandia, Deniz Hastorun, Madan Jampani, Gunavardhan Kakulapati,Avinash Lakshman, Alex Pilchin, Swaminathan Sivasubramanian, Peter Vosshalland Werner VogelsAmazon.comOne of the lessons our organization has learned from operatingAmazon’s platform is that the reliability and scalability of asystem is dependent on how its application state is managed.Amazon uses a highly decentralized, loosely coupled, serviceoriented architecture consisting of hundreds of services. In thisenvironment there is a particular need for storage technologiesthat are always available. For example, customers should be ableto view and add items to their shopping cart even if disks arefailing, network routes are flapping, or data centers are beingdestroyed by tornados. Therefore, the service responsible formanaging shopping carts requires that it can always write to andread from its data store, and that its data needs to be availableacross multiple data centers.ABSTRACTReliability at massive scale is one of the biggest challenges weface at Amazon.com, one of the largest e-commerce operations inthe world; even the slightest outage has significant financialconsequences and impacts customer trust. The Amazon.complatform, which provides services for many web sites worldwide,is implemented on top of an infrastructure of tens of thousands ofservers and network components located in many datacentersaround the world. At this scale, small and large components failcontinuously and the way persistent state is managed in the faceof these failures drives the reliability and scalability of thesoftware systems.This paper presents the design and implementation of Dynamo, ahighly available key-value storage system that some of Amazon’score services use to provide an “always-on” experience. Toachieve this level of availability, Dynamo sacrifices consistencyunder certain failure scenarios. It makes extensive use of objectversioning and application-assisted conflict resolution in a mannerthat provides a novel interface for developers to use.Dealing with failures in an infrastructure comprised of millions ofcomponents is our standard mode of operation; there are always asmall but significant number of server and network componentsthat are failing at any given time. As such Amazon’s softwaresystems need to be constructed in a manner that treats failurehandling as the normal case without impacting availability orperformance.Categories and Subject DescriptorsTo meet the reliability and scaling needs, Amazon has developeda number of storage technologies, of which the Amazon SimpleStorage Service (also available outside of Amazon and known asAmazon S3), is probably the best known. This paper presents thedesign and implementation of Dynamo, another highly availableand scalable distributed data store built for Amazon’s platform.Dynamo is used to manage the state of services that have veryhigh reliability requirements and need tight control over thetradeoffs between availability, consistency, cost-effectiveness andperformance. Amazon’s platform has a very diverse set ofapplications with different storage requirements. A select set ofapplications requires a storage technology that is flexible enoughto let application designers configure their data store appropriatelybased on these tradeoffs to achieve high availability andguaranteed performance in the most cost effective manner.D.4.2 [Operating Systems]: Storage Management; D.4.5[Operating Systems]: Reliability; D.4.2 [Operating Systems]:Performance;General TermsAlgorithms, Management, Measurement, Performance, Design,Reliability.1. INTRODUCTIONAmazon runs a world-wide e-commerce platform that serves tensof millions customers at peak times using tens of thousands ofservers located in many data centers around the world. There arestrict operational requirements on Amazon’s platform in terms ofperformance, reliability and efficiency, and to support continuousgrowth the platform needs to be highly scalable. Reliability is oneof the most important requirements because even the slightestoutage has significant financial consequences and impactscustomer trust. In addition, to support continuous growth, theplatform needs to be highly scalable.There are many services on Amazon’s platform that only needprimary-key access to a data store. For many services, such asthose that provide best seller lists, shopping carts, customerpreferences, session management, sales rank, and product catalog,the common pattern of using a relational database would lead toinefficiencies and limit scale and availability. Dynamo provides asimple primary-key only interface to meet the requirements ofthese applications.Permission to make digital or hard copies of all or part of this work forpersonal or classroom use is granted without fee provided that copies arenot made or distributed for profit or commercial advantage and thatcopies bear this notice and the full citation on the first page. To copyotherwise, or republish, to post on servers or to redistribute to lists,requires prior specific permission and/or a fee.SOSP’07, October 14–17, 2007, Stevenson, Washington, USA.Copyright 2007 ACM 978-1-59593-591-5/07/0010. 5.00.Dynamo uses a synthesis of well known techniques to achievescalability and availability: Data is partitioned and replicatedusing consistent hashing [10], and consistency is facilitated byobject versioning [12]. The consistency among replicas duringupdates is maintained by a quorum-like technique and adecentralized replica synchronization protocol. Dynamo employs205195

This paper describes Dynamo, a highly available data storagetechnology that addresses the needs of these important classes ofservices. Dynamo has a simple key/value interface, is highlyavailable with a clearly defined consistency window, is efficientin its resource usage, and has a simple scale out scheme to addressgrowth in data set size or request rates. Each service that usesDynamo runs its own Dynamo instances.a gossip based distributed failure detection and membershipprotocol. Dynamo is a completely decentralized system withminimal need for manual administration. Storage nodes can beadded and removed from Dynamo without requiring any manualpartitioning or redistribution.In the past year, Dynamo has been the underlying storagetechnology for a number of the core services in Amazon’s ecommerce platform. It was able to scale to extreme peak loadsefficiently without any downtime during the busy holidayshopping season. For example, the service that maintainsshopping cart (Shopping Cart Service) served tens of millionsrequests that resulted in well over 3 million checkouts in a singleday and the service that manages session state handled hundredsof thousands of concurrently active sessions.2.1System Assumptions and RequirementsThe storage system for this class of services has the followingrequirements:Query Model: simple read and write operations to a data item thatis uniquely identified by a key. State is stored as binary objects(i.e., blobs) identified by unique keys. No operations spanmultiple data items and there is no need for relational schema.This requirement is based on the observation that a significantportion of Amazon’s services can work with this simple querymodel and do not need any relational schema. Dynamo targetsapplications that need to store objects that are relatively small(usually less than 1 MB).The main contribution of this work for the research community isthe evaluation of how different techniques can be combined toprovide a single highly-available system. It demonstrates that aneventually-consistent storage system can be used in productionwith demanding applications. It also provides insight into thetuning of these techniques to meet the requirements of productionsystems with very strict performance demands.ACID Properties: ACID (Atomicity, Consistency, Isolation,Durability) is a set of properties that guarantee that databasetransactions are processed reliably. In the context of databases, asingle logical operation on the data is called a transaction.Experience at Amazon has shown that data stores that provideACID guarantees tend to have poor availability. This has beenwidely acknowledged by both the industry and academia [5].Dynamo targets applications that operate with weaker consistency(the “C” in ACID) if this results in high availability. Dynamodoes not provide any isolation guarantees and permits only singlekey updates.The paper is structured as follows. Section 2 presents thebackground and Section 3 presents the related work. Section 4presents the system design and Section 5 describes theimplementation. Section 6 details the experiences and insightsgained by running Dynamo in production and Section 7 concludesthe paper. There are a number of places in this paper whereadditional information may have been appropriate but whereprotecting Amazon’s business interests require us to reduce somelevel of detail. For this reason, the intra- and inter-datacenterlatencies in section 6, the absolute request rates in section 6.2 andoutage lengths and workloads in section 6.3 are provided throughaggregate measures instead of absolute details.Efficiency: The system needs to function on a commodityhardware infrastructure. In Amazon’s platform, services havestringent latency requirements which are in general measured atthe 99.9th percentile of the distribution. Given that state accessplays a crucial role in service operation the storage system mustbe capable of meeting such stringent SLAs (see Section 2.2below). Services must be able to configure Dynamo such that theyconsistently achieve their latency and throughput requirements.The tradeoffs are in performance, cost efficiency, availability, anddurability guarantees.2. BACKGROUNDAmazon’s e-commerce platform is composed of hundreds ofservices that work in concert to deliver functionality ranging fromrecommendations to order fulfillment to fraud detection. Eachservice is exposed through a well defined interface and isaccessible over the network. These services are hosted in aninfrastructure that consists of tens of thousands of servers locatedacross many data centers world-wide. Some of these services arestateless (i.e., services which aggregate responses from otherservices) and some are stateful (i.e., a service that generates itsresponse by executing business logic on its state stored inpersistent store).Other Assumptions: Dynamo is used only by Amazon’s internalservices. Its operation environment is assumed to be non-hostileand there are no security related requirements such asauthentication and authorization. Moreover, since each serviceuses its distinct instance of Dynamo, its initial design targets ascale of up to hundreds of storage hosts. We will discuss thescalability limitations of Dynamo and possible scalability relatedextensions in later sections.Traditionally production systems store their state in relationaldatabases. For many of the more common usage patterns of statepersistence, however, a relational database is a solution that is farfrom ideal. Most of these services only store and retrieve data byprimary key and do not require the complex querying andmanagement functionality offered by an RDBMS. This excessfunctionality requires expensive hardware and highly skilledpersonnel for its operation, making it a very inefficient solution.In addition, the available replication technologies are limited andtypically choose consistency over availability. Although manyadvances have been made in the recent years, it is still not easy toscale-out databases or use smart partitioning schemes for loadbalancing.2.2Service Level Agreements (SLA)To guarantee that the application can deliver its functionality in abounded time, each and every dependency in the platform needsto deliver its functionality with even tighter bounds. Clients andservices engage in a Service Level Agreement (SLA), a formallynegotiated contract where a client and a service agree on severalsystem-related characteristics, which most prominently includethe client’s expected request rate distribution for a particular APIand the expected service latency under those conditions. Anexample of a simple SLA is a service guaranteeing that it will206196

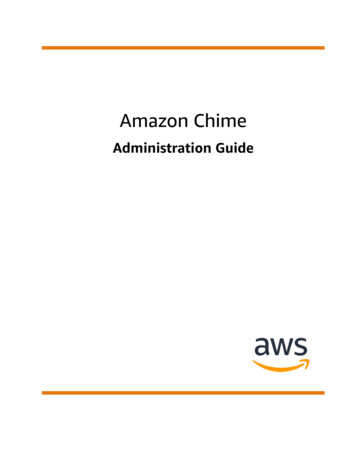

production systems have shown that this approach provides abetter overall experience compared to those systems that meetSLAs defined based on the mean or median.In this paper there are many references to this 99.9th percentile ofdistributions, which reflects Amazon engineers’ relentless focuson performance from the perspective of the customers’experience. Many papers report on averages, so these are includedwhere it makes sense for comparison purposes. Nevertheless,Amazon’s engineering and optimization efforts are not focused onaverages. Several techniques, such as the load balanced selectionof write coordinators, are purely targeted at controllingperformance at the 99.9th percentile.Storage systems often play an important role in establishing aservice’s SLA, especially if the business logic is relativelylightweight, as is the case for many Amazon services. Statemanagement then becomes the main component of a service’sSLA. One of the main design considerations for Dynamo is togive services control over their system properties, such asdurability and consistency, and to let services make their owntradeoffs between functionality, performance and costeffectiveness.2.3Figure 1: Service-oriented architecture of Amazon’splatformDesign ConsiderationsData replication algorithms used in commercial systemstraditionally perform synchronous replica coordination in order toprovide a strongly consistent data access interface. To achieve thislevel of consistency, these algorithms are forced to tradeoff theavailability of the data under certain failure scenarios. Forinstance, rather than dealing with the uncertainty of thecorrectness of an answer, the data is made unavailable until it isabsolutely certain that it is correct. From the very early replicateddatabase works, it is well known that when dealing with thepossibility of network failures, strong consistency and high dataavailability cannot be achieved simultaneously [2, 11]. As suchsystems and applications need to be aware which properties canbe achieved under which conditions.provide a response within 300ms for 99.9% of its requests for apeak client load of 500 requests per second.In Amazon’s decentralized service oriented infrastructure, SLAsplay an important role. For example a page request to one of thee-commerce sites typically requires the rendering engine toconstruct its response by sending requests to over 150 services.These services often have multiple dependencies, whichfrequently are other services, and as such it is not uncommon forthe call graph of an application to have more than one level. Toensure that the page rendering engine can maintain a clear boundon page delivery each service within the call chain must obey itsperformance contract.Figure 1 shows an abstract view of the architecture of Amazon’splatform, where dynamic web content is generated by pagerendering components which in turn query many other services. Aservice can use different data stores to manage its state and thesedata stores are only accessible within its service boundaries. Someservices act as aggregators by using several other services toproduce a composite response. Typically, the aggregator servicesare stateless, although they use extensive caching.For systems prone to server and network failures, availability canbe increased by using optimistic replication techniques, wherechanges are allowed to propagate to replicas in the background,and concurrent, disconnected work is tolerated. The challengewith this approach is that it can lead to conflicting changes whichmust be detected and resolved. This process of conflict resolutionintroduces two problems: when to resolve them and who resolvesthem. Dynamo is designed to be an eventually consistent datastore; that is all updates reach all replicas eventually.A common approach in the industry for forming a performanceoriented SLA is to describe it using average, median and expectedvariance. At Amazon we have found that these metrics are notgood enough if the goal is to build a system where all customershave a good experience, rather than just the majority. Forexample if extensive personalization techniques are used thencustomers with longer histories require more processing whichimpacts performance at the high-end of the distribution. An SLAstated in terms of mean or median response times will not addressthe performance of this important customer segment. To addressthis issue, at Amazon, SLAs are expressed and measured at the99.9th percentile of the distribution. The choice for 99.9% over aneven higher percentile has been made based on a cost-benefitanalysis which demonstrated a significant increase in cost toimprove performance that much. Experiences with Amazon’sAn important design consideration is to decide when to performthe process of resolving update conflicts, i.e., whether conflictsshould be resolved during reads or writes. Many traditional datastores execute conflict resolution during writes and keep the readcomplexity simple [7]. In such systems, writes may be rejected ifthe data store cannot reach all (or a majority of) the replicas at agiven time. On the other hand, Dynamo targets the design spaceof an “always writeable” data store (i.e., a data store that is highlyavailable for writes). For a number of Amazon services, rejectingcustomer updates could result in a poor customer experience. Forinstance, the shopping cart service must allow customers to addand remove items from their shopping cart even amidst networkand server failures. This requirement forces us to push thecomplexity of conflict resolution to the reads in order to ensurethat writes are never rejected.207197

Various storage systems, such as Oceanstore [9] and PAST [17]were built on top of these routing overlays. Oceanstore provides aglobal, transactional, persistent storage service that supportsserialized updates on widely replicated data. To allow forconcurrent updates while avoiding many of the problems inherentwith wide-area locking, it uses an update model based on conflictresolution. Conflict resolution was introduced in [21] to reducethe number of transaction aborts. Oceanstore resolves conflicts byprocessing a series of updates, choosing a total order among them,and then applying them atomically in that order. It is built for anenvironment where the data is replicated on an untrustedinfrastructure. By comparison, PAST provides a simpleabstraction layer on top of Pastry for persistent and immutableobjects. It assumes that the application can build the necessarystorage semantics (such as mutable files) on top of it.The next design choice is who performs the process of conflictresolution. This can be done by the data store or the application. Ifconflict resolution is done by the data store, its choices are ratherlimited. In such cases, the data store can only use simple policies,such as “last write wins” [22], to resolve conflicting updates. Onthe other hand, since the application is aware of the data schema itcan decide on the conflict resolution method that is best suited forits client’s experience. For instance, the application that maintainscustomer shopping carts can choose to “merge” the conflictingversions and return a single unified shopping cart. Despite thisflexibility, some application developers may not want to writetheir own conflict resolution mechanisms and choose to push itdown to the data store, which in turn chooses a simple policy suchas “last write wins”.Other key principles embraced in the design are:3.2Incremental scalability: Dynamo should be able to scale out onestorage host (henceforth, referred to as “node”) at a time, withminimal impact on both operators of the system and the systemitself.Symmetry: Every node in Dynamo should have the same set ofresponsibilities as its peers; there should be no distinguished nodeor nodes that take special roles or extra set of responsibilities. Inour experience, symmetry simplifies the process of systemprovisioning and maintenance.Decentralization: An extension of symmetry, the design shouldfavor decentralized peer-to-peer techniques over centralizedcontrol. In the past, centralized control has resulted in outages andthe goal is to avoid it as much as possible. This leads to a simpler,more scalable, and more available system.Heterogeneity: The system needs to be able to exploitheterogeneity in the infrastructure it runs on. e.g. the workdistribution must be proportional to the capabilities of theindividual servers. This is essential in adding new nodes withhigher capacity without having to upgrade all hosts at once.Among these systems, Bayou, Coda and Ficus allow disconnectedoperations and are resilient to issues such as network partitionsand outages. These systems differ on their conflict resolutionprocedures. For instance, Coda and Ficus perform system levelconflict resolution and Bayou allows application level resolution.All of them, however, guarantee eventual consistency. Similar tothese systems, Dynamo allows read and write operations tocontinue even during network partitions and resolves updatedconflicts using different conflict resolution mechanisms.Distributed block storage systems like FAB [18] split large sizeobjects into smaller blocks and stores each block in a highlyavailable manner. In comparison to these systems, a key-valuestore is more suitable in this case because: (a) it is intended tostore relatively small objects (size 1M) and (b) key-value storesare easier to configure on a per-application basis. Antiquity is awide-area distributed storage system designed to handle multipleserver failures [23]. It uses a secure log to preserve data integrity,replicates each log on multiple servers for durability, and usesByzantine fault tolerance protocols to ensure data consistency. Incontrast to Antiquity, Dynamo does not focus on the problem ofdata integrity and security and is built for a trusted environment.Bigtable is a distributed storage system for managing structureddata. It maintains a sparse, multi-dimensional sorted map andallows applications to access their data using multiple attributes[2]. Compared to Bigtable, Dynamo targets applications thatrequire only key/value access with primary focus on highavailability where updates are not rejected even in the wake ofnetwork partitions or server failures.3. RELATED WORK3.1 Peer to Peer SystemsThere are several peer-to-peer (P2P) systems that have looked atthe problem of data storage and distribution. The first generationof P2P systems, such as Freenet and Gnutella1, werepredominantly used as file sharing systems. These were examplesof unstructured P2P networks where the overlay links betweenpeers were established arbitrarily. In these networks, a searchquery is usually flooded through the network to find as manypeers as possible that share the data. P2P systems evolved to thenext generation into what is widely known as structured P2Pnetworks. These networks employ a globally consistent protocolto ensure that any node can efficiently route a search query tosome peer that has the desired data. Systems like Pastry [16] andChord [20] use routing mechanisms to ensure that queries can beanswered within a bounded number of hops. To reduce theadditional latency introduced by multi-hop routing, some P2Psystems (e.g., [14]) employ O(1) routing where each peermaintains enough routing information locally so that it can routerequests (to access a data item) to the appropriate peer within aconstant number of hops.1Distributed File Systems and DatabasesDistributing data for performance, availability and durability hasbeen widely studied in the file system and database systemscommunity. Compared to P2P storage systems that only supportflat namespaces, distributed file systems typically supporthierarchical namespaces. Systems like Ficus [15] and Coda [19]replicate files for high availability at the expense of consistency.Update conflicts are typically managed using specialized conflictresolution procedures. The Farsite system [1] is a distributed filesystem that does not use any centralized server like NFS. Farsiteachieves high availability and scalability using replication. TheGoogle File System [6] is another distributed file system built forhosting the state of Google’s internal applications. GFS uses asimple design with a single master server for hosting the entiremetadata and where the data is split into chunks and stored inchunkservers. Bayou is a distributed relational database systemthat allows disconnected operations and provides eventual dataconsistency [21].http://freenetproject.org/, http://www.gnutella.org208198

Table 1: Summary of techniques used in Dynamo andtheir advantages.Key KAGTechniqueAdvantagePartitioningConsistent HashingIncrementalScalabilityHigh Availabilityfor writesVector clocks withreconciliation duringreadsVersion size isdecoupled fromupdate rates.Handling temporaryfailuresSloppy Quorum andhinted handoffProvides highavailability anddurability guaranteewhen some of thereplicas are notavailable.Recovering frompermanent failuresAnti-entropy usingMerkle treesSynchronizesdivergent replicas inthe background.Membership andfailure detectionGossip-basedmembership protocoland failure detection.Preserves symmetryand avoids having acentralized registryfor storingmembership andnode livenessinformation.BFCENodes B, Cand D storekeys inrange (A,B)includingK.DFigure 2: Partitioning and replication of keys in Dynamoring.Traditional replicated relational database systems focus on theproblem of guaranteeing strong consistency to replicated data.Although strong consistency provides the application writer aconvenient programming model, these systems are limited inscalability and availability [7]. These systems are not capable ofhandling network partitions because they typically provide strongconsistency guarantees.3.3ProblemDiscussionTable 1 presents a summary of the list of techniques Dynamo usesand their respective advantages.Dynamo differs from the aforementioned decentralized storagesystems in terms of its target requirements. First, Dynamo istargeted mainly at applications that need an “always writeable”data store where no updates are rejected due to failures orconcurrent writes. This is a crucial requirement for many Amazonapplications. Second, as noted earlier, Dynamo is built for aninfrastructure within a single administrative domain where allnodes are assumed to be trusted. Third, applications that useDynamo do not require support for hierarchical namespaces (anorm in many file systems) or complex relational schema(supported by traditional databases). Fourth, Dynamo is built forlatency sensitive applications that require at least 99.9% of readand write operations to be performed within a few hundredmilliseconds. To meet these stringent latency requirements, it wasimperative for us to avoid routing requests through multiple nodes(which is the typical design adopted by several distributed hashtable systems such as Chord and Pastry). This is because multihop routing increases variability in response times, therebyincreasing the latency at higher percentiles. Dynamo can becharacterized as a zero-hop DHT, where each node maintainsenough routing information locally to route a request to theappropriate node directly.4.1System InterfaceDynamo stores objects associated with a key through a simpleinterface; it exposes two operations: get() and put(). The get(key)operation locates the object replicas associated with the key in thestorage system and returns a single object or a list of objects withconflicting versions along with a context. The put(key, context,object) operation determines where the replicas of the objectshould be placed based on the associated key, and writes thereplicas to disk. The context encodes system metadata about theobject that is opaque to the caller and includes information such asthe version of the object. The context information is stored alongwith the object so that the system can verify the validity of thecontext object supplied in the put request.Dynamo treats both the key and the object supplied by the calleras an opaque array of bytes. It applies a MD5 hash on the key togenerate a 128-bit identifier, which is used to determine thestorage nodes that are responsible for serving the key.4.2Partitioning AlgorithmOne of the key design requirements for Dynamo is that it mustscale incrementally. This requires a mechanism to dynamicallypartition the data over the set of nodes (i.e., storage hosts) in thesystem. Dynamo’s partitioning scheme relies on consistenthashing to distribute the load across multiple storage hosts. Inconsistent hashing [10], the output range of a hash function istreated as a fixed circular space or “ring” (i.e. the largest hashvalue wraps around to the smallest hash value). Each node in thesystem is assigned a random value within this space whichrepresents its “position” on the ring. Each data item identified bya key is assigned to a node by hashing the data item’s key to yieldits position on the ring, and then walking the ring clockwise tofind the first node with a position larger than the item’s position.4. SYSTEM ARCHITECTUREThe architecture of a storage system that needs to operate in aproduction setting is complex. In addition to the actual datapersistence component, the system needs to have scalable androbust solutions for load balancing, membership and failuredetection, failure recovery, replica synchronization, overloadhandling, state transfer, concurrency and job scheduling, requestmarshalling, request routing, system monitoring and alarming,and configuration management. Describing the details of each ofthe solutions

continuously and the way persistent state is managed in the face of these failures drives the reliability and scalability of the software systems. This paper presents the design and implementation of Dynamo, a highly available key-value storage system that some of Amazon's core services use to provide an "always-on" experience. To